Preparing for assessments on information systems requires a solid grasp of fundamental principles and techniques. This section covers the core material used to evaluate proficiency in managing structured data, giving an overview of the areas most commonly encountered in academic and professional settings.

Mastering these core ideas deepens your understanding and sharpens your ability to work through complex scenarios. Whether a question concerns data organization, querying methods, or security protocols, a strong foundation improves your chances of success and lets you approach each challenge with clarity.

Database Concepts Exam Questions and Answers

Success in any assessment on information management depends on a solid understanding of fundamental principles. This section outlines the topics most often tested in academic and professional settings; familiarity with their common challenges and solutions lets you approach these tests with greater confidence.

Key Topics to Master

- Data organization and storage techniques

- Methods for retrieving and manipulating information

- Approaches for maintaining data consistency and accuracy

- Understanding data relationships and their implications

- Security protocols for safeguarding information

Commonly Encountered Scenarios

- Designing efficient systems for data management

- Handling complex retrieval tasks using advanced queries

- Implementing strategies for ensuring data integrity

- Balancing performance with security concerns

By reviewing these topics thoroughly, you can strengthen your ability to apply these principles in real-world situations. Practice solving typical problems and exploring various techniques to improve both speed and accuracy when addressing practical challenges.

Overview of Key Database Topics

To excel in any assessment focused on information systems, it’s crucial to understand the fundamental elements that govern how data is structured, managed, and utilized. This section covers essential areas of study that are frequently tested in academic or professional settings. A solid foundation in these topics not only improves comprehension but also equips you with the skills necessary to apply theoretical knowledge in practical scenarios.

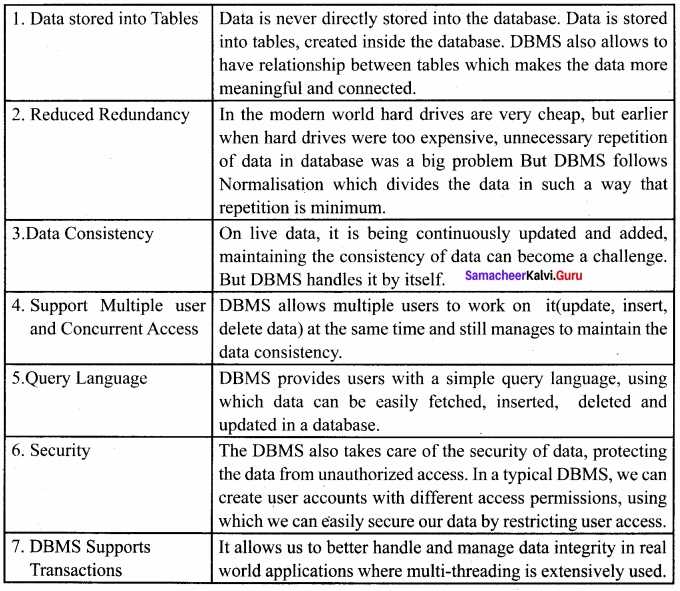

| Topic | Description |
|---|---|
| Data Models | The structures used to organize and represent information, including relational, hierarchical, and object-oriented models. |
| Normalization | The process of organizing data to reduce redundancy and improve data integrity. |
| Querying Techniques | Methods for retrieving and manipulating data with statements such as SELECT, INSERT, UPDATE, and DELETE, combined with clauses such as JOIN and WHERE. |
| Security Measures | Protocols designed to protect data from unauthorized access, corruption, or theft. |
| Transactions | Grouping operations into units that complete entirely or not at all, keeping data consistent, reliable, and durable. |

Mastering these core areas helps not only in assessments but also in implementing and maintaining effective systems in real-world applications. A thorough understanding of each topic provides the confidence needed to tackle complex problems.

Understanding Database Structures and Models

Grasping the different ways in which information can be organized and represented is crucial for building efficient systems. Various frameworks exist to structure data, each offering unique advantages depending on the nature of the information and the intended use. Understanding these frameworks is vital for both designing and interacting with complex data storage solutions.

Types of Data Models

Different structures are employed to organize information efficiently. The most common models include:

- Relational Model: Data is stored in tables, where relationships between entries are established using keys.

- Hierarchical Model: Information is organized in a tree-like structure, with records having parent-child relationships.

- Network Model: Similar to the hierarchical model but allows more complex relationships, where a record can have multiple parent nodes.

- Object-Oriented Model: Data is represented as objects, similar to objects in programming languages, with attributes and methods.

Choosing the Right Model

Selecting the right organizational framework depends on the type of information being handled, the relationships between data, and performance requirements. Each structure has strengths that make it suited for specific types of data processing tasks, and understanding these distinctions is key to effective system design.

SQL Query Fundamentals for Exams

Mastering the art of querying information is essential for effectively retrieving and manipulating data. Understanding the core principles behind forming efficient queries is key to both solving problems and optimizing performance. Whether you are dealing with simple data extraction or complex retrieval scenarios, a solid foundation in querying techniques is critical for success.

Basic SQL Syntax

The foundation of any querying task lies in understanding the basic structure of SQL commands. The most common operations include:

- SELECT: Used to retrieve data from one or more tables.

- INSERT: Adds new records into a table.

- UPDATE: Modifies existing records.

- DELETE: Removes records from a table.
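A minimal sketch of the four statements, run through Python's built-in `sqlite3` module against an in-memory SQLite database. The `products` table and its data are hypothetical; the `?` placeholders bind values separately from the SQL text.

```python
import sqlite3

# In-memory database with a hypothetical products table.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE products (id INTEGER PRIMARY KEY, name TEXT, price REAL)")

# INSERT: add new records
conn.executemany(
    "INSERT INTO products (name, price) VALUES (?, ?)",
    [("pen", 1.50), ("notebook", 3.25), ("stapler", 7.00)],
)

# UPDATE: modify records that match a condition
conn.execute("UPDATE products SET price = price * 2 WHERE name = ?", ("pen",))

# DELETE: remove records that match a condition
conn.execute("DELETE FROM products WHERE price > ?", (5,))

# SELECT: retrieve what is left
rows = conn.execute("SELECT name, price FROM products ORDER BY name").fetchall()
conn.commit()
print(rows)  # [('notebook', 3.25), ('pen', 3.0)]
```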

Advanced Querying Techniques

Beyond the basics, more advanced techniques are necessary to handle complex tasks efficiently. Some of these techniques include:

- Joins: Combining rows from two or more tables based on related columns.

- Subqueries: Embedding one query within another to retrieve more specific results.

- Aggregations: Using functions like SUM, AVG, COUNT to perform calculations on data.

- Filtering: Applying conditions in WHERE clauses (on individual rows) and HAVING clauses (on grouped results), often with operators such as BETWEEN, to narrow results.
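The sketch below combines filtering and aggregation in one query, again using Python's `sqlite3` module with a hypothetical `orders` table. It shows the order in which the clauses take effect: WHERE filters individual rows, GROUP BY forms groups, and HAVING filters those groups.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
# Hypothetical orders table.
conn.execute("CREATE TABLE orders (id INTEGER PRIMARY KEY, customer TEXT, amount REAL)")
conn.executemany(
    "INSERT INTO orders (customer, amount) VALUES (?, ?)",
    [("ana", 20), ("ana", 35), ("ben", 10), ("ben", 80), ("cai", 15)],
)

# WHERE ... BETWEEN keeps rows with 10 <= amount <= 50 (inclusive on both ends).
# GROUP BY with COUNT/SUM/AVG aggregates the surviving rows per customer.
# HAVING then discards groups with fewer than two qualifying orders.
query = """
    SELECT customer, COUNT(*) AS n, SUM(amount) AS total, AVG(amount) AS avg_amount
    FROM orders
    WHERE amount BETWEEN 10 AND 50
    GROUP BY customer
    HAVING COUNT(*) >= 2
    ORDER BY customer
"""
result = conn.execute(query).fetchall()
print(result)  # [('ana', 2, 55.0, 27.5)]
```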

By becoming proficient in these fundamental operations, you will be able to handle a wide range of tasks with confidence, ensuring that you can extract, modify, and analyze information as needed in various scenarios.

Normalization Techniques and Examples

Organizing information efficiently is crucial for minimizing redundancy and improving consistency. One of the most important practices for achieving this is applying specific techniques that structure data in a way that ensures reliability and reduces errors. These techniques help to break down complex data into smaller, manageable pieces, making it easier to maintain and query.

The central technique is normalization. It breaks large tables that repeat the same facts into smaller tables linked by keys, so that each fact is stored in one place. This protects data integrity and avoids update anomalies, where changing one fact means editing many rows and missing one leaves the data inconsistent. The process proceeds through several stages, called normal forms, each addressing a different kind of redundancy.

Common stages of this technique include:

- First Normal Form (1NF): Requires every field to hold a single, atomic value, eliminating repeating groups and multi-valued columns.

- Second Normal Form (2NF): Builds on 1NF by removing partial dependencies, so every non-key attribute depends on the whole primary key rather than on part of a composite key.

- Third Normal Form (3NF): Builds on 2NF by removing transitive dependencies, so non-key attributes depend only on the key and not on other non-key attributes.
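As an illustration of the third stage, the sketch below (Python's `sqlite3` module, hypothetical tables) starts from a flat table in which a customer's city depends on the customer rather than on the order, a transitive dependency. Splitting it into `customers` and `orders` stores each city once, and joining the two tables reproduces the original rows.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
# Hypothetical flat table: customer_city depends on customer_id, not on the key
# order_id (a transitive dependency), so the city repeats on every order.
conn.execute("""CREATE TABLE orders_flat (
    order_id INTEGER PRIMARY KEY, customer_id INTEGER,
    customer_city TEXT, amount REAL)""")
conn.executemany("INSERT INTO orders_flat VALUES (?, ?, ?, ?)", [
    (1, 100, "Lisbon", 20.0),
    (2, 100, "Lisbon", 35.0),
    (3, 200, "Oslo", 10.0),
])

# Decompose toward 3NF: customer facts move to their own table, keyed by customer_id.
conn.executescript("""
    CREATE TABLE customers (customer_id INTEGER PRIMARY KEY, city TEXT);
    CREATE TABLE orders (
        order_id    INTEGER PRIMARY KEY,
        customer_id INTEGER REFERENCES customers(customer_id),
        amount      REAL);
    INSERT INTO customers SELECT DISTINCT customer_id, customer_city FROM orders_flat;
    INSERT INTO orders SELECT order_id, customer_id, amount FROM orders_flat;
""")

# Joining the pieces reproduces the original rows, so no information was lost.
rejoined = conn.execute("""
    SELECT o.order_id, c.customer_id, c.city, o.amount
    FROM orders o JOIN customers c ON c.customer_id = o.customer_id
    ORDER BY o.order_id""").fetchall()
original = conn.execute("SELECT * FROM orders_flat ORDER BY order_id").fetchall()
assert rejoined == original

# A customer's city is now changed in exactly one row.
conn.execute("UPDATE customers SET city = 'Porto' WHERE customer_id = 100")
```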

Applying these normal forms stores each fact once, so updates touch fewer rows and inconsistencies become less likely, which makes large amounts of information easier to manage with minimal errors and redundancy.

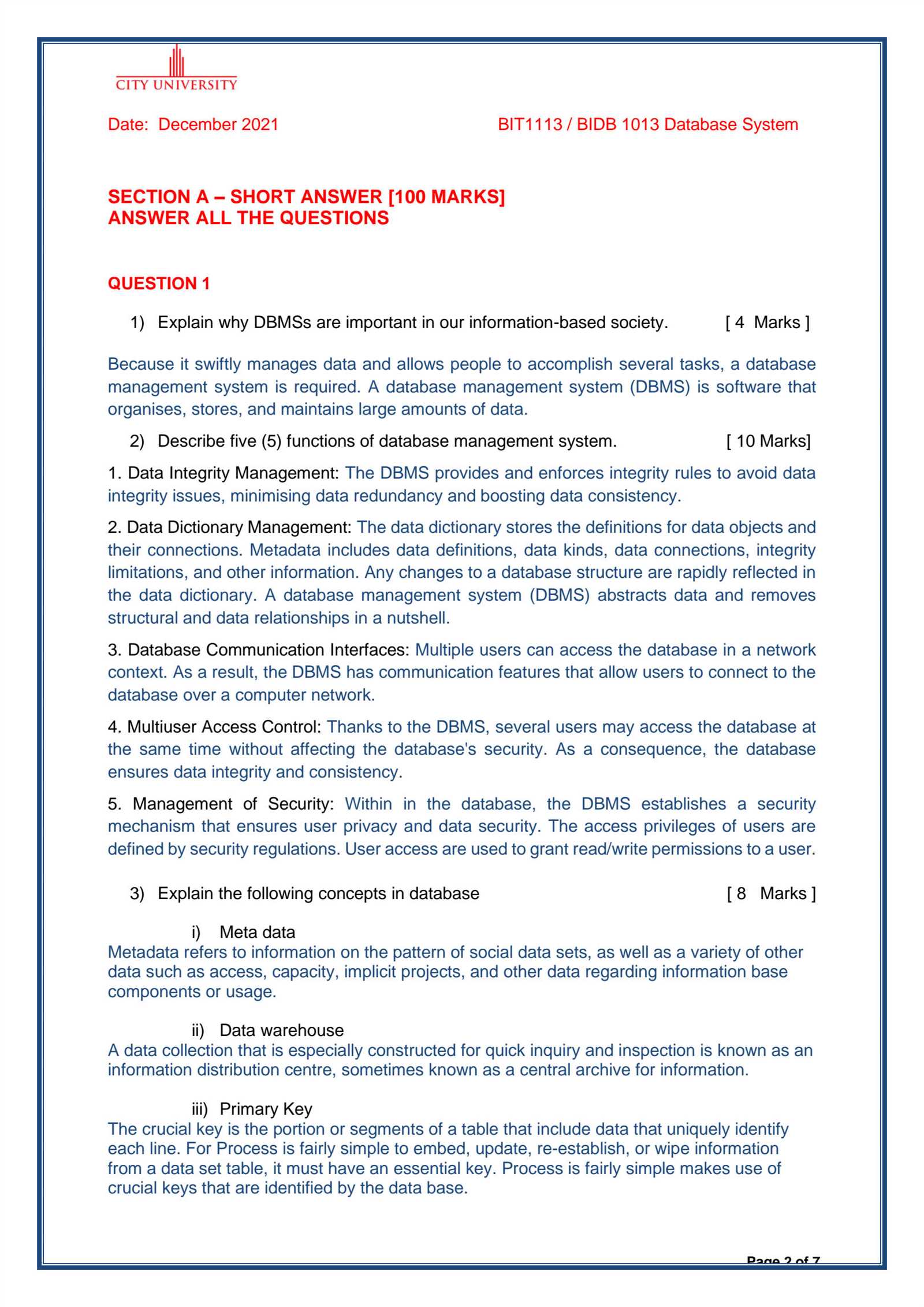

Common Database Constraints and Rules

When managing structured data, it is essential to enforce certain limitations and guidelines to ensure consistency, accuracy, and reliability. These rules help control the integrity of the stored information, prevent errors, and ensure that the system operates as expected. By applying constraints, you can maintain the relationships between different sets of information, ensuring that they follow the required format and logic.

Types of Constraints

Various types of restrictions are commonly applied to ensure data accuracy and consistency:

- Primary Key: Ensures that each record in a table has a unique, non-null identifier, preventing duplicate entries.

- Foreign Key: Establishes a link between two tables by ensuring that values in one table correspond to valid entries in another.

- Unique: Guarantees that values in a specified column are unique across all records in the table.

- Check: Enforces specific conditions on the values entered in a column, such as restricting them to a certain range or format.

- Not Null: Ensures that a column must contain a value, preventing it from being left empty.
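Each constraint can be seen rejecting bad data in the minimal sketch below, which uses Python's `sqlite3` module with hypothetical `departments` and `employees` tables. One SQLite-specific assumption matters: SQLite enforces foreign keys only after `PRAGMA foreign_keys = ON` is issued on the connection.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("PRAGMA foreign_keys = ON")  # SQLite enforces foreign keys only when enabled

# Hypothetical schema declaring all five constraint types.
conn.executescript("""
    CREATE TABLE departments (
        dept_id INTEGER PRIMARY KEY,
        name    TEXT NOT NULL UNIQUE
    );
    CREATE TABLE employees (
        emp_id  INTEGER PRIMARY KEY,
        email   TEXT NOT NULL UNIQUE,
        salary  REAL CHECK (salary > 0),
        dept_id INTEGER REFERENCES departments(dept_id)
    );
    INSERT INTO departments VALUES (1, 'Research');
    INSERT INTO employees VALUES (1, 'a@example.com', 5000, 1);
""")

# Each statement breaks exactly one constraint, so SQLite rejects it.
violations = {
    "primary key": "INSERT INTO departments VALUES (1, 'Sales')",
    "unique": "INSERT INTO employees VALUES (2, 'a@example.com', 4000, 1)",
    "check": "INSERT INTO employees VALUES (3, 'b@example.com', -10, 1)",
    "not null": "INSERT INTO employees VALUES (4, NULL, 4000, 1)",
    "foreign key": "INSERT INTO employees VALUES (5, 'c@example.com', 4000, 99)",
}
rejected = []
for constraint, sql in violations.items():
    try:
        conn.execute(sql)
    except sqlite3.IntegrityError as exc:
        rejected.append(constraint)
        print(f"{constraint}: {exc}")
```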

Enforcing Consistency and Reliability

These rules play a critical role in preserving the quality of stored information by preventing invalid or inconsistent data from being entered. They support efficient data manipulation, querying, and integrity by ensuring that relationships between different pieces of information are well-defined and maintained. With these restrictions in place, data becomes more reliable and easier to work with in any system.

Handling Relationships in Databases

In any system that stores related sets of information, it is crucial to define how different pieces of data interact with one another. Managing these interactions properly ensures that information is accurately represented and easily accessible. Understanding the different types of relationships helps in organizing data efficiently and enables powerful queries and operations.

Types of Relationships

Data relationships can take various forms depending on how elements are linked. The most common types include:

- One-to-One: Each record in one table is associated with a single record in another table.

- One-to-Many: A single record in one table can be linked to multiple records in another table.

- Many-to-Many: Multiple records in one table are associated with multiple records in another table, often managed through a junction table.
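A many-to-many relationship can be sketched with a junction table, as below (Python's `sqlite3` module; the students, courses, and enrollments are hypothetical). Each enrollment row pairs one student with one course, and a composite primary key prevents the same pair from being recorded twice.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("PRAGMA foreign_keys = ON")
# Hypothetical schema: students and courses linked through enrollments.
conn.executescript("""
    CREATE TABLE students (student_id INTEGER PRIMARY KEY, name TEXT NOT NULL);
    CREATE TABLE courses  (course_id  INTEGER PRIMARY KEY, title TEXT NOT NULL);
    -- Junction table: one row per (student, course) pair.
    CREATE TABLE enrollments (
        student_id INTEGER REFERENCES students(student_id),
        course_id  INTEGER REFERENCES courses(course_id),
        PRIMARY KEY (student_id, course_id)
    );
    INSERT INTO students VALUES (1, 'Ana'), (2, 'Ben');
    INSERT INTO courses  VALUES (10, 'Databases'), (20, 'Networks');
    INSERT INTO enrollments VALUES (1, 10), (1, 20), (2, 10);
""")

# Resolve the many-to-many link by joining through the junction table.
pairs = conn.execute("""
    SELECT s.name, c.title
    FROM students s
    JOIN enrollments e ON e.student_id = s.student_id
    JOIN courses c     ON c.course_id  = e.course_id
    ORDER BY s.name, c.title""").fetchall()
print(pairs)  # [('Ana', 'Databases'), ('Ana', 'Networks'), ('Ben', 'Databases')]
```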

Managing Relationships

Efficiently managing these connections requires the use of keys and references to maintain consistency and integrity:

- Primary Key: Uniquely identifies each record within a table.

- Foreign Key: Establishes a link between tables, referring to the primary key in another table to maintain relationships.

- Join Operations: Allow data from multiple tables to be combined based on these relationships, enabling more complex queries and analysis.

Properly handling relationships ensures that data is consistent, reduces redundancy, and makes it easier to maintain and retrieve information across tables in an organized manner.

Data Integrity and Consistency Principles

Ensuring that information remains accurate, reliable, and consistent across different stages of processing is a core aspect of any well-structured system. When managing large amounts of information, it’s essential to prevent errors, inconsistencies, and corruption that could compromise the quality of the data. Adhering to integrity and consistency principles helps maintain trustworthiness and supports the overall performance of the system.

Key Integrity Constraints

To safeguard the quality of stored data, certain rules and checks are applied throughout the system:

- Entity Integrity: Requires every row to have a unique, non-null primary key, so each entity can be identified unambiguously.

- Referential Integrity: Ensures that relationships between records are maintained by validating foreign key references between tables.

- Domain Integrity: Enforces rules on acceptable values for attributes, ensuring that only valid data is stored.

Maintaining Consistency

Consistency principles ensure that data remains in a valid state before and after any update or transaction. The transaction properties that support this include:

- Atomicity: Ensures that all parts of a transaction are completed successfully or not at all, preventing partial updates.

- Consistency: Guarantees that a system always transitions from one valid state to another, preserving predefined rules and constraints.

- Isolation: Ensures that transactions are processed independently, preventing interference and maintaining consistent results even under concurrent operations.
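Atomicity and consistency work together in the classic transfer example, sketched here with Python's `sqlite3` module. The `accounts` table and the `transfer` helper are hypothetical; a CHECK constraint encodes the consistency rule that balances never go negative, and the connection's context manager rolls the whole transaction back if either update fails.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
# Hypothetical accounts table; the CHECK constraint is the consistency rule.
conn.execute("""CREATE TABLE accounts (
    id      TEXT PRIMARY KEY,
    balance INTEGER NOT NULL CHECK (balance >= 0))""")
conn.executemany("INSERT INTO accounts VALUES (?, ?)", [("alice", 100), ("bob", 50)])
conn.commit()

def transfer(conn, src, dst, amount):
    """Hypothetical helper: move `amount` from src to dst as one atomic unit."""
    try:
        with conn:  # commits if the block succeeds, rolls back if it raises
            # Credit first, so a failing debit shows the credit being undone too.
            conn.execute("UPDATE accounts SET balance = balance + ? WHERE id = ?", (amount, dst))
            conn.execute("UPDATE accounts SET balance = balance - ? WHERE id = ?", (amount, src))
        return True
    except sqlite3.IntegrityError:
        return False  # CHECK failed; the whole transaction was rolled back

def balances(conn):
    return dict(conn.execute("SELECT id, balance FROM accounts ORDER BY id"))

print(transfer(conn, "alice", "bob", 30), balances(conn))   # True {'alice': 70, 'bob': 80}
print(transfer(conn, "alice", "bob", 500), balances(conn))  # False {'alice': 70, 'bob': 80}
```

The second transfer is rejected as a whole: the credit to Bob had already been applied inside the transaction, but the rollback removes it along with the failed debit.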

By following these principles, systems can ensure that data remains accurate, consistent, and trustworthy, providing a stable foundation for decision-making and analysis.

ACID Properties and Their Importance

In any system handling multiple transactions, it is crucial to ensure that operations are completed reliably and accurately. The ACID properties provide a set of guarantees that help maintain consistency, reliability, and integrity during transactions. These principles form the backbone of any system that handles sensitive or critical data, ensuring that processes are executed without errors or data corruption, even in the case of failures.

Overview of ACID Properties

The ACID properties consist of four key principles that must be upheld during every transaction:

- Atomicity: Guarantees that each transaction is fully completed or not executed at all. If any part of the transaction fails, the entire process is rolled back.

- Consistency: Ensures that a transaction brings the system from one valid state to another, preserving the integrity of data throughout.

- Isolation: Ensures that multiple transactions occurring simultaneously do not interfere with each other, keeping each transaction isolated from the others.

- Durability: Guarantees that once a transaction has been committed, it will not be lost, even in the case of a system crash.
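Durability is the hardest property to show in a few lines, but the sketch below (Python's `sqlite3` module, a hypothetical `ledger` table in a temporary file) captures its visible contract: committed work survives closing and reopening the database, while uncommitted work does not. It does not simulate a crash; SQLite's crash guarantees rely on its journal and default synchronization settings.

```python
import os
import sqlite3
import tempfile

# Hypothetical single-table database stored in a temporary file.
with tempfile.TemporaryDirectory() as tmp:
    path = os.path.join(tmp, "ledger.db")

    conn = sqlite3.connect(path)
    conn.execute("CREATE TABLE ledger (entry TEXT)")
    conn.execute("INSERT INTO ledger VALUES ('committed')")
    conn.commit()  # with default settings, the change is on disk once this returns
    conn.execute("INSERT INTO ledger VALUES ('uncommitted')")
    conn.close()   # close() does not commit, so this open transaction is rolled back

    # A fresh connection (as after an application restart) sees only committed work.
    conn = sqlite3.connect(path)
    entries = [row[0] for row in conn.execute("SELECT entry FROM ledger")]
    conn.close()

print(entries)  # ['committed']
```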

Importance of ACID Principles

These properties keep a system reliable under failures, interruptions, and concurrent access: data stays accurate and consistent even if a transaction aborts partway through or the server crashes after a commit. This reliability matters most where data integrity is paramount, such as in financial transactions or customer records.

Overall, the ACID properties provide the foundation for creating trustworthy and fault-tolerant systems that can handle complex tasks while ensuring data consistency and accuracy.

Database Design and Schema Creation

Designing an effective structure for storing and organizing information is essential for ensuring smooth data operations. The architecture of a system defines how various elements of data relate to each other, how they are stored, and how they can be accessed efficiently. A well-thought-out framework improves data consistency, retrieval speed, and scalability while minimizing redundancy.

Steps in Designing a Schema

Creating an optimal layout for information involves several key stages:

- Requirement Analysis: Understand the needs of the application and the types of data that need to be stored, ensuring a clear vision of the structure.

- Entity Identification: Identify key entities (e.g., customers, products) and the relationships between them to create a foundation for the design.

- Normalization: Apply normal forms to eliminate redundancy, so that each fact is stored in exactly one table.

- Defining Constraints: Establish rules and limits (such as keys and integrity constraints) that ensure accurate and consistent data.

Best Practices for Schema Creation

When constructing the framework for storing data, consider the following practices to ensure efficiency and scalability:

- Modularization: Break the structure into smaller, manageable parts to make updates and changes easier over time.

- Indexing: Create indexes on frequently accessed fields to speed up data retrieval and improve system performance.

- Future-Proofing: Design the system to be flexible and adaptable to future changes in data types or business requirements.
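The effect of an index can be observed with SQLite's `EXPLAIN QUERY PLAN`, as in this sketch using Python's `sqlite3` module and a hypothetical `orders` table. Before the index exists SQLite must scan the table; afterwards it searches the index. The exact plan wording differs between SQLite versions, so the comments show examples only.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
# Hypothetical orders table with 1,000 rows spread over 100 customers.
conn.execute("CREATE TABLE orders (id INTEGER PRIMARY KEY, customer_id INTEGER, total REAL)")
conn.executemany(
    "INSERT INTO orders (customer_id, total) VALUES (?, ?)",
    [(i % 100, i * 1.5) for i in range(1000)],
)

def plan(sql):
    """Return the human-readable steps (last column) of SQLite's EXPLAIN QUERY PLAN."""
    return [row[-1] for row in conn.execute("EXPLAIN QUERY PLAN " + sql)]

query = "SELECT total FROM orders WHERE customer_id = 42"
before = plan(query)  # e.g. ['SCAN orders']: every row is examined

conn.execute("CREATE INDEX idx_orders_customer ON orders(customer_id)")
after = plan(query)   # e.g. ['SEARCH orders USING INDEX idx_orders_customer (customer_id=?)']
print(before)
print(after)
```

The index speeds up this lookup, but every later INSERT, UPDATE, or DELETE on `orders` must also maintain it, which is the trade-off to weigh for each indexed column.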

Following these steps and best practices helps ensure that the system can handle current and future demands, with a focus on performance, data integrity, and ease of maintenance.

Types of Indexes in Database Management

Efficient data retrieval is crucial for maintaining the performance of a system. Indexes play a vital role in speeding up searches by providing quick access to the records based on specific criteria. By organizing data in a way that reduces the need for full table scans, indexes can dramatically improve the performance of queries, especially in large datasets.

Common Types of Indexes

There are several types of indexes, each designed to improve the performance of certain operations or queries. Below is a brief overview of the most widely used indexing methods:

| Index Type | Description | Use Cases |
|---|---|---|
| Single-Level Index | A single ordered file of key and record-pointer entries, searched directly (for example, by binary search). | Quick searches on small datasets or single-column lookups. |
| B-Tree Index | A balanced tree structure that allows for efficient searching, insertion, and deletion operations. | Common in systems where fast retrieval and range queries are needed. |
| Hash Index | Uses a hash function to compute where the data can be found, offering constant-time lookup on average. | Ideal for equality-based searches where the exact value is queried; not useful for range queries. |
| Bitmap Index | Stores a bitmap for each distinct value in a column, making it efficient for columns with a limited number of unique values. | Effective for low-cardinality columns (e.g., gender or status). |
| Composite Index | Combines multiple columns into a single index to improve performance for queries that involve more than one column. | Queries that filter on several columns together; most effective when the filter includes the index's leading column. |
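The bitmap idea from the table above can be sketched in plain Python, using integers as bitsets: bit *i* of a value's bitmap is set when row *i* holds that value. The column data and helper names are hypothetical, and production implementations typically compress the bitmaps, but the key property is the same: combining predicates costs one bitwise operation.

```python
from collections import defaultdict

def build_bitmap_index(values):
    """Map each distinct value to an int whose bit i is set when row i holds that value."""
    index = defaultdict(int)
    for row_id, value in enumerate(values):
        index[value] |= 1 << row_id
    return dict(index)

def rows_from_bitmap(bitmap):
    """Decode a bitmap back into the list of matching row ids."""
    return [i for i in range(bitmap.bit_length()) if bitmap >> i & 1]

# Two low-cardinality columns of a hypothetical table, one entry per row.
status = ["active", "inactive", "active", "active", "inactive"]
region = ["EU", "EU", "US", "EU", "US"]

status_idx = build_bitmap_index(status)
region_idx = build_bitmap_index(region)

# WHERE status = 'active' AND region = 'EU' becomes a single bitwise AND.
match = status_idx["active"] & region_idx["EU"]
print(rows_from_bitmap(match))  # [0, 3]
```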

Choosing the Right Index

Choosing the right indexing method depends on the specific use cases and the types of queries most frequently executed. For example, B-tree indexes are ideal for range-based searches, while hash indexes are more efficient for exact match lookups. When creating an index, it’s essential to balance the benefits of faster query times with the potential overhead of maintaining the index, particularly during insert, update, and delete operations.
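The difference between ordered and hashed access can be sketched with Python's standard library: a sorted list searched with `bisect` stands in for a B-tree, and a `dict` stands in for a hash index. Both are simplifications (a real B-tree is a disk-oriented balanced tree), and the keys and row ids are hypothetical.

```python
import bisect

# Hypothetical (key, row id) entries for one indexed column.
entries = [(42, "r7"), (7, "r1"), (19, "r3"), (88, "r9"), (23, "r4")]

# Ordered access (stand-in for a B-tree): entries kept sorted by key.
ordered = sorted(entries)
keys = [k for k, _ in ordered]

# Hashed access (stand-in for a hash index): key -> row id, no ordering.
hashed = dict(entries)

def range_lookup(lo, hi):
    """Rows with lo <= key <= hi: two binary searches, then one contiguous slice."""
    start = bisect.bisect_left(keys, lo)
    end = bisect.bisect_right(keys, hi)
    return [row for _, row in ordered[start:end]]

def equality_lookup(key):
    """Exact match: one hash probe on average, but a range would need every key checked."""
    return hashed.get(key)

print(range_lookup(10, 50))  # ['r3', 'r4', 'r7']
print(equality_lookup(88))   # r9
```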

Transactions and Concurrency Control

Managing multiple transactions at once while preserving data consistency and integrity is a fundamental challenge in any system that handles concurrent operations. Several processes must be able to run without interfering with one another, and concurrency control is the mechanism that prevents conflicts between them and keeps operations executing correctly.

Key Concepts in Managing Multiple Tasks

When multiple operations are being executed concurrently, several issues can arise, such as conflicts, inconsistencies, or even data corruption. The following strategies are used to handle these challenges:

- Atomicity: Treats each transaction as a single unit that either completes fully or has no effect at all.

- Consistency: Guarantees that the system moves from one valid state to another, maintaining all integrity rules during each operation.

- Isolation: Ensures that concurrent transactions do not interfere with each other, preventing issues like dirty reads or lost updates.

- Durability: Once a transaction commits, its effects are permanent, even in the case of a system crash.

Techniques for Managing Simultaneous Processes

Several approaches are employed to manage the simultaneous execution of operations while maintaining the stability and consistency of the system:

- Locking Mechanisms: Control access to shared resources with locks. Shared locks let several transactions read the same data at once, while an exclusive lock gives a single writer sole access.

- Optimistic Concurrency Control: Assumes that conflicts between transactions are rare, so transactions run without locking and are validated for conflicts only when they try to commit; a transaction that fails validation is rolled back and restarted.

- Timestamp Ordering: Assigns each transaction a timestamp and ensures that conflicting operations execute in timestamp order; an operation that would violate this order causes its transaction to be rolled back.

- Multi-Version Concurrency Control (MVCC): Maintains multiple versions of data so that each transaction reads a consistent snapshot; readers do not block writers, and writers do not block readers.
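Optimistic concurrency control is often implemented with a version column, as in this minimal sketch using Python's `sqlite3` module. The `items` table, its `version` column, and the helper names are hypothetical, and the two competing clients are simulated on a single connection for brevity: an update succeeds only if the version it read is still current.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
# Hypothetical stock table; `version` is bumped on every successful write.
conn.execute("CREATE TABLE items (id INTEGER PRIMARY KEY, qty INTEGER, version INTEGER)")
conn.execute("INSERT INTO items VALUES (1, 10, 0)")
conn.commit()

def read(conn, item_id):
    return conn.execute("SELECT qty, version FROM items WHERE id = ?", (item_id,)).fetchone()

def write_if_unchanged(conn, item_id, new_qty, seen_version):
    """Validate at write time: update only if the version is still the one we read."""
    with conn:
        cur = conn.execute(
            "UPDATE items SET qty = ?, version = version + 1 WHERE id = ? AND version = ?",
            (new_qty, item_id, seen_version),
        )
    return cur.rowcount == 1  # 0 rows means another writer got there first

# Two clients read the same state, then both try to write.
qty_a, ver_a = read(conn, 1)
qty_b, ver_b = read(conn, 1)
print(write_if_unchanged(conn, 1, qty_a - 3, ver_a))  # True: first writer wins
print(write_if_unchanged(conn, 1, qty_b - 5, ver_b))  # False: stale version detected
print(read(conn, 1))                                   # (7, 1)
```

A client whose write is rejected re-reads the row and retries, which avoids the lost update without holding locks between the read and the write.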

By applying these strategies, systems can efficiently manage the execution of concurrent operations, maintaining both data integrity and performance. Understanding these techniques is essential for designing systems that handle multiple tasks without compromising reliability.

Database Security Best Practices

Ensuring the safety of critical information and preventing unauthorized access is a top priority for any system that handles sensitive data. Strong protection measures must be implemented to safeguard the integrity, confidentiality, and availability of stored information. By following certain guidelines and adopting proven methods, organizations can reduce the risk of breaches and maintain secure operations.

Core Strategies for Data Protection

Implementing robust security practices begins with understanding the different layers of protection required to safeguard valuable assets. Here are some essential strategies:

- Access Control: Limiting access to data based on roles and responsibilities ensures that only authorized personnel can view or modify sensitive information.

- Encryption: Encrypting data both in transit and at rest makes it unreadable to unauthorized users, reducing the risk of exposure in the event of a breach.

- Regular Audits: Conducting periodic security audits and vulnerability assessments helps identify potential weaknesses and address them before they become critical issues.

- Data Masking: Masking sensitive information for non-privileged users helps to minimize exposure, especially during development or testing phases.
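Data masking can be as simple as the hypothetical helper below, which hides all but the last few digits of a card-style number while keeping its separators. It is an application-side sketch; the function name and masking policy are assumptions, not a standard.

```python
def mask_card_number(card: str, visible: int = 4, mask_char: str = "*") -> str:
    """Hypothetical helper: replace all but the last `visible` digits with mask_char."""
    total_digits = sum(ch.isdigit() for ch in card)
    digits_seen = 0
    out = []
    for ch in card:
        if ch.isdigit():
            digits_seen += 1
            # Keep only the trailing `visible` digits readable.
            out.append(ch if digits_seen > total_digits - visible else mask_char)
        else:
            out.append(ch)  # separators stay, so the format remains recognizable
    return "".join(out)

print(mask_card_number("4111 1111 1111 1234"))  # **** **** **** 1234
```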

Protective Measures for Network and Systems

Along with protecting the data itself, securing the infrastructure surrounding it is essential. Effective network and system protection practices include:

- Firewalls: Configuring firewalls to filter incoming and outgoing traffic helps prevent unauthorized access to internal systems.

- Intrusion Detection Systems (IDS): Monitoring network traffic with an IDS detects potential security threats in real time and alerts administrators so they can respond.

- Regular Software Updates: Keeping all systems and software up to date ensures that known vulnerabilities are patched, reducing the risk of exploitation.

- Backups: Maintaining secure, up-to-date backups allows for data recovery in the event of data loss or a security breach.

Adopting these practices can significantly strengthen the protection of valuable data assets. Organizations must continuously review and update their security measures to stay ahead of emerging threats and ensure long-term safety.

Understanding Stored Procedures and Functions

In modern systems, efficient management of operations often requires executing predefined sets of instructions. These sets are commonly used to automate repetitive tasks and enhance performance. By storing these operations for reuse, both time and resources can be saved. The two primary techniques for encapsulating logic in this way are stored procedures and functions, each serving a specific purpose in the development and management of systems.

Key Differences Between Stored Procedures and Functions

While both stored procedures and functions offer ways to organize logic, they differ in their structure, usage, and capabilities. Below is a comparison that highlights their unique aspects:

| Aspect | Stored Procedures | Functions |
|---|---|---|
| Purpose | Performs operations such as data modification or multi-step tasks. | Computes and returns a result, typically used for calculations. |
| Return Type | Typically returns results through output parameters or result sets rather than a return value; details vary by system. | Returns a value: a single value for scalar functions (some systems also support table-valued functions). |
| Call Usage | Invoked explicitly with a statement such as CALL or EXEC. | Can be used inside SQL statements such as SELECT. |
| Side Effects | May modify data (e.g., insert, update, delete). | Usually expected not to modify data; some systems forbid it, while others allow it. |
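SQLite, used here through Python's `sqlite3` module, has no stored procedures, but it does let an application register a scalar function with `Connection.create_function`, which shows the function column of the table in action. The `net_price` function and `products` table are hypothetical; note that the function is called inline from a SELECT and has no side effects.

```python
import sqlite3

def net_price(price, tax_rate):
    """Hypothetical scalar function: a pure calculation with no side effects."""
    return round(price * (1 + tax_rate), 2)

conn = sqlite3.connect(":memory:")
# Register the Python function under an SQL name; 2 is its argument count.
conn.create_function("net_price", 2, net_price)

conn.execute("CREATE TABLE products (name TEXT, price REAL)")  # hypothetical table
conn.executemany("INSERT INTO products VALUES (?, ?)", [("pen", 2.0), ("lamp", 40.0)])

# The function is called inline, inside an ordinary SELECT.
rows = conn.execute(
    "SELECT name, net_price(price, 0.2) FROM products ORDER BY name"
).fetchall()
print(rows)  # [('lamp', 48.0), ('pen', 2.4)]
```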

Best Practices for Using Stored Procedures and Functions

To make the most of these tools, developers should follow a few best practices that ensure efficiency and maintainability:

- Modularity: Break down complex operations into smaller, manageable stored procedures and functions for better reusability.

- Error Handling: Use proper error-handling mechanisms to prevent unexpected behavior during execution.

- Parameterization: Always pass inputs as parameters rather than hardcoding values, so the same logic stays reusable and flexible.

- Performance Optimization: Ensure that the operations are optimized to run efficiently, especially when dealing with large datasets.
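Parameterization also protects queries from SQL injection, which the sketch below demonstrates with Python's `sqlite3` module. SQLite has no stored procedures, so the same principle is applied to a client-side query; the `users` table and both helper functions are hypothetical.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE users (name TEXT, role TEXT)")  # hypothetical table
conn.executemany("INSERT INTO users VALUES (?, ?)", [("ana", "admin"), ("ben", "staff")])

def find_role_unsafe(name):
    # The input is pasted into the SQL text, so it can change the query's meaning.
    return conn.execute(f"SELECT role FROM users WHERE name = '{name}'").fetchall()

def find_role(name):
    # The value is bound to the ? placeholder and is never parsed as SQL.
    return conn.execute("SELECT role FROM users WHERE name = ?", (name,)).fetchall()

attack = "x' OR '1'='1"
print(find_role_unsafe(attack))  # [('admin',), ('staff',)]: every row leaks
print(find_role(attack))         # []: treated as an ordinary (nonexistent) name
```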

Stored procedures and functions are powerful tools for developers, enabling the creation of efficient, reusable logic that can help automate tasks and improve performance. Understanding the differences between the two and applying them appropriately is key to developing well-structured, high-performance systems.

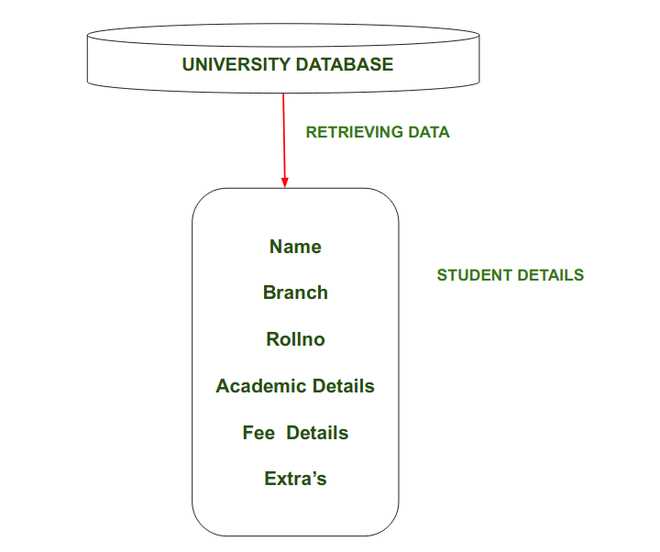

Data Retrieval with Joins and Subqueries

When working with large sets of information, it’s often necessary to retrieve data from multiple sources or tables. Two common techniques for this are joins and subqueries, which allow users to combine, filter, and organize data from different places within the same query. Both methods have their specific use cases, and understanding when and how to use them is essential for efficient data extraction and manipulation.

Understanding Joins

Joins are used to link related data from two or more tables based on common columns. There are several types of joins, each serving a specific purpose:

- Inner Join: Combines rows from two tables where there is a match in both. Only the matching rows are returned.

- Left Join (or Left Outer Join): Returns all records from the left table, along with matching records from the right table. If there is no match, NULL values are returned for the right table’s columns.

- Right Join (or Right Outer Join): Similar to Left Join, but returns all records from the right table and matching rows from the left table.

- Full Join (or Full Outer Join): Combines the results of both Left and Right Joins, returning all records from both tables, matched where possible and filled with NULL values where there is no match.

Joins are particularly useful when data is spread across different tables but shares common attributes, such as customer information stored in one table and order details in another.
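The customer-and-order case can be sketched with Python's `sqlite3` module and hypothetical tables. The sketch sticks to INNER and LEFT joins because SQLite added RIGHT and FULL OUTER JOIN only in version 3.39.0, which older Python installations may not bundle.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
# Hypothetical customers and orders; Cai has no orders.
conn.executescript("""
    CREATE TABLE customers (id INTEGER PRIMARY KEY, name TEXT);
    CREATE TABLE orders (id INTEGER PRIMARY KEY, customer_id INTEGER, amount REAL);
    INSERT INTO customers VALUES (1, 'Ana'), (2, 'Ben'), (3, 'Cai');
    INSERT INTO orders VALUES (10, 1, 25.0), (11, 1, 40.0), (12, 2, 15.0);
""")

# INNER JOIN: only customers with at least one matching order.
inner = conn.execute("""
    SELECT c.name, o.amount FROM customers c
    JOIN orders o ON o.customer_id = c.id
    ORDER BY c.name, o.amount""").fetchall()

# LEFT JOIN: every customer; order columns are NULL (None in Python) where nothing matches.
left = conn.execute("""
    SELECT c.name, o.amount FROM customers c
    LEFT JOIN orders o ON o.customer_id = c.id
    ORDER BY c.name, o.amount""").fetchall()

print(inner)  # [('Ana', 25.0), ('Ana', 40.0), ('Ben', 15.0)]
print(left)   # [('Ana', 25.0), ('Ana', 40.0), ('Ben', 15.0), ('Cai', None)]
```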

Using Subqueries for Data Extraction

Subqueries, or nested queries, allow one query to be embedded within another. This technique is useful for retrieving values that will be used in a larger query, especially when filtering or aggregating data. Subqueries can be used in the SELECT, WHERE, or FROM clauses.

- Scalar Subquery: Returns a single value, often used in the WHERE clause to compare against other values.

- Correlated Subquery: Refers to columns of the outer query and is conceptually evaluated once for each row the outer query processes (optimizers may rewrite it into a join).

- Non-correlated Subquery: Independent of the outer query, it is executed once and returns a result set that the outer query can use.
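All three kinds of subquery appear in this sketch, which uses Python's `sqlite3` module with hypothetical `employees` and `offices` tables.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
# Hypothetical employees and offices tables.
conn.executescript("""
    CREATE TABLE employees (name TEXT, dept TEXT, salary INTEGER);
    INSERT INTO employees VALUES
        ('Ana', 'eng', 120), ('Ben', 'eng', 90),
        ('Cai', 'ops', 70),  ('Dee', 'ops', 80);
    CREATE TABLE offices (dept TEXT, city TEXT);
    INSERT INTO offices VALUES ('eng', 'Lisbon');
""")

# Scalar subquery: the inner query yields one value (the overall average, 90).
above_avg = conn.execute("""
    SELECT name FROM employees
    WHERE salary > (SELECT AVG(salary) FROM employees)
    ORDER BY name""").fetchall()

# Correlated subquery: the inner query refers to e.dept from the outer row.
top_in_dept = conn.execute("""
    SELECT name FROM employees e
    WHERE salary = (SELECT MAX(salary) FROM employees WHERE dept = e.dept)
    ORDER BY name""").fetchall()

# Non-correlated subquery: runs independently and returns a set for IN.
in_lisbon = conn.execute("""
    SELECT name FROM employees
    WHERE dept IN (SELECT dept FROM offices WHERE city = 'Lisbon')
    ORDER BY name""").fetchall()

print(above_avg)    # [('Ana',)]
print(top_in_dept)  # [('Ana',), ('Dee',)]
print(in_lisbon)    # [('Ana',), ('Ben',)]
```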

Subqueries are powerful for situations where a direct join may not be appropriate or where complex filtering is required. They allow for more flexible and dynamic queries, particularly when working with calculated values or conditions based on another query’s result.

In practice, combining joins and subqueries allows for sophisticated data retrieval strategies. By mastering both techniques, you can handle complex data extraction and manipulation tasks more effectively, ensuring that the required information is retrieved accurately and efficiently.

Advanced SQL Techniques for Exam Success

To achieve mastery in querying, a solid understanding of advanced SQL techniques is crucial. These techniques enable more efficient, complex, and optimized solutions, especially when working with large datasets or tackling challenging tasks. By expanding your skills beyond the basics, you can handle more sophisticated operations and prepare yourself for advanced scenarios that often arise in assessments.

Working with Subqueries and Nested Selects

Subqueries are essential for breaking down complex queries into smaller, manageable parts. By embedding one query inside another, you can retrieve results based on dynamically calculated values or conditions. These nested queries can be used in various clauses, such as WHERE, SELECT, and HAVING, making them versatile tools for filtering or aggregating data. When practicing for assessments, try to understand the distinction between:

- Scalar Subqueries: Return a single value to be used within the outer query.

- Correlated Subqueries: Depend on the outer query’s current row, often used in WHERE clauses for conditional filtering.

- Non-correlated Subqueries: Independent from the outer query and return a static result set for comparison or filtering.

Mastering these types of subqueries will give you an edge in solving complex data retrieval problems, especially when more than one condition needs to be evaluated across different tables.

Leveraging Window Functions for Advanced Calculations

Window functions allow for advanced calculations across a set of rows related to the current row without collapsing the result set. These functions are useful for calculating running totals, averages, or ranking records, while still preserving individual row data. Common examples of window functions include:

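- ROW_NUMBER(): Assigns a sequential number to each row within its partition (or the whole result set), with no ties.

- RANK(): Similar to ROW_NUMBER(), but rows with identical values receive the same rank, and the ranks after a tie are skipped (e.g., 1, 2, 2, 4).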

- SUM(), AVG(), COUNT(): Aggregate functions that can be applied over a specified window of rows, rather than the entire dataset.

By using window functions, you can easily perform calculations on subsets of data, such as calculating the average order value for each customer or ranking sales performance across different regions, without losing row-level detail.
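Ranking within groups and per-group totals that keep row-level detail both appear in the sketch below, which uses Python's `sqlite3` module with a hypothetical `sales` table. It assumes SQLite 3.25 or later, the first version with window functions. The tie between Ben and Cai shows how ROW_NUMBER() and RANK() differ.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
# Hypothetical sales table; Ben and Cai tie on amount within 'north'.
conn.executescript("""
    CREATE TABLE sales (region TEXT, rep TEXT, amount INTEGER);
    INSERT INTO sales VALUES
        ('north', 'Ana', 500), ('north', 'Ben', 300), ('north', 'Cai', 300),
        ('south', 'Dee', 400), ('south', 'Eli', 200);
""")

rows = conn.execute("""
    SELECT region, rep, amount,
           -- rep breaks ties so ROW_NUMBER() is deterministic
           ROW_NUMBER() OVER (PARTITION BY region ORDER BY amount DESC, rep) AS row_num,
           RANK()       OVER (PARTITION BY region ORDER BY amount DESC)      AS rnk,
           SUM(amount)  OVER (PARTITION BY region)                           AS region_total
    FROM sales
    ORDER BY region, row_num""").fetchall()

for row in rows:
    print(row)
# ('north', 'Ana', 500, 1, 1, 1100)
# ('north', 'Ben', 300, 2, 2, 1100)
# ('north', 'Cai', 300, 3, 2, 1100)
# ('south', 'Dee', 400, 1, 1, 600)
# ('south', 'Eli', 200, 2, 2, 600)
```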

To truly excel, learn how to combine these advanced techniques with standard SQL operations. Practice regularly, experiment with complex queries, and keep refining your problem-solving strategies.