In the realm of predictive analytics, understanding the fundamentals of classification models is crucial. These techniques are used to predict categorical outcomes based on input data, enabling data scientists to make informed decisions in various industries. A deep understanding of how these models work, their application, and their limitations is essential for anyone looking to excel in this field.

Preparation for assessments in this domain requires a solid grasp of both theory and practical implementation. From interpreting coefficients to evaluating model performance, mastery of these topics can be challenging but rewarding. Effective preparation not only helps in exams but also enhances real-world problem-solving skills.

This guide aims to provide clarity on the core principles, common pitfalls, and best practices. By reviewing key concepts and applying them through problem-solving, learners can build confidence and deepen their understanding of these vital analytical techniques. Whether you’re looking to sharpen your skills or improve your exam performance, this article offers essential insights to guide you through the process.

Logistic Regression Exam Questions and Answers

Mastering the principles of classification models is crucial for success in assessments that test understanding of statistical methods. These models are foundational tools used to predict categorical outcomes from input data, and they play a significant role in fields like healthcare, finance, and marketing. A clear grasp of the underlying concepts and techniques is essential for tackling challenges and performing well in related tests.

Success in this area requires familiarity with essential topics such as understanding model coefficients, interpreting odds ratios, and evaluating performance metrics. In addition to theoretical knowledge, being able to apply these concepts through practical examples is key to answering test-related problems accurately and efficiently.

By exploring common scenarios and problem-solving strategies, you can build a strong foundation for navigating assessments. This section will walk you through typical problems, clarifying how to approach each and ensuring you are well-prepared for the challenges ahead. Mastery of these topics will boost your confidence and help you achieve a deeper understanding of how to use classification techniques effectively in various real-world applications.

Understanding Logistic Regression Basics

The foundation of many predictive models lies in understanding how data can be classified into distinct categories. This method allows analysts to predict the probability of an outcome based on input variables. At its core, the process involves finding relationships between the predictors and the target variable, helping organizations make data-driven decisions.

Key to mastering this concept is comprehending how different types of input features influence the outcome. These models use a mathematical approach to estimate the likelihood of an event occurring, making them ideal for applications where results are binary or categorical in nature.

| Key Component | Explanation |
|---|---|
| Model Coefficients | Indicate the strength and direction of the relationship between each predictor and the log-odds of the outcome. |
| Probability Function | The logistic (sigmoid) function, which transforms the linear model output into a probability score between 0 and 1. |
| Threshold | Defines the cutoff on the predicted probability that determines which class is predicted. |
| Odds Ratio | Measures how the odds of the event change for a one-unit increase in a predictor (or between two groups); it is obtained by exponentiating the predictor's coefficient. |

Understanding these basics is essential for building robust models that can accurately predict outcomes and provide valuable insights into complex datasets. Through proper application, these techniques become powerful tools for statistical analysis and decision-making.
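The components in the table can be chained together in a few lines of Python. The sketch below assumes a model that has already been fitted. The intercept, the coefficient values, and the 0.5 threshold are illustrative assumptions, not values from any real dataset.

```python
import math

def sigmoid(z: float) -> float:
    """Logistic function: maps any real number to a probability in (0, 1)."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)  # this branch avoids overflow for large negative z
    return e / (1.0 + e)

def predict_proba(intercept: float, coefs: list, x: list) -> float:
    """Compute the linear predictor (the log-odds) and convert it to a probability."""
    log_odds = intercept + sum(b * xi for b, xi in zip(coefs, x))
    return sigmoid(log_odds)

def predict_class(prob: float, threshold: float = 0.5) -> int:
    """Apply the classification threshold to a predicted probability."""
    return 1 if prob >= threshold else 0

# Hypothetical fitted model with two predictors (illustrative values only).
intercept = -1.0
coefs = [0.8, -0.5]
x = [2.0, 1.0]

p = predict_proba(intercept, coefs, x)  # log-odds = -1.0 + 0.8*2.0 - 0.5*1.0 = 0.1
print(round(p, 3), predict_class(p))    # 0.525 1
print(predict_class(p, threshold=0.6))  # 0
```

Changing `threshold` changes only the final class label. The predicted probability stays the same.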

Key Concepts in Logistic Regression

Understanding the essential elements of classification models is crucial for building accurate predictions based on data. These models rely on several key principles that help determine how input variables relate to the predicted outcome. Familiarity with these concepts ensures the correct interpretation and effective application of the techniques used in statistical analysis.

Important Principles to Master

- Odds and Odds Ratio: The odds represent the likelihood of an event occurring versus not occurring, while the odds ratio compares these odds between different groups or variables.

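- Link Function: The logit (log-odds) function connects the probability of the outcome to the linear combination of predictors. Its inverse, the logistic (sigmoid) function, maps the linear output back to a probability between 0 and 1.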

- Model Coefficients: These values reflect the strength of the relationship between predictor variables and the target, helping to quantify the impact of each variable.

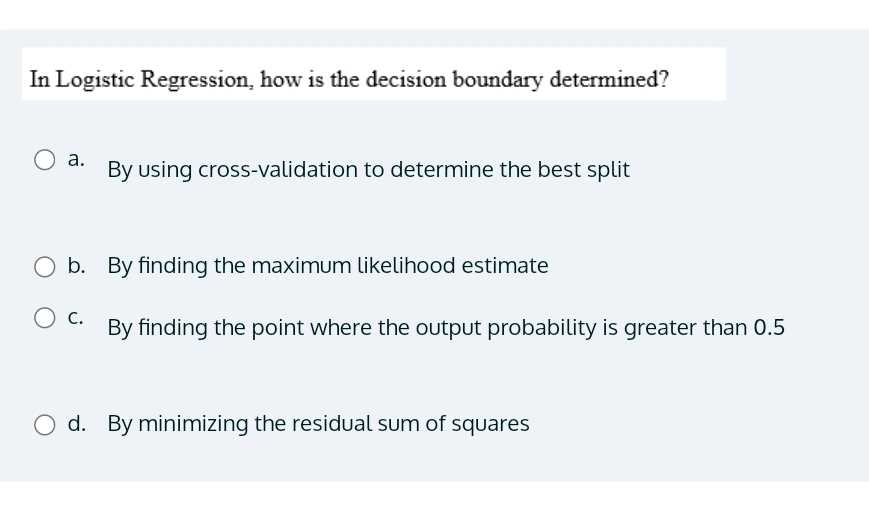

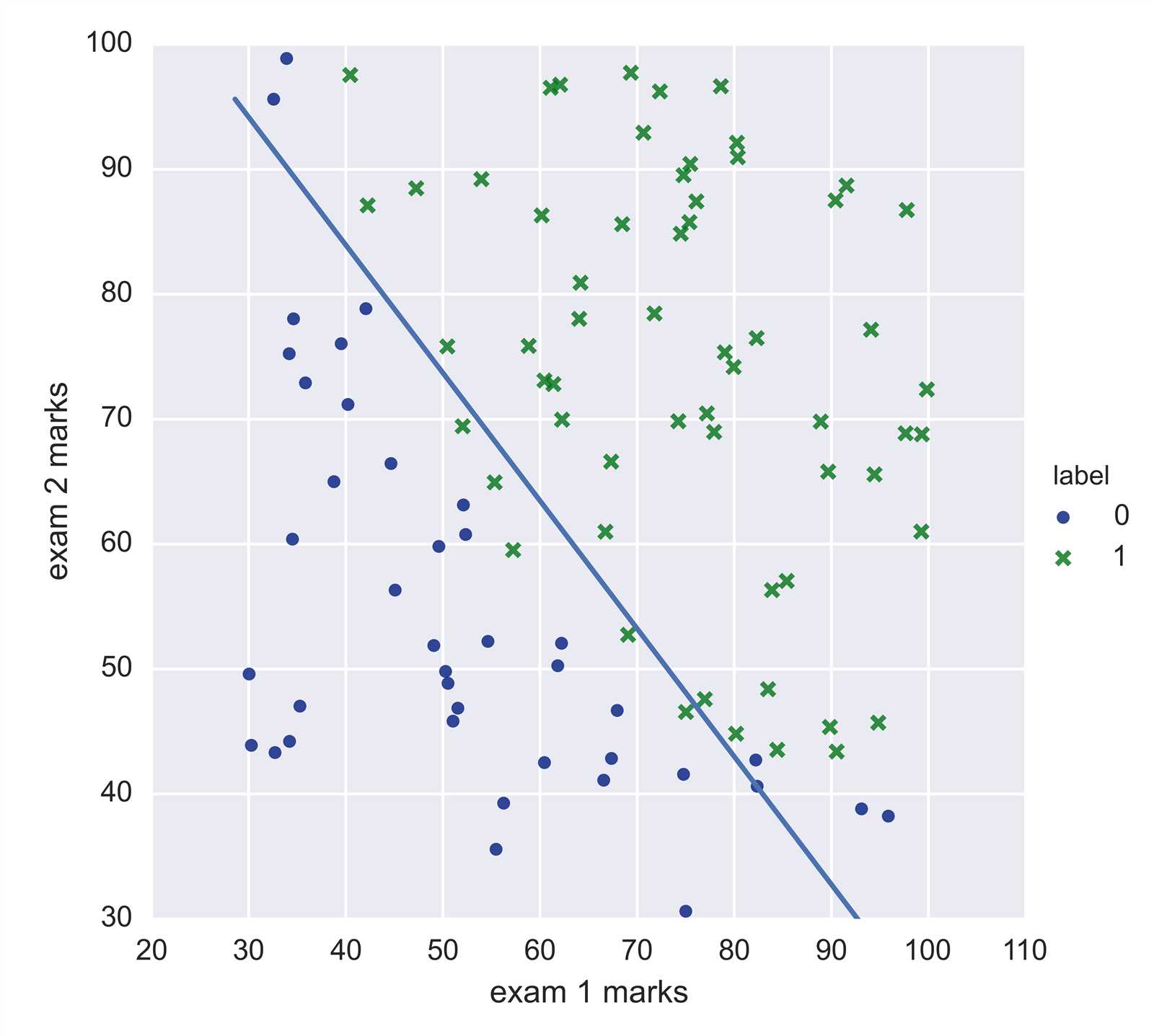

- Classification Threshold: The decision boundary that distinguishes one outcome from another based on the predicted probability.

- Confusion Matrix: A table used to evaluate model performance, comparing predicted outcomes with actual values.

Application Areas

- Health industry: Predicting the likelihood of disease occurrence based on patient data.

- Finance: Classifying customers based on their likelihood to default on loans.

- Marketing: Segmenting customers for targeted campaigns based on their purchasing behavior.

Grasping these fundamental concepts allows for a deeper understanding of how predictive models operate and how to apply them effectively in a wide range of domains.

Common Mistakes in Logistic Regression

When working with classification models, there are several pitfalls that can lead to inaccurate results or poor model performance. These mistakes often stem from misinterpretation of the model’s behavior or improper handling of data. Understanding these common errors can help ensure more accurate predictions and better insights from statistical analysis.

Overfitting the Model

One of the most common mistakes is overfitting, which occurs when the model becomes too complex and captures noise or irrelevant patterns in the data. This leads to excellent performance on training data but poor generalization to new, unseen data. To avoid overfitting, it’s essential to:

- Regularize the model by adding penalties to prevent large coefficients.

- Use cross-validation to assess model performance on different subsets of data.

- Limit the number of variables used in the model.

Ignoring Multicollinearity

Multicollinearity arises when predictor variables are highly correlated with each other. This can make it difficult to isolate the individual effect of each variable on the outcome. It can lead to unstable estimates and misleading conclusions. To address multicollinearity:

- Check for correlations among predictor variables before building the model.

- Remove or combine highly correlated features.

- Use techniques such as principal component analysis (PCA) to reduce dimensionality.

By recognizing these common issues and taking steps to address them, you can enhance the reliability and effectiveness of classification models.

How to Interpret Logistic Regression Coefficients

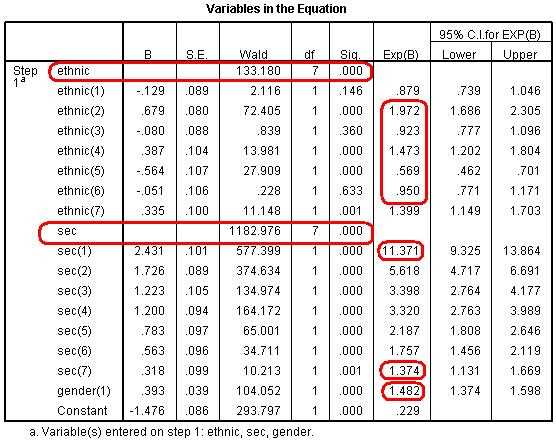

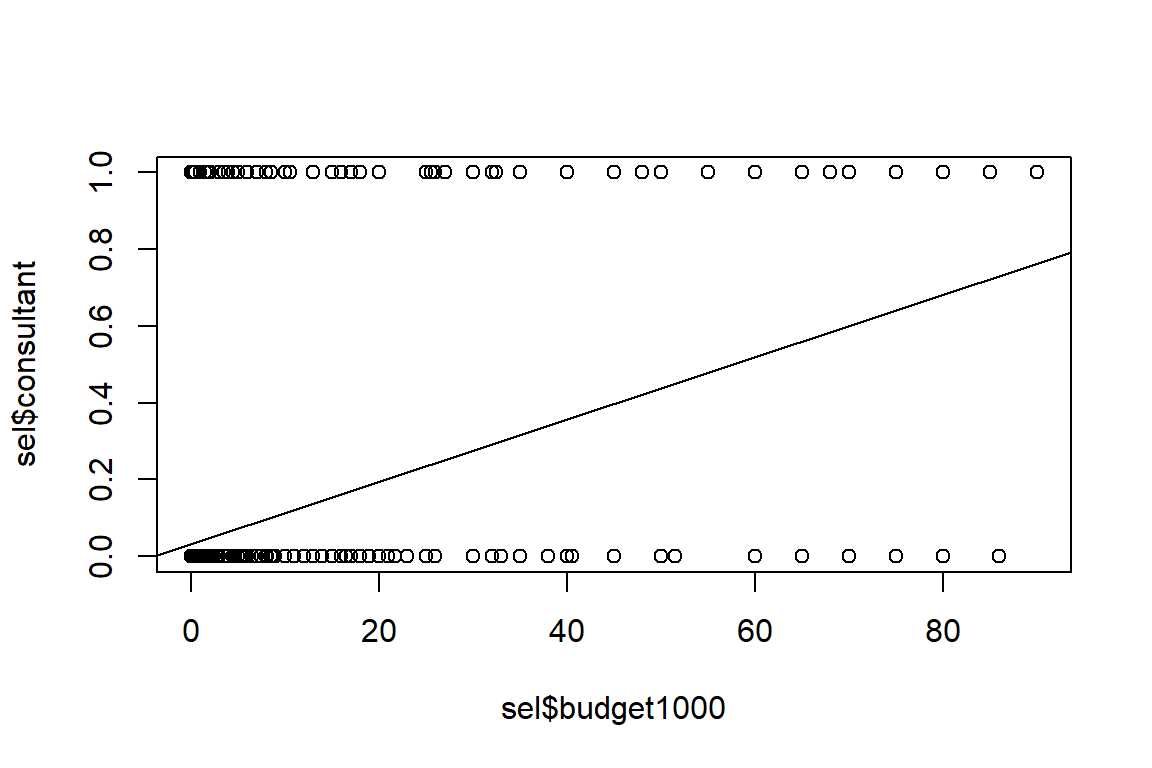

In statistical modeling, coefficients play a crucial role in understanding the relationship between predictor variables and the outcome. These values represent the effect that each predictor has on the likelihood of a certain event occurring. Properly interpreting these coefficients allows analysts to draw meaningful conclusions from their models and make data-driven decisions.

The interpretation of coefficients in classification models differs from traditional linear models. The model is linear on the log-odds scale rather than the probability scale. As a result, each coefficient gives the change in the log-odds of the outcome for a one-unit increase in the predictor variable, holding all other variables constant.

To interpret these coefficients in a more intuitive way, the exponentiation of the coefficient (i.e., e^β) is often used. This gives the odds ratio, which tells you how the odds of the event change when the predictor variable increases by one unit.

- Positive Coefficients: If the coefficient is positive, an increase in the predictor variable increases the odds of the event occurring.

- Negative Coefficients: A negative coefficient suggests that an increase in the predictor variable decreases the odds of the event.

- Near-Zero Coefficients: A coefficient close to zero (an odds ratio close to 1) means the predictor variable has little to no effect on the odds of the outcome.
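A short sketch of this interpretation follows. It converts coefficients into odds ratios and percentage changes in the odds. The predictor names and coefficient values are hypothetical.

```python
import math

def odds_ratio(beta: float, delta: float = 1.0) -> float:
    """Multiplicative change in the odds for a `delta`-unit increase in the predictor."""
    return math.exp(beta * delta)

def percent_change_in_odds(beta: float, delta: float = 1.0) -> float:
    """The same effect expressed as a percentage change in the odds."""
    return (odds_ratio(beta, delta) - 1.0) * 100.0

# Hypothetical coefficients on the log-odds scale, for illustration only.
coefficients = {"age": 0.05, "smoker": 0.69, "exercise_hours": -0.22, "noise_feature": 0.001}

for name, beta in coefficients.items():
    print(f"{name:15s} beta={beta:+.3f}  OR={odds_ratio(beta):.3f}  "
          f"odds change={percent_change_in_odds(beta):+.1f}%")

# Effects compound multiplicatively: a 10-unit increase gives exp(10 * beta).
print(f"age +10 years: OR={odds_ratio(coefficients['age'], delta=10):.3f}")
```

A k-unit change multiplies the odds by e^(kβ), not by k * e^β.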

By carefully examining the coefficients and their corresponding odds ratios, analysts can gain valuable insights into the factors that influence their outcome of interest.

Multicollinearity in Logistic Regression Models

When building predictive models, one common issue that arises is the high correlation between predictor variables. This phenomenon, known as multicollinearity, can significantly impact the stability and reliability of the model. When predictors are highly correlated, it becomes difficult to determine the individual effect of each variable on the outcome, leading to inaccurate coefficient estimates.

How Multicollinearity Affects Model Interpretation

Multicollinearity can cause the model to produce large standard errors for the coefficients, making it difficult to assess their significance. In extreme cases, it can lead to unstable estimates, where small changes in the data result in large fluctuations in the model’s coefficients. This undermines the interpretability of the results and can lead to misleading conclusions about the relationship between variables.

Detecting and Addressing Multicollinearity

There are several ways to detect multicollinearity in a model. One common approach is to examine the correlation matrix of the predictors. If two or more variables are highly correlated (typically above 0.8 or 0.9), multicollinearity may be a concern. Another method is to compute the variance inflation factor (VIF), which quantifies how much the variance of a coefficient is inflated due to collinearity with other predictors. A VIF greater than 10 suggests a high degree of multicollinearity.

- Removing Variables: One solution is to remove one of the highly correlated predictors from the model.

- Combining Variables: Combining similar predictors into a single composite variable can help reduce multicollinearity.

- Principal Component Analysis: This dimensionality reduction technique can transform correlated variables into a smaller set of uncorrelated components.
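Libraries such as statsmodels provide a ready-made VIF function. The dependency-free sketch below instead relies on a standard identity: VIF_j equals the j-th diagonal element of the inverse of the predictors' correlation matrix, which is the same as 1 / (1 - R_j^2). The three predictor columns are hypothetical data. The code assumes no column is constant.

```python
import math

def correlation_matrix(columns):
    """Pearson correlation matrix for equal-length numeric columns (none constant)."""
    n = len(columns[0])
    centered = [[v - sum(c) / n for v in c] for c in columns]
    norms = [math.sqrt(sum(v * v for v in c)) for c in centered]
    k = len(columns)
    return [[sum(a * b for a, b in zip(centered[i], centered[j])) / (norms[i] * norms[j])
             for j in range(k)] for i in range(k)]

def invert(matrix):
    """Gauss-Jordan inversion with partial pivoting."""
    k = len(matrix)
    aug = [row[:] + [1.0 if i == j else 0.0 for j in range(k)] for i, row in enumerate(matrix)]
    for col in range(k):
        pivot = max(range(col, k), key=lambda r: abs(aug[r][col]))
        if abs(aug[pivot][col]) < 1e-12:
            raise ValueError("matrix is singular (perfect collinearity)")
        aug[col], aug[pivot] = aug[pivot], aug[col]
        p = aug[col][col]
        aug[col] = [v / p for v in aug[col]]
        for r in range(k):
            if r != col:
                factor = aug[r][col]
                aug[r] = [a - factor * b for a, b in zip(aug[r], aug[col])]
    return [row[k:] for row in aug]

def vif(columns):
    """VIF for each predictor: diagonal of the inverse correlation matrix."""
    inv = invert(correlation_matrix(columns))
    return [inv[j][j] for j in range(len(columns))]

# Hypothetical predictors: x2 is roughly 2 * x1, x3 is unrelated to both.
x1 = [1, 2, 3, 4, 5, 6, 7, 8]
x2 = [2.1, 3.9, 6.2, 8.1, 9.8, 12.2, 13.9, 16.1]
x3 = [5, 3, 6, 2, 7, 1, 8, 4]
for name, v in zip(["x1", "x2", "x3"], vif([x1, x2, x3])):
    print(f"{name}: VIF = {v:.1f}")
```

The near-duplicate pair `x1`/`x2` produces VIFs far above 10. `x3` stays close to 1.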

Addressing multicollinearity is essential for building a stable and interpretable model, ensuring that each predictor’s contribution to the outcome is accurately assessed.

Understanding Odds Ratios and Probabilities

In predictive modeling, understanding the relationship between input variables and the likelihood of an outcome is essential. Key metrics like odds ratios and probabilities help quantify the strength of this relationship. These concepts offer valuable insights into how changes in predictors affect the likelihood of an event happening, providing a clearer interpretation of model results.

Odds Ratios Explained

The odds ratio is a fundamental metric used to interpret the impact of predictor variables on the odds of an outcome. It is calculated by exponentiating the coefficients from the model, transforming the log-odds into a more interpretable value. An odds ratio greater than 1 indicates that the predictor increases the odds of the event occurring, while a value less than 1 suggests a decrease in the odds.

Probabilities and Their Interpretation

Probabilities represent the likelihood of a specific outcome occurring and are bounded between 0 and 1. In classification models, the probability of the event is obtained by passing the linear combination of the input variables (the log-odds) through the logistic function. Understanding these probabilities allows analysts to make informed decisions based on the likelihood of an event rather than a simple binary outcome.

| Odds Ratio | Interpretation |
|---|---|
| Equal to 1 | No effect on the odds of the event. |
| Greater than 1 | Increases the odds of the event happening. |
| Less than 1 | Decreases the odds of the event happening. |
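The relationship between probabilities, odds, and log-odds can be made concrete with a few conversions. The sketch below also shows why an odds ratio is easier to state than a change in probability. The baseline probabilities and the odds ratio of 2.0 are illustrative assumptions.

```python
import math

def prob_to_odds(p: float) -> float:
    return p / (1.0 - p)

def odds_to_prob(odds: float) -> float:
    return odds / (1.0 + odds)

def prob_to_log_odds(p: float) -> float:
    return math.log(prob_to_odds(p))

def apply_odds_ratio(p_baseline: float, odds_ratio: float) -> float:
    """Probability after multiplying the baseline odds by an odds ratio."""
    return odds_to_prob(prob_to_odds(p_baseline) * odds_ratio)

# The same odds ratio (2.0, i.e. doubled odds) shifts probabilities by different
# amounts depending on where the baseline probability starts.
for p in (0.05, 0.5, 0.9):
    print(f"baseline p={p:.2f}  odds={prob_to_odds(p):.3f}  "
          f"log-odds={prob_to_log_odds(p):+.3f}  p after OR=2: {apply_odds_ratio(p, 2.0):.3f}")
```

With a baseline of 0.05, doubling the odds roughly doubles the probability. With a baseline of 0.9, the probability rises by less than five percentage points.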

By understanding odds ratios and probabilities, you gain a more intuitive grasp of how model variables influence outcomes, enabling more accurate predictions and better decision-making.

Assessing Model Performance with AUC

Evaluating how well a predictive model performs is essential for understanding its effectiveness and reliability. One commonly used metric to assess the model’s accuracy in distinguishing between different classes is the Area Under the Curve (AUC). AUC provides a single value that summarizes the model’s ability to correctly classify positive and negative cases across various threshold settings.

Understanding AUC

The AUC is derived from the Receiver Operating Characteristic (ROC) curve, which plots the true positive rate against the false positive rate at different threshold levels. The higher the AUC, the better the model is at distinguishing between the two classes. An AUC of 0.5 suggests no discriminatory ability, meaning the model is no better than random guessing. An AUC of 1 indicates perfect classification.
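A minimal, dependency-free sketch of both views of AUC follows. The first builds the ROC points by sweeping the threshold and integrates them with the trapezoidal rule. The second uses the equivalent pairwise interpretation: the probability that a randomly chosen positive case receives a higher score than a randomly chosen negative case, with ties counted as half. The labels and scores are hypothetical. The pairwise version is O(n_pos * n_neg) and suits only small examples.

```python
def roc_points(y_true, scores):
    """(FPR, TPR) pairs from sweeping the threshold down through every distinct score."""
    n_pos = sum(y_true)
    n_neg = len(y_true) - n_pos
    points = [(0.0, 0.0)]  # threshold above every score: nothing predicted positive
    for t in sorted(set(scores), reverse=True):
        tp = sum(1 for y, s in zip(y_true, scores) if y == 1 and s >= t)
        fp = sum(1 for y, s in zip(y_true, scores) if y == 0 and s >= t)
        points.append((fp / n_neg, tp / n_pos))
    return points

def auc_trapezoid(points):
    """Area under the ROC curve using the trapezoidal rule."""
    return sum((x1 - x0) * (y0 + y1) / 2.0
               for (x0, y0), (x1, y1) in zip(points, points[1:]))

def auc_pairwise(y_true, scores):
    """Probability that a random positive outscores a random negative (ties count half)."""
    pos = [s for y, s in zip(y_true, scores) if y == 1]
    neg = [s for y, s in zip(y_true, scores) if y == 0]
    wins = sum(1.0 if p > n else 0.5 if p == n else 0.0 for p in pos for n in neg)
    return wins / (len(pos) * len(neg))

# Hypothetical labels and predicted probabilities.
y = [0, 0, 1, 1, 0, 1, 1, 0]
scores = [0.1, 0.4, 0.35, 0.8, 0.2, 0.7, 0.9, 0.6]
print(auc_trapezoid(roc_points(y, scores)), auc_pairwise(y, scores))
```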

Interpreting AUC Values

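While AUC provides a robust way to evaluate model performance, it is important to understand what different AUC values imply. The bands below are common rules of thumb rather than fixed standards, and what counts as acceptable depends on the application: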

- AUC between 0.7 and 0.8: Fair model performance. The model has a reasonable ability to distinguish between the classes.

- AUC between 0.8 and 0.9: Good model performance. The model does a solid job of differentiating between positive and negative outcomes.

- AUC above 0.9: Excellent model performance. The model ranks positive cases above negative cases very consistently.

In summary, AUC is a valuable tool for assessing how well a model performs across all classification thresholds, providing a more comprehensive evaluation than simple accuracy alone.

Evaluating Model Fit in Logistic Regression

Assessing how well a statistical model fits the data is a crucial step in the model-building process. A well-fitted model ensures that the relationships between the predictors and the outcome are captured accurately, while poor fit can lead to biased predictions and misleading results. In classification tasks, evaluating model fit involves examining several statistical measures that help determine whether the model provides reliable and meaningful predictions.

One common approach to evaluating model fit is to examine the deviance, a goodness-of-fit measure based on the likelihood of the observed data under the model. A lower deviance indicates a better fit, because it means the observed data are more probable under the model. However, other metrics are equally important for understanding model performance.

Key Metrics for Evaluating Fit

Several metrics can be used to assess the fit of a model, each providing different insights into the model’s performance:

- Deviance: This statistic is twice the difference between the log-likelihood of a saturated (perfect-fit) model and the log-likelihood of the fitted model. A lower deviance indicates a better fit.

- Hosmer-Lemeshow Test: This test evaluates the agreement between observed and expected frequencies. A non-significant result suggests that the model fits the data well.

- R-Squared (Pseudo-R-Squared): While traditional R-squared measures how much variance is explained by the model, in classification models, pseudo-R-squared values give an indication of model fit, though they are not as straightforward.
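For 0/1 outcomes, the saturated model's log-likelihood is zero, so the deviance reduces to -2 times the model's log-likelihood. The sketch below computes it, along with McFadden's pseudo-R², which is one of several pseudo-R² variants. The outcomes and predicted probabilities are hypothetical and must lie strictly between 0 and 1. The Hosmer-Lemeshow test is not implemented here.

```python
import math

def log_likelihood(y_true, probs):
    """Bernoulli log-likelihood of 0/1 outcomes under predicted probabilities."""
    return sum(math.log(p) if y == 1 else math.log(1.0 - p) for y, p in zip(y_true, probs))

def deviance(y_true, probs):
    """Residual deviance for 0/1 outcomes: -2 * log-likelihood."""
    return -2.0 * log_likelihood(y_true, probs)

def mcfadden_r2(y_true, probs):
    """McFadden's pseudo-R^2: 1 - LL(model) / LL(intercept-only model)."""
    base_rate = sum(y_true) / len(y_true)
    ll_null = log_likelihood(y_true, [base_rate] * len(y_true))
    return 1.0 - log_likelihood(y_true, probs) / ll_null

# Hypothetical outcomes and predicted probabilities.
y = [1, 0, 1, 1, 0, 0, 1, 0]
probs = [0.8, 0.3, 0.6, 0.9, 0.2, 0.4, 0.7, 0.1]
print(f"deviance = {deviance(y, probs):.3f}")
print(f"McFadden pseudo-R^2 = {mcfadden_r2(y, probs):.3f}")
```

A model that predicts only the base rate scores a pseudo-R² of 0. Better-fitting models move toward 1, though values well below 1 are typical in practice.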

By analyzing these statistics, you can gain a deeper understanding of how well your model fits the data and identify areas for improvement. A thorough evaluation helps ensure that the model is both accurate and reliable in predicting outcomes.

Regularization Techniques for Logistic Regression

In the process of building predictive models, overfitting is a common challenge. It occurs when the model captures noise or random fluctuations in the data, rather than the actual underlying pattern. Regularization techniques help prevent overfitting by adding a penalty to the model’s complexity, thereby improving its generalization ability. These methods are especially useful when dealing with high-dimensional data or when there is concern about the model becoming too sensitive to small changes in the input variables.

Types of Regularization

There are several regularization methods commonly used to improve model performance. These methods adjust the cost function to penalize large coefficients, encouraging simpler models:

- L1 Regularization: Also known as Lasso, this technique adds a penalty proportional to the sum of the absolute values of the coefficients to the cost function. It can drive some coefficients to exactly zero, effectively performing feature selection.

- L2 Regularization: Known as Ridge, this method adds a penalty proportional to the sum of the squared coefficients to the cost function. It shrinks large coefficients toward zero but does not set them exactly to zero, so it does not perform feature selection like L1 regularization.

Choosing the Right Regularization

The choice between L1 and L2 regularization depends on the specific needs of the model. L1 is ideal when you believe many predictors are irrelevant and wish to reduce the feature set. On the other hand, L2 is effective when you want to shrink the coefficients of all predictors without necessarily eliminating them. In some cases, a combination of both methods, known as Elastic Net, can offer the best of both worlds.
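One common way to write these penalized cost functions is sketched below: the negative log-likelihood plus a penalty weighted by a strength `lam`, with the intercept left unpenalized. The parameter names `lam` and `l1_ratio` are assumptions for illustration. Libraries differ in how they scale each term. For example, scikit-learn's `LogisticRegression` uses an inverse strength `C` instead of a direct penalty weight.

```python
import math

def log1p_exp(z: float) -> float:
    """Numerically stable log(1 + e^z)."""
    return max(z, 0.0) + math.log1p(math.exp(-abs(z)))

def neg_log_likelihood(X, y, intercept, coefs):
    """Sum over samples of log(1 + e^z) - y*z, where z is the linear predictor."""
    total = 0.0
    for row, target in zip(X, y):
        z = intercept + sum(b * x for b, x in zip(coefs, row))
        total += log1p_exp(z) - target * z
    return total

def penalized_cost(X, y, intercept, coefs, penalty="l2", lam=1.0, l1_ratio=0.5):
    """Negative log-likelihood plus a coefficient penalty (intercept not penalized)."""
    nll = neg_log_likelihood(X, y, intercept, coefs)
    l1 = sum(abs(b) for b in coefs)
    l2 = sum(b * b for b in coefs)
    if penalty == "none":
        return nll
    if penalty == "l1":  # Lasso
        return nll + lam * l1
    if penalty == "l2":  # Ridge
        return nll + lam * l2
    if penalty == "elasticnet":  # weighted mix of both penalties
        return nll + lam * (l1_ratio * l1 + (1.0 - l1_ratio) * l2)
    raise ValueError(f"unknown penalty: {penalty!r}")

# Tiny hypothetical dataset: one predictor, two observations.
X = [[1.0], [2.0]]
y = [0, 1]
for pen in ("none", "l1", "l2", "elasticnet"):
    print(pen, round(penalized_cost(X, y, 0.0, [0.5], penalty=pen, lam=2.0), 4))
```

Fitting a model means minimizing this cost over the coefficients. The L1 term's kink at zero is what allows Lasso solutions to set coefficients exactly to zero.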

By applying regularization techniques, you can create more robust models that generalize well to new data, ensuring more reliable predictions in real-world scenarios.

Binary vs Multinomial Logistic Regression

When working with classification problems, the choice of modeling technique depends heavily on the number of outcome categories. In some cases, the goal is to predict between two distinct classes, while in others, the objective may be to handle multiple categories. Understanding the differences between models that handle binary and multiple outcomes is essential for selecting the right approach based on the nature of the data.

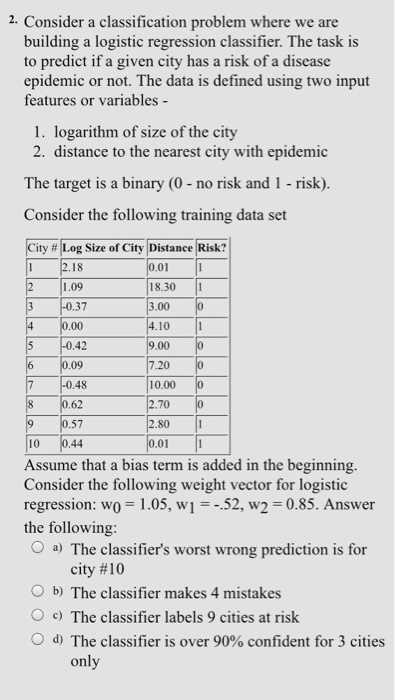

Binary Classification

In binary classification tasks, the model is used to predict one of two possible outcomes. This type of model is particularly useful when the dependent variable consists of two categories, such as “yes/no”, “spam/ham”, or “success/failure”. Binary classification models are typically simpler and faster to implement, as they focus on distinguishing between just two groups.

- Outcome Categories: Two possible outcomes (0 or 1, true or false).

- Example Use Case: Predicting whether a customer will buy a product or not.

- Modeling Approach: Estimates the probability of an event occurring in one of the two categories.

Multinomial Classification

In contrast, multinomial classification models are used when the dependent variable has more than two possible categories. This method extends the binary approach to multiple outcomes, which makes it suitable for problems where more than two options need to be predicted. Multinomial models either estimate all categories jointly, converting one linear score per category into probabilities with the softmax function, or combine a series of binary classifiers, one per category.

- Outcome Categories: More than two categories (e.g., low, medium, high).

- Example Use Case: Classifying different types of customer feedback (positive, neutral, negative).

- Modeling Approach: Estimates probabilities for each category, often using techniques like “one-vs-rest” or “softmax”.
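The softmax approach can be sketched as follows: each category gets its own linear score, and softmax turns the scores into probabilities that sum to 1. The class names, intercepts, and coefficients are hypothetical. In practice one category is often fixed as a reference with coefficients of zero so that the model is identifiable.

```python
import math

def softmax(scores):
    """Convert real-valued scores into probabilities that sum to 1."""
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def multinomial_proba(intercepts, coef_rows, x):
    """One linear score per class, converted to probabilities with softmax."""
    scores = [b0 + sum(b * xi for b, xi in zip(row, x))
              for b0, row in zip(intercepts, coef_rows)]
    return softmax(scores)

# Hypothetical fitted coefficients: one intercept and one coefficient row per class.
classes = ["negative", "neutral", "positive"]
intercepts = [0.2, 0.5, -0.3]
coef_rows = [[-1.0, 0.4], [0.1, 0.0], [1.2, -0.3]]

probs = multinomial_proba(intercepts, coef_rows, [1.5, 2.0])
print(dict(zip(classes, (round(p, 3) for p in probs))))
print("predicted:", classes[probs.index(max(probs))])
```

With only two classes and one score fixed at 0, softmax reduces to the logistic (sigmoid) function used in binary models.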

Both binary and multinomial models are powerful tools for classification tasks. The choice between the two depends on the number of categories in the target variable and the complexity of the task at hand. Understanding these differences helps in selecting the most effective modeling strategy for accurate predictions.

Key Assumptions of Logistic Regression

When building predictive models, it is crucial to ensure that certain conditions are met in order to obtain valid results. These assumptions guide the construction of the model and influence its accuracy and reliability. Understanding the core assumptions behind classification models is essential for interpreting the results correctly and ensuring the validity of the analysis.

Independence of Observations

One key assumption is that the observations in the dataset should be independent of one another. This means that the outcome for one observation should not influence or depend on the outcome for another. Violating this assumption can lead to biased estimates and inaccurate predictions.

- Example: In a survey predicting consumer behavior, each participant’s response should be independent of others.

- Impact of Violation: Intra-group correlations typically cause standard errors to be underestimated, which overstates the significance of predictors.

Linearity of the Logit

Another assumption is that there is a linear relationship between the independent variables and the log-odds of the dependent variable. The probability itself is not linear in the predictors, but the log-odds (the natural log of the odds) should be linearly related to them. This assumption allows the model to fit the data effectively.

- Example: The relationship between age and the probability of purchasing a product may not be linear, but the log-odds should follow a straight line.

- Impact of Violation: If this assumption is violated, the model may fail to capture the correct relationships, leading to poor predictions.
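A quick informal check is to bin a continuous predictor, compute the empirical log-odds of the outcome in each bin, and see whether the values fall roughly on a straight line. The sketch below assumes equal-count bins and a 0.5 continuity correction, which keeps bins with all-0 or all-1 outcomes finite. Both the bin count and the data are illustrative choices. The Box-Tidwell test is a more formal alternative.

```python
import math

def empirical_logits(x, y, n_bins=4):
    """Sort by the predictor, split into roughly equal-sized bins, and return
    (mean x, empirical log-odds) for each bin."""
    pairs = sorted(zip(x, y))
    n = len(pairs)
    result = []
    for i in range(n_bins):
        chunk = pairs[i * n // n_bins:(i + 1) * n // n_bins]
        if not chunk:
            continue
        events = sum(t for _, t in chunk)
        non_events = len(chunk) - events
        mean_x = sum(v for v, _ in chunk) / len(chunk)
        # 0.5 continuity correction avoids log(0) in pure bins
        result.append((mean_x, math.log((events + 0.5) / (non_events + 0.5))))
    return result

# Hypothetical predictor values and binary outcomes.
x = list(range(20))
y = [0, 0, 0, 0, 0, 0, 0, 1, 0, 0, 0, 1, 1, 0, 1, 1, 1, 1, 1, 1]
for mean_x, logit in empirical_logits(x, y):
    print(f"mean x = {mean_x:5.1f}   empirical log-odds = {logit:+.3f}")
```

If the points bend sharply, a transformation of the predictor (for example a log or polynomial term) may be needed.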

Absence of Multicollinearity

Multicollinearity occurs when two or more predictor variables are highly correlated with each other. This can cause instability in the coefficient estimates, making it difficult to assess the individual impact of each predictor. It can also lead to inflated standard errors and unreliable results.

- Example: In a model predicting disease outcomes, age and years of experience in the healthcare field might be highly correlated.

- Impact of Violation: Multicollinearity makes it challenging to determine the independent effect of each predictor.

By ensuring these assumptions are met, you can create more reliable and valid models that offer insightful predictions and analysis. Failure to address these assumptions may compromise the results, leading to flawed interpretations and decisions.

Handling Imbalanced Datasets in Logistic Regression

In many real-world scenarios, the data used for classification is often imbalanced, meaning one class significantly outnumbers the other. This imbalance can lead to biased model predictions, where the model becomes overly inclined to predict the majority class and neglect the minority class. Addressing this issue is crucial to ensure accurate and reliable results, particularly when the cost of misclassifying the minority class is high.

Techniques for Balancing Datasets

There are several methods to handle imbalanced datasets that can improve model performance. These techniques aim to either adjust the dataset or modify the model to reduce bias towards the majority class.

- Resampling Methods: Either oversampling the minority class or undersampling the majority class can help balance the dataset. Techniques such as the Synthetic Minority Over-sampling Technique (SMOTE) are often used to generate synthetic samples for the minority class.

- Cost-sensitive Learning: Adjusting the cost of misclassification for the minority class can help the model place more emphasis on correctly classifying the underrepresented class.

- Ensemble Methods: Using techniques such as bagging or boosting can help improve model accuracy by combining predictions from multiple models, which can help reduce bias towards the majority class.
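Cost-sensitive learning can be as simple as weighting each sample's loss by its class. The sketch below uses the heuristic weight_c = n_samples / (n_classes * count_c), the formula scikit-learn documents for `class_weight="balanced"`. The weighted log-loss normalization and the 9:1 toy labels are illustrative assumptions.

```python
import math
from collections import Counter

def balanced_class_weights(y):
    """weight_c = n_samples / (n_classes * count_c)."""
    counts = Counter(y)
    n, k = len(y), len(counts)
    return {c: n / (k * cnt) for c, cnt in counts.items()}

def weighted_log_loss(y, probs, weights):
    """Class-weighted average log-loss for 0/1 labels and predicted P(y=1)."""
    total = sum(weights[t] * -(math.log(p) if t == 1 else math.log(1.0 - p))
                for t, p in zip(y, probs))
    return total / sum(weights[t] for t in y)

y = [0] * 9 + [1]   # hypothetical 9:1 imbalance
weights = balanced_class_weights(y)
print(weights)

probs = [0.1] * 10  # a model that always predicts a low probability
print(weighted_log_loss(y, probs, {0: 1.0, 1: 1.0}))  # unweighted
print(weighted_log_loss(y, probs, weights))           # minority errors now cost more
```

After weighting, each class contributes the same total weight. This makes the "always predict the majority" strategy much more expensive for the model.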

Impact of Imbalanced Datasets on Model Performance

When the dataset is imbalanced, the model may achieve high overall accuracy by simply predicting the majority class most of the time. However, this can be misleading as it doesn’t reflect the true predictive power of the model, especially for the minority class. Key evaluation metrics such as precision, recall, and F1 score become more relevant in this context, as they provide a better understanding of the model’s performance on the minority class.

- Precision: Measures the proportion of true positives among all predicted positives.

- Recall: Measures the proportion of true positives among all actual positives.

- F1 Score: A balanced measure that combines both precision and recall into a single metric.

By applying the appropriate techniques to handle imbalanced datasets, you can significantly improve the performance and reliability of your predictive models, ensuring they are capable of accurately predicting both majority and minority classes.

How to Perform Cross-Validation

Cross-validation is an essential technique for assessing a model's performance and checking how well it generalizes to unseen data. Instead of relying on a single training and testing split, this approach divides the dataset into multiple subsets and trains and tests the model several times, giving a more reliable estimate of its performance. This process helps detect overfitting by showing whether the model is merely memorizing the training data or can make accurate predictions on new data.

The most common method of cross-validation is k-fold cross-validation, where the data is randomly partitioned into k equal-sized folds. The model is then trained on k-1 folds and tested on the remaining fold. This process is repeated k times, with each fold used as the test set exactly once. The final performance is typically averaged across all iterations to provide a more robust evaluation.

Steps to Perform Cross-Validation

- Step 1: Divide the dataset into k equal-sized subsets (or “folds”). The number of folds is typically chosen to be 5 or 10, but it can vary depending on the dataset size.

- Step 2: For each fold, use the other k-1 folds for training and the current fold as the test set.

- Step 3: Train the model using the training data and evaluate its performance on the test fold.

- Step 4: Repeat the process for each fold, ensuring that every fold is used for testing exactly once.

- Step 5: After completing all iterations, calculate the average of the performance metrics (such as accuracy, precision, recall) to get a final evaluation score.
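The five steps can be sketched without any library. `fit` and `score` are placeholders for whatever model and metric you use. The majority-class baseline and accuracy function below are hypothetical stand-ins that keep the example runnable, not a recommended model.

```python
import random

def k_fold_indices(n, k=5, seed=0):
    """Step 1: shuffle indices 0..n-1 and split them into k folds (sizes differ by at most one)."""
    idx = list(range(n))
    random.Random(seed).shuffle(idx)
    return [idx[i::k] for i in range(k)]

def cross_validate(X, y, fit, score, k=5, seed=0):
    """Steps 2-5: train on k-1 folds, score on the held-out fold, then average."""
    folds = k_fold_indices(len(X), k, seed)
    scores = []
    for i, test_idx in enumerate(folds):
        train_idx = [j for f, fold in enumerate(folds) if f != i for j in fold]
        model = fit([X[j] for j in train_idx], [y[j] for j in train_idx])
        scores.append(score(model, [X[j] for j in test_idx], [y[j] for j in test_idx]))
    return sum(scores) / len(scores), scores

# Hypothetical stand-ins: a majority-class "model" and plain accuracy.
def fit_majority(X_train, y_train):
    return max(set(y_train), key=y_train.count)

def accuracy(model, X_test, y_test):
    return sum(1 for t in y_test if t == model) / len(y_test)

X = [[i] for i in range(20)]
y = [0] * 14 + [1] * 6
mean_acc, per_fold = cross_validate(X, y, fit_majority, accuracy, k=5)
print(round(mean_acc, 3), [round(s, 2) for s in per_fold])
```

Reporting the per-fold scores alongside the mean shows how much performance varies between splits.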

Types of Cross-Validation

While k-fold cross-validation is the most widely used, there are other variations to consider depending on the dataset and problem type.

- Stratified k-fold: In this variation, the data is divided in a way that ensures each fold has a similar distribution of classes, which is particularly useful for imbalanced datasets.

- Leave-One-Out Cross-Validation (LOOCV): This technique involves using each individual data point as a test set while training the model on all other data points. It’s computationally expensive but useful for small datasets.

- Time Series Cross-Validation: For time-dependent data, this method uses past observations for training and future ones for testing to maintain the temporal order of the data.
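Two of these variations are sketched below. Stratified folds are built by shuffling each class separately and dealing its indices across the folds round-robin. Time-series splits use an expanding window, so the test block always comes after the training data. Both are simplified illustrations under these stated choices, and the labels and sizes are hypothetical.

```python
import random
from collections import defaultdict

def stratified_k_fold_indices(y, k=5, seed=0):
    """Shuffle each class separately, then deal its indices round-robin across
    the k folds so every fold keeps roughly the overall class proportions."""
    rng = random.Random(seed)
    by_class = defaultdict(list)
    for i, label in enumerate(y):
        by_class[label].append(i)
    folds = [[] for _ in range(k)]
    offset = 0
    for label in sorted(by_class):
        idx = by_class[label]
        rng.shuffle(idx)
        for pos, i in enumerate(idx):
            folds[(pos + offset) % k].append(i)
        offset += len(idx)  # continue dealing where the previous class stopped
    return folds

def time_series_splits(n, n_splits=3):
    """Expanding window: train on all earlier blocks, test on the next block.
    Any remainder at the end is left unused in this simple version."""
    block = n // (n_splits + 1)
    return [(list(range(0, block * s)), list(range(block * s, block * (s + 1))))
            for s in range(1, n_splits + 1)]

labels = [0] * 16 + [1] * 4  # hypothetical 4:1 imbalance
for fold in stratified_k_fold_indices(labels, k=4):
    print(len(fold), sum(labels[i] for i in fold))  # each fold: 5 samples, 1 positive

for train_idx, test_idx in time_series_splits(12, n_splits=3):
    print("train up to", train_idx[-1], "test", test_idx)
```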

Cross-validation is an invaluable tool in model evaluation, allowing for more accurate insights into a model’s performance, especially when working with limited data. By following the steps and selecting the appropriate method, you can ensure your model is well-tested and ready for deployment.

Advanced Topics in Logistic Regression

As the field of predictive modeling evolves, several advanced techniques have emerged to improve the accuracy, efficiency, and interpretability of models. These methods extend the traditional approach by incorporating more complex data structures, regularization, and alternative model formulations. While the basics of predictive modeling are well-understood, exploring advanced topics can unlock new levels of performance and applicability across different domains.

Some of the key areas of focus in advanced model development include handling non-linear relationships, addressing multicollinearity, using regularization to prevent overfitting, and interpreting the results more effectively. These topics not only enhance model robustness but also ensure that the predictive model can be applied to real-world scenarios where data complexity is often high.

Regularization Techniques

Regularization methods like L1 and L2 penalties are designed to reduce overfitting by penalizing large coefficients in the model. L1 regularization (lasso) encourages sparsity in the model by driving some coefficients to zero, effectively selecting a subset of features. L2 regularization (ridge) helps to shrink the coefficients, preventing them from becoming too large, thus ensuring better generalization on unseen data.

Non-linear Models

While linear relationships are a cornerstone of predictive modeling, real-world data often exhibit non-linear patterns. To address this, techniques like polynomial features, kernel methods, and decision trees can be integrated with traditional models. By introducing non-linear transformations or using algorithms that can capture more complex relationships, these approaches enhance the model’s ability to adapt to diverse data structures.

Multicollinearity Handling

When predictor variables are highly correlated, it can cause issues in model interpretation and stability. This phenomenon, known as multicollinearity, can inflate the variance of coefficient estimates, making them unreliable. Techniques like principal component analysis (PCA) or removing highly correlated features can help mitigate the effects of multicollinearity, leading to more stable and interpretable models.

Interpretation of Complex Models

Understanding the output of advanced models is crucial for making informed decisions. Techniques such as partial dependence plots (PDPs), SHAP values, and feature importance rankings are essential tools for interpreting complex relationships between predictors and outcomes. These methods provide greater transparency, allowing data scientists and stakeholders to better understand how different factors influence predictions.

By diving into these advanced techniques, you can enhance the effectiveness of your model, particularly in complex scenarios where traditional methods may fall short. The ongoing evolution of predictive modeling ensures that these approaches continue to refine and expand the boundaries of what can be achieved with data.

Interpreting Confusion Matrix Results

Evaluating the performance of a classification model requires more than just accuracy. A deeper understanding of how well a model distinguishes between classes can be achieved through a confusion matrix. This matrix provides a detailed breakdown of a model’s predictions, highlighting both correct and incorrect classifications across each class. By analyzing these results, one can assess the strengths and weaknesses of a model in real-world scenarios.

The confusion matrix consists of four key values: true positives (TP), true negatives (TN), false positives (FP), and false negatives (FN). Each of these values plays an important role in evaluating different aspects of model performance. For instance, a true positive represents a correct classification where the model accurately predicted the positive class, while a false positive indicates an incorrect classification where the model falsely predicted the positive class.

Key Metrics Derived from the Matrix

Several important performance metrics can be calculated from the confusion matrix to assess model performance. These metrics give deeper insights into how well the model is distinguishing between the different classes:

- Accuracy: The proportion of total predictions that were correct. Calculated as (TP + TN) / (TP + TN + FP + FN).

- Precision: The proportion of positive predictions that were actually correct. Calculated as TP / (TP + FP).

- Recall (Sensitivity): The proportion of actual positive cases that were correctly identified. Calculated as TP / (TP + FN).

- F1-Score: The harmonic mean of precision and recall, providing a balance between the two metrics. Calculated as 2 * (Precision * Recall) / (Precision + Recall).
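The formulas above translate directly into code. This is a minimal sketch assuming the four counts are already available; `classification_metrics` is a hypothetical helper, and returning 0.0 when a denominator is zero is a convention chosen for this sketch, not a universal rule.

```python
def classification_metrics(tp, tn, fp, fn):
    """Compute accuracy, precision, recall and F1 from confusion-matrix counts."""

    def safe_div(num, den):
        # Convention for this sketch: an undefined ratio is reported as 0.0.
        return num / den if den else 0.0

    accuracy = safe_div(tp + tn, tp + tn + fp + fn)
    precision = safe_div(tp, tp + fp)
    recall = safe_div(tp, tp + fn)
    # Harmonic mean of precision and recall.
    f1 = safe_div(2 * precision * recall, precision + recall)
    return {"accuracy": accuracy, "precision": precision, "recall": recall, "f1": f1}


# Hypothetical counts: 40 TP, 45 TN, 10 FP, 5 FN (100 predictions in total).
metrics = classification_metrics(tp=40, tn=45, fp=10, fn=5)
for name, value in metrics.items():
    print(f"{name}: {value:.3f}")
```

With these made-up counts, accuracy is 0.85, precision 0.80, recall about 0.889 and F1 about 0.842. Libraries such as scikit-learn provide equivalent functions, but writing the formulas out once makes the definitions concrete.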

Understanding the Trade-offs

When interpreting the results from a confusion matrix, it’s important to understand the trade-offs between metrics. For instance, high precision often comes at the cost of lower recall: the model is usually right when it predicts the positive class, but it misses more of the actual positives (more false negatives). Conversely, a model with high recall identifies most positive instances but may produce more false positives, reducing precision. For a model that outputs probabilities, this balance is typically controlled by the decision threshold: raising it usually increases precision and lowers recall. Depending on the application, different metrics may be prioritized; in medical screening, for example, missing a true case is often costlier than a false alarm, so recall tends to be favored.
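Because logistic regression outputs probabilities, the trade-off can be seen by sweeping the decision threshold. The sketch below assumes a small, made-up set of predicted probabilities and true labels; `precision_recall_at` is a hypothetical helper, and a score equal to the threshold is counted as positive.

```python
def precision_recall_at(probabilities, labels, threshold):
    """Precision and recall when every score >= threshold is labelled positive."""
    pairs = list(zip(probabilities, labels))
    tp = sum(1 for p, y in pairs if p >= threshold and y == 1)
    fp = sum(1 for p, y in pairs if p >= threshold and y == 0)
    fn = sum(1 for p, y in pairs if p < threshold and y == 1)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    return precision, recall


# Hypothetical predicted probabilities and true labels (1 = positive).
probs = [0.95, 0.85, 0.75, 0.65, 0.55, 0.45, 0.35, 0.25, 0.15, 0.05]
labels = [1, 1, 0, 1, 1, 0, 1, 0, 0, 0]

for threshold in (0.8, 0.5, 0.3):
    precision, recall = precision_recall_at(probs, labels, threshold)
    print(f"threshold={threshold}: precision={precision:.2f}, recall={recall:.2f}")
```

On this toy data, a threshold of 0.8 gives precision 1.00 and recall 0.40, a threshold of 0.5 gives 0.80 and 0.80, and a threshold of 0.3 gives about 0.71 and 1.00.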

By carefully examining the confusion matrix and these metrics, one can gain a more comprehensive view of the model’s capabilities and limitations and judge whether it meets the specific needs of the task at hand.

Practical Applications of Logistic Regression

Classification models are widely used to make predictions from input data, particularly when outcomes are binary or categorical. Logistic regression is one such technique, commonly applied across industries to real-world problems ranging from predicting customer behavior to medical diagnosis. It estimates the probability of each outcome, which supports clear decision-making and risk assessment in many sectors.

In practical scenarios, this method identifies patterns in historical data, enabling decision-makers to forecast future events or behaviors. Because it outputs a probability for each case, which a chosen threshold then turns into a class label, it is well suited to tasks that require clear and interpretable predictions.
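Under the hood, logistic regression turns a weighted sum of the inputs (the linear predictor) into a probability with the logistic, or sigmoid, function, and a threshold then converts that probability into a class. A minimal sketch, assuming the coefficients have already been estimated; the coefficient values and the function names `predict_probability` and `classify` are hypothetical.

```python
import math


def sigmoid(z):
    # Numerically stable logistic function: avoids overflow in math.exp for large |z|.
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def predict_probability(features, coefficients, intercept):
    """P(y = 1 | x) = sigmoid(b0 + b1*x1 + ... + bk*xk)."""
    z = intercept + sum(b * x for b, x in zip(coefficients, features))
    return sigmoid(z)


def classify(features, coefficients, intercept, threshold=0.5):
    """Assign class 1 when the predicted probability reaches the threshold."""
    return int(predict_probability(features, coefficients, intercept) >= threshold)


# Hypothetical coefficients for two features, for illustration only.
coefs, b0 = [0.8, -1.2], -0.5
x = [2.0, 0.5]
print(round(predict_probability(x, coefs, b0), 3), classify(x, coefs, b0))
```

Here the linear predictor is -0.5 + 0.8 * 2.0 - 1.2 * 0.5 = 0.5, so the probability is about 0.62 and, at the default 0.5 threshold, the observation is assigned to class 1.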

Applications in Various Sectors

Here are some common fields where this method is frequently applied:

- Healthcare: It is used to predict patient outcomes, such as the likelihood of developing a certain disease based on various risk factors, or the success rate of a particular treatment.

- Finance: In credit scoring and risk assessment, it helps predict whether a customer is likely to default on a loan, aiding financial institutions in decision-making processes.

- Marketing: Businesses use it to estimate the probability of customer conversion, predicting whether a user will purchase a product or service based on their interactions with marketing materials.

- Fraud Detection: It is used to flag potentially fraudulent transactions by learning patterns from historical data, helping financial institutions and e-commerce platforms detect anomalies and reduce risk.

- Social Sciences: Researchers use it to study societal trends, such as predicting voting behavior or understanding factors contributing to social phenomena.

Benefits in Real-World Decision Making

One of the main advantages of this method in real-world applications is its ability to generate interpretable results. The output provides clear probabilities, allowing organizations to set thresholds that align with business goals or risk tolerance. For example, a marketing department may decide that a lead with a 60% or higher chance of conversion is worth pursuing, while leads below that threshold suggest resources are better spent elsewhere.
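The threshold rule in the marketing example is simple to express in code. This sketch assumes the conversion probabilities have already been predicted; the lead IDs, scores and the `leads_to_pursue` helper are hypothetical, and 0.60 is the illustrative cut-off from the paragraph above.

```python
# Hypothetical lead IDs mapped to predicted conversion probabilities.
lead_scores = {"lead_a": 0.82, "lead_b": 0.41, "lead_c": 0.60, "lead_d": 0.57}

CONVERSION_THRESHOLD = 0.60  # business-chosen cut-off, for illustration only


def leads_to_pursue(scores, threshold=CONVERSION_THRESHOLD):
    """Return lead IDs at or above the threshold, highest probability first."""
    selected = [lead for lead, p in scores.items() if p >= threshold]
    return sorted(selected, key=lambda lead: scores[lead], reverse=True)


print(leads_to_pursue(lead_scores))
```

With these scores, `lead_a` and `lead_c` qualify; reflecting a different risk tolerance only requires changing the threshold, not refitting the model.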

Additionally, it is relatively easy to implement and computationally efficient, making it an attractive choice for many practical applications. This simplicity, combined with its predictive power, explains why it remains one of the most popular techniques in data science and analytics.
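To illustrate how little is needed, the sketch below fits a one-feature model by batch gradient descent on the mean log loss, using only the Python standard library. The learning rate, number of epochs, toy data set and the `fit_logistic` name are arbitrary assumptions for illustration; in practice a library implementation such as scikit-learn's `LogisticRegression`, which uses more robust solvers, would normally be preferred.

```python
import math


def sigmoid(z):
    # Numerically stable logistic function.
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def fit_logistic(X, y, learning_rate=0.1, epochs=2000):
    """Fit weights and intercept by batch gradient descent on the mean log loss."""
    n, n_features = len(X), len(X[0])
    weights = [0.0] * n_features
    intercept = 0.0
    for _ in range(epochs):
        grad_w = [0.0] * n_features
        grad_b = 0.0
        for row, target in zip(X, y):
            # The gradient of the log loss w.r.t. the linear predictor is (p - y).
            p = sigmoid(intercept + sum(w * x for w, x in zip(weights, row)))
            error = p - target
            for j, x in enumerate(row):
                grad_w[j] += error * x
            grad_b += error
        # Step against the averaged gradient.
        weights = [w - learning_rate * g / n for w, g in zip(weights, grad_w)]
        intercept -= learning_rate * grad_b / n
    return weights, intercept


# Hypothetical one-feature data set: larger x tends to mean class 1.
X = [[0.5], [1.0], [1.5], [2.0], [3.0], [3.5], [4.0], [4.5]]
y = [0, 0, 0, 1, 0, 1, 1, 1]
weights, intercept = fit_logistic(X, y)
print(weights, intercept)
```

Because the gradient of the log loss with respect to the linear predictor is simply p - y, each update is a few lines of arithmetic, which is part of why the method is cheap to train.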

Preparation Tips for Logistic Regression Exams

Successfully tackling assessments in this field requires both theoretical understanding and practical application. Being able to grasp core concepts and apply them to real-life situations is essential. Focusing on the key principles, developing problem-solving skills, and practicing with various data sets can greatly improve your performance. This section will guide you through essential strategies to help you prepare effectively and confidently.

Understanding Core Concepts

Before diving into solving problems, ensure you have a strong grasp of the fundamental principles behind the technique. Understand the underlying mathematics, such as how coefficients act on the log-odds of the outcome and, through the sigmoid function, on predicted probabilities, and familiarize yourself with the terminology used in classification tasks. Key areas to focus on include:

- Understanding how models classify data based on input features

- The role of the cost function (log loss, also called cross-entropy) and how it is minimized

- The interpretation of coefficients and their influence on prediction outcomes

- Methods used to assess model performance, such as confusion matrices and AUC
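Two of the items above, the cost function and coefficient interpretation, can be made concrete in a few lines. The sketch below computes the mean log loss that fitting minimizes and converts a coefficient into an odds ratio via exp(coefficient); the function names and the example coefficient of 0.7 are hypothetical.

```python
import math


def log_loss(y_true, probabilities, eps=1e-15):
    """Mean binary cross-entropy; probabilities are clipped to avoid log(0)."""
    total = 0.0
    for y, p in zip(y_true, probabilities):
        p = min(max(p, eps), 1 - eps)
        total += -(y * math.log(p) + (1 - y) * math.log(1 - p))
    return total / len(y_true)


def odds_ratio(coefficient):
    """Multiplicative change in the odds of class 1 per one-unit increase in a feature."""
    return math.exp(coefficient)


# Confident, correct predictions give a small loss; confident wrong ones are penalized heavily.
print(log_loss([1, 0], [0.9, 0.1]))  # about 0.105
print(log_loss([1, 0], [0.1, 0.9]))  # about 2.303
# A hypothetical coefficient of 0.7 multiplies the odds by roughly 2.01.
print(odds_ratio(0.7))
```

A coefficient of 0 gives an odds ratio of 1 (no effect), positive coefficients raise the odds and negative ones lower them.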

Practice with Real Data

Hands-on experience is crucial. Apply the concepts to real data sets and practice solving classification problems until you can use the technique to make accurate predictions. Key activities include:

- Identifying the right data transformations and feature selection

- Evaluating model fit through various performance metrics

- Working on model optimization techniques such as cross-validation and regularization
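Cross-validation repeatedly splits the data into training and test folds so that every row is used for evaluation exactly once. A minimal sketch of k-fold index generation, assuming the rows are already in random order; `k_fold_indices` is a hypothetical helper, and libraries such as scikit-learn offer the same idea as `KFold`.

```python
def k_fold_indices(n_samples, k):
    """Yield (train_indices, test_indices) for k contiguous, non-overlapping folds.

    No shuffling is done here; shuffle the data first if its order is meaningful.
    """
    if not 2 <= k <= n_samples:
        raise ValueError("k must be between 2 and n_samples")
    # Spread any remainder over the first folds so sizes differ by at most one.
    fold_sizes = [n_samples // k + (1 if i < n_samples % k else 0) for i in range(k)]
    start = 0
    for size in fold_sizes:
        test = list(range(start, start + size))
        train = [i for i in range(n_samples) if i < start or i >= start + size]
        yield train, test
        start += size


for train, test in k_fold_indices(10, 3):
    print("test fold:", test)
```

A model would be fitted on each `train` set and scored on the matching `test` set, and the k scores averaged. Regularization strength can be tuned the same way: repeat the loop for each candidate penalty and keep the one with the best average score.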

Additionally, reviewing example problems from textbooks or past coursework can help you gain confidence in identifying patterns and applying the technique effectively. Remember, the more problems you solve, the more proficient you will become.