To succeed in scripting certifications, it’s essential to familiarize yourself with a range of topics that will be tested. Mastery of various commands, scripting practices, and troubleshooting techniques will help build confidence and increase your chances of passing. A well-rounded understanding of key concepts is crucial for performing well in any certification challenge.

While preparing, focus on both theoretical knowledge and practical skills. You’ll need to be proficient in automating tasks, managing system resources, and handling different types of errors efficiently. Developing a strong foundation will make it easier to tackle more complex scenarios, whether you’re working with local scripts or managing remote systems.

Study diligently by reviewing common practices, typical pitfalls, and effective problem-solving methods. With these essentials in hand, you will be well prepared for the challenges the certification process presents. Whether you’re a beginner or an experienced user, staying focused on the exam objectives is the surest path to success.

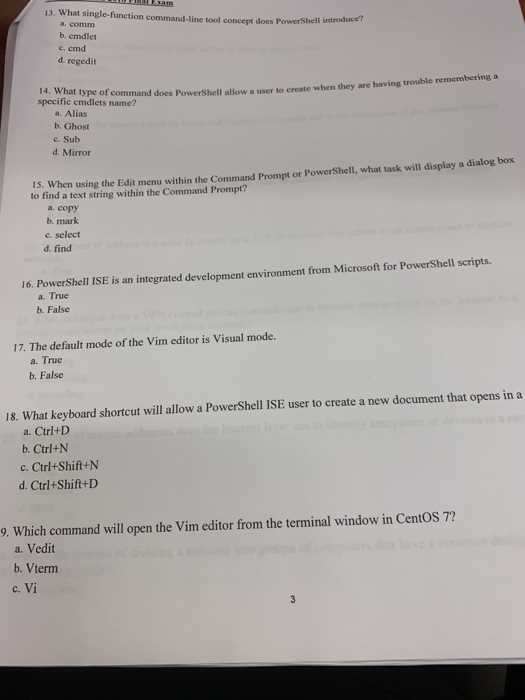

PowerShell Exam Questions and Answers

In order to successfully pass any scripting certification, it is crucial to understand the key concepts and tasks that may be covered. Being familiar with the most commonly asked tasks and challenges will help you approach your assessment with confidence. Below are essential topics and scenarios that often appear during the certification process.

- Automating tasks using simple commands

- Managing files and directories effectively

- Handling errors and exceptions in scripts

- Creating reusable functions and cmdlets

- Manipulating system services and processes

- Working with remote systems and automating actions

- Understanding data types, variables, and objects

- Using loops, conditional statements, and switches

By focusing on these areas, you can better prepare yourself to address the practical challenges presented in any assessment. It’s important to be comfortable with not only theoretical knowledge but also with applying these skills to real-world tasks. Test scenarios typically revolve around automating complex workflows, resolving issues with scripts, and working with data from multiple sources.

- Creating scheduled tasks for regular automation

- Using pipeline operators to streamline operations

- Validating input and handling user errors

- Interacting with APIs and web services for automation

- Optimizing scripts for better performance and scalability

Focusing on these topics will give you the practical knowledge that certification assessments typically expect. By learning how to tackle these tasks effectively, you can approach the challenges ahead with confidence and build real proficiency in scripting.

Overview of PowerShell Exam Topics

Understanding the core concepts is essential for success in any scripting certification. The main areas typically covered include automation techniques, file and system management, as well as troubleshooting scripts. Mastery of these topics will allow you to address various challenges with ease and efficiency, preparing you for real-world scenarios. A strong foundation in these subjects is necessary to excel in assessments and to use scripting tools effectively in professional environments.

The primary focus is often on creating, modifying, and executing scripts to automate routine tasks. Topics such as working with variables, loops, and conditionals form the building blocks of effective automation. Additionally, knowledge of how to interact with the operating system, manage files, and handle errors is critical for successful task automation and problem-solving.

Another key area involves remote system management. This includes executing tasks across multiple machines, interacting with networked systems, and automating administrative tasks remotely. Security-related topics, such as managing permissions and ensuring safe script execution, are also frequently covered in such assessments.

In addition to these, you’ll encounter topics like debugging, performance optimization, and best practices for maintaining efficient scripts. A comprehensive understanding of these areas ensures that you can both write functional scripts and optimize them for performance in varied environments.

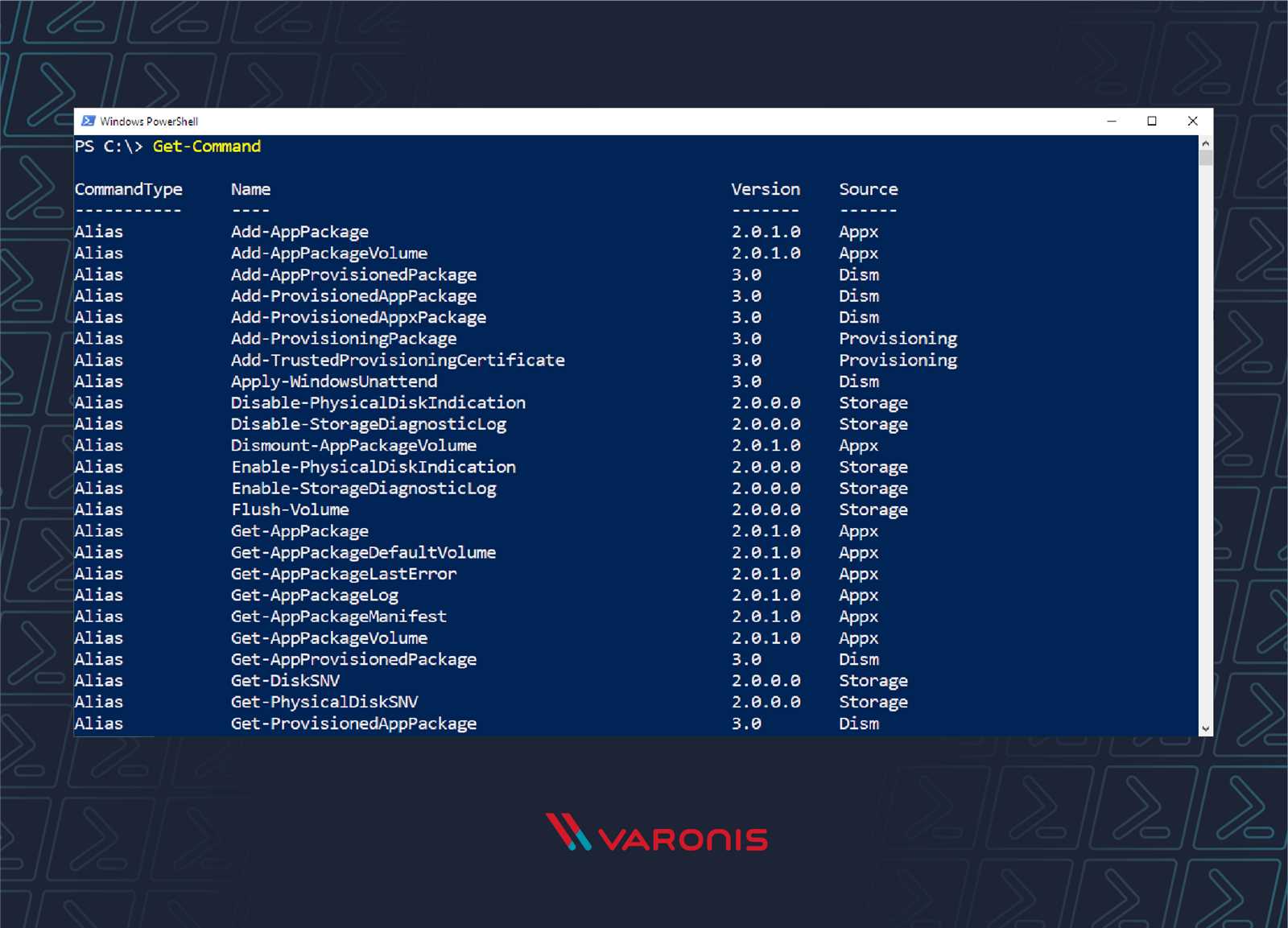

Common PowerShell Commands You Must Know

To effectively automate tasks and manage systems, familiarity with essential commands is crucial. These commands form the backbone of automation and script development. Knowing how to utilize the right tools will significantly streamline your workflows, making common administrative tasks easier and faster to execute. Below are some of the most important commands that every scripter should master for efficient task automation and system management.

- Get-Help – Displays information about cmdlets, functions, and scripts.

- Set-ExecutionPolicy – Sets the execution policy, which determines whether scripts are allowed to run and whether they must be signed.

- Get-Command – Retrieves a list of all cmdlets, functions, and aliases available.

- Get-Process – Provides a list of all running processes on the system.

- Stop-Process – Terminates a running process by its name or ID.

- Get-Service – Displays the status of all services on a machine.

- Start-Service – Starts a service that is currently stopped.

- Set-Service – Modifies the properties of a service.

- Get-Content – Reads the contents of a file.

- Set-Content – Writes data to a file.

- New-Item – Creates new items such as files or directories.

- Remove-Item – Deletes items like files, folders, or registry keys.

- Get-EventLog – Retrieves event log data from Windows logs (Windows PowerShell 5.1 only; in PowerShell 7, use Get-WinEvent instead).

- Get-Variable – Retrieves the values of variables in the current session.

- Export-Csv – Exports data to a CSV file for further processing.
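As a quick way to practice several of these commands together, the sketch below uses Get-Command to discover cmdlets whose names start with "Get-", keeps two properties with Select-Object, and saves the result with Export-Csv. The output file name in the system temp directory is an assumption for illustration; Import-Csv reads the file back to confirm it was written.

```powershell
# Find cmdlets whose names start with "Get-" and keep only their names and source modules.
$getCmdlets = Get-Command -Name 'Get-*' -CommandType Cmdlet |
    Select-Object -First 10 -Property Name, Source

# Illustrative output path in the temp directory.
$csvPath = Join-Path ([System.IO.Path]::GetTempPath()) 'get-cmdlets.csv'

# -NoTypeInformation omits the "#TYPE" header line that Windows PowerShell 5.1 writes by default.
$getCmdlets | Export-Csv -Path $csvPath -NoTypeInformation

# Read the file back; each CSV row becomes an object with Name and Source properties.
$imported = Import-Csv -Path $csvPath
$imported | Format-Table -AutoSize
```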

These commands are just the beginning, but mastering them will provide a solid foundation for more complex tasks. They are fundamental to creating efficient, functional scripts that can be used for system administration, automation, and troubleshooting across various environments.

Essential Scripting Concepts for Exams

To succeed in any scripting certification, it’s important to understand the key concepts that form the foundation of effective automation. These concepts not only help in writing clean and efficient scripts but also enable troubleshooting and optimizing code. By mastering these basic elements, you will be able to tackle a wide range of challenges and scenarios presented in any assessment.

Variables and Data Types

Understanding how to use variables and manage different data types is fundamental to scripting. Variables are used to store data that can be manipulated throughout a script, while data types define what kind of data can be stored in those variables. Knowing when to use integers, strings, arrays, and hash tables is essential for creating flexible and efficient scripts.

Control Flow and Loops

Control structures such as conditionals (if/else) and loops (for, while) are key to making scripts dynamic. These tools allow scripts to perform different actions based on input or the environment, enabling automation of repetitive tasks. Mastering how to implement and combine these structures will help you create more powerful and adaptable scripts.

With a strong understanding of variables, data types, and control flow, you’ll be well-equipped to tackle real-world automation problems and demonstrate proficiency in scripting tasks during assessments. These concepts are not just theoretical; they are practical tools used to solve everyday system administration and automation challenges.

Understanding Variables and Data Types

Mastering variables and data types is a crucial step in becoming proficient with any scripting language. These elements allow you to store, manipulate, and process data efficiently. Understanding how to declare variables, assign values, and choose the appropriate data type for each task ensures that your scripts are both functional and optimized for performance.

Variables are containers that hold information you can reference or change during script execution. The value stored in a variable can be anything from a simple number to a complex data structure. Every value has a data type that defines what kind of data it is, whether a string, a number, or a collection of items such as an array or hash table. PowerShell infers the type from the value you assign, and you can optionally constrain a variable to a type (for example, [int]$count) so that values assigned to it are converted to that type. Choosing the right data type helps your scripts run smoothly and avoid errors during execution.

Common data types include:

- String – Represents text data, such as words or sentences.

- Integer – Stores whole numbers, positive or negative.

- Array – Holds a collection of items, allowing you to manage lists of data.

- Boolean – Represents true or false values.

- Hashtable – A collection of key-value pairs for storing related data in an organized way.
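The sketch below creates one value of each type listed above and prints the .NET type name PowerShell inferred for it. It also shows a type-constrained variable; the variable names and values are made up for illustration.

```powershell
$name    = 'Server01'                      # String
$count   = 42                              # Int32 (integer)
$ports   = @(80, 443, 8080)                # Array (Object[])
$enabled = $true                           # Boolean
$config  = @{ Name = 'web'; Port = 443 }   # Hashtable (key-value pairs)

# Print the .NET type name of each value; $ports stays a single nested element here.
foreach ($value in @($name, $count, $ports, $enabled, $config)) {
    Write-Host $value.GetType().Name
}

# A type constraint converts assigned values: the string '5' becomes the integer 5.
[int]$retries = '5'
$retries += 1    # integer arithmetic, not string concatenation

# Reading values: arrays by index, hashtables by key or property syntax.
Write-Host "Second port: $($ports[1]); config port: $($config['Port']); name: $($config.Name)"
```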

Each data type has its specific uses and advantages, depending on the task at hand. By learning how to properly assign and manipulate these data types, you will be able to create more efficient and effective scripts that meet your automation needs. Whether you’re working with simple values or more complex data structures, a solid understanding of variables and data types is essential to writing functional code.

Working with Loops and Conditional Statements

Loops and conditional statements are fundamental to automating tasks and making scripts more flexible. They allow scripts to make decisions based on certain conditions and repeat actions multiple times without needing to write redundant code. Mastering these tools is essential for controlling the flow of execution and optimizing scripts for efficiency.

Conditional Statements

Conditional statements allow scripts to evaluate conditions and perform different actions based on the outcome. The most common forms are if, else, and elseif. These statements enable a script to make decisions by checking if a condition is true or false, executing the appropriate block of code based on the result. For example, you can use an if statement to check if a variable has a certain value before proceeding with a specific task.

Example of a simple conditional:

```powershell
$x = 10   # sample value to test

if ($x -eq 10) {
    Write-Host "The value is 10"
} else {
    Write-Host "The value is not 10"
}
```
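When a value must be checked against more than two outcomes, you can add elseif branches, or use a switch statement to compare one value against several cases. In this sketch, $statusCode is a hypothetical input chosen for illustration.

```powershell
$statusCode = 404   # hypothetical input value

# if / elseif / else: conditions are checked top to bottom; the first true branch runs.
if ($statusCode -lt 300) {
    $message = 'Success'
} elseif ($statusCode -lt 500) {
    $message = 'Client error'
} else {
    $message = 'Server error'
}
Write-Host "if/elseif result: $message"

# switch: compares one value against several cases.
# 'break' stops evaluation after the first matching case.
$label = switch ($statusCode) {
    200     { 'OK'; break }
    404     { 'Not Found'; break }
    500     { 'Internal Server Error'; break }
    default { 'Other' }
}
Write-Host "switch result: $label"
```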

Loops for Repetition

Loops allow repetitive execution of a block of code. There are several types, with for, foreach, and while being the most commonly used. These structures are particularly useful when you need to perform the same operation on multiple items, such as processing a list of files or handling a set of data. A for loop is useful when you know in advance how many times to iterate, while foreach is ideal for iterating through a collection of objects.

Example of a foreach loop:

```powershell
$numbers = 1..5

foreach ($number in $numbers) {
    Write-Host $number
}
```
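For comparison, here is a minimal sketch of the other loop forms mentioned above: a for loop driven by a counter, a while loop that checks its condition before each pass, and ForEach-Object, the pipeline counterpart of foreach. The values are arbitrary examples.

```powershell
# for: a counter controls how many times the body runs (here 3 times).
for ($i = 1; $i -le 3; $i++) {
    Write-Host "Attempt $i"
}

# while: the condition is checked before each pass, so the body may run zero times.
$remaining = 3
while ($remaining -gt 0) {
    Write-Host "$remaining items left"
    $remaining--
}

# ForEach-Object: processes pipeline input one item at a time; $_ is the current item.
$squares = 1..5 | ForEach-Object { $_ * $_ }
Write-Host ($squares -join ', ')
```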

By mastering loops and conditional statements, you can significantly enhance your ability to automate complex tasks and control the flow of your scripts. These building blocks are vital for creating flexible, efficient, and error-free code.

File Management and Automation in PowerShell

Managing files and automating repetitive tasks is at the heart of many system administration scripts. By using the right tools, you can create efficient workflows that handle file creation, modification, movement, and deletion with minimal effort. These automation techniques can save time, reduce human error, and streamline processes that would otherwise take significant manual effort.

Managing Files with Cmdlets

One of the first steps in automation is understanding how to manipulate files and directories. Built-in cmdlets offer a wide range of operations for file management, from creating new files to reading their contents and removing them when they are no longer needed. Commands like New-Item, Get-Content, Set-Content, and Remove-Item are used to handle various file operations.

For example, you can create a new file with the following command:

```powershell
New-Item -Path "C:\Example\NewFile.txt" -ItemType File
```

Reading the contents of the file is just as simple:

```powershell
Get-Content -Path "C:\Example\NewFile.txt"
```
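Set-Content and Remove-Item complete the cycle of creating, writing, reading, and deleting a file. The sketch below works in a throwaway folder under the system temp directory (an assumption, so it does not touch C:\Example) and also uses Add-Content, which appends instead of overwriting.

```powershell
# Work in a throwaway folder under the system temp directory.
$folder = Join-Path ([System.IO.Path]::GetTempPath()) 'ps-file-demo'
New-Item -Path $folder -ItemType Directory -Force | Out-Null

$file = Join-Path $folder 'NewFile.txt'

# Set-Content replaces the file's contents, creating the file if needed.
Set-Content -Path $file -Value 'first line'

# Add-Content appends to the end of the file.
Add-Content -Path $file -Value 'second line'

# Get-Content returns one string per line.
$lines = Get-Content -Path $file
Write-Host "Line count: $($lines.Count)"

# Remove the folder and everything in it.
Remove-Item -Path $folder -Recurse -Force
```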

Automating File Operations

Automation becomes even more powerful when you can process multiple files at once. Using loops, conditional logic, and file-related cmdlets, you can automate tasks such as moving, renaming, or modifying files based on specific criteria. For instance, you might want to back up files older than a certain date or move files to a different directory based on their file type.

Here’s an example of automating file backup:

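```powershell
# -File skips directories so that only files are compared and copied
$files = Get-ChildItem -Path "C:\Documents" -Recurse -File

# Make sure the backup folder exists; otherwise Copy-Item would create a file named "Backup"
New-Item -Path "C:\Backup" -ItemType Directory -Force | Out-Null

foreach ($file in $files) {
    # Copy files last modified more than 30 days ago
    if ($file.LastWriteTime -lt (Get-Date).AddDays(-30)) {
        Copy-Item -Path $file.FullName -Destination "C:\Backup"
    }
}
```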

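This script checks all files in a specified directory tree and copies those that have not been modified in the last 30 days to a backup location. All files land in a single folder, so files with the same name in different subfolders overwrite one another. Run on a schedule, such automation ensures that routine tasks are completed consistently.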
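Moving files into folders by type follows the same pattern. In this sketch, the source folder and sample file names are hypothetical and are created in the temp directory so the example can run anywhere. Group-Object groups the files by their Extension property, and each group is moved into a subfolder named after the extension.

```powershell
# Hypothetical source folder with a few sample files.
$source = Join-Path ([System.IO.Path]::GetTempPath()) 'ps-sort-demo'
New-Item -Path $source -ItemType Directory -Force | Out-Null
'report.txt', 'notes.txt', 'data.csv' | ForEach-Object {
    New-Item -Path (Join-Path $source $_) -ItemType File -Force | Out-Null
}

# -File skips subdirectories; group the remaining files by extension.
Get-ChildItem -Path $source -File | Group-Object -Property Extension | ForEach-Object {
    # ".txt" becomes a folder named "txt"; files without an extension go to "no-extension".
    $folderName = if ($_.Name) { $_.Name.TrimStart('.') } else { 'no-extension' }
    $target = Join-Path $source $folderName
    New-Item -Path $target -ItemType Directory -Force | Out-Null
    $_.Group | Move-Item -Destination $target
}

Get-ChildItem -Path $source -Recurse -File | Select-Object -ExpandProperty FullName
```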

Mastering file management and automation allows you to manage system resources more effectively and free up time for other tasks. By using these techniques, you can optimize your workflow and ensure that your environment stays organized and efficient.

How to Handle Errors in Scripts

Error handling is an essential aspect of writing resilient and reliable scripts. Proper error management ensures that your automation tasks continue to run smoothly even when unexpected issues arise. By anticipating potential failures and managing them effectively, you can improve the user experience, minimize disruptions, and make debugging easier.

There are various techniques to catch, handle, and respond to errors in scripts. From basic checks to advanced constructs like try-catch-finally blocks, these methods help you ensure that scripts do not fail silently, allowing for more graceful recovery and troubleshooting.

Using Try, Catch, Finally

The try-catch-finally construct is one of the most effective ways to manage errors. It allows you to try a block of code, catch any terminating errors that occur, and then perform clean-up tasks whether or not an error occurred. This structure makes it easier to isolate problems and apply solutions as needed.

Example:

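```powershell
try {
    # Attempt to read a file that may not exist.
    # -ErrorAction Stop makes the error terminating so that catch can handle it.
    $content = Get-Content -Path "C:\InvalidFile.txt" -ErrorAction Stop
} catch {
    # Handle the error if the file cannot be read
    Write-Host "Error: Unable to read file"
} finally {
    # Runs whether or not an error occurred
    Write-Host "Process complete"
}
```

In this example, if the file does not exist, Get-Content raises an error. Because of -ErrorAction Stop, that error is terminating, so control jumps to the catch block, which reports it to the user. Without -ErrorAction Stop, Get-Content would report a non-terminating error and the catch block would never run. The finally block runs in either case.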
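Inside a catch block, the automatic variable $_ holds the error record for the error that was caught, which lets you report what actually went wrong. The sketch below raises a terminating error with throw from Test-Input, a hypothetical helper function (not a built-in command).

```powershell
# Hypothetical helper that raises a terminating error for invalid input.
function Test-Input {
    param([int]$Value)
    if ($Value -lt 0) {
        throw "Value must be non-negative, got $Value"
    }
    $Value
}

try {
    Test-Input -Value -5
} catch {
    # $_ is the ErrorRecord; $_.Exception.Message is the text passed to throw.
    $errorMessage = $_.Exception.Message
    Write-Host "Caught: $errorMessage"
}
```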

Using Error Variables

PowerShell also provides automatic variables that track the success or failure of operations. The $? variable reports the status of the last command, while the $Error variable stores a collection of errors that occurred during the session.

- $? returns a Boolean value indicating whether the last operation was successful ($true) or failed ($false).

- $Error is a collection of error records, with the most recent error at $Error[0]. You can review it to diagnose problems more deeply.

Example:

```powershell
Get-Content "C:\NonExistentFile.txt"

if ($?) {
    Write-Host "File read successfully"
} else {
    Write-Host "Failed to read file"
}
```

In this case, the script checks the success of the previous command using the $? variable and provides appropriate feedback based on whether it succeeded or failed.
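The $Error collection can be inspected after a failure for more detail than $? provides. In this sketch the missing file name in the temp directory is hypothetical; -ErrorAction SilentlyContinue hides the error message, but the error is still recorded in $Error.

```powershell
$Error.Clear()   # start with an empty error collection for this demo

# Hypothetical path that is expected not to exist.
$missing = Join-Path ([System.IO.Path]::GetTempPath()) 'NonExistentFile-demo.txt'
Get-Content -Path $missing -ErrorAction SilentlyContinue

# The most recent error is always at index 0.
$lastError = $Error[0]
Write-Host "Errors recorded: $($Error.Count)"
Write-Host "Category: $($lastError.CategoryInfo.Category)"
Write-Host "Message:  $($lastError.Exception.Message)"
```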

Implementing robust error handling not only helps in troubleshooting but also ensures your scripts are more reliable and stable, even when unexpected situations arise.

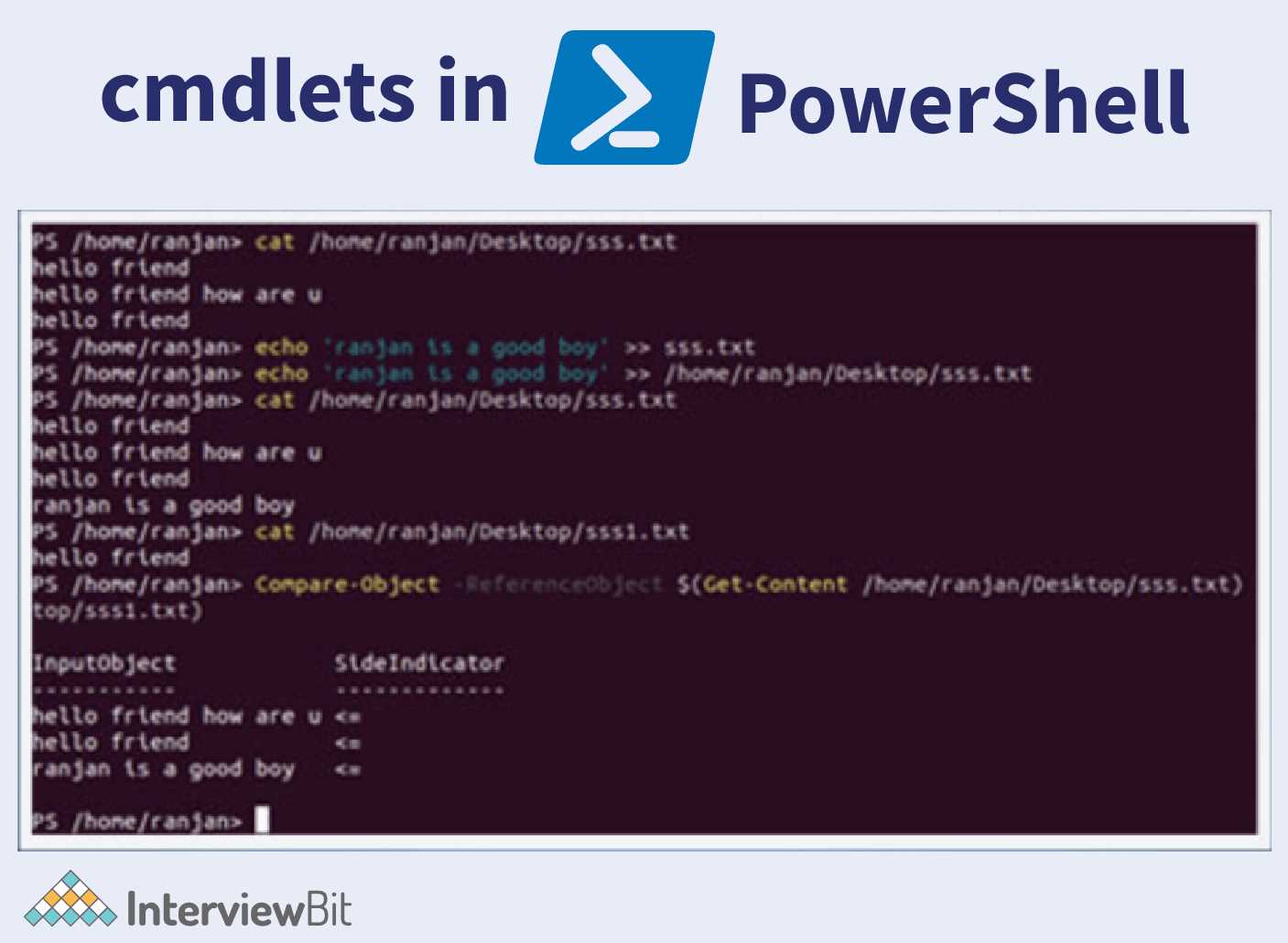

Understanding Cmdlets and Functions

In scripting environments, commands are essential building blocks that perform specific tasks. Understanding how to use these commands effectively can greatly enhance the automation process. These commands can range from simple tasks, like fetching system information, to complex operations, such as managing processes or interacting with external data sources. Two primary types of commands are cmdlets and functions, both of which are integral to creating powerful scripts.

Cmdlets are compiled commands that ship with PowerShell or its modules, while functions are written in the PowerShell language itself, often by the user. Knowing how to differentiate between the two and when to use each is crucial for developing efficient and maintainable code.

What Are Cmdlets?

Cmdlets are predefined commands, compiled from .NET code, that ship with PowerShell or with the modules you install. Each is designed to perform a single task and follows a standard naming convention, which makes cmdlets easy to remember and use. They can be easily combined with other commands to build more complex solutions.

- Cmdlets are typically written in a verb-noun format, such as Get-Process or Set-Item.

- They are available without being defined first; PowerShell automatically imports the module that contains a cmdlet the first time you use it.

- Cmdlets return objects, making them highly versatile in pipeline processing.

Example:

```powershell
Get-Process
```

This cmdlet retrieves a list of currently running processes on the system. It is quick, efficient, and easy to use, without the need for custom scripting.
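Because cmdlets return .NET objects rather than plain text, you can read their properties directly or pass them to other cmdlets. This sketch looks up the current PowerShell process through the automatic variable $PID and then sorts all processes by memory use.

```powershell
# $PID holds the process ID of the current PowerShell session.
$self = Get-Process -Id $PID

# The result is a System.Diagnostics.Process object with typed properties.
Write-Host $self.GetType().FullName
Write-Host "Name: $($self.ProcessName), Id: $($self.Id)"

# Properties can drive further pipeline stages: top 3 processes by working set (bytes).
Get-Process |
    Sort-Object -Property WorkingSet64 -Descending |
    Select-Object -First 3 -Property ProcessName, Id, WorkingSet64
```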

What Are Functions?

Functions are user-defined blocks of code that can be reused throughout scripts. Unlike cmdlets, which are built-in, functions are customized for specific tasks and can be as simple or complex as needed. Functions provide greater flexibility, allowing for more control over logic and behavior.

- Functions are defined with the function keyword followed by a name and a script block.

- They can accept parameters, which make them adaptable to different scenarios.

- Functions can be called multiple times in a script, promoting code reusability and readability.

Example:

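```powershell
function Get-FileSize {
    param ([string]$FilePath)
    # Get-Item returns a FileInfo object; its Length property is the size in bytes
    return (Get-Item -Path $FilePath).Length
}

Get-FileSize "C:\path\to\file.txt"
```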

This function returns the size of a specified file in bytes. It accepts a parameter (the file path) and can be reused anywhere within the script.
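The same function can be extended into an advanced function that validates its input and accepts paths from the pipeline. The sketch below is one possible version, not the only design: the attributes are standard PowerShell, while the sample file in the temp directory is a hypothetical path used only to demonstrate the call.

```powershell
function Get-FileSize {
    [CmdletBinding()]
    param(
        # Mandatory: PowerShell prompts if omitted; ValueFromPipeline allows piping paths in.
        [Parameter(Mandatory, ValueFromPipeline)]
        # Reject paths that are not existing files before the function body runs.
        [ValidateScript({ Test-Path -Path $_ -PathType Leaf })]
        [string]$FilePath
    )
    process {
        # The process block runs once for each pipeline input.
        [pscustomobject]@{
            Path  = $FilePath
            Bytes = (Get-Item -Path $FilePath).Length
        }
    }
}

# Usage: create a small sample file containing exactly 5 bytes.
$sample = Join-Path ([System.IO.Path]::GetTempPath()) 'size-demo.txt'
[System.IO.File]::WriteAllText($sample, 'hello')
$result = $sample | Get-FileSize
Write-Host "$($result.Path): $($result.Bytes) bytes"
```

Validation attributes move input checks out of the function body, so invalid paths fail with a clear parameter-binding error before any work starts.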

Both cmdlets and functions are essential tools in a scriptwriter’s toolbox. Understanding when to use each allows for greater control and efficiency in automating tasks.

Preparing for Security-Related Topics

When automating tasks and managing systems, security becomes a crucial consideration. Understanding how to secure scripts, manage access permissions, and protect sensitive data is vital. This section covers essential concepts to ensure that your scripts follow best practices for security, minimizing vulnerabilities while enhancing the integrity and confidentiality of your operations.

Proper security measures not only help safeguard systems but also contribute to the overall reliability and trustworthiness of your scripts. By understanding common threats, such as unauthorized access and data breaches, you can take proactive steps to mitigate risks in your automated tasks.

Managing Credentials and Authentication

One of the first aspects of security in scripting is managing user credentials and ensuring proper authentication methods. Protecting access to sensitive systems involves storing credentials securely and implementing appropriate authentication mechanisms. This reduces the likelihood of unauthorized users gaining access to critical resources.

- Secure storage of credentials: Avoid hardcoding passwords or sensitive information directly in scripts. Use credential objects (PSCredential) or a secure store such as Windows Credential Manager. Environment variables keep secrets out of the script file, but they are not encrypted.

- Authentication methods: Implement multi-factor authentication (MFA) where possible, and use secure protocols such as HTTPS to communicate with external services.

- Least privilege: Always apply the principle of least privilege, ensuring that scripts run with only the permissions necessary to perform the intended tasks.

Example:

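```powershell
$SecureCredential = Get-Credential

# "Server01" is a placeholder; replace it with the name of a remote computer.
Invoke-Command -ComputerName "Server01" -ScriptBlock { Get-Process } -Credential $SecureCredential
```

In this example, Get-Credential prompts for a user name and password and returns a PSCredential object that stores the password as a SecureString, so the password never appears in the script text.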

Managing Permissions and Access Control

Another critical aspect of security is managing permissions and controlling who can access what resources. Properly assigning and limiting permissions ensures that only authorized individuals or processes can access specific files, directories, or systems.

- Set access permissions: Use cmdlets to assign file and folder permissions based on user roles. For example, Set-Acl allows you to control who can read, write, or execute files.

- Access control lists (ACLs): Understand how to work with ACLs to define who has permission to access resources and to what extent.

- Audit access: Regularly audit access logs and monitor user activities to detect any suspicious behavior or potential security breaches.

Example:

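```powershell
# Read the current access control list (ACL) of the folder
$acl = Get-Acl -Path "C:\SensitiveData"

# "User" is a placeholder account name, e.g. "DOMAIN\UserName".
# The inheritance flags extend the rule to files and subfolders inside the folder.
$rule = New-Object System.Security.AccessControl.FileSystemAccessRule("User", "Read", "ContainerInherit,ObjectInherit", "None", "Allow")

# Add (or replace) the rule for that account, then write the ACL back
$acl.SetAccessRule($rule)
Set-Acl -Path "C:\SensitiveData" -AclObject $acl
```

In this case, the account named User is granted read access to the sensitive folder and its contents. Note that SetAccessRule adds or replaces the rule for that one account; it does not remove other accounts' existing or inherited permissions, so restricting access to authorized users also requires reviewing those entries.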

By preparing for security-related topics, you can ensure that your scripts are safe, reliable, and resistant to common vulnerabilities. These foundational practices are key to managing both simple tasks and complex automation workflows securely.

Key Practices for Troubleshooting Scripts

When working with automation scripts, encountering issues is inevitable. Efficient troubleshooting requires a systematic approach to identifying the root cause of errors. By following best practices, you can quickly resolve issues and ensure that your scripts run smoothly and efficiently. This section outlines essential techniques for troubleshooting common script errors.

Understanding error messages, utilizing debugging tools, and implementing clear logging practices are some of the most effective ways to identify and fix issues in scripts. By mastering these troubleshooting methods, you can improve both the reliability and efficiency of your automation tasks.

Leveraging Error Handling Techniques

Error handling is one of the most critical aspects of effective troubleshooting. Properly managing errors helps you identify issues early, and prevents scripts from failing silently. Common techniques include using try-catch blocks and the finally statement to catch exceptions and handle them gracefully.

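- Try-Catch Blocks: Use try to run code that might fail, and catch to handle the error if one occurs, so the script can respond instead of stopping abruptly. Note that catch only handles terminating errors; add -ErrorAction Stop to cmdlets whose errors are non-terminating by default.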

- Finally Statement: The finally block is useful for cleaning up resources or executing certain commands regardless of whether an error occurred or not.

- Specific Error Types: Catch specific errors by targeting particular exceptions, such as System.IO.IOException for file handling issues, which makes it easier to diagnose problems.

Example:

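```powershell
try {
    # -ErrorAction Stop turns the "not found" error into a terminating error that catch can handle
    $file = Get-Content -Path "C:\nonexistentfile.txt" -ErrorAction Stop
} catch {
    Write-Error "File not found"
} finally {
    Write-Host "Error handling complete"
}
```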
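To target specific error types as described above, list typed catch blocks from most to least specific. In this sketch the missing file in the temp directory is hypothetical; a missing path surfaces as ItemNotFoundException, while System.IO.IOException is included for other I/O failures, such as a file locked by another process.

```powershell
# Hypothetical missing file; point this at an existing file to see the success path.
$path = Join-Path ([System.IO.Path]::GetTempPath()) 'nonexistentfile.txt'

try {
    # -ErrorAction Stop makes the "not found" error terminating so catch can handle it.
    $file = Get-Content -Path $path -ErrorAction Stop
    $status = 'Read OK'
} catch [System.Management.Automation.ItemNotFoundException] {
    # Runs only when the path does not exist.
    $status = "File not found: $path"
} catch [System.IO.IOException] {
    # Other I/O failures, for example a file locked by another process.
    $status = "I/O error: $($_.Exception.Message)"
} catch {
    # Any other terminating error.
    $status = "Unexpected error: $($_.Exception.Message)"
}
Write-Host $status
```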

Utilizing Logging for Better Insights

Logging provides valuable insight into script execution and helps track down the source of issues. It’s important to log key events, errors, and output so you can later review the script’s performance and behavior. A well-structured logging system can be a powerful tool for troubleshooting.

- Verbose Logging: Use the -Verbose common parameter to display the detailed messages that scripts and cmdlets write with Write-Verbose.

- Event Logs: Leverage the system’s event logs to capture important system-level errors or events triggered by the script.

- Custom Log Files: Create custom log files to track specific actions or store detailed debugging information throughout script execution.

Example:

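```powershell
# Write-Verbose output appears only when verbose output is enabled, here with -Verbose
Write-Verbose "Starting process..." -Verbose
Write-Error "An error occurred while processing"
```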

By setting up detailed logging, you gain the ability to trace back the issue and monitor script behavior over time. This is especially useful for long-running scripts or complex workflows.
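A custom log file can be as simple as a helper that appends timestamped lines. In this sketch, Write-Log is a hypothetical function (not a built-in cmdlet), and the log path and input file name are assumptions for illustration.

```powershell
# Hypothetical log location; adjust for your environment.
$logPath = Join-Path ([System.IO.Path]::GetTempPath()) 'script.log'
Remove-Item -Path $logPath -ErrorAction SilentlyContinue   # start a fresh log for this demo

# Hypothetical helper that appends a timestamped, levelled line to the log.
function Write-Log {
    param(
        [Parameter(Mandatory)][string]$Message,
        [ValidateSet('INFO', 'WARN', 'ERROR')][string]$Level = 'INFO'
    )
    $timestamp = Get-Date -Format 'yyyy-MM-dd HH:mm:ss'
    Add-Content -Path $logPath -Value "$timestamp [$Level] $Message"
}

Write-Log -Message 'Starting process...'
try {
    # Hypothetical input file that is expected to be missing.
    Get-Content -Path 'missing-input.txt' -ErrorAction Stop | Out-Null
} catch {
    Write-Log -Message $_.Exception.Message -Level 'ERROR'
}
Write-Log -Message 'Process finished'

Get-Content -Path $logPath
```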

Using Debugging Tools Effectively

Debugging tools are essential when troubleshooting complex scripts. These tools allow you to step through your code, inspect variables, and pinpoint exactly where the issue lies. Tools such as debugging cmdlets and IDE integrations can save time and improve script accuracy.

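- Set-PSDebug: The Set-PSDebug cmdlet helps you trace script execution. For example, Set-PSDebug -Trace 1 displays each line of a script as it runs, and Set-PSDebug -Off turns these debugging features off.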

Best Practices for Efficiency

Creating efficient scripts involves optimizing both performance and readability. By focusing on best practices, you can ensure that your code runs smoothly, consumes fewer resources, and is easy to maintain. Efficiency isn’t only about speed but also about making the code more readable, reusable, and scalable.

In this section, we’ll explore various techniques to improve the efficiency of your scripts. These strategies can help you manage resources better, reduce execution time, and create cleaner, more modular code.

Optimize Resource Usage

Managing resources effectively is crucial for improving the performance of your scripts. By focusing on how data is handled, stored, and passed through different commands, you can optimize memory usage and execution time. Below are key practices for managing resources efficiently:

| Practice | Benefit |
| --- | --- |
| Use pipelines effectively | Passing objects directly between commands, one at a time, avoids holding entire result sets in memory. |
| Avoid storing large data sets | Not keeping large collections in variables prevents excessive memory use. |
| Process data in chunks | Handling data in smaller batches (for example, with Get-Content -ReadCount) keeps memory use predictable on large inputs. |

Use Built-in Commands

Built-in commands are designed for common tasks and can often replace custom code that would add overhead or bugs. Using them saves time and keeps scripts shorter. Here are some useful commands:

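| Command | Purpose |
| --- | --- |
| Get-Content | Reads a file line by line and streams the lines down the pipeline, so large files do not have to be loaded into memory at once. Use -Raw to read the whole file as a single string when you need all of it. |
| Where-Object | Filters objects in the pipeline by a condition, without writing an explicit loop. |
| ForEach-Object | Runs a script block for each object as it arrives in the pipeline, which keeps memory use low; for collections already in memory, the foreach statement is usually faster. |

Modularize Your Code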

Breaking your script into smaller, reusable functions not only improves readability but also enables easier maintenance. By modularizing your code, you can isolate problems, optimize individual functions, and reuse them across different projects:

- Reusable Functions: Write small, task-focused functions that can be used in different scenarios.

- Clear Naming: Use descriptive names for functions and variables to improve code readability.

- Commenting: Properly comment complex sections of code to make future updates easier.

These practices reduce redundancy and make your codebase easier to manage and optimize over time.

Minimize External Resource Access

Limiting the number of calls to external resources, such as databases or APIs, can help improve script performance. Instead of repeatedly accessing remote systems, consider caching frequently accessed data locally or reducing the frequency of requests:

- Local Caching: Store frequently accessed data locally to avoid unnecessary external calls.

- Batch Requests: Combine multiple requests into one to reduce network overhead and improve efficiency.

By minimizing unnecessary external requests, you reduce latency and improve the overall speed of your scripts.
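Local caching can be sketched with a hashtable keyed by request. In this example, Get-RemoteData is a hypothetical stand-in for an expensive call to a database or web API (it only sleeps briefly), and Get-CachedData is likewise a hypothetical wrapper that calls it only on a cache miss.

```powershell
# Cache keyed by request; a hashtable gives fast lookups.
$cache = @{}
$script:remoteCalls = 0

# Hypothetical stand-in for an expensive remote call.
function Get-RemoteData {
    param([string]$Key)
    $script:remoteCalls++
    Start-Sleep -Milliseconds 100   # simulate network latency
    "value-for-$Key"
}

# Hypothetical wrapper: return the cached value, fetching it only on a cache miss.
function Get-CachedData {
    param([string]$Key)
    if (-not $cache.ContainsKey($Key)) {
        $cache[$Key] = Get-RemoteData -Key $Key
    }
    $cache[$Key]
}

# Five lookups but only two distinct keys: two remote calls, three cache hits.
$results = 'alpha', 'beta', 'alpha', 'alpha', 'beta' | ForEach-Object { Get-CachedData -Key $_ }
Write-Host "Remote calls made: $script:remoteCalls"
```

A real cache would usually also need an expiry rule, so that stale data is eventually refreshed.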

Parallel Execution

When working with tasks that can be run concurrently, utilizing parallel execution can significantly improve performance. Running multiple processes at the same time reduces the overall time required for tasks that would otherwise be performed sequentially. Here’s how to implement parallelism:

- Background Jobs: Use background jobs (Start-Job) to run tasks in parallel while the current session stays free for other work.

- Parallel Loops: For independent tasks, consider parallel loops such as ForEach-Object -Parallel (PowerShell 7 and later) to speed up execution.

Parallel execution is especially useful for processing multiple independent items or performing batch operations more quickly.
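Both approaches can be sketched on a few independent work items. The items and the one-second sleep are placeholders for real work. Start-Job works in Windows PowerShell 5.1 and PowerShell 7, while ForEach-Object -Parallel requires PowerShell 7 or later, so that part is guarded by a version check.

```powershell
# Hypothetical work items; each is independent of the others.
$items = 1..4

# Background jobs: each job runs in a separate process.
$jobs = foreach ($n in $items) {
    Start-Job -ScriptBlock {
        param($value)
        Start-Sleep -Seconds 1   # simulate slow work
        $value * 10
    } -ArgumentList $n
}
# Wait for all jobs, collect their output, and remove the finished jobs.
$jobResults = $jobs | Receive-Job -Wait -AutoRemoveJob

# PowerShell 7+ only: run script blocks on parallel runspaces (output order is not guaranteed).
if ($PSVersionTable.PSVersion.Major -ge 7) {
    $parallelResults = $items | ForEach-Object -Parallel {
        Start-Sleep -Seconds 1
        $_ * 10
    } -ThrottleLimit 4
}
```

Jobs start a new process each, which adds overhead; runspace-based -Parallel is lighter, so it usually suits many small tasks better.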

By adopting these best practices, you can ensure that your scripts are optimized for speed, efficiency, and resource management. These strategies will help you develop solutions that perform well under various conditions and are easier to maintain over time.

Using Command-Line Tools for System Administration

System administrators rely heavily on automation and command-line tools to streamline tasks and ensure smooth operation across various systems. These tools allow administrators to execute complex tasks efficiently, manage configurations, and handle repetitive chores without manual intervention. The ability to automate and execute scripts remotely has greatly changed how administrators manage networks, servers, and systems.

In this section, we will explore how command-line scripting can be used effectively for system administration, emphasizing key tasks such as configuration management, monitoring, and automation.

Automation and Scripting

Automating administrative tasks reduces the risk of human error and speeds up processes. Whether it’s configuring multiple machines or updating software, automating these tasks ensures consistency and accuracy.

| Task | Automation Benefit |
| --- | --- |
| Software Installation | Automates the installation and configuration process across multiple systems. |
| User Management | Enables bulk creation, modification, or removal of user accounts with scripts. |
| System Updates | Ensures that systems are regularly updated without manual intervention. |

System Monitoring and Management

Monitoring system performance and ensuring that configurations are applied consistently is critical for maintaining system integrity. Command-line tools provide powerful methods for system diagnostics, tracking system health, and collecting data for performance analysis.

| Tool/Command | Purpose |
| --- | --- |
| Get-Process | Displays a list of active processes, allowing administrators to monitor CPU and memory usage. |
| Get-Service | Shows the status of services, enabling administrators to check for running or stopped services. |
| Get-EventLog | Fetches Windows event logs, which are helpful for troubleshooting and auditing. Available in Windows PowerShell 5.1 only; in PowerShell 7, use Get-WinEvent. |

Remote Management

Managing multiple systems remotely is one of the key advantages of command-line tools. Administrators can connect to systems across a network and execute commands without physically being at the location. This capability is particularly useful for managing large networks or cloud-based infrastructure.

| Command | Use |
| --- | --- |
| Enter-PSSession | Starts an interactive session on a remote system, so administrators can run commands as if they were local. |
| Invoke-Command | Runs commands on one or more remote systems concurrently, allowing administrators to automate tasks across multiple machines. |
| New-PSSession | Creates a persistent remote session for performing tasks over time without reconnecting. |

By leveraging these tools and commands, administrators can efficiently handle routine tasks, perform complex operations, and ensure that systems are optimized and secure. The flexibility and power offered by scripting for system administration provide the means to handle a wide variety of tasks quickly and effectively.
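Monitoring and remote management combine naturally: the same script block can run locally or, through Invoke-Command, on remote computers. In this sketch, Get-TopProcess is a hypothetical helper, and any computer names you pass are assumed to have PowerShell remoting enabled. When -ComputerName is omitted, the script block runs locally, which is how the usage line below calls it.

```powershell
# Hypothetical helper: report the processes using the most memory.
function Get-TopProcess {
    param(
        [string[]]$ComputerName,   # hypothetical targets; omit to run locally
        [int]$Count = 3
    )
    $scriptBlock = {
        param($n)
        Get-Process |
            Sort-Object -Property WorkingSet64 -Descending |
            Select-Object -First $n -Property ProcessName, Id, WorkingSet64
    }
    if ($ComputerName) {
        # Runs on all listed computers concurrently; requires remoting on each target.
        Invoke-Command -ComputerName $ComputerName -ScriptBlock $scriptBlock -ArgumentList $Count
    } else {
        # Runs the same script block in the local session.
        Invoke-Command -ScriptBlock $scriptBlock -ArgumentList $Count
    }
}

# Usage: local run; a remote call would look like Get-TopProcess -ComputerName Server01, Server02
$top = Get-TopProcess -Count 2
$top | Format-Table -AutoSize
```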

Regular Expressions and Pattern Matching

Pattern matching is a powerful tool used to search, manipulate, and validate strings of text based on defined patterns. Regular expressions, or regex, allow users to create complex queries for matching specific patterns within text, making it easier to extract, modify, or validate information from large datasets. This technique is particularly useful for tasks like searching logs, validating inputs, and parsing text files.

Basic Syntax and Structure

Regular expressions follow a particular syntax that includes special characters, operators, and modifiers. These elements work together to define search patterns that can match a wide variety of text formats.
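The short PowerShell sketch below exercises the five core metacharacters (. * + ^ $) with the -match operator. The sample strings are made up for illustration. Note that -match is case-insensitive by default, which is why the ^error pattern matches an upper-case line.

```powershell
# Each case exercises one metacharacter.
# -match is case-insensitive by default; use -cmatch for case-sensitive tests.
$cases = @(
    @{ Text = 'cat';              Pattern = '^c.t$'  }  # . matches any one character
    @{ Text = 'ct';               Pattern = '^ca*t$' }  # * allows zero 'a' characters
    @{ Text = 'ct';               Pattern = '^ca+t$' }  # + requires at least one 'a'
    @{ Text = 'caaat';            Pattern = '^ca+t$' }  # + also accepts several
    @{ Text = 'ERROR: disk full'; Pattern = '^error' }  # ^ anchors at the start
    @{ Text = 'backup.log';       Pattern = '\.log$' }  # $ anchors at the end; \. is a literal dot
)

$results = foreach ($case in $cases) {
    [pscustomobject]@{
        Text    = $case.Text
        Pattern = $case.Pattern
        IsMatch = $case.Text -match $case.Pattern
    }
}
$results | Format-Table -AutoSize
```

The IsMatch column reads True, True, False, True, True, True: only the third case fails, because + demands at least one occurrence of the preceding character.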

| Character | Description |
| --- | --- |
| . | Matches any single character except a newline. |
| * | Matches zero or more occurrences of the preceding character or group. |
| + | Matches one or more occurrences of the preceding character or group. |
| ^ | Anchors the match to the beginning of the string. |
| $ | Anchors the match to the end of the string. |

Using Regular Expressions for Text Search

Once the pattern is defined, the search can be performed on any string or text input. Regular expressions can be used to find specific words, phrases, or patterns that match certain criteria. This method helps automate tasks such as data extraction, input validation, or replacing text in a document.

| Method/Command | Use |
| --- | --- |
| -match | Tests whether a string matches the pattern. For a single string it returns $true or $false and stores any captured groups in the automatic $Matches variable. Matching is case-insensitive by default; -cmatch is the case-sensitive form. |
| -replace | Replaces every part of a string that matches the pattern with new text, which can refer to captured groups such as $1. |
| -split | Splits a string into an array, using the pattern as the delimiter. |

By mastering regular expressions, users can search large logs and text files efficiently, validate user input, and perform string manipulations that simple wildcard matching cannot express.
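To see the three operators working together, the sketch below parses a single log line. The log format and its contents are invented for illustration; only -match, $Matches, -replace, and -split are standard PowerShell features.

```powershell
# A made-up log line used only for illustration.
$line = '2024-05-01 12:30:45 ERROR [disk] Volume C: is 95% full'

# -match on a single string returns $true/$false; on success it fills $Matches.
# $Matches keeps its old value after a failed match, so test the result first.
if ($line -match '^(?<date>\S+) (?<time>\S+) (?<level>[A-Z]+) \[(?<source>\w+)\]') {
    $entry = [pscustomobject]@{
        Date   = $Matches['date']
        Time   = $Matches['time']
        Level  = $Matches['level']
        Source = $Matches['source']
    }
}

# -replace can reference capture groups; single quotes stop PowerShell from
# expanding '$3' itself, so the regex engine receives it unchanged.
$dayFirst = $line -replace '^(\d{4})-(\d{2})-(\d{2}).*$', '$3/$2/$1'

# -split treats its right operand as a regex: split on runs of commas/whitespace.
$tokens = 'alpha,  beta gamma,delta' -split '[,\s]+'

$entry
$dayFirst    # 01/05/2024
$tokens      # alpha, beta, gamma, delta (one per line)
```

Named groups such as (?<level>...) make the $Matches lookups self-documenting, which is easier to maintain than counting numbered groups.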

Understanding Remote Management

Remote management allows administrators to control and configure systems from a distance, making it easier to manage multiple machines without being physically present. This capability is essential for maintaining large environments or managing servers and workstations across various locations. Through secure communication protocols, administrators can perform tasks such as executing commands, managing services, and retrieving system information from remote devices.

Remote management only works once the right protocols and settings are in place. PowerShell remoting uses WinRM (the WS-Management protocol) by default, and PowerShell 6 and later can also run remote sessions over SSH. Setup includes enabling remote access on the target system, establishing a secure communication channel, and using the appropriate commands to open and run remote sessions. Once configured, remoting saves time and reduces manual intervention, making system management more efficient.

Key Concepts and Setup

For successful remote management, both the local and remote machines must be configured properly. This typically involves enabling the remoting service on the target, making sure the required ports are open (by default, WinRM listens on port 5985 for HTTP and 5986 for HTTPS), and verifying that both systems can reach each other over the network. The essential steps are:

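- Enable remote administration on the remote machine (for example, by running Enable-PSRemoting in an elevated session).

- Configure secure authentication methods to protect credentials and data in transit.

- Ensure network connectivity between the local and remote systems.

- Configure firewall rules, and port forwarding if necessary, so that the remoting ports are reachable.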
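Before opening a session, it can help to confirm the network basics. On Windows, Test-WSMan checks whether the WinRM service on a computer answers. The sketch below is a hypothetical, cross-platform helper named Test-RemotingPort that only checks whether a TCP port accepts connections; an open port does not prove that remoting or authentication is configured correctly. It assumes the default WinRM HTTP port, 5985.

```powershell
# Test-RemotingPort is a hypothetical helper, not a built-in cmdlet.
function Test-RemotingPort {
    param(
        [Parameter(Mandatory)] [string] $ComputerName,
        [int] $Port = 5985,          # WinRM over HTTP; use 5986 for HTTPS
        [int] $TimeoutMs = 2000
    )
    $client = [System.Net.Sockets.TcpClient]::new()
    try {
        # ConnectAsync lets us bound the wait instead of relying on the OS timeout
        $task = $client.ConnectAsync($ComputerName, $Port)
        return ($task.Wait($TimeoutMs) -and $client.Connected)
    }
    catch {
        # Refused connections and DNS failures surface as exceptions
        return $false
    }
    finally {
        $client.Dispose()
    }
}
```

A call such as Test-RemotingPort -ComputerName srv01 (a placeholder host name) returns $true or $false, which makes it easy to filter a host list before handing it to a remoting command.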

Common Commands and Techniques

Once the systems are set up, several techniques can be used to interact with them:

- Remote sessions: Use Enter-PSSession for interactive work, or New-PSSession with Invoke-Command to run commands on one or more remote machines.

- File transfer: Copy files to or from a remote system with Copy-Item and its -ToSession and -FromSession parameters, for example as part of configuration or update processes.

- Service management: Start, stop, or restart services without physical access, for example by running Restart-Service inside Invoke-Command.

By using remote management techniques, system administrators can significantly improve their efficiency, perform tasks on multiple systems simultaneously, and address issues without needing to travel to the physical location of each machine.

Important Modules for Certification

To become proficient in automation and system management, you need to know the modules that package related cmdlets and functions for specific tasks. Modules extend what your environment can do, and they play a significant role in certification preparation, so a solid understanding of them is important for anyone aiming to validate their skills in system automation, administration, and configuration.

Essential Modules for System Administration

These modules are key for managing systems effectively, allowing administrators to handle configuration, tasks, and monitoring from a central platform. Below are some of the most important ones:

- ActiveDirectory module: Essential for managing user accounts, groups, and organizational units in Active Directory environments; it is installed with the Remote Server Administration Tools (RSAT).

- Remoting cmdlets: Enter-PSSession, New-PSSession, and Invoke-Command are built into PowerShell (Microsoft.PowerShell.Core) rather than shipped as a separate module; together with Copy-Item -ToSession, they cover session initiation, file transfers, and remote task execution.

- Storage module: Provides cmdlets to manage disks, volumes, partitions, file systems, and storage pools, which are central to storage configuration.

- Networking modules: NetTCPIP, NetAdapter, and NetSecurity cover IP address management, network interfaces, and firewall settings, respectively.
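Before relying on any of these modules, it is worth checking which ones are actually installed, since several (such as ActiveDirectory) are optional Windows features. The sketch below uses a hypothetical helper, Get-ModuleStatus, built on the real Get-Module -ListAvailable, which searches the module path without importing anything.

```powershell
# Get-ModuleStatus is a hypothetical helper, not a built-in cmdlet.
function Get-ModuleStatus {
    param([Parameter(Mandatory)] [string[]] $Name)
    foreach ($moduleName in $Name) {
        # -ListAvailable searches $env:PSModulePath without importing anything;
        # sort so the newest installed version is reported
        $module = Get-Module -ListAvailable -Name $moduleName |
            Sort-Object -Property Version -Descending |
            Select-Object -First 1
        $version = $null
        if ($module) { $version = $module.Version }
        [pscustomobject]@{
            Name      = $moduleName
            Available = [bool]$module
            Version   = $version
        }
    }
}

Get-ModuleStatus -Name ActiveDirectory, Storage, NetTCPIP, NetSecurity,
    ScheduledTasks, PSReadLine | Format-Table -AutoSize
```

Once a module shows as available, Get-Command -Module Storage (for example) lists the commands it provides, which is a quick way to explore a module before an exam.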

Automation and Task Management Modules

For automating common tasks, such as job scheduling and script execution, these modules are indispensable:

- ScheduledTasks module: Lets users schedule scripts and commands to run automatically at specified times or intervals (for example, with New-ScheduledTaskTrigger and Register-ScheduledTask).

- Job cmdlets: Start-Job, Wait-Job, and Receive-Job are built into PowerShell and run work in the background, so several tasks can proceed concurrently; PowerShell 7 adds lighter-weight thread jobs (Start-ThreadJob) and ForEach-Object -Parallel.

- PSReadLine module: Improves the interactive command-line experience with features such as searchable command history, syntax highlighting, and advanced line editing.
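The job cmdlets follow a simple start, wait, collect, clean up cycle. The sketch below wraps that cycle in a hypothetical helper, Invoke-JobBatch, and uses background jobs because they work in both Windows PowerShell and PowerShell 7. The ScheduledTasks cmdlets are not shown because they are Windows-only and require a real task scheduler.

```powershell
# Invoke-JobBatch is a hypothetical helper, not a built-in cmdlet.
function Invoke-JobBatch {
    param(
        [Parameter(Mandatory)] [scriptblock] $ScriptBlock,
        [Parameter(Mandatory)] [object[]] $InputItems
    )
    # Start one background job per item; each job runs in its own process
    $jobs = foreach ($item in $InputItems) {
        Start-Job -ScriptBlock $ScriptBlock -ArgumentList $item
    }
    try {
        # Block until every job has finished, then collect output in input order
        $null = $jobs | Wait-Job
        foreach ($job in $jobs) { Receive-Job -Job $job }
    }
    finally {
        # Remove the job objects so they do not pile up in the session
        $jobs | Remove-Job -Force
    }
}
```

For example, Invoke-JobBatch -ScriptBlock { param($n) $n * $n } -InputItems 1, 2, 3 returns 1, 4, and 9. Each Start-Job launches a separate process, so for many small tasks in PowerShell 7 the thread-based options mentioned above are usually cheaper.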

Mastering these modules will help ensure success when managing complex systems and automating routine tasks, as they provide a wide range of essential tools for system administration and automation.

Final Tips for Success

Achieving proficiency in automation and system management requires not only understanding core concepts but also practicing problem-solving under real-world conditions. Success is often determined by a combination of focused preparation, time management, and the ability to quickly troubleshoot and apply the right solutions. Below are key strategies that can guide your journey toward mastering essential tasks and commands.

Master the Fundamentals

A strong foundation is crucial. Understanding basic concepts thoroughly will help you tackle more complex tasks later. Focus on mastering:

- Core commands: Familiarize yourself with common commands and their syntax.

- System administration tools: Be comfortable with modules for managing users, directories, and network configurations.

- Automation techniques: Practice writing scripts that automate everyday tasks, such as file management or task scheduling.
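A good first automation exercise is a cleanup task that is safe to preview. The sketch below assumes a hypothetical function, Remove-StaleFile, that deletes files in a single folder (non-recursively) whose LastWriteTime is older than a cutoff. Declaring SupportsShouldProcess gives it the standard -WhatIf and -Confirm switches.

```powershell
# Remove-StaleFile is a hypothetical example function, not a built-in cmdlet.
function Remove-StaleFile {
    [CmdletBinding(SupportsShouldProcess)]
    param(
        [Parameter(Mandatory)] [string] $Path,
        [int] $OlderThanDays = 30
    )
    $cutoff = (Get-Date).AddDays(-$OlderThanDays)
    # Only files directly inside $Path; add -Recurse to include subfolders
    $stale = Get-ChildItem -LiteralPath $Path -File |
        Where-Object { $_.LastWriteTime -lt $cutoff }
    foreach ($file in $stale) {
        # ShouldProcess returns $false under -WhatIf and prints a preview instead
        if ($PSCmdlet.ShouldProcess($file.FullName, 'Remove stale file')) {
            Remove-Item -LiteralPath $file.FullName
            $file.Name    # report each file that was actually removed
        }
    }
}
```

Running Remove-StaleFile -Path C:\Logs -WhatIf (the path is a placeholder) lists what would be deleted without touching anything, which is a habit worth practicing before running destructive scripts for real.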

Practice with Real-World Scenarios

Simulation and hands-on practice are irreplaceable. Set up practice environments where you can test and apply different commands and solutions to real-world problems. This can include:

- Creating and running scripts: Write scripts to automate specific administrative tasks.

- Debugging scripts: Focus on error handling and troubleshooting common issues.

- Managing remote sessions: Test your skills in accessing and managing remote systems.
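When practicing error handling, a key detail is that many cmdlet errors are non-terminating, so try/catch does not see them unless -ErrorAction Stop is used. The sketch below illustrates this with a hypothetical function, Get-ConfigText, that falls back to a default value when a file is missing and adds context to any other failure.

```powershell
# Get-ConfigText is a hypothetical example function, not a built-in cmdlet.
function Get-ConfigText {
    param(
        [Parameter(Mandatory)] [string] $Path,
        [string] $Default = ''
    )
    try {
        # -ErrorAction Stop turns the non-terminating "path not found" error
        # into a terminating one so that the catch blocks can handle it
        Get-Content -LiteralPath $Path -Raw -ErrorAction Stop
    }
    catch [System.Management.Automation.ItemNotFoundException] {
        # Expected case: the file does not exist, so use the default
        Write-Warning "Config not found at '$Path'; using default."
        $Default
    }
    catch {
        # Anything else (permissions, locked file): add context and rethrow
        throw "Failed to read '$Path': $($_.Exception.Message)"
    }
}
```

Catching the specific exception type first and leaving a general catch for everything else keeps expected conditions quiet while still surfacing genuine problems.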

By actively engaging with practical examples and solving challenges on your own, you will gain the confidence and competence necessary for long-term success.