When preparing for complex assessments in the field of statistics, a solid understanding of fundamental principles is essential. Grasping core concepts and techniques will not only help you solve problems efficiently but also build the foundation needed for applying these methods in real-world scenarios. Each question posed in such evaluations tests your ability to analyze data, interpret results, and apply statistical tools appropriately.

To excel, it’s important to familiarize yourself with the most frequently tested topics and the specific methods required to tackle different types of problems. By honing your problem-solving approach and practicing various calculation techniques, you can gain confidence and improve your performance. Whether it’s calculating probabilities, working with distributions, or interpreting data trends, a systematic study plan can significantly enhance your readiness.

In the following sections, we will cover practical strategies and provide examples that will help you navigate through the challenges typically found in these types of assessments. With the right preparation, you’ll be equipped to approach every problem methodically and confidently.

Mastering Key Concepts for Statistical Assessments

Preparing for advanced assessments in statistics requires more than just memorizing formulas; it demands a deep understanding of the concepts and methods used to analyze and interpret data. Each task challenges your ability to apply theoretical knowledge to practical situations, requiring both analytical skills and critical thinking. To succeed, it’s important to approach each problem with a clear strategy, ensuring that your methods are accurate and your reasoning sound.

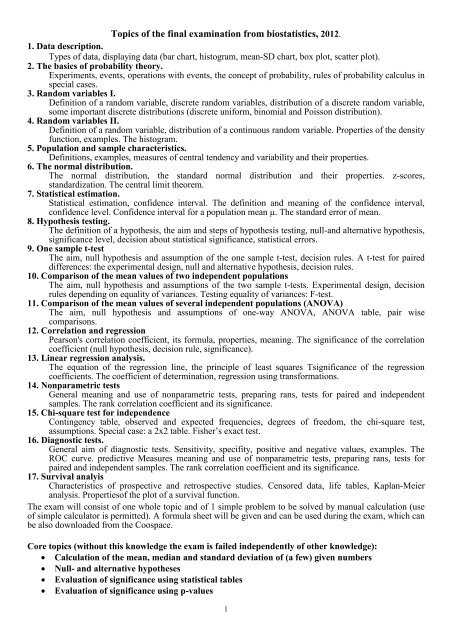

Common Topics Covered in Assessments

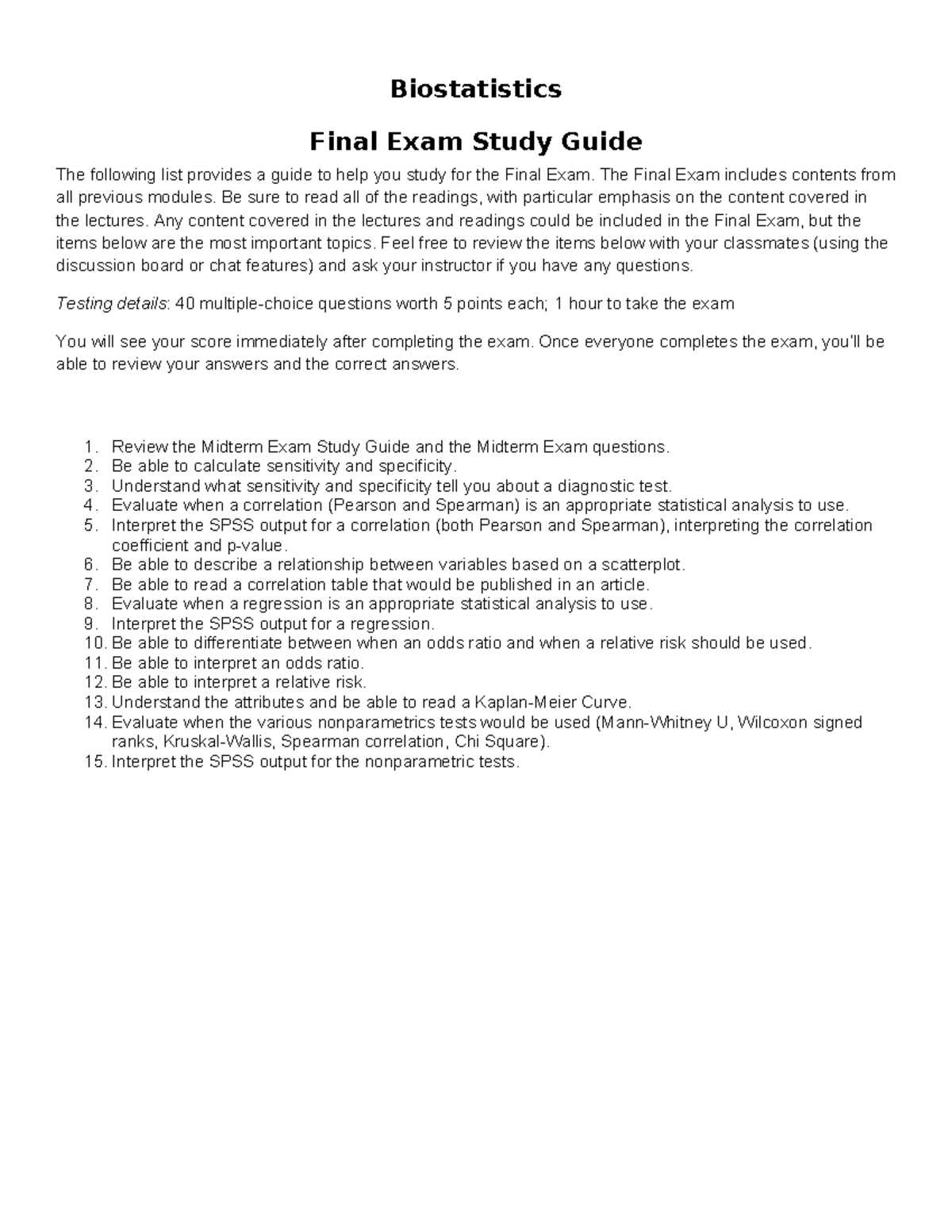

The most commonly tested areas involve topics like hypothesis testing, confidence intervals, regression analysis, and probability distributions. A strong grasp of these areas will allow you to solve problems with precision. For instance, understanding the nuances of p-values and confidence levels is essential when interpreting test results. Similarly, mastering regression analysis enables you to draw meaningful conclusions from data sets, which is crucial for real-world applications.

Effective Problem-Solving Techniques

To approach problems methodically, it’s important to break down each question into smaller steps. Start by identifying the type of data you’re working with and the appropriate statistical tests to apply. Carefully review the assumptions underlying each method to avoid common pitfalls. As you practice, develop a routine for handling complex questions, allowing you to build confidence and improve your speed. A consistent approach makes even the most challenging tasks more manageable.

Understanding Key Statistical Concepts

Grasping fundamental concepts in statistics is essential for approaching any analysis. A deep understanding of the core principles allows you to interpret data accurately and make informed decisions based on statistical results. This knowledge goes beyond simply applying formulas; it involves understanding the reasoning behind various methods and knowing when to use each approach effectively.

One of the most critical areas to master is the concept of probability. Knowing how to calculate and interpret probabilities is the foundation for many statistical techniques, such as hypothesis testing and risk assessment. Additionally, an understanding of distributions and how data behaves is vital for selecting the correct model for analysis. Data variability and central tendency are also key elements that provide insights into the behavior and characteristics of the dataset.

Moreover, understanding the assumptions behind each statistical test ensures that results are valid and reliable. Whether you’re analyzing data for patterns or testing hypotheses, a clear grasp of these basic concepts will enhance your ability to draw meaningful conclusions.

Important Formulas for Statistical Assessments

Mastering the essential formulas is crucial for solving statistical problems efficiently. Whether you’re calculating probabilities, testing hypotheses, or interpreting data, knowing the right formula and how to apply it is key to success. Each formula serves a specific purpose, helping you translate raw data into meaningful insights. Below are some of the most frequently used equations in statistical analysis.

Basic Formulas for Data Analysis

- Mean: The average value of a dataset. It is calculated by dividing the sum of all data points by the number of points.

- Variance: A measure of how spread out the values are around the mean, calculated by summing the squared differences from the mean and dividing by n for a whole population, or by n − 1 for a sample.

- Standard Deviation: The square root of variance, providing a measure of the spread of data points.

- Proportions: The fraction of observations with a specific outcome, calculated as the number of observations with that outcome divided by the total number of observations.
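Written in standard notation, the basic formulas look like this. The symbols are chosen here for illustration: x₁ to xₙ are the n sample observations, μ and N are the population mean and size, and k is the number of observations with the outcome of interest.

```latex
\bar{x} = \frac{1}{n}\sum_{i=1}^{n} x_i
\qquad
s^2 = \frac{1}{n-1}\sum_{i=1}^{n} (x_i - \bar{x})^2
\qquad
\sigma^2 = \frac{1}{N}\sum_{i=1}^{N} (x_i - \mu)^2
\qquad
s = \sqrt{s^2}
\qquad
\hat{p} = \frac{k}{n}
```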

Key Formulas for Hypothesis Testing

- t-Test: Used to determine if there is a significant difference between the means of two groups.

- Z-Score: Measures how many standard deviations an element is from the mean.

- Chi-Square Test: A test used to determine if there is a significant association between categorical variables.

- Confidence Interval: A range of values that is likely to contain the population parameter with a certain level of confidence.
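Two of these statistics are simple enough to compute directly. The following sketch uses hypothetical function names and example values; it computes a z-score and the Pearson chi-square statistic for a contingency table. Turning the chi-square statistic into a p-value still requires the chi-square distribution with (rows − 1) × (columns − 1) degrees of freedom.

```python
def z_score(x, mean, sd):
    """Number of standard deviations that x lies above (+) or below (-) the mean."""
    return (x - mean) / sd


def chi_square_statistic(table):
    """Pearson chi-square statistic for a contingency table given as a list of rows.

    Expected count for each cell = (row total * column total) / grand total.
    """
    row_totals = [sum(row) for row in table]
    col_totals = [sum(col) for col in zip(*table)]
    grand_total = sum(row_totals)
    stat = 0.0
    for i, row in enumerate(table):
        for j, observed in enumerate(row):
            expected = row_totals[i] * col_totals[j] / grand_total
            stat += (observed - expected) ** 2 / expected
    return stat


# Hypothetical values chosen for illustration only
print(z_score(130, 100, 15))                       # 2.0
print(chi_square_statistic([[10, 20], [20, 10]]))  # about 6.67, with 1 degree of freedom
```

For the 2 × 2 table in the example, a statistic of about 6.67 exceeds 3.84, the chi-square critical value for 1 degree of freedom at α = 0.05, so the association would be called significant at that level.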

These formulas are foundational for tackling a wide range of problems, and understanding their application will greatly enhance your analytical skills and problem-solving efficiency.

Common Mistakes to Avoid in Statistical Analysis

While working through statistical tasks, it’s easy to make small mistakes that can have a big impact on the accuracy of your results. These errors often stem from misunderstandings of concepts, misapplication of formulas, or failure to check assumptions before performing tests. Recognizing and avoiding these common pitfalls will improve your efficiency and accuracy, ensuring that your conclusions are both valid and reliable.

Misinterpreting Data and Results

One of the most frequent mistakes is misinterpreting the meaning of statistical results. For example, confusing correlation with causation can lead to incorrect conclusions. It’s also important not to rely solely on p-values or confidence intervals without considering the context of the data. Always ensure that you understand what the numbers represent before drawing any conclusions.

Ignoring Assumptions Behind Statistical Tests

Each statistical method comes with specific assumptions about the data, such as normality, independence, or equal variance. Failing to check these assumptions can lead to incorrect analysis and unreliable results. For instance, using a t-test on a small sample drawn from clearly non-normal data can distort your conclusions. Always verify that the assumptions for the test you’re using are met before proceeding.

By staying aware of these common mistakes and carefully reviewing your work, you can greatly improve your ability to analyze data accurately and draw meaningful conclusions. Ensuring that you apply the correct methods and interpret results properly is key to mastering statistical analysis.

How to Interpret Statistical Results

Interpreting statistical results is a crucial skill for drawing valid conclusions from data. It’s not enough to simply perform calculations; understanding the meaning behind the numbers is what allows you to make informed decisions. Proper interpretation involves analyzing the context of the data, evaluating the significance of the findings, and ensuring that the results align with your research questions or hypotheses.

Evaluating Significance

One of the first things to consider when interpreting results is statistical significance, usually judged by comparing the p-value with a chosen threshold (the significance level, commonly 0.05). The p-value is the probability of obtaining a result at least as extreme as the one observed, assuming the null hypothesis is true; it is not the probability that the result occurred by chance. It’s also important not to rely solely on this value. A low p-value suggests that the null hypothesis can be rejected, but this does not necessarily mean that the effect is large or meaningful in real-world terms. Always assess significance in the context of the data and the problem at hand.

Understanding Confidence Intervals

Confidence intervals provide a range of values within which the true parameter is likely to lie. Interpreting these intervals involves looking at the range to see if it includes the null value or if it provides a clear estimate of the effect. A narrower interval typically indicates more precise estimates, while a wider one suggests more uncertainty in the data.

By carefully evaluating both significance and the practical relevance of results, you can make more informed conclusions and ensure that your interpretations are accurate and actionable.

Step-by-Step Approach to Solving Problems

When faced with complex tasks or questions, taking a methodical approach is key to finding the correct solution. Breaking down the problem into smaller, manageable steps helps ensure that you address each aspect thoroughly and systematically. A structured process allows you to apply the right techniques, minimize errors, and reach accurate conclusions efficiently.

Identifying the Problem and Gathering Information

The first step is to clearly define the problem. Understand what is being asked and gather all relevant information. This includes reviewing any provided data, understanding the context, and identifying the specific requirements of the task. Without a clear grasp of the question, it’s easy to misapply methods or overlook important details.

Applying the Correct Methods

Once the problem is defined, the next step is to choose the appropriate statistical tools or formulas. Ensure that the method you select aligns with the type of data and the question at hand. For example, if you’re working with two sets of data and need to compare their means, a t-test might be suitable. Double-check that any assumptions required for the method are met before applying it. Carefully carry out the necessary calculations and interpret the results within the context of the problem.

By following this step-by-step approach, you can solve problems more efficiently and ensure that your solutions are both accurate and reliable.

Essential Statistical Topics to Review

To prepare effectively for any assessment in statistics, it’s crucial to focus on the key areas that frequently appear in problems and analyses. These foundational topics form the backbone of many statistical methods and understanding them deeply will enable you to apply the right techniques in various situations. Reviewing these topics will not only improve your problem-solving skills but also help you make informed decisions when interpreting data.

Core Areas to Focus On

| Topic | Description |
|---|---|
| Probability Distributions | Understanding different distributions like normal, binomial, and Poisson is crucial for modeling data and calculating probabilities. |
| Hypothesis Testing | Mastering the process of setting up and testing hypotheses helps in making decisions based on sample data. |
| Confidence Intervals | Learn how to interpret ranges that estimate population parameters with a given level of confidence. |
| Regression Analysis | Review linear and logistic regression techniques to explore relationships between variables. |
| Sample Size and Power Analysis | Understand how to determine the sample size needed to detect an effect of a given size with a specified power (probability of detecting a true effect) at a chosen significance level. |
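The sample-size row can be made concrete with the common normal-approximation formula for comparing two means. This is a sketch assuming equal group sizes, a known common standard deviation, and a two-sided test; the function name `n_per_group` is hypothetical, and power-analysis software that uses the t-distribution would typically give slightly larger numbers.

```python
from math import ceil
from statistics import NormalDist


def n_per_group(sigma, delta, alpha=0.05, power=0.80):
    """Approximate sample size per group for comparing two means (two-sided test).

    Normal approximation: n = 2 * (z_{1-alpha/2} + z_{power})^2 * sigma^2 / delta^2,
    assuming equal group sizes and a common standard deviation sigma.
    """
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # about 1.96 for alpha = 0.05
    z_beta = NormalDist().inv_cdf(power)           # about 0.84 for 80% power
    n = 2 * (z_alpha + z_beta) ** 2 * sigma ** 2 / delta ** 2
    return ceil(n)  # round up so the target power is not undershot


print(n_per_group(sigma=10, delta=5))  # 63
```

With these hypothetical inputs, roughly 63 participants per group would be needed to detect a 5-unit difference with 80% power at α = 0.05. Halving the detectable difference roughly quadruples the required sample size, because delta enters the formula squared.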

Key Methods to Master

Along with these core topics, ensure you review essential statistical methods such as correlation analysis, ANOVA, and chi-square tests. Each of these techniques serves a specific purpose, whether it’s comparing groups, testing associations, or analyzing categorical data. Mastery of these methods will provide a solid foundation for tackling any statistical challenge.

Tips for Efficient Time Management During Assessments

Managing your time effectively during assessments is crucial for maximizing performance and ensuring that you can complete all tasks within the allotted time. By prioritizing tasks, allocating time wisely, and staying organized, you can approach each question with clarity and confidence. These strategies will help you avoid rushing through sections and ensure that every question receives the attention it deserves.

Start by reading through the entire set of questions to get an overview of the assessment. This will allow you to identify which sections are more time-consuming and which ones can be completed quickly. Prioritize questions that you feel confident about, tackling the easier ones first to build momentum. Keep track of time, setting small goals for each section to ensure that you are progressing as planned.

It’s also helpful to leave a few minutes at the end to review your work. Double-check your answers for any overlooked details or errors, and ensure that all steps in your calculations or reasoning are clearly shown. By managing your time efficiently, you can reduce stress and enhance the quality of your performance.

Strategies for Answering Multiple Choice Questions

Multiple choice questions are designed to test your knowledge and understanding of key concepts in a structured way. While these questions may seem straightforward, effective strategies are essential for selecting the correct answers efficiently. By applying the right approach, you can improve your chances of success, even when uncertain about some of the options.

Start by reading each question carefully and ensuring you understand what is being asked before looking at the options. Eliminate any clearly incorrect choices to narrow down your options. If you’re unsure of the correct answer, try to recall related concepts or formulas that could lead to the correct selection. Often, there are subtle clues within the wording of the question or options that can help guide your choice. Remember, in some cases, the most specific answer tends to be the correct one, as it directly addresses the question asked.

Time management is also key when answering multiple choice questions. Don’t get stuck on one question for too long; if needed, move on to the next one and come back later if time allows. By maintaining a steady pace and staying focused, you can approach these questions with confidence and clarity.

Practical Examples of Statistical Calculations

Understanding how to apply statistical methods is essential for analyzing real-world data. In many cases, calculations are required to interpret patterns, make predictions, and test hypotheses. By working through practical examples, you can solidify your understanding of various techniques and gain the skills needed to apply them to new problems.

Example 1: Calculating Mean and Standard Deviation

Consider the following data set representing the number of hours studied by a group of students: 5, 8, 12, 9, 7. To calculate the mean and standard deviation:

- Mean: Add all values together (5 + 8 + 12 + 9 + 7 = 41) and divide by the total number of values (41 ÷ 5 = 8.2).

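- Standard Deviation: Subtract the mean from each value and square the differences (10.24, 0.04, 14.44, 0.64, 1.44), which sum to 26.8. Dividing this sum by n − 1 = 4 gives the sample variance, 6.7, and taking its square root gives the sample standard deviation, about 2.59. (If the five students are treated as the entire population, divide by n = 5 instead: the variance is 5.36 and the standard deviation is about 2.32.)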

This calculation helps to understand the spread of the data points and how much variation exists within the group of students.
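The same numbers can be checked with Python’s standard-library `statistics` module, which provides separate functions for the sample (n − 1) and population (n) versions of the variance and standard deviation.

```python
from statistics import mean, pstdev, pvariance, stdev, variance

hours = [5, 8, 12, 9, 7]  # hours studied, from the example above

print(mean(hours))       # 8.2
print(variance(hours))   # 6.7   (sample variance, divides by n - 1)
print(stdev(hours))      # about 2.59
print(pvariance(hours))  # 5.36  (population variance, divides by n)
print(pstdev(hours))     # about 2.32
```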

Example 2: Conducting a t-Test

A researcher wants to compare the average height between two groups of individuals: Group A (N = 10) and Group B (N = 12). To determine if there’s a statistically significant difference in the means of the two groups, a t-test can be applied. The steps include:

- Calculate the mean for each group.

- Determine the standard deviation and standard error for each group.

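- Compute the t-statistic (the difference between the two means divided by the standard error of that difference) and compare its absolute value with the critical value from the t-distribution table. For the pooled-variance t-test, the degrees of freedom are N_A + N_B − 2 = 20.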

By conducting this test, the researcher can judge whether the observed difference between the groups is larger than would be expected from random sampling variation alone.
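The example does not give actual heights, so the sketch below uses invented values (in cm) purely for illustration. It implements the pooled-variance (Student’s) t-test, which assumes equal variances in the two groups; Welch’s t-test is the usual alternative when that assumption is doubtful. The function name `pooled_t_test` is hypothetical, and 2.086 is the two-sided 5% critical value for 20 degrees of freedom.

```python
from math import sqrt
from statistics import mean, variance


def pooled_t_test(a, b):
    """Two-sample t statistic assuming equal variances (pooled); returns (t, df)."""
    n_a, n_b = len(a), len(b)
    df = n_a + n_b - 2
    # Pooled variance: sample variances weighted by their degrees of freedom
    sp2 = ((n_a - 1) * variance(a) + (n_b - 1) * variance(b)) / df
    # Standard error of the difference between the two means
    se = sqrt(sp2 * (1 / n_a + 1 / n_b))
    return (mean(a) - mean(b)) / se, df


# Hypothetical heights (cm): Group A has N = 10, Group B has N = 12
group_a = [170, 165, 172, 168, 175, 169, 171, 166, 174, 170]
group_b = [162, 167, 160, 165, 163, 168, 161, 164, 166, 159, 163, 165]

T_CRIT_DF20 = 2.086  # two-sided, alpha = 0.05, df = 20
t, df = pooled_t_test(group_a, group_b)
print(f"t = {t:.2f}, df = {df}, significant: {abs(t) > T_CRIT_DF20}")
```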

Practical examples like these demonstrate how statistical techniques are used in real-world scenarios to make informed decisions and interpret data effectively.

Best Resources for Statistical Assessment Preparation

When preparing for any type of statistical evaluation, having the right study materials and tools is crucial for mastering the necessary concepts. From textbooks to online platforms, a variety of resources are available to help you deepen your understanding and strengthen your problem-solving abilities. The key is to identify materials that are not only comprehensive but also tailored to your learning style.

Books and Textbooks

Books are a great foundation for studying statistical concepts in detail. Some essential textbooks provide clear explanations, practice problems, and step-by-step solutions. Look for books that cover a wide range of topics, from basic probability theory to complex regression analysis. Textbooks such as “Statistics for the Behavioral Sciences” or “The Essentials of Statistics” are well-regarded for their clarity and thoroughness.

Online Courses and Platforms

Online learning platforms offer a flexible way to prepare for assessments with video tutorials, quizzes, and interactive exercises. Websites like Coursera, Khan Academy, and Udemy host courses taught by experienced instructors. These platforms provide an opportunity to learn at your own pace, revisit difficult topics, and engage with other learners. Online resources are especially beneficial for those who prefer visual explanations or need practice with real-world examples.

By using a combination of books, online courses, and additional resources such as flashcards, practice tests, and forums, you can ensure a comprehensive approach to mastering statistical techniques. These resources will help reinforce your knowledge and boost your confidence as you approach your assessment.

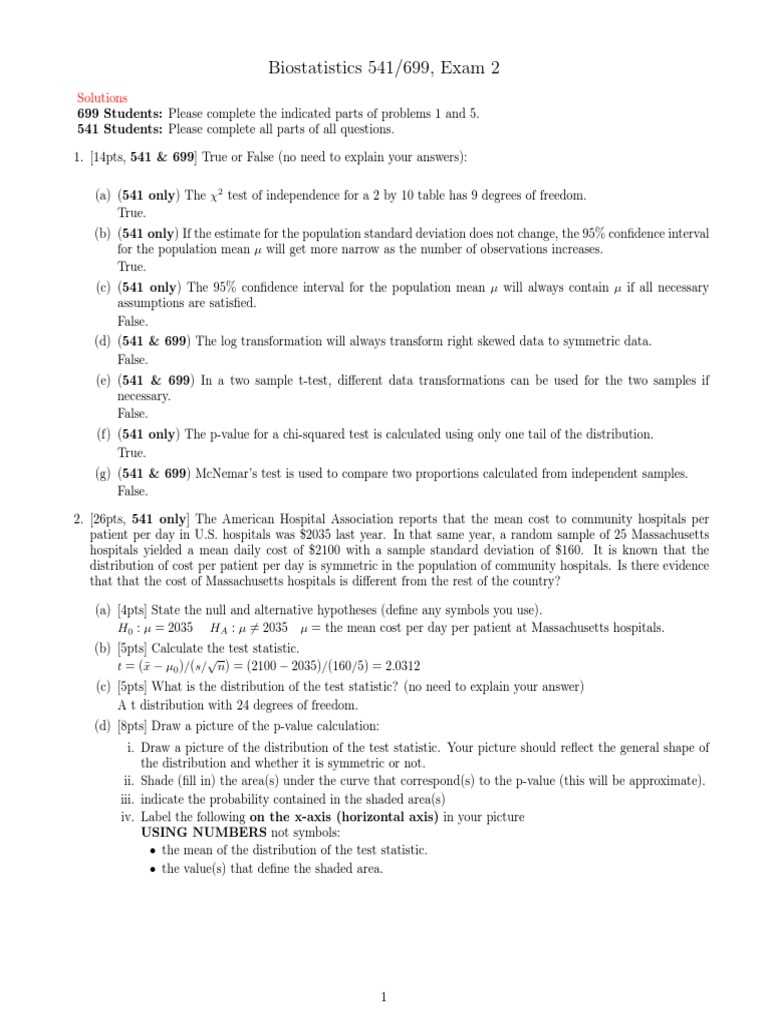

Understanding Hypothesis Testing

Hypothesis testing is a fundamental method used to assess the validity of claims or assumptions about a population based on sample data. It provides a structured approach to determine whether there is enough evidence to support a specific hypothesis. By applying statistical methods, researchers can evaluate whether the observed data align with or contradict the hypothesis being tested.

The Process of Hypothesis Testing

When conducting hypothesis testing, the process generally involves the following steps:

- Formulate the Hypotheses: Develop a null hypothesis (H₀) that assumes no effect or relationship and an alternative hypothesis (H₁) that suggests a potential effect or relationship.

- Select the Significance Level: Choose a significance level (α), commonly set at 0.05, which represents a 5% risk of rejecting the null hypothesis when it is actually true.

- Collect Data and Perform the Test: Gather sample data and use an appropriate statistical test, such as a t-test or chi-square test, to evaluate the hypotheses.

- Analyze the Results: Compare the p-value from the test with the significance level. If the p-value is less than α, reject the null hypothesis; otherwise, fail to reject it.

- Draw Conclusions: Based on the results, conclude whether the data provide enough evidence to support the alternative hypothesis or not.
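The five steps can be traced in a small example. This sketch uses a one-sample z-test, chosen here for simplicity because it only needs the normal distribution from Python’s standard library; it assumes the population standard deviation is known, and the sample numbers and function name are hypothetical.

```python
from statistics import NormalDist


def one_sample_z_test(sample_mean, mu0, sigma, n, alpha=0.05):
    """Two-sided one-sample z-test, assuming the population SD sigma is known.

    Step 1: H0: population mean == mu0; H1: population mean != mu0.
    Step 2: alpha is the significance level.
    Returns (z statistic, p-value, reject H0?).
    """
    # Step 3: compute the test statistic from the sample summary
    standard_error = sigma / n ** 0.5
    z = (sample_mean - mu0) / standard_error
    # Step 4: p-value = probability of |Z| at least this large if H0 is true
    p_value = 2 * (1 - NormalDist().cdf(abs(z)))
    return z, p_value, p_value < alpha


# Hypothetical data: n = 100, sample mean 103, testing H0: mu = 100 with sigma = 10
z, p, reject = one_sample_z_test(sample_mean=103, mu0=100, sigma=10, n=100)
# Step 5: draw the conclusion
print(f"z = {z:.2f}, p = {p:.4f}, reject H0: {reject}")
```

Here z = 3.00 and p ≈ 0.0027, which is below α = 0.05, so the null hypothesis would be rejected.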

Types of Hypothesis Tests

Different situations require different types of tests. Some common ones include:

- t-Test: Used to compare the means of two groups and determine if they are statistically different from each other.

- Chi-Square Test: Applied to categorical data to assess whether there is a significant association between two variables.

- ANOVA: Used when comparing the means of more than two groups to see if at least one group differs significantly from the others.
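The idea behind ANOVA can be sketched as the ratio of between-group to within-group variability. This minimal version (hypothetical function name) returns only the F statistic and its degrees of freedom; converting F to a p-value requires the F-distribution, for example from a statistics package or an F table.

```python
from statistics import mean


def one_way_anova_f(groups):
    """One-way ANOVA: returns (F statistic, (df_between, df_within))."""
    all_values = [x for g in groups for x in g]
    grand_mean = mean(all_values)
    group_means = [mean(g) for g in groups]
    k, n_total = len(groups), len(all_values)
    # Between-group sum of squares: how far each group mean is from the grand mean
    ss_between = sum(len(g) * (m - grand_mean) ** 2 for g, m in zip(groups, group_means))
    # Within-group sum of squares: spread of observations around their own group mean
    ss_within = sum((x - m) ** 2 for g, m in zip(groups, group_means) for x in g)
    ms_between = ss_between / (k - 1)      # df_between = k - 1
    ms_within = ss_within / (n_total - k)  # df_within = N - k
    return ms_between / ms_within, (k - 1, n_total - k)


print(one_way_anova_f([[1, 2, 3], [4, 5, 6], [7, 8, 9]]))  # (27.0, (2, 6))
```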

Understanding hypothesis testing and its various methods is crucial for drawing valid conclusions from data and making informed decisions in research and analysis.

Calculating Confidence Intervals Correctly

Confidence intervals are a vital tool in statistics, used to estimate the range within which a population parameter is likely to fall. These intervals give us a sense of how precise an estimate is and help to assess the reliability of sample data. When calculating a confidence interval, it’s crucial to ensure accuracy to avoid misleading conclusions or errors in decision-making.

To calculate a confidence interval, the following elements must be considered:

- Sample Mean: The average value from your sample data, which serves as the point estimate of the population mean.

- Standard Deviation: A measure of the variability or spread of the sample data, indicating how much individual data points deviate from the sample mean.

- Sample Size: The number of observations or data points in the sample. A larger sample size generally leads to a more precise estimate.

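- Z-Score or t-Score: This represents the critical value that corresponds to the desired level of confidence, such as 1.96 for a 95% confidence level when using the normal (z) distribution. Critical values from the t-distribution depend on the degrees of freedom and are larger for small samples.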

The formula for calculating a confidence interval is as follows:

Confidence Interval = Sample Mean ± (Critical Value * Standard Error)

Where the standard error is calculated as:

Standard Error = Standard Deviation / √(Sample Size)
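The two formulas above translate directly into a short function. In this sketch the function name is hypothetical, and the critical value is passed in explicitly so the same code works for a z- or t-based interval; the second call reuses the study-hours data (5, 8, 12, 9, 7) with 2.776, the 95% t critical value for 4 degrees of freedom.

```python
from statistics import NormalDist, mean, stdev


def confidence_interval(sample_mean, sd, n, critical_value):
    """Sample mean ± critical value × standard error, where SE = sd / sqrt(n)."""
    standard_error = sd / n ** 0.5
    margin = critical_value * standard_error
    return sample_mean - margin, sample_mean + margin


z_95 = NormalDist().inv_cdf(0.975)  # about 1.96
print(confidence_interval(50, 10, 100, z_95))  # about (48.04, 51.96)

hours = [5, 8, 12, 9, 7]
# With only n = 5, the t critical value (df = 4) is more appropriate than 1.96
print(confidence_interval(mean(hours), stdev(hours), len(hours), 2.776))
```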

Interpreting Confidence Intervals

Once the interval is calculated, it provides a range of plausible values for the parameter. A 95% confidence level describes the procedure rather than any single interval: if you repeated the sampling many times and built an interval each time, about 95% of those intervals would contain the true parameter. A particular interval either contains the parameter or it does not, so the interval does not guarantee that the true value lies within its range.

Common Mistakes to Avoid

- Using the wrong formula: Ensure the correct standard deviation (population or sample) and critical value are used.

- Misunderstanding confidence: A 95% confidence interval does not mean there’s a 95% chance the parameter lies within the interval, but rather that 95% of intervals constructed from repeated sampling will contain the true parameter.
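The repeated-sampling interpretation can be checked with a small simulation. This sketch assumes normally distributed data with a known standard deviation, so the z-based interval applies; the function name, seed, and default parameters are hypothetical choices for illustration.

```python
import random
from statistics import NormalDist


def simulate_coverage(true_mean=100.0, sigma=15.0, n=30, trials=2000, level=0.95, seed=1):
    """Fraction of simulated z-intervals (sigma treated as known) that contain true_mean."""
    rng = random.Random(seed)
    z = NormalDist().inv_cdf(1 - (1 - level) / 2)
    margin = z * sigma / n ** 0.5
    hits = 0
    for _ in range(trials):
        sample = [rng.gauss(true_mean, sigma) for _ in range(n)]
        m = sum(sample) / n
        # Each individual interval either contains the true mean or it does not
        if m - margin <= true_mean <= m + margin:
            hits += 1
    return hits / trials


print(simulate_coverage())  # close to 0.95
```

The 95% emerges only as a long-run proportion across many intervals; no single simulated interval carries a 95% probability of its own.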

Accurately calculating confidence intervals and interpreting them correctly is essential for making informed decisions in any statistical analysis.

Interpreting Regression Analysis Results

Regression analysis is a powerful statistical technique used to examine the relationship between variables. By analyzing how one or more independent variables affect a dependent variable, this method helps in predicting outcomes and understanding complex relationships. Interpreting the results correctly is crucial for making accurate predictions and drawing reliable conclusions from the data.

Key components of regression results that should be interpreted include:

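- Coefficient Estimates: Each coefficient is the estimated change in the dependent variable for a one-unit increase in that independent variable, holding the other variables constant. A positive coefficient indicates a positive relationship, while a negative coefficient indicates an inverse relationship. On their own, these estimates describe associations, not causal effects.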

- Intercept: The intercept is the expected value of the dependent variable when all independent variables are zero. It provides a baseline for the regression model.

- p-Value: The p-value tests the null hypothesis that the coefficient is zero. A small p-value (typically less than 0.05) indicates that the corresponding variable is statistically significant in predicting the outcome.

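- R-Squared: This statistic represents the proportion of variance in the dependent variable explained by the independent variables. A higher R-squared indicates a closer fit to the data, but R-squared never decreases when predictors are added, so adjusted R-squared is often preferred when comparing models with different numbers of predictors.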

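- Standard Error: The residual standard error (standard error of the regression) measures the typical distance between the observed values and the regression line. Each coefficient also has its own standard error; smaller coefficient standard errors indicate more precise estimates.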
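For a single predictor, the slope, intercept, R-squared, and residual standard error can be computed directly with ordinary least squares. This is a minimal sketch with a hypothetical function name; it does not compute coefficient standard errors or p-values, which statistical packages report alongside these values.

```python
def simple_linear_regression(x, y):
    """Least-squares fit of y = b0 + b1*x; returns (b0, b1, r_squared, residual_se)."""
    n = len(x)
    x_bar, y_bar = sum(x) / n, sum(y) / n
    sxx = sum((xi - x_bar) ** 2 for xi in x)
    sxy = sum((xi - x_bar) * (yi - y_bar) for xi, yi in zip(x, y))
    b1 = sxy / sxx           # slope: change in y per one-unit change in x
    b0 = y_bar - b1 * x_bar  # intercept: predicted y when x = 0
    residuals = [yi - (b0 + b1 * xi) for xi, yi in zip(x, y)]
    sse = sum(r ** 2 for r in residuals)    # unexplained variation
    sst = sum((yi - y_bar) ** 2 for yi in y)  # total variation in y
    r_squared = 1 - sse / sst               # share of variance explained by x
    residual_se = (sse / (n - 2)) ** 0.5    # typical distance of points from the line
    return b0, b1, r_squared, residual_se


print(simple_linear_regression([1, 2, 3], [1, 3, 2]))  # b0 = 1.0, b1 = 0.5, R² = 0.25
```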

Understanding Statistical Significance

When interpreting the results of a regression model, it’s important to focus on statistical significance. A variable with a p-value less than 0.05 is typically considered significant, meaning that an association at least this strong would be unlikely if the true coefficient were zero. However, it is also important to consider the practical significance and context of the data, as a statistically significant relationship may not always be meaningful in real-world scenarios.

Model Diagnostics and Assumptions

Before fully trusting the regression results, it’s essential to check the assumptions of the model. These include linearity, independence, homoscedasticity (constant variance), and normality of residuals. If these assumptions are violated, the model’s results may be misleading or biased.

In summary, interpreting regression analysis results involves not only understanding the statistical significance of the variables but also assessing the model’s overall fit and checking for assumptions. By doing so, you can make more accurate predictions and informed decisions based on the data.

Real-World Applications of Biostatistics

The application of statistical methods in understanding health data is crucial for solving real-world problems in various fields. These techniques help in making informed decisions regarding public health, clinical research, environmental health, and policy-making. By analyzing patterns, predicting outcomes, and assessing the effectiveness of interventions, statistical models play a vital role in improving the well-being of populations.

In the medical and health sectors, statistical analysis is widely used to determine the effectiveness of treatments, track disease outbreaks, and identify risk factors for various conditions. Researchers and healthcare providers rely on these methods to make evidence-based decisions that guide both individual and public health strategies.

Applications in Public Health

In public health, data analysis helps to identify trends in disease prevalence and the factors that contribute to health disparities. Epidemiologists use statistical tools to track the spread of infectious diseases, evaluate vaccination programs, and assess the impact of environmental factors on health outcomes. These insights lead to more effective prevention strategies and the allocation of resources to areas with the highest need.

Clinical Trials and Medical Research

In clinical trials, statistical analysis is essential to determine the safety and efficacy of new drugs, treatments, or medical devices. Researchers use randomized controlled trials (RCTs) to compare different interventions and evaluate their outcomes. This allows scientists to make evidence-based recommendations and ensure that new treatments provide real benefits to patients. Statistical techniques also help in analyzing large medical datasets, such as electronic health records, to uncover patterns that might not be visible through traditional observation.

Overall, the use of statistical methods in health and medical research enables more precise decision-making, better resource allocation, and improved health outcomes across populations.

Key Theories to Focus On for Success

To excel in the study and application of statistical methods, focusing on key theoretical frameworks is essential. These core concepts lay the foundation for solving complex problems and making data-driven decisions. A deep understanding of these theories not only enhances comprehension but also improves the ability to analyze and interpret various types of data effectively.

From understanding the basics of probability to mastering more advanced techniques like regression analysis and hypothesis testing, each theory offers valuable tools for interpreting data. By prioritizing these key principles, students and professionals can build the necessary skills to tackle statistical challenges in a variety of fields, including healthcare, social sciences, and public policy.

Important Theories to Master

| Theory | Explanation | Application |
|---|---|---|
| Probability Theory | Helps in understanding random phenomena and calculating the likelihood of different outcomes. | Used to estimate the likelihood of events, such as disease outbreaks or treatment success. |
| Sampling Theory | Focuses on how samples are drawn from a population and how this affects statistical analysis. | Essential in survey design, clinical trials, and epidemiological studies to ensure representative data. |
| Regression Analysis | Analyzes the relationship between variables and helps in predicting outcomes based on observed data. | Used to assess the effect of one or more factors on a dependent variable, such as treatment impact or risk factors. |
| Hypothesis Testing | Provides a method for testing assumptions and determining whether an observed effect is statistically significant. | Crucial for making decisions in clinical research, such as whether a new drug is effective. |

Applying Theories to Real-World Data

Once these key theories are understood, applying them to real-world data becomes a valuable skill. Whether analyzing health data, conducting market research, or studying social trends, these theories provide the tools necessary for making informed, data-driven decisions. The ability to choose the right theory for the problem at hand is vital for successful outcomes in any field that relies on statistical analysis.

What to Do if You’re Stuck on a Question

When facing a challenging question, it’s easy to feel overwhelmed. However, taking a structured approach can help you regain focus and work through the problem more effectively. It’s important to stay calm, as panicking can lead to poor decision-making and missed opportunities. Instead, try to break down the question into smaller, more manageable parts.

First, reread the question carefully to ensure you fully understand what is being asked. Identify any key terms or concepts that could provide a clue about the approach to take. If necessary, underline or highlight important information. Next, recall any relevant formulas, theories, or methods that might apply. This can help you form a strategy for solving the problem.

If you’re still unsure, consider the following steps:

- Skip and Come Back Later: Sometimes moving on to another question can provide a fresh perspective. You may find that you can answer the difficult question after you’ve solved others and cleared your mind.

- Eliminate Clearly Incorrect Options: If you’re dealing with multiple-choice questions, eliminate answers that are clearly wrong. This increases the probability of selecting the correct one when you come back to it.

- Look for Patterns or Clues: Often, questions are designed to follow certain patterns or to build upon knowledge from previous questions. Identify these patterns to guide your approach.

- Look for a Simpler Route: Check whether an overlooked detail or an easier part of the question offers a way into the problem.

By applying these strategies, you can approach difficult questions with greater confidence, minimizing stress and maximizing your chances of success.

Reviewing Past Exams for Better Preparation

One of the most effective ways to prepare for an upcoming assessment is to review previous tests. This approach helps you identify patterns in the types of questions asked, the topics that are frequently covered, and the specific problem-solving techniques that are commonly required. By analyzing past assessments, you gain insight into what is expected and how best to approach each question, which ultimately boosts your confidence and efficiency.

Why Reviewing Past Tests is Essential

Going through previous assessments allows you to recognize recurring themes and formats, making it easier to predict what may appear on future tests. This method is especially useful for understanding the test’s structure and improving time management skills. In addition, reviewing past materials reinforces your understanding of core concepts, ensuring that you can apply them effectively when faced with similar questions.

How to Make the Most of Past Assessments

Here are some strategies to maximize the benefit of reviewing past assessments:

- Analyze Question Types: Look for patterns in the questions. Are they more conceptual, computational, or focused on data interpretation? This will guide your study focus.

- Understand the Mistakes: Pay attention to any errors you made. Understanding why you got a question wrong helps you identify knowledge gaps and improve your approach to similar problems.

- Practice Time Management: While working through past questions, time yourself. This ensures that you are pacing yourself appropriately and helps reduce anxiety on the actual day.

- Review Solutions and Explanations: When reviewing past tests, make sure to go through the solutions. Focus on understanding the reasoning behind each answer, as this will deepen your conceptual knowledge.

- Simulate Test Conditions: Try to simulate the test environment by taking the assessments under timed conditions. This helps you get accustomed to working within a set time frame and improves your focus.

By regularly reviewing previous assessments, you not only improve your understanding of the material but also enhance your ability to perform under pressure.