To excel in assessments involving programming and numerical tasks, it is crucial to have a deep understanding of various techniques used to manipulate, process, and visualize complex sets of information. Mastery of these skills not only enhances problem-solving abilities but also improves efficiency in handling real-world challenges.

Throughout this guide, we will cover essential tools, strategies, and methods that are commonly evaluated in technical assessments. By focusing on practical examples and important concepts, you will gain a strong foundation to tackle diverse questions and scenarios that require careful data handling, manipulation, and interpretation.

Preparation is key to success in any testing environment. Thorough knowledge of key libraries and functions, along with the ability to solve problems efficiently, will ensure you are well-equipped to approach any task confidently. Whether dealing with structured or unstructured information, effective methods can make all the difference in achieving optimal results.

Data Analysis with Python Final Exam Answers

In any test that involves working with programming and numerical problems, understanding the core methods and tools used for processing and interpreting complex sets of information is crucial. Being able to confidently apply the right techniques to solve challenges will significantly boost performance and lead to more accurate results. This section will provide guidance on how to approach such tasks, focusing on the strategies and solutions that are commonly evaluated in these types of assessments.

Key Skills and Techniques

When tackling problems that require numerical computations, it’s important to be familiar with essential programming methods and libraries. These tools enable you to manipulate variables, conduct calculations, and present results in a way that is both meaningful and easy to understand. Understanding the different approaches and when to apply them is a key part of succeeding in these evaluations.

How to Approach Problem-Solving

Breaking down the task into smaller, manageable steps is vital for navigating through programming challenges efficiently. Identify the main objectives, determine which methods or functions to use, and then test your results to ensure accuracy. Familiarity with commonly used functions and being able to quickly implement them will help you save time and enhance performance.

Key Concepts to Review for the Exam

To ensure a solid understanding and the ability to solve challenges efficiently, it’s important to focus on the core principles that underpin the use of computational tools and mathematical operations. By mastering these fundamental ideas, you’ll be able to approach various tasks with confidence and precision. Below are some key areas that require attention for effective problem-solving.

- Understanding of Libraries – Familiarity with essential modules and tools, including how they simplify complex tasks, is crucial. Key libraries help streamline processes and improve productivity.

- Variables and Data Structures – Knowing how to handle different data types, such as lists, dictionaries, and arrays, is necessary for efficient computation and manipulation.

- Mathematical Operations – Solidifying your grasp of basic operations, such as arithmetic calculations and transformations, is fundamental to solving more advanced problems.

- Looping and Control Structures – Mastery of loops, conditions, and functions is essential for automating repetitive tasks and controlling the flow of code.

- Error Handling – Understanding how to identify and fix mistakes ensures that your solutions are robust and reliable.

By focusing on these areas, you’ll be better prepared to address questions that assess both your technical knowledge and problem-solving capabilities. Practice implementing these concepts in various scenarios to strengthen your proficiency.

Essential Python Libraries for Data Analysis

In any programming task that involves working with numerical problems, having access to the right tools is essential. The right set of libraries can simplify complex operations, increase productivity, and ensure that your solutions are both effective and efficient. Below is an overview of some key libraries that are frequently used in computational work, especially for tasks that require manipulation, visualization, and statistical processing of information.

| Library | Purpose | Key Features |
|---|---|---|
| NumPy | Efficient handling of arrays and numerical operations | Support for large multi-dimensional arrays, mathematical functions, linear algebra operations |
| Pandas | Data manipulation and handling of structured data | Powerful data structures, handling of missing data, easy filtering and grouping |
| Matplotlib | Creating static visualizations | Wide range of customizable plotting options, integration with other libraries for visualization |
| Seaborn | Statistical data visualization | Built on top of Matplotlib, easy-to-use interfaces for creating attractive plots |
| Scikit-learn | Machine learning and statistical modeling | Pre-built algorithms for regression, classification, clustering, and model evaluation |

These libraries offer a wide range of functions and capabilities that are essential for performing computational tasks efficiently. Mastery of these tools allows you to streamline your workflow and tackle a variety of challenges in a structured and manageable way.
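
As a small illustration of the array operations listed for NumPy in the table above, the following sketch uses made-up sample values; the variable names are illustrative only.

```python
import numpy as np

# Hypothetical sample data: a 2x3 array of measurements.
values = np.array([[1.0, 2.0, 3.0],
                   [4.0, 5.0, 6.0]])

scaled = values * 10                 # element-wise arithmetic, no explicit loop
column_means = values.mean(axis=0)   # mean of each column
product = values @ values.T          # matrix product: (2x3) times (3x2) gives 2x2

print(column_means)
print(product)
```

The same operations written with nested Python lists would need explicit loops, which is the main reason array libraries are preferred for numerical work.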

Understanding Data Structures in Python

When working with programming tasks, it’s essential to understand how to store, organize, and manipulate information effectively. Choosing the right structures for your variables can significantly affect the performance and efficiency of your solutions. Understanding how different structures work and when to use each one is a critical skill for successful problem-solving.

Common Types of Structures

The most frequently used built-in structures in Python are lists, tuples, sets, and dictionaries. Each one has its own properties, and selecting the appropriate structure depends on the nature of the problem you’re tackling. For example, lists are ideal for ordered collections that change over time, tuples suit fixed groups of values, sets are useful for ensuring uniqueness in your data, and dictionaries map keys to values for fast lookup.

Key Considerations for Using Structures

Choosing the right structure involves considering factors such as the need for ordering, the possibility of duplicates, and the frequency of updates to the stored values. Efficient use of structures can lead to optimized code, faster execution times, and clearer solutions to complex problems.
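
The differences between these structures can be sketched in a few lines. The values below are invented for illustration.

```python
scores = [88, 92, 88, 75]          # list: ordered, mutable, allows duplicates
point = (3, 4)                     # tuple: ordered, immutable
unique_scores = set(scores)        # set: unordered, duplicates removed
ages = {"alice": 30, "bob": 25}    # dict: maps keys to values

scores.append(60)                  # lists can grow in place
ages["carol"] = 41                 # dicts accept new key/value pairs

print(len(scores), len(unique_scores))
print(ages["carol"], point[0])
```

Trying to assign to `point[0]` would raise a `TypeError`, which is the practical meaning of "immutable" here.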

Common Data Wrangling Techniques in Python

In any task that involves processing and transforming information, it’s essential to clean and reshape your input before meaningful analysis can occur. Handling incomplete, inconsistent, or unstructured information is a common challenge, and mastering these techniques is key to efficient problem-solving. The ability to manipulate and prepare data correctly ensures that the final results are both accurate and usable.

Handling Missing Values

One of the most common issues when dealing with information is missing or null values. Approaches like filling in missing values with default values, forward/backward filling, or removing rows with missing data are often used. The choice of method depends on the type of task at hand and the amount of missing information.

Filtering and Reshaping Data

When you need to focus on specific subsets or restructure the data, filtering and reshaping techniques become essential. This includes operations such as selecting particular rows or columns, aggregating values, or transforming the shape of the dataset. These techniques allow you to manipulate the data into a more suitable format for your analysis.
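
A minimal sketch of these operations in Pandas, assuming a small invented sales table (the column names are hypothetical):

```python
import pandas as pd

# Hypothetical long-format data: one row per city and year.
df = pd.DataFrame({
    "city": ["Oslo", "Oslo", "Rome", "Rome"],
    "year": [2022, 2023, 2022, 2023],
    "sales": [10, 12, 7, 9],
})

recent = df[df["year"] == 2023]                  # filter rows with a boolean mask
totals = df.groupby("city")["sales"].sum()       # aggregate per city
wide = df.pivot(index="city", columns="year", values="sales")  # reshape long to wide

print(recent)
print(totals)
print(wide)
```

After the pivot, each city is a row and each year is a column, which is often the easier shape for side-by-side comparison.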

How to Handle Missing Data in Python

When working on computational tasks, incomplete or missing information is a common challenge. It’s crucial to identify these gaps and decide how to address them effectively to avoid affecting the accuracy of the final results. Various techniques can be employed to manage these issues, depending on the context and the amount of missing values present in the dataset.

| Method | Description | Use Case |
|---|---|---|
| Removing Missing Data | Dropping rows or columns that contain missing values | Useful when few values are missing and dropping them won’t bias the dataset |
| Filling Missing Data | Replacing missing values with a constant or a summary statistic (mean, median, mode, etc.) | Suitable when you want to preserve dataset size and keep the valid values in affected rows |
| Forward/Backward Filling | Using the last or next valid value to fill in the gap | Useful in ordered data such as time series, when a value can be assumed to persist until the next observation |
| Interpolation | Estimating missing values from the surrounding data points | Suited to ordered numerical data where values change gradually between observations |

Choosing the right approach to handle missing values depends on the nature of the task and the extent of the missing data. Proper handling ensures that your results are both reliable and meaningful, without being distorted by incomplete information.
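
The four methods in the table can be compared side by side on one small series. This is a sketch using Pandas with invented values, not a recommendation of any single method.

```python
import numpy as np
import pandas as pd

# Hypothetical series with two gaps.
s = pd.Series([1.0, np.nan, 3.0, np.nan, 5.0])

dropped = s.dropna()                # remove missing entries
mean_filled = s.fillna(s.mean())    # fill with the mean of the observed values
forward = s.ffill()                 # carry the last valid value forward
backward = s.bfill()                # pull the next valid value backward
interpolated = s.interpolate()      # linear interpolation between neighbors

print(mean_filled.tolist())
print(forward.tolist())
print(interpolated.tolist())
```

Note that `s.mean()` skips missing values by default, so the fill value here is the mean of 1, 3, and 5.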

Data Visualization Tools for Python Users

Effective visualization is essential for conveying complex information in a clear and understandable way. Being able to translate numerical and categorical values into meaningful charts, graphs, and plots allows users to uncover patterns and insights that might otherwise remain hidden. Below are some of the most widely used tools to help create high-quality visual representations in any computational task.

Popular Libraries for Creating Visuals

- Matplotlib: A versatile library for creating static, interactive, and animated plots. It provides a range of customizable options for different types of visualizations, including bar charts, histograms, and scatter plots.

- Seaborn: Built on top of Matplotlib, Seaborn is designed for creating attractive and informative statistical graphics. It simplifies the creation of complex visualizations, such as heatmaps and pair plots, with minimal code.

- Plotly: Known for its interactive charts, Plotly allows users to create dynamic visualizations that can be embedded into web applications. It’s ideal for interactive dashboards and real-time data updates.

- Bokeh: A tool designed for creating interactive plots that can be embedded into websites. It offers a wide range of options for designing complex visualizations that update in real-time based on user input.

Choosing the Right Tool

The choice of visualization tool largely depends on the specific requirements of your task. If interactivity is essential, Plotly or Bokeh may be the best options. For simple static plots, Matplotlib is a great starting point, while Seaborn excels when creating aesthetically pleasing statistical visuals. Each library offers unique features, and understanding these will help you decide which one to use based on your project needs.
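
For the static-plot case, a minimal Matplotlib sketch might look like the following. The data is invented, and the non-interactive "Agg" backend is an assumption made so the script runs without a display; the figure is written to an in-memory buffer rather than a file.

```python
import io

import matplotlib
matplotlib.use("Agg")  # non-interactive backend; assumed so no display is needed
import matplotlib.pyplot as plt

# Hypothetical data points.
x = [1, 2, 3, 4]
y = [2, 4, 8, 16]

fig, ax = plt.subplots()
ax.plot(x, y, marker="o", label="growth")
ax.set_xlabel("step")
ax.set_ylabel("value")
ax.set_title("Line plot sketch")
ax.legend()

buffer = io.BytesIO()
fig.savefig(buffer, format="png")   # render the figure as PNG bytes
plt.close(fig)
```

Replacing `buffer` with a filename such as `"plot.png"` in `savefig` would write the image to disk instead.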

Statistical Analysis Using Python Libraries

For many computational tasks, statistical methods are essential for making sense of numerical information and deriving meaningful insights. Leveraging the right libraries allows users to perform a wide range of statistical operations, from basic measures like mean and standard deviation to more complex procedures such as hypothesis testing and regression analysis. Below are some powerful tools that can help facilitate these tasks.

Key Libraries for Statistical Methods

- SciPy: A library for scientific computing that includes modules for optimization, integration, interpolation, eigenvalue problems, and other advanced mathematical functions. Its stats module provides probability distributions and hypothesis tests.

- Statsmodels: Ideal for conducting statistical modeling, including linear regression, time series analysis, and generalized linear models. It provides detailed statistical tests and diagnostic tools.

- NumPy: Although primarily used for numerical operations, NumPy offers essential statistical functions such as mean, median, variance, and standard deviation, making it a fundamental tool for basic calculations.

- Pandas: While often used for data manipulation, Pandas also offers built-in statistical methods for handling time series data, correlation analysis, and basic aggregation operations.

Performing Statistical Tests and Modeling

Statistical tests such as t-tests, chi-squared tests, and ANOVA are essential for comparing groups and testing hypotheses. Python libraries like SciPy and Statsmodels provide easy-to-use functions for conducting these tests, while NumPy and Pandas are useful for summarizing and preparing the data for statistical procedures. Once data is cleaned and organized, these tools can be used to identify relationships, trends, and patterns across different datasets.
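
As one example of such a test, the sketch below runs an independent two-sample t-test with SciPy. The two groups are invented measurements, and the 0.05 threshold mentioned in the comment is a common convention rather than a rule.

```python
import numpy as np
from scipy import stats

# Hypothetical measurements from two groups.
group_a = np.array([5.1, 4.9, 5.4, 5.0, 5.2])
group_b = np.array([5.8, 6.0, 5.7, 6.1, 5.9])

# Independent two-sample t-test (assumes equal variances by default).
result = stats.ttest_ind(group_a, group_b)
t_stat, p_value = result.statistic, result.pvalue

# A small p-value (commonly below 0.05) suggests the group means differ.
print(t_stat, p_value)
```

The sign of the t statistic follows the order of the arguments: it is negative here because the first group has the lower mean.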

Effective Data Cleaning Strategies

Ensuring the quality of your dataset is essential for accurate results. Raw information often contains errors, inconsistencies, or missing values that must be addressed before meaningful insights can be drawn. Effective cleaning involves identifying and correcting these issues to make the dataset reliable and suitable for further analysis. Below are some key strategies for improving the quality of your data.

Key Cleaning Techniques

- Handling Missing Values: Missing data can distort results, so it’s crucial to either remove or fill in gaps using methods like mean imputation, forward/backward filling, or interpolation.

- Removing Duplicates: Duplicated entries can lead to skewed findings. Identifying and removing duplicates ensures the integrity of the dataset.

- Correcting Inconsistencies: Standardizing formats, such as date or time formats, and correcting spelling errors helps eliminate variations that could cause issues during analysis.

- Outlier Detection: Outliers can significantly impact the outcome. Identifying and dealing with extreme values through removal or transformation can improve the overall quality.

- Normalizing Values: Standardizing numerical values helps bring data to a common scale, which is important for many modeling techniques.

Automation of Cleaning Tasks

For large datasets, automating cleaning tasks is essential for efficiency. Libraries like Pandas and NumPy provide functions for detecting and handling missing values and duplicates, along with the building blocks (quantiles, boolean masks) needed to flag outliers. Scripting these steps saves time, makes the cleaning repeatable, and reduces human error, ensuring the dataset is prepared properly for further processing.
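
A short cleaning pipeline along these lines could be sketched as follows. The table is invented, and the 1.5 × IQR rule used for outliers is one common convention chosen for illustration, not the only option.

```python
import pandas as pd

# Hypothetical raw data with inconsistent text, a duplicate, and an extreme value.
raw = pd.DataFrame({
    "name": [" Alice", "alice ", "Bob", "Cara", "Dan", "Eve"],
    "amount": [10.0, 10.0, 12.0, 11.0, 9.0, 500.0],
})

clean = raw.copy()
clean["name"] = clean["name"].str.strip().str.lower()   # standardize text format
clean = clean.drop_duplicates()                          # rows 0 and 1 are now identical

# Flag outliers with the 1.5 * IQR rule (an assumed convention).
q1, q3 = clean["amount"].quantile([0.25, 0.75])
iqr = q3 - q1
in_range = clean["amount"].between(q1 - 1.5 * iqr, q3 + 1.5 * iqr)
clean = clean[in_range]

print(clean)
```

The order matters: the duplicate only becomes detectable after the names are standardized.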

Understanding Python’s Pandas Library

Pandas is a powerful tool for managing and analyzing structured information. It provides easy-to-use data structures and operations for manipulating numerical and categorical values. The library is designed to handle large datasets efficiently, offering features that simplify tasks like cleaning, transforming, and summarizing information. Here’s an overview of its key functionalities and why it is an essential tool for any data-related task.

Core Features of Pandas

- DataFrame: This two-dimensional, size-mutable structure is the cornerstone of Pandas. It allows for storing data in rows and columns, similar to a table in a database, making it easier to work with complex datasets.

- Series: A one-dimensional labeled array, similar to a column in a DataFrame. Series can hold various types of data, making them highly flexible for different tasks.

- Efficient Indexing: Pandas offers advanced indexing options, allowing users to access data quickly and efficiently, including multi-indexing for handling hierarchical data.

- Data Cleaning: The library includes built-in methods for identifying and handling missing or duplicate entries, as well as for converting data types.

- Aggregation and Grouping: Pandas supports various aggregation functions such as sum, mean, and count, which can be applied to subsets of data for easy comparison and summarization.

Why Pandas is Essential

The flexibility and speed of Pandas make it indispensable for working with large volumes of information. Whether you are cleaning, transforming, or analyzing structured content, this library simplifies many tasks. Its intuitive syntax and powerful functionality allow users to focus on extracting meaningful insights, making it one of the most widely used tools in data-related workflows.
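
The core features described above (DataFrame, Series, label-based indexing, and grouped aggregation) can be seen together in a brief sketch. The table contents and labels are hypothetical.

```python
import pandas as pd

# Hypothetical table with custom row labels.
df = pd.DataFrame(
    {"team": ["a", "a", "b"], "points": [3, 5, 4]},
    index=["r1", "r2", "r3"],
)

points = df["points"]      # selecting one column returns a Series
row = df.loc["r2"]         # label-based row access
summary = df.groupby("team")["points"].agg(["sum", "mean", "count"])

print(type(points).__name__)
print(row["points"])
print(summary)
```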

Best Practices for Data Preprocessing

Preprocessing is a critical step in any analytical workflow, ensuring that the information is clean, consistent, and ready for further analysis. Effective preprocessing involves transforming raw inputs into a form that can be efficiently used for modeling or other tasks. By following best practices, you can optimize the quality of your dataset and enhance the accuracy of the results. Below are some essential practices to consider during this stage.

- Handle Missing Values: One of the first steps in preprocessing is addressing missing or incomplete entries. Depending on the context, you can choose to either remove the rows or fill in gaps using techniques like mean imputation or interpolation.

- Remove Duplicates: Redundant data can skew results. Identifying and eliminating duplicates helps ensure that the information remains representative and avoids bias.

- Standardize Formats: Ensure that all data follows a consistent format, whether it’s for dates, text, or numerical values. This makes it easier to compare and work with the data efficiently.

- Normalize and Scale: For numerical features, it’s often beneficial to normalize or scale values. This ensures that no single feature disproportionately influences the outcome, especially in models sensitive to scale.

- Encode Categorical Variables: Many algorithms require numerical inputs, so encoding categorical variables into numerical representations (e.g., one-hot encoding) is often necessary for successful processing.

- Outlier Detection: Extreme values can distort analysis. Identifying and addressing outliers, either by removing them or transforming the values, is a critical step to maintain data integrity.

By implementing these practices, you ensure that the dataset is free from errors and inconsistencies, setting the stage for more reliable and accurate insights. Proper preprocessing can significantly improve the efficiency and quality of any analytical process.
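
Two of these steps, encoding and scaling, are sketched below with Pandas alone. The data is invented, and min-max scaling is used as one possible choice among several scaling methods.

```python
import pandas as pd

# Hypothetical dataset with one categorical and one numerical feature.
df = pd.DataFrame({
    "color": ["red", "blue", "red"],
    "height": [150.0, 160.0, 170.0],
})

# One-hot encode the categorical column.
encoded = pd.get_dummies(df, columns=["color"])

# Min-max scale the numerical column into the range [0, 1].
h = encoded["height"]
encoded["height_scaled"] = (h - h.min()) / (h.max() - h.min())

print(encoded)
```

In a modeling workflow the minimum and maximum would normally be computed on the training data only and then reused for new data; this sketch skips that distinction.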

Working with Large Datasets in Python

Handling extensive collections of information can be challenging, especially when dealing with memory constraints and processing speed. Efficiently managing large datasets is crucial for ensuring that operations are performed quickly without overloading system resources. Python offers a variety of tools and techniques that can help optimize this process, allowing for faster computation and more manageable workflows. In this section, we will explore strategies for dealing with large volumes of information effectively.

Key Strategies for Efficiently Handling Large Collections

- Use Chunking: Instead of loading the entire dataset into memory at once, break it into smaller chunks. This method enables processing data incrementally, reducing the amount of memory required at any given time.

- Optimized Data Structures: Python’s built-in lists and dictionaries store every element as a separate object, which costs memory and time on large volumes of data. NumPy arrays and pandas tables store values in compact, typed blocks, which usually improves both memory use and speed.

- Lazy Loading: This technique involves loading only the parts of the dataset that are needed at a particular moment. By deferring data loading, you minimize memory usage.

- Out-of-Core Computation: For datasets too large to fit into memory, out-of-core computation allows for operations on data that reside on disk. Libraries like dask enable this functionality, helping users perform computations without overwhelming system resources.

- Efficient File Formats: The format in which data is stored can have a significant impact on processing efficiency. For example, using Parquet or HDF5 formats instead of CSVs can reduce file size and improve read/write speeds.
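
The chunking strategy from the list above can be sketched with the `chunksize` parameter of `pandas.read_csv`. An in-memory string stands in for a large file here, and the chunk size of 4 is only for demonstration.

```python
import io

import pandas as pd

# Stand-in for a large CSV file: a single "value" column with 0..9.
csv_text = "value\n" + "\n".join(str(i) for i in range(10))

total = 0
rows = 0
# With chunksize set, read_csv yields DataFrames of at most 4 rows each.
for chunk in pd.read_csv(io.StringIO(csv_text), chunksize=4):
    total += int(chunk["value"].sum())
    rows += len(chunk)

print(rows, total)
```

Only one chunk is held in memory at a time, so the same loop works for files far larger than available RAM as long as the aggregation can be computed incrementally.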

Tools and Libraries for Managing Large Datasets

| Tool/Library | Features |
|---|---|
| pandas | Efficient handling of structured data in memory; supports indexing and chunked file reading for data that does not fit in memory at once. |
| NumPy | Optimized for numerical computations, supports large arrays with minimal memory overhead. |
| dask | Parallel computing framework designed for handling large datasets with out-of-core computation. |
| vaex | Efficient data manipulation and exploration on large datasets, optimized for speed and memory usage. |

By applying these techniques and using the right tools, it’s possible to manage and process large datasets efficiently, ensuring smooth operations even with vast amounts of information. Whether working with numerical arrays, time-series data, or tables, these strategies allow for seamless scaling and improved performance.

Interpreting Python Output for Data Analysis

When performing tasks in programming, understanding the results produced by your code is essential for making informed decisions. The output generated by your code offers valuable insights, whether it’s numerical values, error messages, or summaries of computations. Correctly interpreting this information helps in identifying issues, verifying results, and ensuring that the operations performed are in line with the expected outcomes. In this section, we’ll explore how to read and understand different types of outputs effectively.

Types of Outputs and How to Interpret Them

- Numerical Results: When working with numerical calculations, the output often consists of values that represent summaries or computations based on the input. Ensure that the result aligns with your expectations, especially for large datasets or complex computations.

- Error Messages: Error messages in Python are critical for diagnosing issues. A traceback reports the location and type of the problem, whether it’s a syntax error or a runtime exception. Logical errors are different: the code runs without any message and simply produces a wrong result, so they can only be caught by checking the output against what you expect.

- Textual Summaries: Python may output textual summaries of computations or data manipulations, such as counts, averages, or statistical information. It’s important to verify that these summaries reflect the correct application of methods to the data set.
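
To get used to reading error output, it can help to trigger a few common exceptions on purpose. The helper `describe_failure` below is a hypothetical function written for this sketch.

```python
def describe_failure(func):
    """Call func and return the exception type and message, if any."""
    try:
        func()
    except Exception as exc:
        return f"{type(exc).__name__}: {exc}"
    return "no error"

messages = [
    describe_failure(lambda: int("abc")),       # bad conversion
    describe_failure(lambda: [1, 2, 3][5]),     # index past the end of a list
    describe_failure(lambda: {"a": 1}["b"]),    # missing dictionary key
    describe_failure(lambda: 1 / 0),            # division by zero
    describe_failure(lambda: sum([1, 2, 3])),   # runs fine
]

for message in messages:
    print(message)
```

The exception type before the colon is usually the fastest clue: it narrows the problem to a conversion, an index, a key, or an arithmetic issue before you read the rest of the message.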

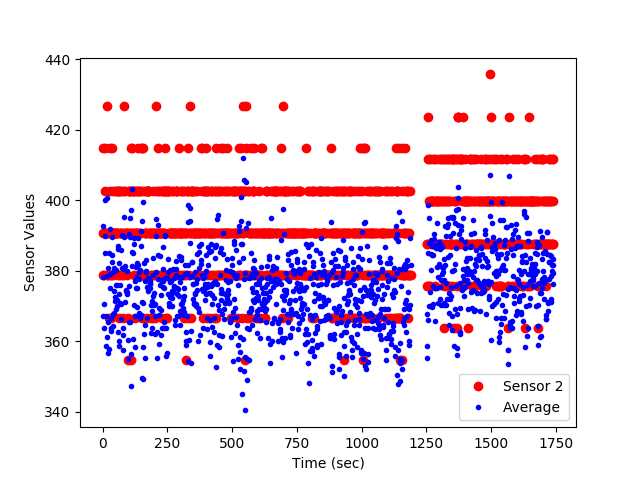

Using Visualizations to Aid Interpretation

Visualizations are another effective way of interpreting the results of computations. By plotting graphs or charts, it becomes easier to identify trends, outliers, and patterns in the output. Using libraries like Matplotlib or Seaborn helps transform numerical outputs into more accessible visual formats, which often makes interpretation simpler and more intuitive.

Once you have a grasp on how to read the output generated by your code, it’s easier to assess whether your operations are yielding the intended results or if adjustments are needed. Understanding output is an ongoing process that helps refine your approach and enhances the efficiency of your work.

Advanced Techniques for Data Analysis

In the world of programming, complex tasks require sophisticated methods to manage and interpret large volumes of information efficiently. Mastering advanced techniques allows practitioners to uncover deeper insights, handle challenges in large datasets, and improve the accuracy and scalability of their operations. These methods go beyond basic computations and enable more nuanced decision-making through advanced algorithms and tools.

Machine Learning for Predictive Modeling

One of the most powerful tools in advanced computational tasks is machine learning. By employing supervised or unsupervised learning algorithms, you can create predictive models that anticipate outcomes based on historical data. Common techniques such as decision trees, random forests, or support vector machines can capture relationships that are not immediately apparent in the raw dataset. For classification models, a good understanding of evaluation metrics such as accuracy, precision, and recall is essential to judge how reliable the predictions are.
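
The three metrics named above can be computed by hand for a binary classifier, which makes their definitions concrete. The labels and predictions below are invented; no model is trained in this sketch.

```python
import numpy as np

# Hypothetical true labels and model predictions (1 = positive class).
y_true = np.array([1, 0, 1, 1, 0, 0, 1, 0])
y_pred = np.array([1, 0, 0, 1, 0, 1, 0, 0])

tp = int(np.sum((y_pred == 1) & (y_true == 1)))   # true positives
tn = int(np.sum((y_pred == 0) & (y_true == 0)))   # true negatives
fp = int(np.sum((y_pred == 1) & (y_true == 0)))   # false positives
fn = int(np.sum((y_pred == 0) & (y_true == 1)))   # false negatives

accuracy = (tp + tn) / len(y_true)   # share of all predictions that are correct
precision = tp / (tp + fp)           # share of predicted positives that are correct
recall = tp / (tp + fn)              # share of actual positives that were found

print(accuracy, precision, recall)
```

The three numbers differ on this data, which illustrates why accuracy alone can hide how a model treats the positive class.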

Natural Language Processing for Textual Data

For tasks involving textual information, advanced techniques like natural language processing (NLP) provide the tools needed to process and extract meaning from unstructured data. NLP techniques, including sentiment analysis, text classification, and topic modeling, can transform raw text into structured formats that reveal hidden patterns and trends. These techniques are crucial for projects that involve analyzing large amounts of unstructured text, such as customer feedback or social media posts.
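
As a very small illustration of turning raw text into a structured form, the sketch below counts word frequencies using only the standard library. The feedback strings and the simple tokenizer are assumptions for this example; real NLP work typically uses dedicated libraries and more careful tokenization.

```python
import re
from collections import Counter

# Hypothetical customer feedback.
feedback = [
    "Great product, great support!",
    "Support was slow.",
]

def tokenize(text):
    """Lowercase the text and split it into alphabetic tokens."""
    return re.findall(r"[a-z']+", text.lower())

counts = Counter(token for doc in feedback for token in tokenize(doc))

print(counts.most_common(2))
```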

By leveraging these advanced methods, you can significantly enhance the depth and breadth of your insights, improving your ability to make data-driven decisions in complex environments. Mastery of these techniques also helps in tackling increasingly intricate datasets and in maintaining scalability as data volumes grow over time.

Common Pitfalls in Data Analysis Exams

A handful of recurring mistakes account for many lost marks in computational assessments. They are easy to miss under time pressure, but each one can invalidate an otherwise sound solution. Knowing these pitfalls beforehand helps you work through tasks more effectively and ensure that the solutions you provide are both accurate and efficient.

Overlooking Data Preparation

One of the most frequent mistakes students make is skipping or rushing through the data preparation phase. Often, raw datasets require significant cleaning and transformation before any meaningful work can be done. Missing out on this crucial step can result in flawed models or misleading conclusions. Below are some typical data preparation mistakes:

| Common Mistakes | Consequences |
|---|---|
| Ignoring missing or inconsistent values | Results in unreliable or biased outcomes |
| Using the wrong encoding or data type | Causes errors in calculations and visualizations |
| Not normalizing or scaling data | Leads to poor model performance or incorrect conclusions |
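
The data type mistake in the table is easy to reproduce. In this sketch an invented price column is read as text because of one non-numeric entry; `pandas.to_numeric` with `errors="coerce"` is one way to repair it.

```python
import pandas as pd

# Hypothetical column where "n/a" forces every value to be stored as text.
df = pd.DataFrame({"price": ["10", "20", "n/a", "30"]})

is_numeric_before = pd.api.types.is_numeric_dtype(df["price"])

# Convert to numbers; entries that cannot be parsed become NaN.
prices = pd.to_numeric(df["price"], errors="coerce")
is_numeric_after = pd.api.types.is_numeric_dtype(prices)

total = prices.sum()   # NaN is skipped by default

print(is_numeric_before, is_numeric_after, total)
```

Checking `df.dtypes` right after loading a dataset is a cheap habit that catches this class of problem before any calculation runs.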

Relying on Default Settings

Another common mistake is depending too much on default settings in libraries or tools. While these defaults may work in some cases, they are often not optimal for every scenario. For example, using default parameters for machine learning algorithms without tuning them can lead to subpar model performance. Always check and adjust the settings based on the specific characteristics of the dataset you’re working with.
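
Defaults can differ even between closely related tools. As a concrete case, NumPy's `std` uses `ddof=0` (population standard deviation) by default, while the Pandas `std` method uses `ddof=1` (sample standard deviation). The data below is invented.

```python
import numpy as np
import pandas as pd

data = [2.0, 4.0, 4.0, 4.0, 5.0, 5.0, 7.0, 9.0]

population_std = np.std(data)         # NumPy default: ddof=0
sample_std = pd.Series(data).std()    # Pandas default: ddof=1
matched = np.std(data, ddof=1)        # explicit setting reproduces the Pandas value

print(population_std, sample_std, matched)
```

Neither default is wrong, but an answer that mixes them without noticing will be inconsistent, so it is worth stating `ddof` explicitly when the distinction matters.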

By avoiding these common mistakes and taking a more thoughtful, systematic approach, you can ensure better results in your computational assessments and be more confident in your problem-solving abilities.

Tips for Time Management During Exams

Effective time management is essential for success when facing challenging assessments. Properly allocating time for each task ensures that all questions are addressed thoroughly, reducing the risk of rushing through the test and making avoidable mistakes. By practicing thoughtful time distribution and maintaining a focused approach, you can maximize your performance and minimize stress.

1. Prioritize Tasks

Start by quickly reviewing the entire test to get a sense of the questions and their difficulty levels. Identify the sections that require more time or effort and tackle them first. This strategy ensures that you don’t run out of time for more complex problems while leaving easier ones for later.

2. Set Time Limits for Each Question

Estimate how long you can spend on each question and stick to it. Setting time limits helps prevent spending too much time on any single task and encourages a balanced approach. If you find yourself stuck, move on to the next question and return to the difficult one later if time permits.

3. Break Tasks into Smaller Steps

For complicated problems, break them down into smaller, manageable steps. This approach helps keep you organized and prevents feeling overwhelmed. Tackling each part systematically ensures you stay on track and don’t waste time trying to solve everything at once.

4. Avoid Perfectionism

Avoid getting caught up in making everything perfect. Focus on providing clear, logical answers rather than obsessing over every small detail. Perfectionism can lead to unnecessary delays, and what matters most is presenting your ideas effectively within the time available.

5. Practice Under Time Constraints

Before the actual assessment, practice solving problems under timed conditions. This will help you get accustomed to the pressure and refine your time management skills. Simulating real test scenarios boosts your confidence and improves your ability to manage time effectively.

By incorporating these strategies into your study and test-taking routine, you can ensure that you make the best use of your available time and increase your chances of success.

How to Prepare for Python Data Exams

Preparing effectively for any assessment related to programming and computational tasks requires a strategic approach. Success depends not only on understanding the core principles but also on honing practical skills and becoming familiar with common problem-solving techniques. A focused, structured study plan ensures that you cover the necessary topics and practice sufficiently before the test.

1. Master Core Programming Concepts

Before diving into more complex topics, it’s essential to strengthen your grasp of fundamental programming concepts. Make sure you are comfortable with:

- Basic syntax and control flow (loops, conditional statements)

- Functions and modularity

- Data structures such as lists, dictionaries, and sets

- File input/output operations

Having a solid foundation will help you solve problems quickly and efficiently during the assessment.

2. Practice Using Key Libraries and Tools

Familiarize yourself with the essential libraries that are frequently used for computational tasks. Knowing how to work with tools like Pandas, NumPy, and Matplotlib is crucial for performing complex operations, handling large datasets, and visualizing results. Practice using these libraries by:

- Importing data from various formats (CSV, Excel, JSON, etc.)

- Cleaning and processing data (handling missing values, outliers)

- Performing basic mathematical operations

- Creating and customizing plots and charts for results

Regular practice will not only make you more efficient but also help you understand common pitfalls and best practices.
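
For the first practice item, importing data, the sketch below loads the same invented records from CSV and JSON text. In-memory strings stand in for files so the example is self-contained; with real files you would pass a path instead.

```python
import io

import pandas as pd

# Hypothetical records in two formats.
csv_text = "name,score\nana,90\nben,85\n"
json_text = '[{"name": "ana", "score": 90}, {"name": "ben", "score": 85}]'

from_csv = pd.read_csv(io.StringIO(csv_text))
from_json = pd.read_json(io.StringIO(json_text))

print(from_csv)
print(from_json)
```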

3. Solve Practice Problems

The best way to prepare is to work on as many practice problems as possible. These can range from basic exercises to more advanced tasks. Look for online platforms that offer problem sets, or revisit past assignments. Solving problems under timed conditions will also help you develop the necessary speed and accuracy for the assessment.

Additionally, try to explain your solutions clearly, as this will help reinforce your understanding and improve your ability to articulate solutions during the assessment.

4. Review and Understand Common Error Types

Errors are inevitable during programming, but understanding the types of errors you may encounter can help you troubleshoot quickly during the test. Be sure to:

- Understand common syntax and runtime errors

- Learn how to read and interpret error messages

- Familiarize yourself with debugging tools and techniques

With sufficient practice, you will become more adept at identifying and fixing issues efficiently during the assessment.

By combining these approaches (strengthening your programming knowledge, practicing common techniques, and learning to troubleshoot effectively), you’ll be well-prepared to succeed in any programming-related assessment.