Gaining a solid understanding of applied statistical methods is essential for excelling in academic evaluations. These assessments often challenge individuals to interpret models, analyze datasets, and draw meaningful conclusions. Proper preparation requires familiarity with common formats and the ability to apply theoretical knowledge in practical scenarios.

To succeed, one must focus on critical areas such as model estimation, hypothesis evaluation, and data interpretation. Understanding key principles and practicing problem-solving techniques can significantly improve performance. Developing clear strategies for tackling diverse tasks is equally important for achieving top results.

In this guide, you’ll find a variety of practice scenarios, tips for improving efficiency, and insights into common pitfalls. These tools are designed to enhance confidence and ensure thorough readiness for academic challenges in the field of quantitative analysis.

Understanding Common Topics in Quantitative Analysis Assessments

Mastering applied statistical methods involves more than memorizing formulas; it requires critical thinking, problem-solving skills, and the ability to analyze real-world data. These evaluations often test both theoretical knowledge and practical application, providing an opportunity to demonstrate comprehensive understanding.

- Model Interpretation: Learn how to explain the significance of variables and evaluate the fit of statistical models.

- Data Analysis Techniques: Develop the skills needed to clean, interpret, and present data effectively.

- Hypothesis Testing: Focus on identifying appropriate methods for va

Understanding Key Concepts in Econometrics

To analyze data effectively, it’s crucial to grasp the fundamental principles that underpin statistical modeling. These key concepts allow for the creation of accurate models, ensuring data is interpreted in a meaningful way. By focusing on core methodologies, one can better understand how variables interact and influence each other, leading to more reliable insights and predictions.

The following table highlights some of the most important areas to focus on, their significance, and how they apply in practice:

| Concept | Purpose | Practical Application |
| --- | --- | --- |
| Regression Analysis | Explores relationships between dependent and independent variables | Used in forecasting, trend analysis, and policy evaluation |
| Correlation | Measures the strength and direction of relationships | Important in market research, financial analysis, and risk assessment |
| Sampling Methods | Determines how data is selected for analysis | Critical for surveys, experiments, and generalizing findings to larger populations |
| Hypothesis Testing | Evaluates the validity of assumptions based on sample data | Used to confirm or reject theories in scientific research and business decisions |

By mastering these concepts, one can more confidently navigate complex data problems and apply statistical techniques to solve real-world challenges.

Common Mistakes in Econometrics Exams

Many students struggle with certain pitfalls when tackling statistical problems, often due to misconceptions or inadequate preparation. Recognizing these errors is key to improving accuracy and performance. This section highlights frequent missteps and provides insights on how to avoid them effectively.

Errors in Interpretation

One of the most significant challenges involves misinterpreting data or results, leading to incorrect conclusions. This often stems from a lack of clarity on how to analyze relationships or assess the reliability of outcomes.

- Confusing correlation with causation.

- Failing to account for outliers or anomalies in the dataset.

- Overlooking assumptions underlying statistical models.

Technical Missteps

Technical errors often arise from misusing formulas, tools, or techniques. These mistakes can undermine the credibility of findings and impact final scores.

- Incorrectly applying regression methods.

- Using inappropriate sample sizes or ignoring data limitations.

- Rounding errors that distort calculations.

By understanding these common issues and implementing strategies to address them, students can enhance their analytical skills and achieve more accurate outcomes in assessments.

How to Approach Hypothesis Testing

Testing assumptions is a critical aspect of data analysis, as it allows for the validation or rejection of proposed relationships between variables. To approach this process effectively, one must follow a structured methodology to ensure that conclusions are both reliable and meaningful.

Start by clearly defining the null and alternative hypotheses. The null hypothesis typically represents the idea that there is no effect or relationship, while the alternative states that an effect or relationship exists. Once these are established, proceed with selecting the appropriate statistical test based on the data type and research objectives.

Next, determine the significance level, commonly set at 0.05, which is the probability of rejecting the null hypothesis when it is actually true (a Type I error). Collect the necessary data, conduct the test, and interpret the resulting p-value. A p-value less than the significance level leads to rejecting the null hypothesis, while a p-value greater than the significance level means there is insufficient evidence to reject it. Failing to reject the null hypothesis is not proof that it is true.

Finally, consider the power of the test to ensure that the sample size is adequate to detect a true effect, if one exists. This thoughtful approach minimizes errors and helps in making well-supported decisions based on statistical evidence.
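The steps above can be sketched in a few lines of Python. This is a minimal illustration rather than a definitive procedure: it uses a two-sided one-sample z-test, which assumes the population standard deviation is known, and the scores, the hypothesized mean `mu0`, and `sigma` are all made-up values chosen for the example. When the standard deviation is estimated from the sample, a t-test would normally be used instead.

```python
from math import sqrt
from statistics import NormalDist, mean

def two_sided_z_test(sample, mu0, sigma, alpha=0.05):
    """Test H0: population mean == mu0 against H1: population mean != mu0.

    Assumes the population standard deviation `sigma` is known.
    """
    n = len(sample)
    z = (mean(sample) - mu0) / (sigma / sqrt(n))
    # Two-sided p-value: chance of a statistic at least this extreme if H0 is true.
    p_value = 2 * (1 - NormalDist().cdf(abs(z)))
    return z, p_value, p_value < alpha

# Hypothetical sample of exam scores; H0 says the true mean is 70.
scores = [72, 75, 68, 74, 77, 71, 73, 76, 70, 74]
z, p_value, reject = two_sided_z_test(scores, mu0=70, sigma=5)
print(f"z = {z:.3f}, p = {p_value:.4f}, reject H0 at 5%: {reject}")
```

Fixing `alpha` before looking at the data, as the function signature does, mirrors the order of the steps described above.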

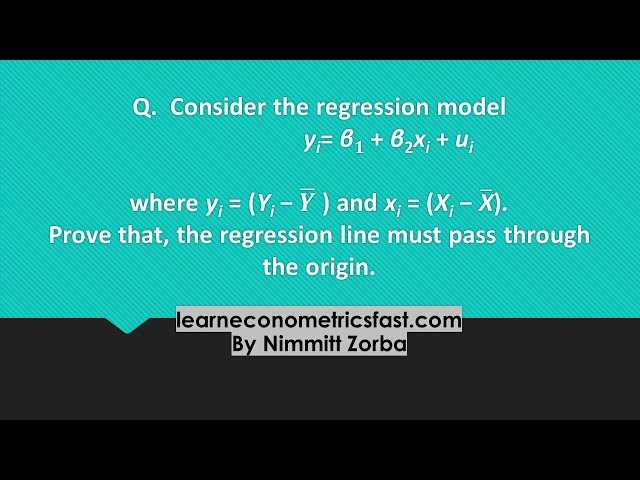

Interpreting Regression Results Effectively

Understanding the output from statistical modeling is essential for drawing meaningful conclusions from data. Regression results provide insight into the relationships between variables, but interpreting these results accurately requires careful attention to detail and an understanding of the underlying statistical principles.

Key Elements of Regression Output

When reviewing regression results, there are several critical components to consider. These elements help in evaluating the strength and direction of relationships between variables.

- Coefficients: These represent the estimated change in the dependent variable for a one-unit increase in a predictor, holding the other predictors constant. A positive coefficient suggests a direct relationship, while a negative coefficient indicates an inverse relationship.

- Standard Error: This measures the precision of the coefficient estimates. Smaller standard errors suggest more reliable estimates.

- t-Statistic: This is the coefficient divided by its standard error. A larger absolute value provides stronger evidence against the null hypothesis that the true coefficient is zero.

- p-Value: A p-value less than the significance level (commonly 0.05) indicates that the relationship between the predictor and the outcome is statistically significant.
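To see where these quantities come from, the sketch below fits a one-predictor model by ordinary least squares using only the standard library. The `hours` and `score` data are invented for illustration, and the function name `simple_ols` is a hypothetical helper, not part of any package. It reports the slope, its standard error, and the t-statistic; a p-value would then be read from a t distribution with n - 2 degrees of freedom.

```python
from math import sqrt

def simple_ols(x, y):
    """Fit y = intercept + slope * x by ordinary least squares."""
    n = len(x)
    x_bar = sum(x) / n
    y_bar = sum(y) / n
    sxx = sum((xi - x_bar) ** 2 for xi in x)
    sxy = sum((xi - x_bar) * (yi - y_bar) for xi, yi in zip(x, y))
    slope = sxy / sxx
    intercept = y_bar - slope * x_bar
    residuals = [yi - (intercept + slope * xi) for xi, yi in zip(x, y)]
    # Residual variance uses n - 2 degrees of freedom (two estimated parameters).
    sigma2 = sum(e ** 2 for e in residuals) / (n - 2)
    se_slope = sqrt(sigma2 / sxx)
    return {
        "intercept": intercept,
        "slope": slope,
        "se_slope": se_slope,
        "t_stat": slope / se_slope,
    }

# Hypothetical data: hours studied and exam score.
hours = [1, 2, 3, 4, 5]
score = [52, 55, 61, 64, 68]
fit = simple_ols(hours, score)
print({name: round(value, 3) for name, value in fit.items()})
```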

Assessing Model Fit

In addition to interpreting individual coefficients, it is crucial to evaluate how well the model fits the data as a whole. Key metrics include:

- R-Squared: This value indicates how much of the variation in the dependent variable is explained by the independent variables in the model. Higher values suggest a better fit.

- Adjusted R-Squared: Unlike R-squared, this metric accounts for the number of predictors in the model, making it a more reliable measure when comparing models with different numbers of variables.

- Residuals: The difference between observed and predicted values. Analyzing residuals helps identify potential model issues, such as non-linearity or outliers.
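The fit metrics listed above can be computed directly from observed and fitted values. The following is a small sketch under stated assumptions: the `observed` and `predicted` numbers are hypothetical, and `fit_metrics` is an illustrative helper name. The adjusted value uses the standard formula 1 - (1 - R²)(n - 1)/(n - k - 1), where k is the number of predictors.

```python
def fit_metrics(y, y_hat, n_predictors):
    """Return residuals, R-squared, and adjusted R-squared."""
    n = len(y)
    y_bar = sum(y) / n
    residuals = [yi - fi for yi, fi in zip(y, y_hat)]
    ss_res = sum(e ** 2 for e in residuals)        # unexplained variation
    ss_tot = sum((yi - y_bar) ** 2 for yi in y)    # total variation
    r2 = 1 - ss_res / ss_tot
    # The adjustment penalizes each additional predictor.
    adj_r2 = 1 - (1 - r2) * (n - 1) / (n - n_predictors - 1)
    return residuals, r2, adj_r2

# Hypothetical observed values and fitted values from a one-predictor model.
observed = [52, 55, 61, 64, 68]
predicted = [51.8, 55.9, 60.0, 64.1, 68.2]
residuals, r2, adj_r2 = fit_metrics(observed, predicted, n_predictors=1)
print(f"R^2 = {r2:.4f}, adjusted R^2 = {adj_r2:.4f}")
```

Plotting or simply scanning `residuals` for a systematic pattern is a quick first check for non-linearity or outliers.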

By carefully considering these elements, one can accurately interpret regression results and make data-driven decisions based on robust statistical analysis.

Tips for Answering Data Analysis Questions

When tasked with analyzing datasets, clear and structured responses are key to effectively demonstrating your understanding. The goal is to not only interpret the data but also to provide insights backed by statistical evidence. The following guidelines can help in crafting well-organized and thoughtful responses to data analysis tasks.

Understand the Problem Clearly

Before diving into the analysis, make sure to fully comprehend the problem at hand. Identify the objectives and focus on the key variables involved. Understanding the context allows for more precise interpretations of the results and ensures that the analysis aligns with the question’s requirements.

Step-by-Step Approach

Break the analysis into manageable steps. This methodical approach helps ensure that all aspects of the data are addressed systematically. Common steps include:

- Data cleaning: Ensure the dataset is free from errors or inconsistencies that could distort the analysis.

- Exploratory Data Analysis (EDA): Visualize and summarize the data to uncover trends, patterns, or outliers.

- Modeling: Choose an appropriate statistical model based on the nature of the data and the problem.

- Interpretation: Draw conclusions from the results, providing meaningful insights related to the original question.

By following a structured approach, you not only improve the clarity of your response but also demonstrate a thorough understanding of the process required to tackle the problem.

Econometric Models You Should Know

In data analysis, selecting the right model is crucial for drawing accurate conclusions. Understanding various statistical frameworks helps in addressing different types of research questions and interpreting relationships between variables. Here are some essential models that every analyst should be familiar with, each serving a specific purpose in quantitative analysis.

Linear Regression Model

The linear regression model is a fundamental tool for predicting outcomes based on one or more explanatory variables. It is widely used due to its simplicity and ease of interpretation, making it a common choice for many types of analysis.

Logistic Regression Model

Logistic regression is ideal when the dependent variable is binary, for example, when predicting outcomes like yes/no or success/failure. It models the probability of an event occurring, making it essential in classification tasks.
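As a rough illustration of how the model turns a linear index into a probability, the sketch below applies the logistic function to a single predictor. The coefficients `b0` and `b1` are hypothetical values chosen for the example, not estimates from real data.

```python
from math import exp

def logistic_probability(intercept, slope, x):
    """Return P(y = 1 | x) under a one-predictor logistic model."""
    return 1 / (1 + exp(-(intercept + slope * x)))

# Hypothetical coefficients: the log-odds of passing rise by 0.8 per hour studied.
b0, b1 = -4.0, 0.8
for hours in (2, 5, 8):
    print(hours, round(logistic_probability(b0, b1, hours), 3))
```

The output always lies strictly between 0 and 1, which is why this form suits binary outcomes better than a straight line.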

Time Series Models

Time series analysis models are used to understand data that is collected over time. These models help to identify trends, seasonal effects, and cyclical patterns within the data. Popular time series models include ARIMA and GARCH.

Table of Common Econometric Models

| Model Type | Use Case | Key Features |
| --- | --- | --- |
| Linear Regression | Predict continuous outcomes | Assumes a linear relationship between dependent and independent variables |
| Logistic Regression | Predict binary outcomes | Models probability of an event |
| Time Series Analysis | Analyze data over time | Identifies trends, seasonality, and cyclic patterns |
| Panel Data Models | Analyze multi-dimensional data | Uses both cross-sectional and time-series data |

Mastering these models will equip you with the tools necessary to analyze a wide variety of datasets, drawing meaningful insights for both theoretical and practical applications.

Common Econometrics Exam Question Formats

In assessments of quantitative data analysis, it is important to be familiar with the variety of question types that can test one’s understanding of theoretical concepts and practical applications. Different formats may assess how well you can apply models, interpret results, or evaluate assumptions within a dataset. Below are common types of formats encountered in these evaluations, each testing a specific skill or knowledge area.

Conceptual Understanding

These questions typically require a thorough understanding of the theoretical underpinnings of various models and methods. They test your ability to explain concepts clearly and demonstrate the fundamental principles behind statistical techniques.

- Define and explain the purpose of hypothesis testing.

- Describe the assumptions of linear regression and their implications.

- Explain the difference between correlation and causation.

Practical Applications

These questions focus on your ability to apply methods and models to real-world scenarios. You may be provided with data and asked to perform analysis, interpret output, or derive conclusions.

- Interpret the results of a regression model output.

- Analyze a given dataset and suggest the appropriate model for prediction.

- Calculate p-values and confidence intervals from sample data.

Model Evaluation

Here, you will be asked to evaluate the validity of a model, identify potential issues, or critique the assumptions made during analysis. These questions aim to test your ability to critically assess the appropriateness of methods used in practice.

- Assess the potential problems with multicollinearity in a given regression model.

- Explain the consequences of heteroskedasticity on regression results.

- Discuss the limitations of a time series model in forecasting future values.

Being prepared for these formats helps in understanding the variety of questions that may be presented and enhances your ability to think critically about methods and their real-world applications.

Best Practices for Writing Econometrics Essays

Writing essays on quantitative analysis requires a clear structure, precise argumentation, and a strong understanding of statistical methods. Whether you’re analyzing a model, interpreting results, or evaluating a set of data, it’s essential to present your thoughts logically and persuasively. Below are key recommendations to follow when crafting your analysis papers.

Organize Your Thoughts Clearly

Begin by outlining your essay to ensure a coherent flow of ideas. Start with a brief introduction that sets the context, followed by the methodology section where you explain your approach. Conclude with a discussion of your results and any implications. This logical structure helps readers follow your analysis with ease.

- Introduction: Provide a concise overview of the issue you’re addressing and the methods you’ll use.

- Methodology: Explain the models or techniques used to analyze the data. Be clear about the assumptions and limitations.

- Results: Present your findings in a clear, structured manner, emphasizing the key points.

- Conclusion: Summarize your main findings, their implications, and suggest potential areas for further research.

Use Data and Visuals Effectively

Support your arguments with appropriate data and visuals. Tables, charts, and graphs are powerful tools to convey complex information more effectively. Be sure to explain any figures presented so that readers understand their relevance to your analysis.

- Interpret Data Carefully: Avoid presenting raw data without context. Always explain what the numbers represent and how they support your argument.

- Visual Clarity: When using graphs or tables, ensure they are easy to read and accurately labeled.

- Use Visuals Sparingly: Only include visuals when they add value to your argument. Overloading your essay with unnecessary charts can detract from your analysis.

By following these best practices, you can write essays that not only demonstrate a strong understanding of the subject but also communicate your findings effectively to the reader.

Strategies for Time Management During Exams

Effective time management is crucial when facing any timed assessment. Proper planning allows you to allocate enough time to tackle each section of the test, ensuring you don’t rush through critical components or leave questions unanswered. Below are some key strategies to optimize your performance under time constraints.

- Familiarize Yourself with the Format: Understand the structure of the test beforehand. Knowing how many questions or sections you will face can help you plan how much time to allocate to each part.

- Prioritize Easy Questions: Start with questions that you feel most confident about. This approach builds momentum and allows you to secure easy points quickly, leaving more time for the challenging ones.

- Time Your Practice: Regularly practice with timed mock tests. This helps you become more familiar with working under time pressure and improves your ability to pace yourself during the actual test.

- Set a Time Limit per Question: Allocate a specific amount of time for each question or section. If you find yourself stuck on a question, move on and come back to it later if time allows.

- Leave Time for Review: Always leave a few minutes at the end to review your answers. This final check can help you catch any mistakes or refine incomplete responses.

By applying these strategies, you can manage your time more effectively, reduce stress, and ensure you make the most of the time you have to complete the test.

How to Solve Econometric Calculations Quickly

Efficiently solving quantitative problems during an assessment requires a strategic approach. Understanding the methods and applying them swiftly can significantly improve your performance under time pressure. The following techniques can help you tackle complex calculations more quickly and accurately.

Prepare with Practice

Before any timed task, practice is essential. By familiarizing yourself with the various formulas and procedures used in statistical analysis, you will be able to apply them more efficiently when the time is limited.

- Work Through Example Problems: Regularly solve practice problems to become quicker at applying techniques without needing to recheck steps.

- Understand Key Formulas: Ensure that you have a deep understanding of key formulas and their applications, such as regression equations or hypothesis testing methods.

- Memorize Common Steps: Remembering the most frequently used steps in calculations (like transforming variables or calculating coefficients) can save valuable time.

Use Time-Saving Strategies

During the actual test, use strategies to ensure you do not waste time on any one problem or calculation.

- Break Problems into Smaller Parts: Divide complex problems into simpler, manageable steps. Tackling smaller pieces makes it easier to stay organized and reduces mistakes.

- Skip Challenging Problems: If a problem seems too complicated or time-consuming, move on and come back to it later. This ensures you complete the easier questions first, securing those points.

- Use Calculators or Software Efficiently: If available, utilize calculators or software to perform repetitive calculations. However, make sure you understand the results and their implications.

By practicing these strategies, you can enhance your ability to complete calculations quickly and with confidence, allowing you to maximize your performance on assessments.

Understanding Sampling and Estimation Techniques

Sampling and estimation are foundational elements of statistical analysis. They allow researchers to make inferences about a larger population based on data collected from a smaller subset. This process helps ensure that conclusions drawn are representative and valid while minimizing the cost and time associated with gathering data from every member of the population.

Sampling Methods

There are several methods for selecting a sample that can provide reliable insights about the whole population. The choice of method impacts the accuracy and generalizability of the results.

- Simple Random Sampling: Each member of the population has an equal chance of being selected, ensuring an unbiased sample.

- Stratified Sampling: The population is divided into subgroups, and samples are taken from each subgroup to ensure representation across key categories.

- Cluster Sampling: The population is divided into clusters, and some clusters are randomly selected for study. This method is useful when populations are large and spread out geographically.
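The first two methods can be sketched with Python's standard `random` module. The population of `(id, region)` pairs below is invented for illustration, and both function names are hypothetical helpers. The stratified version draws the same fraction from every stratum, which is one common design among several.

```python
import random

def simple_random_sample(population, k, seed=0):
    """Draw k units without replacement; every unit is equally likely."""
    rng = random.Random(seed)
    return rng.sample(population, k)

def stratified_sample(population, stratum_of, fraction, seed=0):
    """Draw the same fraction of units from each stratum."""
    rng = random.Random(seed)
    strata = {}
    for unit in population:
        strata.setdefault(stratum_of(unit), []).append(unit)
    sample = []
    for members in strata.values():
        k = max(1, round(fraction * len(members)))
        sample.extend(rng.sample(members, k))
    return sample

# Hypothetical population: 80 units in the north, 20 in the south.
population = [(i, "north" if i < 80 else "south") for i in range(100)]
srs = simple_random_sample(population, 10)
strat = stratified_sample(population, lambda unit: unit[1], 0.1)
print(len(srs), sum(1 for _, region in strat if region == "south"))
```

With simple random sampling the number of southern units in the sample varies from draw to draw, whereas the stratified draw fixes it in proportion to the stratum size.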

Estimation Techniques

Estimation involves using sample data to estimate population parameters. The two main types of estimation are point estimation and interval estimation.

- Point Estimation: This involves using sample statistics (like the sample mean) to estimate the population parameter (like the population mean).

- Interval Estimation: This provides a range of values within which the true population parameter is expected to lie, offering a degree of confidence about the estimate.

- Maximum Likelihood Estimation: A method that finds the values of parameters that make the observed data most likely under a given model.
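Point and interval estimation for a mean can be illustrated as follows. This is a sketch under a normal approximation, which is an assumption of the example: with a sample as small as the hypothetical one below, a critical value from the t distribution would usually be preferred and would give a slightly wider interval.

```python
from math import sqrt
from statistics import NormalDist, mean, stdev

def mean_confidence_interval(sample, confidence=0.95):
    """Return the sample mean and a normal-approximation interval around it."""
    n = len(sample)
    point = mean(sample)                      # point estimate
    z = NormalDist().inv_cdf(1 - (1 - confidence) / 2)
    margin = z * stdev(sample) / sqrt(n)      # critical value times standard error
    return point, (point - margin, point + margin)

# Hypothetical sample of exam scores.
scores = [72, 75, 68, 74, 77, 71, 73, 76, 70, 74]
point, (lower, upper) = mean_confidence_interval(scores)
print(f"point estimate = {point}, 95% interval = ({lower:.2f}, {upper:.2f})")
```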

By mastering these techniques, analysts can draw more accurate and reliable conclusions from their data, ultimately improving the quality of decision-making processes.

Econometric Theories to Focus On

In the field of statistical analysis, several key theories provide the framework for analyzing relationships between variables and predicting outcomes. These theories help analysts understand how to model data effectively, test hypotheses, and make accurate inferences about real-world phenomena. Mastery of these theories is essential for anyone looking to advance their understanding of data modeling and statistical inference.

Key Theories to Study

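- Classical Linear Regression Model (CLRM): This framework is foundational in modeling the relationship between dependent and independent variables. It assumes a relationship that is linear in the parameters, regressors that are not perfectly collinear, and error terms with zero conditional mean, constant variance, and no correlation across observations. Normality of the errors is an additional assumption, needed for exact small-sample t and F tests but not for the Gauss-Markov result.

- Gauss-Markov Theorem: This theorem states that ordinary least squares (OLS) estimators are the best linear unbiased estimators (BLUE), meaning they have the smallest variance among all linear unbiased estimators, when the assumptions of the classical linear regression model hold.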

- Instrumental Variables (IV): This theory is crucial when dealing with endogeneity issues in regression analysis. It suggests using external variables, known as instruments, to help obtain consistent estimators when independent variables are correlated with the error term.

- Generalized Least Squares (GLS): This extends the OLS method to handle situations where the error terms have non-constant variance or are correlated. GLS is particularly useful in the presence of heteroskedasticity or autocorrelation.

- Maximum Likelihood Estimation (MLE): A method used for estimating the parameters of a statistical model by maximizing the likelihood function. It is widely applicable, especially for complex models that do not fit within the classical assumptions of linear regression.

- Panel Data Models: These models deal with data that follows multiple subjects over time, enabling researchers to account for both individual and time-based variations. Fixed effects and random effects models are often used in panel data analysis.
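For reference, three of the estimators named above can be written compactly in matrix form. The notation is an assumption of this sketch rather than something fixed by the guide: y is the n × 1 outcome vector, X the n × k regressor matrix, Ω the error covariance matrix (up to scale), and Z an instrument matrix with the same number of columns as X (the just-identified case).

```latex
\begin{align*}
y &= X\beta + \varepsilon
  && \text{linear model} \\
\hat{\beta}_{\mathrm{OLS}} &= (X^{\top}X)^{-1}X^{\top}y
  && \text{BLUE under the Gauss-Markov assumptions} \\
\hat{\beta}_{\mathrm{GLS}} &= (X^{\top}\Omega^{-1}X)^{-1}X^{\top}\Omega^{-1}y
  && \text{allows heteroskedastic or correlated errors} \\
\hat{\beta}_{\mathrm{IV}} &= (Z^{\top}X)^{-1}Z^{\top}y
  && \text{consistent when } X \text{ is correlated with } \varepsilon
\end{align*}
```

Setting Ω to the identity matrix reduces GLS to OLS, and setting Z = X reduces IV to OLS, which is a useful way to remember how the three relate.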

Focusing on these core theories provides a solid foundation for understanding how data can be modeled, analyzed, and interpreted. Their application enables more accurate predictions and better decision-making in real-world contexts.

Dealing with Multicollinearity in Exams

In the context of statistical modeling, multicollinearity occurs when two or more predictor variables are highly correlated, making it difficult to estimate the individual effect of each variable on the dependent variable. This issue can undermine the reliability of the estimated coefficients, leading to misleading conclusions. Understanding how to identify and address multicollinearity is crucial for analyzing data effectively and ensuring accurate results.

Identifying Multicollinearity

- Correlation Matrix: Examining the correlation between independent variables is one of the simplest ways to detect multicollinearity. A high correlation (typically above 0.8 or 0.9) suggests potential problems.

- Variance Inflation Factor (VIF): VIF quantifies how much the variance of a regression coefficient is inflated due to collinearity with other variables. A VIF value above 10 often indicates significant multicollinearity.

- Condition Index: A condition index above 30 may signal issues with multicollinearity, as it reflects the degree of multicollinearity in the model.
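The first two diagnostics can be illustrated for the simplest case of two predictors, where the VIF reduces to 1 / (1 - r²) with r the correlation between them. The `income` and `spending` figures are hypothetical, and the helper names are made up for this sketch. With more than two predictors, r² is replaced by the R-squared from regressing each predictor on all the others.

```python
from math import sqrt

def pearson_r(a, b):
    """Pearson correlation between two equal-length sequences."""
    n = len(a)
    a_bar, b_bar = sum(a) / n, sum(b) / n
    cov = sum((ai - a_bar) * (bi - b_bar) for ai, bi in zip(a, b))
    var_a = sum((ai - a_bar) ** 2 for ai in a)
    var_b = sum((bi - b_bar) ** 2 for bi in b)
    return cov / sqrt(var_a * var_b)

def vif_two_predictors(x1, x2):
    """VIF when the model has exactly two predictors: 1 / (1 - r^2)."""
    r = pearson_r(x1, x2)
    return 1 / (1 - r ** 2)

# Hypothetical predictors that move almost in lockstep.
income = [30, 35, 42, 48, 55, 61]
spending = [25, 31, 35, 43, 47, 55]
r = pearson_r(income, spending)
vif = vif_two_predictors(income, spending)
print(f"r = {r:.3f}, VIF = {vif:.1f}")
```

Here both rules of thumb mentioned above are exceeded, so the individual coefficients on these two predictors would be estimated imprecisely.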

Strategies for Addressing Multicollinearity

- Remove Highly Correlated Variables: One approach is to exclude one of the correlated predictors, especially if it does not add unique information to the model.

- Combine Variables: In some cases, combining highly correlated variables into a single index or composite measure can reduce multicollinearity.

- Regularization Techniques: Methods such as ridge regression or lasso regression can help mitigate multicollinearity by applying penalties to large coefficients, thus improving the model’s stability.

- Increase Sample Size: Larger sample sizes can sometimes reduce the impact of multicollinearity, as they provide more information for estimating the coefficients.

Addressing multicollinearity effectively is key to producing reliable models and making valid inferences. Recognizing the problem and implementing appropriate strategies is essential for successful statistical analysis.

Preparing for Advanced Statistical Inquiries

When tackling complex theoretical and practical challenges, it’s important to approach each problem systematically. Building a deep understanding of core principles and developing the ability to apply them in novel scenarios will significantly enhance your ability to tackle difficult tasks. The key is to combine conceptual clarity with hands-on practice, ensuring that you’re ready to engage with more intricate models and analytical techniques.

Mastering Core Techniques

- Understand Key Assumptions: Many advanced problems are rooted in specific assumptions about data and relationships. Be clear on the assumptions that underlie methods such as ordinary least squares, instrumental variables, or maximum likelihood estimation.

- Practice Complex Models: Work through multiple examples involving systems of equations, nonlinear models, or panel data analysis. Familiarity with these topics will make them easier to manage in more demanding scenarios.

- Use Advanced Software Tools: Many problems require advanced computational methods. Proficiency in statistical software such as R, Stata, or Python for data analysis and model estimation will be a critical asset in addressing more sophisticated inquiries.

Approaching Difficult Problems

- Break Down the Problem: Don’t be intimidated by complex problems. Divide them into manageable parts, solve each step methodically, and integrate the results gradually.

- Look for Patterns: Often, solutions to complex problems emerge when you recognize patterns from earlier problems you’ve solved. Drawing on this familiarity can save time and avoid unnecessary mistakes.

- Check Your Work: Ensure that all assumptions hold and that the results are internally consistent. Advanced problems can sometimes lead to errors in logic, so double-checking is crucial to avoid faulty conclusions.

By honing these skills, you’ll be better equipped to handle intricate challenges, ensuring you can navigate the complexities of statistical analysis with confidence and precision.

How to Review Your Responses Effectively

Reviewing your work after completing any written assessment is crucial for ensuring the quality and correctness of your responses. A thorough review allows you to catch any mistakes, refine your arguments, and ensure that your answers are fully aligned with the required objectives. It’s essential to not only look for errors but also to ensure that your approach is coherent and logical throughout.

Key Steps in Reviewing Your Work

- Double-check calculations: Ensure all arithmetic is accurate and that you’ve followed the correct procedures in solving mathematical problems. Even small errors can result in significant deviations in your results.

- Review your reasoning: Revisit the logic behind each part of your response. Check that each step flows smoothly and leads to a valid conclusion. If something feels unclear, reconsider your approach.

- Examine your assumptions: Verify that any assumptions you’ve made during the process are valid and do not lead to incorrect conclusions. Unsupported assumptions can weaken your argument.

Common Mistakes to Look Out For

- Misinterpreting variables: Ensure that you are using each variable correctly and consistently throughout your response. Misinterpretation can skew results and undermine your conclusions.

- Inconsistent units: Pay attention to the units in your calculations and ensure they are consistent across all steps. Inconsistent units can lead to errors in the final outcome.

- Rushing through final checks: Often, the final steps are where small mistakes can slip through. Take your time to carefully review each part of your work, especially your final answer.

Checklist for Effective Review

| Review Step | Action | Common Issue |
| --- | --- | --- |
| Step 1 | Recheck all calculations | Arithmetic errors |
| Step 2 | Ensure logical consistency | Disconnected reasoning |
| Step 3 | Verify assumptions | Invalid assumptions |
| Step 4 | Check variable usage | Incorrect variable use |
| Step 5 | Confirm unit consistency | Inconsistent units |

By following these review steps and paying attention to common mistakes, you can ensure that your work is well-organized, accurate, and logically sound, leading to stronger results.