Preparing for a test on data processing means mastering a range of topics that test your understanding of how information is structured, stored, and accessed. Whether you’re a beginner or looking to deepen your expertise, this guide covers the areas you need to focus on, with an emphasis on working with large sets of information in real-world scenarios.

Focus on key principles such as data organization, security measures, and the various techniques used for efficient retrieval and modification. Grasping these concepts not only prepares you for assessments but also sharpens your practical skills for professional tasks.

Thorough practice with complex problem-solving exercises is crucial for building a solid foundation. Whether dealing with structuring data or troubleshooting performance issues, familiarity with these concepts will set you apart when facing any challenge in this field.

Database Management Systems Exam Preparation Guide

To succeed in assessments focused on data organization and retrieval, it’s essential to approach your preparation strategically. A strong foundation in the core principles will ensure you’re equipped to solve complex problems quickly and efficiently. Understanding the underlying theories behind the techniques used for data manipulation, security, and optimization is crucial for achieving top results.

Key Topics to Focus On

Familiarize yourself with the different models for storing and retrieving information. Focus on understanding normalization, indexing methods, and how data integrity is maintained through various techniques. These are the concepts most likely to appear in a practical scenario, and mastering them will give you the confidence to handle challenging tasks. Additionally, paying attention to performance optimization and security practices will make you well-rounded and ready for any question that may arise.

Effective Study Methods

Practice is the key to mastering the material. Solve past problems, explore common mistakes, and try to simulate real-life situations. This hands-on approach will sharpen your ability to think critically under pressure. Make sure to review theoretical knowledge, but don’t neglect the importance of applying that knowledge to solve practical problems.

Key Concepts in Database Design

When structuring large volumes of information, understanding the principles behind effective design is essential. A well-structured framework ensures that data can be easily accessed, updated, and maintained. Several concepts play a critical role in shaping the efficiency and scalability of these structures, guiding how they interact and support business needs.

Core Principles to Consider

Before diving into the specific components, it’s important to understand the fundamental aspects of organizing data effectively:

- Data Integrity – Ensuring accuracy and consistency in the data throughout its lifecycle.

- Normalization – Reducing redundancy and update anomalies by organizing data into well-defined, related tables.

- Scalability – Designing systems that can handle increasing amounts of information without sacrificing performance.

- Security – Protecting sensitive data through access control and encryption techniques.

Important Design Techniques

Several methodologies help optimize the process of structuring information. Here are some key techniques commonly used in designing efficient structures:

- Entity-Relationship (ER) Modeling – A visual representation of the data entities and their relationships.

- Schema Design – Creating logical structures that outline how data will be stored, related, and accessed.

- Indexing – Implementing data access paths that improve retrieval speed and efficiency.

- Denormalization – Sometimes necessary for improving performance by intentionally introducing some redundancy.

Types of Database Models Explained

In the world of data storage, various structures exist to help organize and access information efficiently. These structures, or models, define how data is stored, related, and manipulated. Understanding the different types of models is crucial for designing systems that meet specific business or technical needs. Each model offers distinct advantages depending on the use case, from handling large volumes of data to ensuring fast and reliable retrieval.

Common Types of Data Models

Each model serves a unique purpose and is best suited for particular scenarios. Below is a brief overview of the most widely used types:

| Model Type | Description | Advantages |
|---|---|---|
| Relational | Data is organized into tables with rows and columns, and relationships are established through keys. | Ease of use, flexibility, support for complex queries. |
| Hierarchical | Data is structured in a tree-like model, with parent-child relationships. | Efficient for representing hierarchical data, fast data retrieval in simple structures. |
| Network | Data is represented as a graph with nodes and connecting relationships, allowing more complex data structures. | Supports complex relationships, more flexible than the hierarchical model. |
| Object-Oriented | Data is represented as objects, similar to programming objects, which encapsulate both data and methods. | Efficient for handling complex data types, integration with object-oriented programming languages. |

Choosing the Right Model

Choosing the appropriate data structure depends on the requirements of the task at hand. If the primary concern is simplicity and the ability to handle a variety of queries, a relational approach may be best. However, for situations involving hierarchical relationships or complex data interconnections, models like hierarchical or network can provide more flexibility. Understanding the trade-offs of each model helps in selecting the best fit for any given challenge.

Normalization and Its Importance

Organizing information efficiently is crucial to ensuring its accuracy and minimizing redundancy. One key practice that achieves this is normalization, which systematically arranges data to eliminate unnecessary duplication. By breaking down wide, repetitive data sets into smaller, related tables, the likelihood of update errors decreases and the data becomes easier to maintain. This process is an essential aspect of creating robust structures that support reliable storage and retrieval of information.

Normalization helps in maintaining consistency across various records and facilitates easier updates. Without proper structure, data can become fragmented, leading to inefficiencies and complications when performing queries or making changes. The goal is to simplify the overall structure while maintaining relationships between various elements of the data set.

| Normal Form | Key Purpose | Benefits |
|---|---|---|
| First Normal Form (1NF) | Ensures that each column contains only atomic values and that there are no repeating groups. | Reduces redundancy, simplifies structure. |
| Second Normal Form (2NF) | Builds on 1NF by removing partial dependencies, so that every non-key attribute depends on the whole primary key. | Improves data integrity, optimizes storage. |
| Third Normal Form (3NF) | Eliminates transitive dependencies, ensuring that non-key attributes are independent of other non-key attributes. | Increases consistency and clarity in the data. |
| Boyce-Codd Normal Form (BCNF) | Addresses anomalies that 3NF can leave behind by ensuring that every determinant is a candidate key. | Further minimizes redundancy and improves integrity. |

By applying the appropriate level of normalization, you can ensure that data remains consistent, avoids unnecessary duplication, and supports efficient query execution. This process is fundamental in keeping the structure flexible and scalable as requirements evolve over time.
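To make the idea concrete, here is a minimal sketch using Python's built-in `sqlite3` module. The table and column names (`orders_flat`, `customers`, `orders`) are hypothetical, and the example assumes that a customer's name determines their city, which is the transitive dependency that 3NF removes.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
    -- Unnormalized: customer details repeat on every order row.
    CREATE TABLE orders_flat (
        order_id      INTEGER PRIMARY KEY,
        customer_name TEXT,
        customer_city TEXT,
        product       TEXT
    );
    INSERT INTO orders_flat VALUES
        (1, 'Ada', 'London', 'Keyboard'),
        (2, 'Ada', 'London', 'Monitor'),
        (3, 'Bob', 'Paris',  'Mouse');

    -- Normalized: customer facts are stored once and orders reference them.
    CREATE TABLE customers (
        customer_id INTEGER PRIMARY KEY,
        name        TEXT NOT NULL,
        city        TEXT NOT NULL
    );
    CREATE TABLE orders (
        order_id    INTEGER PRIMARY KEY,
        customer_id INTEGER NOT NULL REFERENCES customers(customer_id),
        product     TEXT NOT NULL
    );
    INSERT INTO customers (name, city)
        SELECT DISTINCT customer_name, customer_city FROM orders_flat;
    INSERT INTO orders (order_id, customer_id, product)
        SELECT f.order_id, c.customer_id, f.product
        FROM orders_flat AS f
        JOIN customers AS c
          ON c.name = f.customer_name AND c.city = f.customer_city;
""")

# A change of city is now a single-row update instead of one update per order.
conn.execute("UPDATE customers SET city = 'Cambridge' WHERE name = 'Ada'")

customer_count = conn.execute("SELECT COUNT(*) FROM customers").fetchone()[0]
rows = conn.execute("""
    SELECT o.order_id, c.name, c.city, o.product
    FROM orders AS o JOIN customers AS c USING (customer_id)
    ORDER BY o.order_id
""").fetchall()
print(customer_count, rows)
```

In the flat table, changing Ada's city would require touching every one of her orders, and missing one would leave the data inconsistent. After the split, both of her orders pick up the new city through the join.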

Understanding SQL Query Optimization

When dealing with large volumes of data, efficient retrieval is crucial for maintaining performance. One of the most important aspects of ensuring quick response times is the process of optimizing queries. This involves fine-tuning how data is fetched, so that resources are used efficiently, and results are returned faster. The goal is to streamline how requests are processed, minimizing the time and computational power required to fetch the desired data.

Techniques for Improving Query Efficiency

Optimizing queries can involve several methods. A few common techniques include:

- Indexing: Creating indexes on frequently queried columns can significantly speed up data retrieval by allowing the system to locate data more quickly.

- Query Refactoring: Rewriting queries to avoid unnecessary complexity or subqueries can improve execution times by simplifying the request structure.

- Joins Optimization: Choosing the join type that matches the result you actually need (for example, INNER JOIN rather than LEFT JOIN when unmatched rows are not required) and joining on indexed columns can reduce the number of rows the system has to process.

- Limiting Results: Applying filters such as WHERE clauses or a LIMIT clause narrows the result set, ensuring that only relevant data is fetched.

Monitoring and Adjusting for Optimal Performance

Even after optimization, continuous monitoring is key. Tools and techniques such as query execution plans allow you to evaluate how queries are processed and identify potential bottlenecks. With this information, further refinements can be made to keep performance levels high, especially as data grows or system requirements change over time.
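As an illustration of reading an execution plan, the sketch below uses SQLite's `EXPLAIN QUERY PLAN` through Python's `sqlite3` module. The `employees` table, its contents, and the index name are made up for the example, and the exact wording of the plan text varies between SQLite versions.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE employees (id INTEGER PRIMARY KEY, dept TEXT, salary REAL)")
conn.executemany(
    "INSERT INTO employees (dept, salary) VALUES (?, ?)",
    [(f"dept{i % 50}", 1000.0 + i) for i in range(5000)],
)

def plan(sql, params=()):
    """Return the human-readable detail column of SQLite's query plan."""
    rows = conn.execute("EXPLAIN QUERY PLAN " + sql, params).fetchall()
    return [row[-1] for row in rows]  # the detail text is the last column

query = "SELECT id, salary FROM employees WHERE dept = ? LIMIT 10"

before = plan(query, ("dept7",))  # no index yet: expect a full table scan
conn.execute("CREATE INDEX idx_employees_dept ON employees (dept)")
after = plan(query, ("dept7",))   # the planner can now search via the index

print("before:", before)
print("after: ", after)
```

Comparing the two plans shows the change from scanning every row to searching through the index, which is the kind of bottleneck a plan review is meant to expose. Other database systems expose similar information through their own `EXPLAIN` variants.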

Essential Indexing Techniques for Databases

Efficient data retrieval is a key factor in system performance, especially when dealing with large volumes of information. One of the most effective ways to speed up access to relevant data is through the use of indexing. Indexes create optimized pathways for searching and retrieving data, significantly reducing the time it takes to execute queries. By understanding and applying the right indexing techniques, systems can be made more responsive and resource-efficient.

Common Index Types

Different types of indexes serve specific purposes, depending on the use case. Some of the most common include:

- Single-column Index: This is the simplest form of index, applied to a single column to speed up searches on that particular attribute.

- Composite Index: Also known as a multi-column index, this type is created for queries that involve multiple columns. It helps when multiple fields are frequently queried together.

- Unique Index: Ensures that the values in a column are distinct, helping to enforce data integrity while improving search efficiency.

- Full-text Index: Specialized for searching large text fields, enabling quick searching of words or phrases within a document.
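The unique and composite index types above can be sketched with SQLite via Python's `sqlite3` module. The `users` table and index names are hypothetical. Full-text indexing is left out because it depends on optional SQLite extensions that may not be available in every build.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE users (
        id         INTEGER PRIMARY KEY,
        email      TEXT NOT NULL,
        last_name  TEXT NOT NULL,
        first_name TEXT NOT NULL
    );
    -- Unique index: rejects a second row with the same email.
    CREATE UNIQUE INDEX idx_users_email ON users (email);
    -- Composite index: serves lookups on last_name, or last_name + first_name.
    CREATE INDEX idx_users_name ON users (last_name, first_name);
""")

insert_sql = "INSERT INTO users (email, last_name, first_name) VALUES (?, ?, ?)"
conn.execute(insert_sql, ("ada@example.com", "Lovelace", "Ada"))

try:
    conn.execute(insert_sql, ("ada@example.com", "Byron", "Ada"))
    duplicate_rejected = False
except sqlite3.IntegrityError:
    duplicate_rejected = True  # the unique index blocked the duplicate email

plan_rows = conn.execute(
    "EXPLAIN QUERY PLAN SELECT id FROM users WHERE last_name = ? AND first_name = ?",
    ("Lovelace", "Ada"),
).fetchall()
plan_details = [row[-1] for row in plan_rows]
print(duplicate_rejected, plan_details)
```

The query plan should name `idx_users_name`, showing that a query filtering on both columns can be answered through the composite index.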

Best Practices for Indexing

To get the most out of indexing, certain best practices should be followed:

- Index Selectively: Indexing every column slows down inserts, updates, and deletes, because each index must be maintained on every write. Instead, focus on columns that are frequently searched or used in JOIN operations.

- Monitor Index Usage: Regularly review which indexes are used and which are not. Unused indexes should be removed to save system resources.

- Consider Query Patterns: When creating indexes, think about the common queries being executed. This will help ensure that the most frequently accessed data is optimized for fast retrieval.

- Balance Between Speed and Space: While indexes can drastically improve performance, they also take up additional space. Strive to find a balance that optimizes both speed and resource usage.

Difference Between Primary and Foreign Keys

In the context of organizing and linking data, keys play a vital role in maintaining relationships between various elements. While both primary and foreign keys are essential for ensuring data consistency and integrity, they serve different purposes and have distinct characteristics. Understanding these differences is crucial for building structured and efficient data models.

Primary Key

A primary key is used to uniquely identify each record within a set of data. It ensures that no two rows in a table can have the same value for the primary key column. This key is essential for maintaining the integrity and uniqueness of the data.

- Uniqueness: The values in a primary key column must be unique for every record.

- Non-null: A primary key cannot have a null value, ensuring every record has a valid identifier.

- One per table: A table can only have one primary key, which may consist of one or more columns (composite key).

Foreign Key

A foreign key is a column or group of columns in one table that references the primary key (or another unique key) of another table. This relationship enforces referential integrity between the two tables by ensuring that every non-null value in the foreign key column matches a value in the referenced key column.

- Reference: The foreign key refers to the primary key in another table, establishing a relationship between the two sets of data.

- Allows duplicates: Unlike a primary key, a foreign key can have duplicate values, because many rows in the referencing table can point to the same record in the referenced table.

- Nullable: A foreign key column can allow null values, meaning that not all records must reference another table.

In summary, a primary key ensures that data within a single table is unique and non-null, while a foreign key establishes a relationship between two tables by referencing the primary key of another table. Together, these keys help maintain consistency and integrity across a data model.
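The behavior of both key types can be checked with a short sketch using Python's `sqlite3` module. The `departments` and `employees` tables are hypothetical. Note that SQLite only enforces foreign keys once `PRAGMA foreign_keys = ON` has been set on the connection; other systems enforce them by default.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("PRAGMA foreign_keys = ON")  # SQLite enforces foreign keys only when enabled
conn.executescript("""
    CREATE TABLE departments (
        dept_id INTEGER PRIMARY KEY,
        name    TEXT NOT NULL
    );
    CREATE TABLE employees (
        emp_id  INTEGER PRIMARY KEY,
        name    TEXT NOT NULL,
        dept_id INTEGER REFERENCES departments(dept_id)  -- nullable foreign key
    );
    INSERT INTO departments VALUES (1, 'Engineering');
    -- Duplicate and NULL foreign key values are both allowed.
    INSERT INTO employees VALUES (10, 'Ada', 1), (11, 'Bob', 1), (12, 'Cy', NULL);
""")

def try_insert(sql, params):
    """Run an INSERT and report whether a constraint rejected it."""
    try:
        conn.execute(sql, params)
        return "ok"
    except sqlite3.IntegrityError as exc:
        return f"rejected: {exc}"

# Reusing primary key value 1 violates uniqueness.
dup_pk = try_insert("INSERT INTO departments VALUES (?, ?)", (1, "Sales"))
# Department 99 does not exist, so the reference is invalid.
bad_fk = try_insert("INSERT INTO employees VALUES (?, ?, ?)", (13, "Dee", 99))

employee_count = conn.execute("SELECT COUNT(*) FROM employees").fetchone()[0]
print(dup_pk)
print(bad_fk)
print(employee_count)
```

Two employees share the same `dept_id` and one has none at all, while the duplicate primary key and the dangling reference are both rejected.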

Database Security Practices to Know

Ensuring the protection of sensitive information is a critical task for anyone responsible for managing large sets of data. Implementing robust security practices helps to prevent unauthorized access, data breaches, and potential system vulnerabilities. By following key security strategies, organizations can safeguard their valuable data from threats and maintain user trust.

Security practices are designed to not only protect data from malicious attacks but also to ensure that access to information is restricted to authorized users only. These techniques help in maintaining the confidentiality, integrity, and availability of data across systems.

Common Security Measures

Several security measures should be implemented to ensure robust protection:

- Encryption: Encrypting data both in transit and at rest ensures that even if data is intercepted, it remains unreadable without the proper decryption key.

- Access Control: Implementing strong access control policies, such as role-based access control (RBAC), helps restrict data access based on user roles, ensuring that only authorized personnel can view or modify sensitive information.

- Authentication and Authorization: Strong authentication methods like multi-factor authentication (MFA) ensure that only legitimate users can access the system. Authorization mechanisms ensure users have the appropriate level of access.

- Regular Audits: Conducting regular security audits and vulnerability assessments helps identify potential weaknesses in the system and ensures compliance with security protocols.

Best Practices for Security Management

In addition to technical measures, adopting best practices can significantly strengthen overall security:

- Use Strong Passwords: Enforce the use of strong, complex passwords for all user accounts to prevent unauthorized login attempts.

- Patch Management: Regularly updating and patching software is crucial for protecting against newly discovered vulnerabilities that could be exploited by attackers.

- Backup Data: Regular data backups ensure that critical information can be restored in case of an attack or failure, minimizing downtime and loss.

- Data Masking: Data masking techniques obfuscate sensitive information, making it usable for testing or analysis without exposing actual data.
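As a small illustration of the data masking item above, the following Python sketch hides most of an email address or card-like number while keeping enough to recognize the record. The function names and masking rules are assumptions chosen for the example, not a standard; production masking is usually handled by dedicated database or tooling features.

```python
def mask_email(email: str) -> str:
    """Keep the first character and the domain; hide the rest of the local part."""
    local, sep, domain = email.partition("@")
    if not sep or not local:
        return "*" * len(email)  # not an email-shaped value: mask everything
    return local[0] + "*" * (len(local) - 1) + "@" + domain

def mask_card(number: str) -> str:
    """Show only the last four digits of a card-like number."""
    digits = [c for c in number if c.isdigit()]
    return "*" * max(len(digits) - 4, 0) + "".join(digits[-4:])

masked_email = mask_email("ada.lovelace@example.com")
masked_card = mask_card("4111 1111 1111 1111")
print(masked_email)  # a***********@example.com
print(masked_card)   # ************1111
```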

By adopting these security practices, organizations can mitigate the risk of data breaches and ensure that their information remains secure and protected from external threats.

Relational vs NoSQL Database Comparisons

As the demand for handling large and complex data sets grows, two primary approaches for organizing and accessing data have emerged: relational structures and NoSQL models. Both offer unique advantages depending on the type of information, use cases, and scalability requirements. Understanding the distinctions between these two approaches is essential for making informed decisions on how to store, retrieve, and manipulate data efficiently.

Relational Approach

Relational structures have been around for decades and are built on tables that store data in rows and columns. These models are highly structured, with predefined schemas dictating how data is organized and relationships between data points are maintained. This method is ideal for transactional data and scenarios where consistency is paramount.

- Structured Data: Data must adhere to a strict structure defined by schemas.

- ACID Compliance: Ensures strong consistency and data integrity by following ACID (Atomicity, Consistency, Isolation, Durability) properties.

- SQL Language: Utilizes Structured Query Language (SQL) for querying and manipulating data, which is widely known and used.

- Scalability: Horizontal scaling can be more complex and often requires additional techniques like sharding.

NoSQL Approach

NoSQL models are more flexible, allowing for various types of data structures, including key-value stores, document databases, column-family stores, and graph databases. These models are designed for handling unstructured or semi-structured data, offering greater scalability and performance in specific contexts.

- Schema-less: Data can be stored without a predefined structure, allowing for flexibility in handling different types of data.

- Eventual Consistency: Many NoSQL databases favor availability and partition tolerance over immediate consistency, accepting that replicas may briefly disagree before they converge.

- Scaling: Designed for horizontal scaling, making it easier to handle large volumes of data across distributed systems.

- Types of Data: Suitable for handling unstructured, semi-structured, or rapidly changing data.

Choosing between relational and NoSQL models depends on specific needs. Relational databases are ideal for applications requiring structured data and strong consistency, while NoSQL systems excel in environments that demand high scalability, flexibility, and the ability to manage large, diverse data sets.
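The difference between a predefined schema and schema-less storage can be sketched in a few lines of Python. The relational side uses SQLite through the `sqlite3` module. The document side uses a plain dictionary as a stand-in for a document store, which is a simplifying assumption and not how any particular NoSQL product is accessed.

```python
import json
import sqlite3

# Relational side: the schema is fixed up front and enforced on every insert.
conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE products (id INTEGER PRIMARY KEY, name TEXT NOT NULL, price REAL NOT NULL)"
)
conn.execute("INSERT INTO products VALUES (1, 'Keyboard', 49.0)")
try:
    conn.execute("INSERT INTO products (id, name) VALUES (2, 'Mouse')")  # price missing
    schema_enforced = False
except sqlite3.IntegrityError:
    schema_enforced = True  # NOT NULL on price rejected the incomplete row

# Document side (a dict standing in for a document store):
# each record carries its own shape, so fields can differ between documents.
documents = {}
documents["p1"] = {"name": "Keyboard", "price": 49.0}
documents["p2"] = {"name": "Mouse", "tags": ["wireless"], "specs": {"dpi": 1600}}

print(schema_enforced)
print(json.dumps(documents["p2"]))
```

The relational table refuses a row that does not fit its schema, whereas the document collection accepts records with different fields, leaving validation to the application.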

Handling Transactions in Database Systems

In data processing environments, a transaction refers to a sequence of operations that must be treated as a single unit of work. Whether data is being inserted, updated, or deleted, the system must ensure that these operations are completed without errors. If any part of the transaction fails, all operations should be rolled back to maintain data consistency and integrity. Understanding how transactions are handled is crucial to ensuring the reliability of the system.

Transaction Properties

Transactions rely on a set of properties known as ACID, which stands for Atomicity, Consistency, Isolation, and Durability. These principles are designed to guarantee the integrity of data, even in the event of power failures, crashes, or other unexpected interruptions.

| Property | Description |
|---|---|
| Atomicity | Treats all operations in a transaction as a single unit: either every operation succeeds, or, if one fails, the entire transaction is rolled back. |
| Consistency | Guarantees that the database starts and ends in a valid state. Any transaction will bring the system from one valid state to another. |
| Isolation | Ensures that concurrently executing transactions do not affect each other. The intermediate changes of a transaction stay hidden from others until it is committed. |
| Durability | Once a transaction is committed, its changes are permanent, even in the event of a system crash. |
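Atomicity and consistency can be demonstrated with a minimal sketch using Python's `sqlite3` module. The `accounts` table and the `transfer` helper are hypothetical. The example relies on the connection's context manager, which commits when the block succeeds and rolls back when an exception escapes it.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE accounts ("
    " name TEXT PRIMARY KEY,"
    " balance INTEGER NOT NULL CHECK (balance >= 0))"  # no overdrafts allowed
)
conn.executemany("INSERT INTO accounts VALUES (?, ?)", [("alice", 100), ("bob", 50)])
conn.commit()

def transfer(src, dst, amount):
    """Move money between accounts; both updates commit together or not at all."""
    try:
        with conn:  # commits on success, rolls back if an exception escapes
            conn.execute(
                "UPDATE accounts SET balance = balance + ? WHERE name = ?", (amount, dst)
            )
            conn.execute(
                "UPDATE accounts SET balance = balance - ? WHERE name = ?", (amount, src)
            )
        return True
    except sqlite3.IntegrityError:
        return False  # the CHECK constraint failed, so the credit was undone too

def balances():
    """Return the current balances as a name -> balance mapping."""
    return dict(conn.execute("SELECT name, balance FROM accounts"))

ok = transfer("alice", "bob", 30)       # succeeds
failed = transfer("alice", "bob", 500)  # would overdraw alice, so it is rolled back
print(ok, failed, balances())
```

In the second transfer the credit to `bob` is applied first and then undone when the debit violates the constraint, so no partial result is ever committed.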

Types of Transactions

There are different types of transactions based on the level of operations they perform and how they interact with other transactions. Understanding these types helps in selecting the appropriate transaction model for the required use case.

- Simple Transactions: Involve basic operations like inserting, updating, or deleting a single record.

- Complex Transactions: Comprise multiple operations across several records or even different systems, often with interdependencies.

- Distributed Transactions: Occur when data is spread across multiple systems or locations, requiring coordination between these systems to ensure consistency.

Efficiently managing transactions is essential for the integrity and reliability of any data-driven system. Ensuring the proper implementation of ACID properties is crucial for maintaining smooth operations in environments where data consistency is critical.

Database Integrity Constraints Overview

In data handling environments, integrity constraints are essential rules or conditions that ensure the correctness, consistency, and reliability of the stored information. These rules prevent invalid or incorrect data from being entered into the system, maintaining the quality and accuracy of the data. Integrity constraints help ensure that relationships and dependencies between different pieces of information are properly enforced and maintained throughout the system’s operations.

There are several types of integrity constraints that can be applied to data structures, each serving a distinct purpose in safeguarding the accuracy of information. These constraints are critical for ensuring that operations like inserting, updating, or deleting data do not compromise the integrity of the entire dataset.

- Entity Integrity: Ensures that every record can be identified by requiring each row to have a primary key value that is unique and never null.

- Referential Integrity: Guarantees that relationships between data are maintained, typically by ensuring foreign keys in one record correspond to valid records in another.

- Domain Integrity: Enforces the validity of data entries by ensuring that values entered into a field adhere to predefined rules, such as acceptable data types or ranges.

- Key Integrity: Ensures that the values of any key, including candidate keys declared as unique, are never duplicated across records.

Implementing these integrity rules is vital for keeping data reliable and consistent, particularly in complex environments where information is constantly being updated or accessed by multiple users. These constraints reduce the risk of errors, data corruption, and inconsistencies that could lead to incorrect conclusions or business decisions.
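Most of these rules can be declared directly in a table definition. The sketch below uses SQLite through Python's `sqlite3` module with a hypothetical `students` table. The specific ranges and allowed values are invented for illustration, and referential integrity is left out to keep the example to a single table.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("""
    CREATE TABLE students (
        student_id INTEGER PRIMARY KEY,            -- entity integrity
        email      TEXT NOT NULL UNIQUE,           -- key integrity on a candidate key
        age        INTEGER NOT NULL
                   CHECK (age BETWEEN 16 AND 120), -- domain integrity: allowed range
        status     TEXT NOT NULL
                   CHECK (status IN ('active', 'graduated'))  -- domain integrity: allowed values
    )
""")

def violates(row):
    """Try to insert a row; return True if a constraint rejected it."""
    try:
        conn.execute("INSERT INTO students VALUES (?, ?, ?, ?)", row)
        return False
    except sqlite3.IntegrityError:
        return True

results = {
    "valid row": violates((1, "ada@example.edu", 20, "active")),
    "duplicate id": violates((1, "bob@example.edu", 22, "active")),
    "duplicate email": violates((2, "ada@example.edu", 22, "active")),
    "age out of range": violates((3, "cy@example.edu", 7, "active")),
    "unknown status": violates((4, "dee@example.edu", 30, "paused")),
}
for case, rejected in results.items():
    print(f"{case}: {'rejected' if rejected else 'accepted'}")
```

Only the first row is accepted. Each of the others is stopped by the constraint named in its label, before any invalid data reaches the table.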

Best Practices for Data Backup and Recovery

Effective data protection strategies are crucial for ensuring that important information remains secure and recoverable in case of unexpected events, such as hardware failures, data corruption, or accidental deletions. Backup and recovery processes provide a safety net for preserving data integrity and minimizing the impact of disruptions. Proper planning and implementation of backup strategies are key to maintaining business continuity and avoiding costly downtime.

Several best practices can be followed to ensure that backup and recovery procedures are efficient, reliable, and secure. These guidelines help organizations safeguard critical information while also ensuring that recovery can be executed promptly in the event of an issue.

- Regular Backup Schedule: Consistently backing up data ensures that recent versions are always available for recovery. Set up automated backup routines to reduce the risk of human error and ensure that backups occur regularly.

- Multiple Backup Locations: Storing backups in various locations, including offsite or in cloud storage, provides an added layer of security in case of local disasters like fires or floods.

- Test Recovery Procedures: Simply having backups is not enough; it is essential to regularly test recovery processes to ensure that data can be restored quickly and accurately when needed.

- Versioning: Keep multiple versions of backups to protect against the risk of corrupted backups or unwanted changes. This allows recovery from earlier points in time if necessary.

- Encryption and Security: Sensitive information should be encrypted both during the backup process and while stored, ensuring that only authorized individuals can access the data.

- Monitoring and Alerts: Implement monitoring tools that track backup status and notify administrators of failures or potential issues. This proactive approach helps identify problems before they become critical.

By following these best practices, organizations can significantly reduce the risk of data loss and ensure that recovery is fast and reliable when it matters most. A solid backup strategy provides peace of mind, knowing that critical data is protected and can be restored in case of an emergency.
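A backup followed by a recovery test can be sketched with the `Connection.backup` method in Python's `sqlite3` module. The `notes` table and the `backup_and_verify` helper are hypothetical, and the backup target is an in-memory database only to keep the example self-contained; a real backup would be written to a file, ideally in a separate location.

```python
import sqlite3

source = sqlite3.connect(":memory:")
source.execute("CREATE TABLE notes (id INTEGER PRIMARY KEY, body TEXT NOT NULL)")
source.executemany("INSERT INTO notes (body) VALUES (?)", [("first",), ("second",)])
source.commit()

def backup_and_verify(src):
    """Copy src into a fresh database and check that the copy is usable."""
    dest = sqlite3.connect(":memory:")  # stand-in target; normally a file path
    src.backup(dest)
    # Recovery test: run an integrity check and compare row counts.
    check = dest.execute("PRAGMA integrity_check").fetchone()[0]
    src_count = src.execute("SELECT COUNT(*) FROM notes").fetchone()[0]
    dest_count = dest.execute("SELECT COUNT(*) FROM notes").fetchone()[0]
    return dest, (check == "ok" and src_count == dest_count)

copy, verified = backup_and_verify(source)

# Simulate an accidental deletion, then read the data back from the backup.
source.execute("DELETE FROM notes")
source.commit()
restored = copy.execute("SELECT id, body FROM notes ORDER BY id").fetchall()
print(verified, restored)
```

The verification step is the important part: a backup is only counted as good once it has been opened and checked, which mirrors the advice to test recovery procedures regularly.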

Examining Database Management System Architecture

The structure of a data storage environment is crucial for ensuring the efficient handling, retrieval, and manipulation of information. A well-designed framework supports various user requirements, maintains performance, and allows for easy scalability. The architecture is composed of different layers and components that work together to manage vast amounts of data while ensuring consistency and accessibility. Understanding the components and their roles is essential for optimizing the performance of these environments.

Key Components of the Architecture

The architecture can be broken down into several fundamental components, each of which has a distinct function that contributes to the overall functionality of the system.

- Storage Manager: Responsible for managing how data is stored, retrieved, and updated. It handles the physical storage of information and interacts with the operating system’s file management components.

- Query Processor: Interprets and executes queries, optimizing how data is fetched or manipulated. It plays a vital role in determining the most efficient way to process user requests.

- Transaction Manager: Manages operations that involve multiple tasks. It ensures that all parts of a transaction are completed successfully or that none are executed, thus maintaining consistency.

- Security Manager: Protects sensitive data and manages user permissions, ensuring that only authorized individuals can access or modify specific records.

Types of Architecture

The structure of the environment can vary depending on how the components are organized and interact with each other. Two of the most common architectures are:

- Single-Tier Architecture: All components are integrated into a single system. This is typically used for smaller applications where simplicity and low cost are priorities.

- Multi-Tier Architecture: This architecture separates components into different layers, each handling distinct tasks, such as storage, processing, and security. This is more scalable and suitable for larger, more complex applications.

Understanding the design and structure of these environments is essential for optimizing performance, ensuring scalability, and maintaining the integrity of the stored data. By carefully considering each layer’s role and its interactions, an organization can develop an efficient and reliable infrastructure.

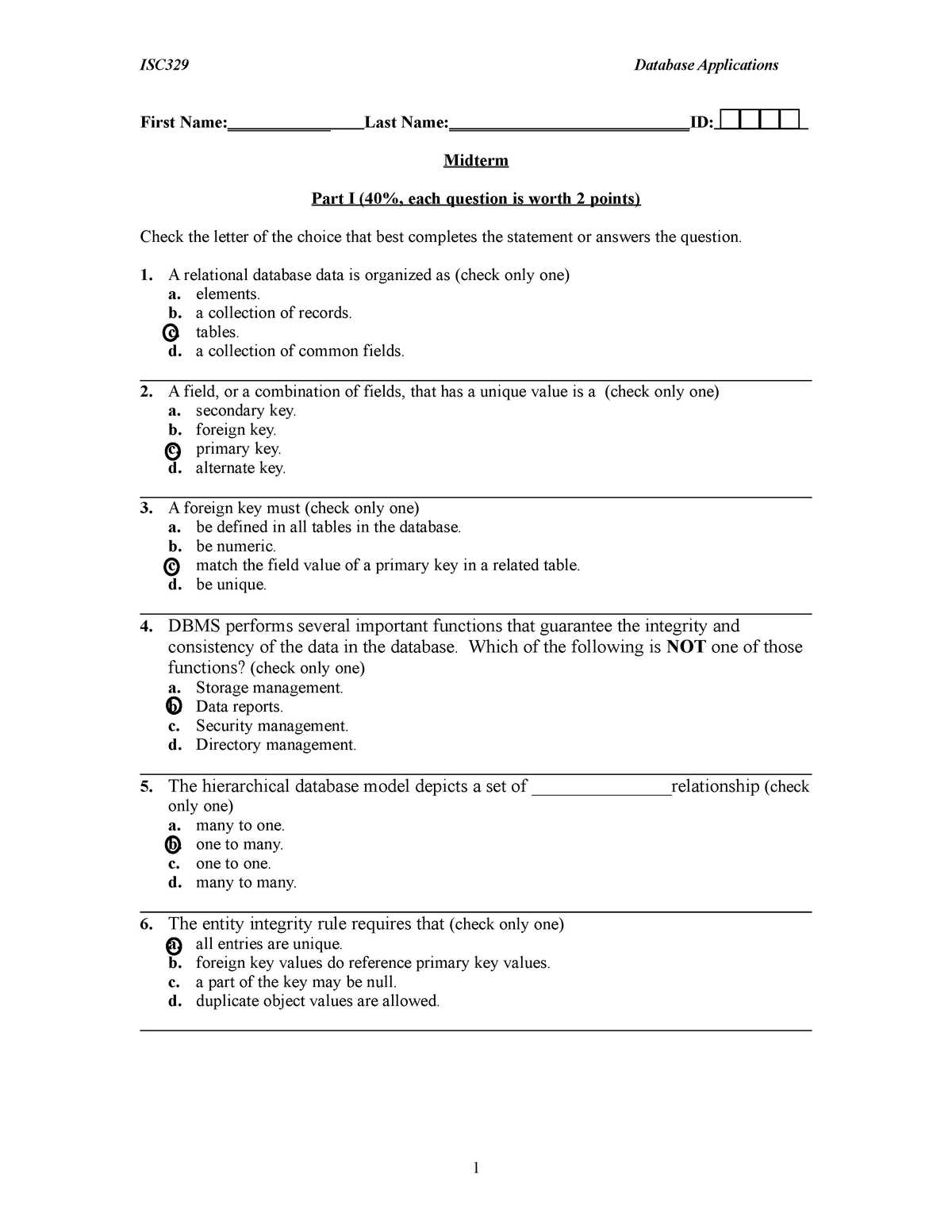

Common Mistakes in Database Exams

When preparing for assessments related to data handling and organization, many students tend to make recurring errors that can significantly impact their performance. These mistakes often arise from misunderstandings of key concepts, insufficient practice, or a lack of attention to detail when solving problems. Recognizing these common pitfalls and addressing them can help ensure a more successful outcome and a deeper understanding of the subject matter.

Common Mistakes to Avoid

Here are some typical errors that candidates often make in such assessments:

- Overlooking Details in Questions: Students may skip over specific instructions or details provided in the task. This can lead to incomplete answers or misinterpretations of the problem’s requirements.

- Confusing Similar Terms: Many concepts in this field share similar terminology, such as “primary key” versus “foreign key” or “indexing” versus “searching.” Confusing these terms can lead to incorrect explanations or solutions.

- Poor Time Management: Spending too much time on one problem can result in not having enough time to address others. Practicing time management during study sessions can help improve performance during the actual assessment.

- Neglecting to Optimize Queries: Optimizing queries for efficiency is crucial. Not considering performance aspects when writing queries can lead to slower results, especially when dealing with large datasets.

- Failure to Review Work: Failing to double-check answers can result in avoidable mistakes. Taking a few minutes to review responses can often uncover small errors that would otherwise go unnoticed.

How to Improve Performance

To minimize mistakes, it is essential to adopt effective study techniques and exam strategies:

- Practice Regularly: Consistent practice helps to reinforce concepts and solidify understanding. Regularly solving problems and reviewing solutions can prevent common errors from recurring.

- Understand Key Concepts: Focus on grasping the core principles behind each topic rather than memorizing isolated facts. This deeper understanding will help when faced with unfamiliar problems.

- Take Mock Assessments: Simulating the test environment by taking practice exams can help improve time management and reduce anxiety on the day of the assessment.

By being mindful of these common mistakes and adopting strategies to address them, students can improve their performance in assessments and build a stronger grasp of the material.

Effective Time Management During Exams

Managing time efficiently during assessments is crucial for achieving optimal performance. Many students struggle with balancing the amount of time allocated to each task, which can lead to rushed or incomplete answers. The key to success lies in understanding the importance of pacing yourself, prioritizing tasks, and strategically allocating time based on the complexity and requirements of each section. By employing specific techniques, students can ensure they have ample time to complete all questions thoroughly and accurately.

Techniques for Better Time Allocation

Here are some strategies to help you manage your time effectively during assessments:

- Read Through the Entire Paper First: Before diving into solving problems, take a few minutes to scan the entire paper. This will give you an idea of the types of tasks involved and allow you to gauge how much time to allocate to each section.

- Allocate Time to Each Section: Based on the number and complexity of questions, set a specific time limit for each section. Stick to your plan as much as possible to avoid spending too much time on one task at the expense of others.

- Start with Easier Questions: Begin by tackling questions you are most confident in. This will help you build momentum and reduce stress, allowing more time for tougher problems later on.

- Take Brief Breaks: If allowed, take a short break in between sections to recharge. A few moments to relax can help you maintain focus and perform better overall.

Handling Time Pressure Effectively

Even with careful planning, it is easy to feel overwhelmed when time runs short. Here’s how you can deal with pressure:

- Stay Calm: Don’t panic if time is running low. Keep a clear head, focus on the task at hand, and prioritize key points in your answers.

- Skip Difficult Questions: If a particular problem is taking too long, move on to the next one. You can always come back to the more challenging tasks after completing easier ones.

- Review Your Work: If time permits, allocate a few minutes at the end to review your answers. This can help catch any errors or missed details that could impact your final score.

By adopting these time management strategies, students can approach assessments with more confidence and reduce the likelihood of rushing through tasks. Managing time effectively not only enhances the quality of your work but also reduces anxiety, leading to a more productive and successful performance.

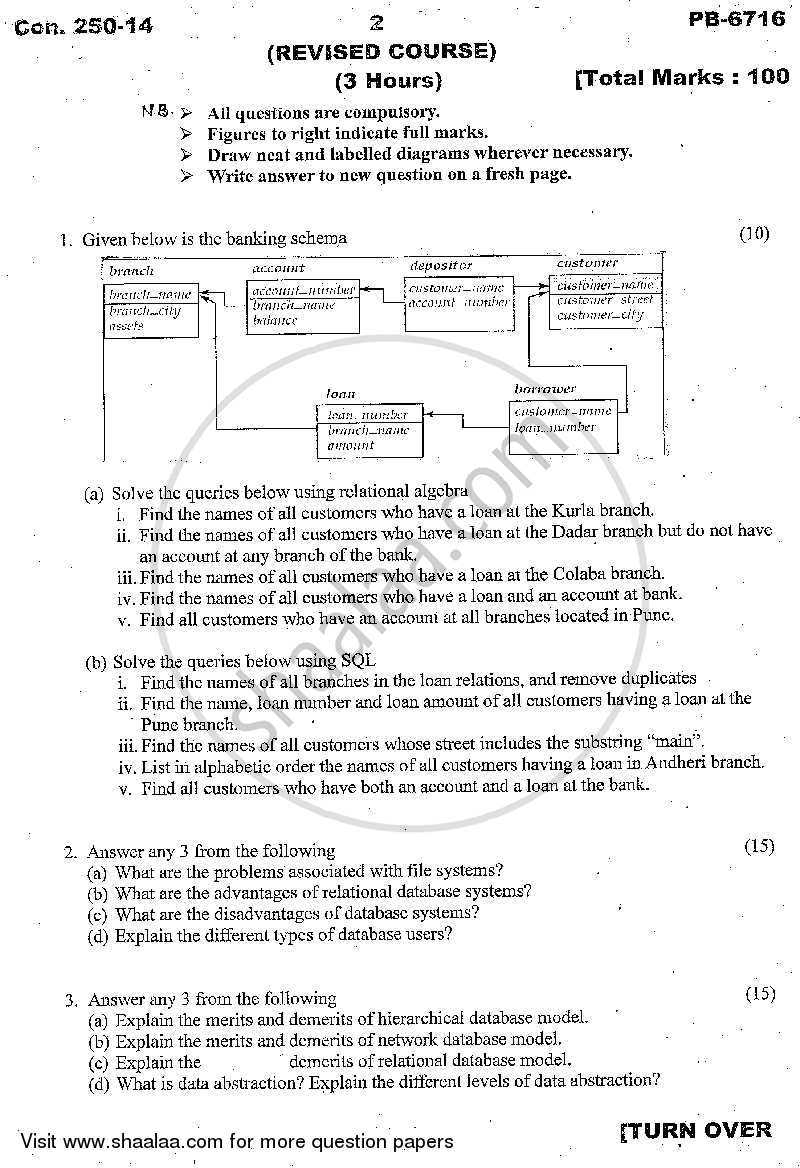

Reviewing Past Exam Papers

Looking at previous assessment papers is an excellent way to prepare for upcoming evaluations. By analyzing past tests, students can gain insight into the types of tasks likely to appear, identify recurring themes, and understand the level of detail expected in responses. This method allows students to build familiarity with the structure and format of the paper, improving confidence and performance under exam conditions.

Why Review Previous Papers?

Here are some reasons why revisiting past papers can be highly beneficial:

- Identifying Recurrent Themes: Certain topics tend to appear frequently across multiple assessments. By reviewing past papers, students can identify these recurring themes and prioritize their study accordingly.

- Understanding Question Formats: Previous papers offer valuable insight into how questions are structured, enabling students to become familiar with phrasing and the expectations of the examiner.

- Learning from Mistakes: Reviewing past attempts can help highlight common mistakes, allowing students to correct their approach and avoid similar errors in the future.

- Managing Time Effectively: Past papers allow students to practice under timed conditions, helping them develop a sense of timing and pacing for each type of question.

How to Make the Most of Past Papers

Here are some tips on how to effectively utilize past papers as a study tool:

- Simulate Exam Conditions: When practicing with previous papers, aim to recreate the conditions of the actual test. Time yourself, avoid distractions, and try to complete the paper as if you were in the real evaluation.

- Analyze the Marking Scheme: If available, study the marking criteria for past assessments. This will help you understand what examiners are looking for and guide your response approach.

- Review Your Responses: After completing a past paper, go back and check your answers. Compare your responses with model answers or suggested solutions to identify areas for improvement.

- Focus on Weak Areas: If certain types of questions are consistently challenging, spend extra time reviewing those topics and practicing similar questions.

By strategically reviewing past papers, students can enhance their preparation and increase their chances of success. This approach helps build familiarity with both content and format, allowing for more effective study and improved exam performance.