Preparing for a certification in cloud infrastructure management can be challenging but rewarding. This guide covers the key concepts and practical skills the exam tests. Focusing on the core topics and on hands-on practice builds the foundation you need for the evaluation process.

We will explore various critical aspects, such as deployment strategies, network configuration, and storage management. Mastering these areas will help you solve real-world problems with confidence and efficiency. Practical exercises are also included to reinforce your understanding and test your knowledge under realistic conditions.

Through this resource, you’ll be equipped with the tools and strategies needed to approach the certification with clarity. Whether you’re new to the field or refining your expertise, this guide offers practical advice for achieving your goals in a structured and systematic way. Preparation is key to success, and with the right focus, you’ll be well-prepared to demonstrate your skills and knowledge.

Kubernetes CKA Exam Questions and Answers

In order to successfully pass a certification focused on container orchestration, candidates must demonstrate a comprehensive understanding of various cloud infrastructure topics. This section provides a selection of essential scenarios and challenges you may encounter during the certification process. By exploring these real-world examples, you can refine your practical skills and ensure you’re ready to tackle any situation with confidence.

Each example is designed to help you focus on the most crucial aspects of system management and configuration. From managing workloads to troubleshooting complex issues, mastering these key concepts will enable you to perform tasks efficiently in an actual environment. The following table summarizes some of the core areas covered in these practical scenarios:

| Topic | Description |
|---|---|
| Cluster Setup | Configuring and deploying a cluster, including networking and access control |
| Workload Management | Deploying, scaling, and maintaining applications with resilience |
| Network Configuration | Setting up communication between services, handling DNS, and load balancing |
| Storage Solutions | Managing persistent volumes and configuring storage classes for different needs |
| Security Practices | Implementing authentication, authorization, and security policies |
| Troubleshooting | Diagnosing and resolving issues at both the cluster and application levels |

Familiarizing yourself with these critical areas will significantly improve your chances of success. In addition to theoretical knowledge, practical experience in managing these elements will prepare you for the real-world challenges you will face as a certified professional.

Key Topics Covered in the CKA Exam

To successfully achieve certification in cloud-native infrastructure management, it’s essential to master several key concepts and skills. This section highlights the core areas that will be tested, which encompass both theoretical knowledge and hands-on abilities. Candidates must familiarize themselves with the foundational elements of container orchestration, deployment, and cluster management to perform tasks effectively.

The primary topics typically include:

- Cluster Architecture: Understanding how clusters are structured, configured, and managed, including access controls and networking.

- Workload Deployment: Managing pods, services, and application scaling using various strategies and deployment methods.

- Networking: Configuring internal and external communication between services, DNS, load balancing, and ingress management.

- Storage Management: Working with persistent volumes, dynamic provisioning, and integrating storage solutions into applications.

- Security: Configuring role-based access control (RBAC), service accounts, and applying security policies across the cluster.

- Monitoring and Logging: Setting up and troubleshooting monitoring tools, analyzing logs, and diagnosing system performance.

- Troubleshooting: Diagnosing issues related to pods, services, nodes, and network communication within the cluster.

- Resource Management: Efficiently allocating resources, managing quotas, and ensuring optimal use of hardware resources.

- Helm: Using Helm charts to automate and streamline application deployment processes.

Each of these topics requires both theoretical understanding and practical expertise to successfully manage a cloud-native environment. Preparation in these areas will ensure a well-rounded proficiency and the ability to handle challenges during certification evaluation. By gaining hands-on experience in these key areas, candidates can approach their certification with greater confidence and readiness.

Mastering Core Kubernetes Concepts

In order to excel in managing containerized applications, it’s crucial to have a deep understanding of the foundational concepts behind orchestration platforms. Mastery of these core elements enables you to efficiently deploy, scale, and manage workloads in a cloud-native environment. The following areas are essential for building this foundation and will be key to success in any certification related to this field.

Key concepts to focus on include:

- Containers and Pods: Understanding the basic unit of deployment and the relationship between containers and pods in orchestrated environments.

- Namespaces: Using namespaces to organize resources, isolate environments, and manage permissions effectively.

- Deployments and ReplicaSets: Managing the deployment of applications and ensuring scalability and availability through replica sets.

- Services: Configuring services for stable networking and load balancing between application components within the cluster.

- Volume Management: Understanding the persistence of data by working with different types of volumes and their integration with running applications.

- Scheduling and Resource Allocation: Effectively scheduling workloads and allocating resources to ensure optimal cluster performance.

- Configuration Management: Using config maps and secrets to manage configuration and sensitive data within the cluster securely.

- Networking Policies: Implementing network policies to control communication between services and ensure security across the platform.

- Health Checks: Configuring liveness and readiness probes to monitor the health and status of applications and services.

By mastering these core elements, you can build a strong foundation for orchestrating containerized workloads, ensuring applications run smoothly and efficiently in a production environment. This knowledge will not only help you manage infrastructure but also resolve issues quickly and keep your system running at peak performance.
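
As a rough illustration of how health checks and configuration management come together, the sketch below builds a Pod manifest as a Python dictionary. The `/healthz` path, the probe timings, and the helper name `pod_with_probes` are assumptions chosen for the example. The field names follow the core `v1` Pod API.

```python
import json


def pod_with_probes(name: str, image: str, port: int, config_map: str) -> dict:
    """Build a Pod manifest with liveness/readiness probes and ConfigMap-backed env vars."""
    # Hypothetical health endpoint; replace with whatever the application exposes.
    http_check = {"httpGet": {"path": "/healthz", "port": port}}
    return {
        "apiVersion": "v1",
        "kind": "Pod",
        "metadata": {"name": name, "labels": {"app": name}},
        "spec": {
            "containers": [{
                "name": name,
                "image": image,
                "ports": [{"containerPort": port}],
                # Every key in the ConfigMap becomes an environment variable.
                "envFrom": [{"configMapRef": {"name": config_map}}],
                # Liveness failure restarts the container.
                "livenessProbe": {**http_check, "initialDelaySeconds": 10, "periodSeconds": 10},
                # Readiness failure removes the pod from Service endpoints.
                "readinessProbe": {**http_check, "periodSeconds": 5},
            }]
        },
    }


if __name__ == "__main__":
    # JSON is valid YAML, so the output can be piped to `kubectl apply -f -`.
    print(json.dumps(pod_with_probes("web", "nginx:1.27", 80, "web-config"), indent=2))
```

The two probes share a check here only for brevity. In practice they often differ, since readiness may depend on downstream services while liveness should not.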

Understanding Kubernetes Architecture and Components

To efficiently manage containerized applications in a distributed environment, it’s essential to understand the structure and key elements that make up the orchestration platform. A solid grasp of the underlying architecture enables you to navigate complex tasks like scaling, monitoring, and troubleshooting with ease. This section explores the primary components that form the foundation of a container orchestration system.

Core Components of the Cluster

The cluster is the central unit of the platform, consisting of several key components working together. These include the control plane (historically called the master node), which controls the overall state of the system, and the worker nodes, where containers and applications run. The architecture is designed to provide resilience, scalability, and ease of management.

- Control Plane Node: Hosts the components that manage the cluster’s state, typically the API server, scheduler, controller manager, and etcd.

- Worker Nodes: The machines that run containerized applications and provide compute resources for running workloads.

- API Server: The front end of the control plane. Users and every other component, including the kubelet on each worker node, communicate through it, and it persists the cluster’s state in etcd.

- Controller Manager: Ensures the desired state of the system by constantly monitoring and making adjustments as needed.

- Scheduler: Determines where workloads should be placed based on available resources and other scheduling criteria.
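
The scheduler's two phases (filter out nodes that cannot fit the pod, then score the rest) can be sketched in a few lines. This is a deliberately simplified model, not the real scheduler. The `Node` class and the "most CPU left" scoring rule are assumptions made for illustration.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Node:
    name: str
    free_cpu_m: int   # unreserved CPU in millicores
    free_mem_mi: int  # unreserved memory in MiB


def pick_node(nodes: List[Node], cpu_m: int, mem_mi: int) -> Optional[str]:
    """Filter nodes that fit the request, then prefer the one with the most CPU left over."""
    # Filtering phase: drop nodes that cannot satisfy the pod's requests.
    feasible = [n for n in nodes if n.free_cpu_m >= cpu_m and n.free_mem_mi >= mem_mi]
    if not feasible:
        return None  # in a real cluster the pod would stay Pending
    # Scoring phase (simplified): rank by remaining CPU, then remaining memory.
    best = max(feasible, key=lambda n: (n.free_cpu_m - cpu_m, n.free_mem_mi - mem_mi))
    return best.name
```

Returning `None` mirrors what an operator sees in practice: a pod stuck in `Pending` with a scheduling event explaining that no node had enough resources.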

Supporting Infrastructure

Besides the core components, several additional elements work together to provide vital functionality for cluster management. These components help in managing resources, ensuring security, and maintaining high availability of applications.

- etcd: A distributed key-value store that holds all of the cluster’s state and configuration data, ensuring consistency and availability.

- Cloud Controller Manager: Interfaces with cloud service providers to manage cloud-specific resources such as load balancers and storage volumes.

- DNS: Provides a reliable and scalable way to resolve service names to IP addresses within the cluster.

Understanding the architecture and how these components interact is crucial for troubleshooting, optimizing, and scaling a containerized system effectively. Each part of the system plays a vital role in ensuring that the platform runs smoothly and securely.
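
The controller pattern behind these components is a reconcile loop: compare the desired state with the observed state and act on the difference. A minimal sketch, assuming a hypothetical `reconcile` helper that only returns descriptions of the actions rather than calling the API server:

```python
from typing import List


def reconcile(desired: int, running: List[str]) -> List[str]:
    """Return the actions needed to move from the observed pods to the desired replica count."""
    diff = desired - len(running)
    if diff > 0:
        # Too few pods: create the missing ones.
        return [f"create pod #{i + 1}" for i in range(diff)]
    if diff < 0:
        # Too many pods: remove the surplus from the end of the observed list.
        return [f"delete {name}" for name in running[desired:]]
    return []  # observed state already matches desired state


if __name__ == "__main__":
    print(reconcile(3, ["web-a"]))
    print(reconcile(1, ["web-a", "web-b", "web-c"]))
```

Real controllers run this comparison continuously and react to watch events from the API server. The sketch shows only a single pass.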

Hands-On Practice with Pod Management

To effectively manage containerized workloads, it’s essential to gain hands-on experience with pod management. Pods are the smallest deployable units in container orchestration, and mastering their configuration, scaling, and lifecycle management is crucial for running applications in a cloud-native environment. This section covers the core tasks involved in managing pods, from deployment to scaling and troubleshooting.

Basic Pod Operations

The first step in pod management is understanding how to create, monitor, and modify pods. These operations allow you to control the state of your applications, ensuring that they are deployed correctly and can scale according to demand. Below is a summary of essential pod operations:

| Operation | Command | Description |
|---|---|---|
| Pod Creation | `kubectl run` | Deploy a pod from a specified image to the cluster. |
| Pod Status | `kubectl get pods` | Check the current state of pods, including whether they are running or pending. |
| Pod Deletion | `kubectl delete pod` | Remove a specific pod from the cluster. |
| Pod Logs | `kubectl logs` | View the logs of a running pod to diagnose issues. |
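
For practice scripts, the four operations above can be wrapped as argument lists. The sketch below only builds and prints the commands. The helper names and the default `--tail` value are assumptions, and nothing runs against a cluster unless you pass a list to `subprocess.run`.

```python
import shlex
from typing import List


def run_pod(name: str, image: str) -> List[str]:
    """Create a single pod from an image."""
    return ["kubectl", "run", name, f"--image={image}"]


def get_pods(namespace: str = "default") -> List[str]:
    """List pods with node and IP columns."""
    return ["kubectl", "get", "pods", "--namespace", namespace, "-o", "wide"]


def delete_pod(name: str) -> List[str]:
    """Delete one pod by name."""
    return ["kubectl", "delete", "pod", name]


def pod_logs(name: str, tail: int = 50) -> List[str]:
    """Show the last `tail` log lines of a pod."""
    return ["kubectl", "logs", name, f"--tail={tail}"]


if __name__ == "__main__":
    for cmd in (run_pod("web", "nginx"), get_pods(), pod_logs("web"), delete_pod("web")):
        # To execute for real: subprocess.run(cmd, check=True)
        print(shlex.join(cmd))
```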

Scaling and Managing Pods

Once you are comfortable with basic pod operations, scaling and managing pods becomes the next essential skill. Scaling allows you to meet changing application demands by increasing or decreasing the number of pod replicas. Proper resource allocation ensures that applications run efficiently and remain highly available. Here are key tasks related to scaling:

- Scaling Pods: Increase or decrease the number of pod replicas using commands like `kubectl scale deployment`.

- Resource Requests and Limits: Set resource requests (CPU, memory) to ensure efficient resource utilization and prevent resource contention.

- Rolling Updates: Perform rolling updates to upgrade pods without downtime, using `kubectl rollout` commands.
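
The scaling tasks above map onto fields of a Deployment. The following sketch builds one as a Python dictionary. The resource values, the `maxSurge`/`maxUnavailable` settings, and the helper name `deployment` are illustrative assumptions, while the field names come from the `apps/v1` API.

```python
import json


def deployment(name: str, image: str, replicas: int) -> dict:
    """Build a Deployment with a rolling-update strategy and per-container resources."""
    labels = {"app": name}
    return {
        "apiVersion": "apps/v1",
        "kind": "Deployment",
        "metadata": {"name": name},
        "spec": {
            "replicas": replicas,
            # The selector must match the pod template labels.
            "selector": {"matchLabels": labels},
            "strategy": {
                "type": "RollingUpdate",
                # Add one new pod at a time and never drop below the desired count.
                "rollingUpdate": {"maxSurge": 1, "maxUnavailable": 0},
            },
            "template": {
                "metadata": {"labels": labels},
                "spec": {"containers": [{
                    "name": name,
                    "image": image,
                    "resources": {
                        "requests": {"cpu": "250m", "memory": "128Mi"},
                        "limits": {"cpu": "500m", "memory": "256Mi"},
                    },
                }]},
            },
        },
    }


if __name__ == "__main__":
    print(json.dumps(deployment("web", "nginx:1.27", 3), indent=2))
```

Once applied, `kubectl scale deployment web --replicas=5` changes the replica count, `kubectl rollout status deployment/web` follows an update, and `kubectl rollout undo deployment/web` reverts to the previous revision.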

By continuously practicing pod management tasks, you will become proficient in deploying, scaling, and maintaining containers in a production environment. This hands-on experience is vital to understanding how orchestration platforms function and enables you to troubleshoot problems effectively.

Deploying and Scaling Applications Effectively

Effective deployment and scaling of applications are essential for maintaining performance and reliability in a cloud-native environment. Whether you’re managing microservices or monolithic applications, understanding how to deploy, scale, and manage resources efficiently ensures that your applications remain responsive and meet user demands. This section focuses on the best practices for deploying applications and scaling them to handle varying workloads.

To deploy applications effectively, follow these core steps:

- Containerization: Ensure that your application is packaged in containers for portability and easy management across different environments.

- Use of Deployment Configurations: Create deployment configurations to define how applications are released and updated within the cluster.

- Rolling Updates: Implement rolling updates to deploy new versions of applications without downtime, ensuring that there’s no service interruption during the upgrade process.

- Health Checks: Configure health checks to automatically monitor application performance and remove or replace unhealthy instances when necessary.

Scaling applications involves adjusting the number of instances or resources allocated based on demand. Here are some strategies for effective scaling:

- Horizontal Scaling: Increase the number of replicas to handle higher traffic loads, ensuring availability and load distribution across multiple instances.

- Vertical Scaling: Adjust the resources allocated to each instance, such as CPU or memory, to handle resource-intensive workloads more effectively.

- Auto-scaling: Configure auto-scaling policies to automatically adjust resources based on real-time traffic or load metrics, allowing the system to scale up or down as needed.

- Load Balancing: Ensure proper load balancing to distribute traffic evenly across application instances, preventing any single instance from becoming overwhelmed.
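
Auto-scaling in Kubernetes is usually handled by the Horizontal Pod Autoscaler, whose documented core rule is `desiredReplicas = ceil(currentReplicas * currentMetric / targetMetric)`. The sketch below implements only that rule plus min/max clamping. The real controller also applies a tolerance band and stabilization windows, which are omitted here, and the default bounds are assumptions.

```python
import math


def desired_replicas(current: int, current_metric: float, target_metric: float,
                     min_replicas: int = 1, max_replicas: int = 10) -> int:
    """Apply the HPA scaling rule, clamped to [min_replicas, max_replicas]."""
    raw = math.ceil(current * current_metric / target_metric)
    return max(min_replicas, min(max_replicas, raw))


if __name__ == "__main__":
    # 4 replicas at 90% average CPU with a 60% target
    print(desired_replicas(4, 90, 60))
```

With four replicas running at 90% average CPU against a 60% target, the rule asks for six replicas. At 30% it asks for two.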

By deploying applications with these best practices and scaling them efficiently, you ensure that your applications are not only robust and resilient but also optimized for performance in dynamic environments.

Networking and Communication in Kubernetes

In a distributed environment, efficient networking and communication between services are crucial for ensuring that applications run smoothly and scale effectively. Understanding how networking functions within an orchestrated system is key to maintaining connectivity, managing traffic, and securing communication channels. This section will explore the fundamental aspects of networking and how components within the system communicate with one another.

Cluster Networking

The first step in understanding networking within an orchestrated platform is to grasp the concept of cluster networking. A cluster consists of multiple nodes that need to communicate with each other. Ensuring seamless communication between the nodes, regardless of their location, is fundamental to the cluster’s operation.

- Pod-to-Pod Communication: Pods within the same cluster can communicate with one another over an internal network, making it possible for services to discover and interact with each other directly.

- Service Discovery: Services within the cluster can be exposed via service names, allowing pods to communicate with one another by name rather than IP address, which can change dynamically.

- Network Policies: Network policies are used to control the flow of traffic between pods, limiting communication between services based on specific rules and security needs.
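
Service discovery by name follows a predictable DNS pattern, `<service>.<namespace>.svc.<cluster-domain>`. The sketch below assumes the common default cluster domain `cluster.local`, which your cluster may override. The helper names are hypothetical.

```python
from typing import List


def service_fqdn(service: str, namespace: str = "default",
                 cluster_domain: str = "cluster.local") -> str:
    """Fully qualified DNS name of a Service."""
    return f"{service}.{namespace}.svc.{cluster_domain}"


def resolvable_names(service: str, namespace: str, caller_namespace: str) -> List[str]:
    """Names a pod in caller_namespace can use to reach the Service, shortest first."""
    names = [f"{service}.{namespace}", service_fqdn(service, namespace)]
    if caller_namespace == namespace:
        # The bare name only resolves from within the Service's own namespace.
        names.insert(0, service)
    return names


if __name__ == "__main__":
    print(resolvable_names("db", "prod", "prod"))
    print(resolvable_names("db", "prod", "dev"))
```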

External Communication and Load Balancing

For applications that need to interact with the outside world, it’s essential to expose them through external endpoints. Load balancing and ingress controllers play a significant role in directing external traffic to the correct services within the cluster.

- Service Exposure: Services can be exposed to external traffic using the NodePort or LoadBalancer types, depending on the specific requirements for external access. The default type, ClusterIP, is reachable only from inside the cluster.

- Ingress Controllers: Ingress controllers manage external access to services, often providing features like SSL termination, URL routing, and load balancing.

- Load Balancing: Load balancers ensure that traffic is distributed evenly across available service instances, preventing any single instance from becoming overwhelmed.
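
To make the Service types concrete, here is a small manifest builder with basic validation. It is a sketch under stated assumptions: the helper name `service` is hypothetical, and the 30000-32767 check reflects the default NodePort range, which a cluster administrator can change.

```python
from typing import Optional

EXTERNAL_TYPES = {"NodePort", "LoadBalancer"}


def service(name: str, port: int, target_port: int,
            svc_type: str = "ClusterIP", node_port: Optional[int] = None) -> dict:
    """Build a Service manifest that selects pods labelled app=<name>."""
    if svc_type not in EXTERNAL_TYPES | {"ClusterIP"}:
        raise ValueError(f"unsupported Service type: {svc_type}")
    port_spec = {"port": port, "targetPort": target_port, "protocol": "TCP"}
    if node_port is not None:
        if svc_type not in EXTERNAL_TYPES:
            raise ValueError("nodePort requires type NodePort or LoadBalancer")
        if not 30000 <= node_port <= 32767:  # default range, configurable per cluster
            raise ValueError("nodePort outside the default 30000-32767 range")
        port_spec["nodePort"] = node_port
    return {
        "apiVersion": "v1",
        "kind": "Service",
        "metadata": {"name": name},
        "spec": {"type": svc_type, "selector": {"app": name}, "ports": [port_spec]},
    }
```

Leaving `node_port` unset on a NodePort Service lets the cluster pick a free port from its range, which is usually the safer choice.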

Effective networking and communication strategies are essential for creating resilient and scalable applications in a distributed system. By implementing best practices in network design and managing traffic efficiently, you can ensure that your system operates smoothly and securely at scale.

Configuring and Managing Storage Volumes

Effective storage management is a critical aspect of running containerized applications, particularly when dealing with data persistence. Whether applications need temporary scratch space or long-term data storage, configuring volumes correctly ensures that data is retained across container restarts or failures. This section will focus on the principles of managing storage volumes, from setting up basic storage to ensuring data availability and security.

Types of Storage Volumes

When configuring storage, it’s important to understand the different types of volumes available. Each type serves a distinct purpose and can be used in various scenarios based on performance, reliability, and scalability needs.

- EmptyDir: A temporary volume used by containers within the same pod. Data stored in an EmptyDir is lost when the pod is terminated.

- HostPath: Provides access to a directory on the node’s filesystem. This is useful for testing or development but not recommended for production due to potential data loss.

- Persistent Volumes (PVs): A resource in the cluster that allows for durable storage. Persistent Volumes are independent of the lifecycle of a pod and can be reused across different pods.

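- Persistent Volume Claims (PVCs): A request for storage resources. A PVC binds to a matching Persistent Volume or, when a storage class is configured, triggers dynamic provisioning of one, so pods can consume storage without knowing the backing details.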

- Cloud Volumes: External cloud storage solutions (e.g., AWS EBS, Google Persistent Disk) that can be used as persistent storage across nodes in a cloud environment.

Managing Volumes and Access

Once the appropriate storage volume is selected, managing access and configuring it properly are crucial for ensuring consistent data performance and availability. The following steps outline the best practices for managing volumes and ensuring proper access control:

- Volume Mounting: Mount volumes into containers to provide them with access to storage. Volumes can be mounted at specific paths in the container’s filesystem.

- Dynamic Provisioning: Set up storage classes to automatically provision persistent volumes when a PVC is created. This allows for the flexible allocation of storage resources.

- Access Modes: Configure access modes for volumes, such as ReadWriteOnce (RWO), ReadOnlyMany (ROX), or ReadWriteMany (RWX), based on how many nodes need to mount the volume simultaneously and whether they need write access.

- Volume Expansion: Some storage volumes can be expanded to accommodate increasing data storage needs. Ensure that your storage class supports dynamic resizing if required.

- Backup and Recovery: Implement backup and recovery strategies to safeguard against data loss and ensure that data is protected during upgrades or failures.
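
The claim-and-mount workflow above can be sketched as two manifests: a PVC that requests storage from a storage class, and a Pod that mounts the claim. The helper names and the volume name `data` are assumptions. The storage class you pass in must exist in your cluster.

```python
ACCESS_MODES = {"ReadWriteOnce", "ReadOnlyMany", "ReadWriteMany", "ReadWriteOncePod"}


def pvc(name: str, size: str, storage_class: str, access_mode: str = "ReadWriteOnce") -> dict:
    """Build a PersistentVolumeClaim that relies on dynamic provisioning."""
    if access_mode not in ACCESS_MODES:
        raise ValueError(f"unknown access mode: {access_mode}")
    return {
        "apiVersion": "v1",
        "kind": "PersistentVolumeClaim",
        "metadata": {"name": name},
        "spec": {
            "accessModes": [access_mode],
            "storageClassName": storage_class,
            "resources": {"requests": {"storage": size}},
        },
    }


def pod_using_claim(pod_name: str, image: str, claim_name: str, mount_path: str) -> dict:
    """Build a Pod that mounts the claim at mount_path."""
    return {
        "apiVersion": "v1",
        "kind": "Pod",
        "metadata": {"name": pod_name},
        "spec": {
            "containers": [{
                "name": pod_name,
                "image": image,
                # The mount name must match the volume name below.
                "volumeMounts": [{"name": "data", "mountPath": mount_path}],
            }],
            "volumes": [{"name": "data", "persistentVolumeClaim": {"claimName": claim_name}}],
        },
    }
```

After applying both, `kubectl get pvc` should show the claim as `Bound` once a volume has been provisioned.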

Properly managing storage volumes ensures data persistence, improves application stability, and allows for easier scaling of resources. By following these best practices, you help your applications handle critical data while maintaining flexibility and resilience.

Security Best Practices for Kubernetes

Securing a container orchestration platform is essential to protect sensitive data and ensure the overall integrity of applications. Given the complexity of distributed systems and the dynamic nature of containers, applying robust security practices is crucial. This section explores essential strategies to safeguard the system, prevent vulnerabilities, and manage access effectively across your environment.

Securing Access and Authentication

Access management is a cornerstone of security. Ensuring that only authorized entities can interact with the platform minimizes the risk of security breaches. Implementing the right authentication and access control mechanisms is vital for securing both the control plane and worker nodes.

- Role-Based Access Control (RBAC): Implement RBAC policies to ensure that only authorized users can access or modify specific resources within the system. Define roles based on the principle of least privilege, limiting user access to only necessary resources.

- Authentication Methods: Use strong authentication methods such as multi-factor authentication (MFA) or certificate-based authentication to verify the identity of users or services.

- API Server Authentication: Secure the API server by using strong authentication mechanisms, such as X.509 client certificates, OpenID Connect tokens, or service account tokens, to manage communication between components securely.
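
Least-privilege RBAC usually comes down to a Role and a RoleBinding. The sketch below grants a service account read-only access to pods in one namespace. The names `pod-reader`, `read_only_role`, and `bind_role` are illustrative assumptions, and the manifest fields follow the `rbac.authorization.k8s.io/v1` API.

```python
RBAC_GROUP = "rbac.authorization.k8s.io"


def read_only_role(namespace: str, name: str = "pod-reader") -> dict:
    """Role that can only read pods and their logs in one namespace."""
    return {
        "apiVersion": f"{RBAC_GROUP}/v1",
        "kind": "Role",
        "metadata": {"name": name, "namespace": namespace},
        "rules": [{
            "apiGroups": [""],  # "" is the core API group
            "resources": ["pods", "pods/log"],
            "verbs": ["get", "list", "watch"],
        }],
    }


def bind_role(namespace: str, role: str, service_account: str) -> dict:
    """RoleBinding that grants the Role to a service account in the same namespace."""
    return {
        "apiVersion": f"{RBAC_GROUP}/v1",
        "kind": "RoleBinding",
        "metadata": {"name": f"{role}-{service_account}", "namespace": namespace},
        "subjects": [{"kind": "ServiceAccount", "name": service_account, "namespace": namespace}],
        "roleRef": {"apiGroup": RBAC_GROUP, "kind": "Role", "name": role},
    }
```

A quick way to verify the result is `kubectl auth can-i list pods --as=system:serviceaccount:<namespace>:<name> -n <namespace>`, which should answer `yes` for reads and `no` for verbs such as `delete`.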

Network Security and Communication

Effective network security is key to protecting the communication between different components and services. Implementing network segmentation, encrypting traffic, and enforcing security policies can prevent unauthorized access and reduce the attack surface.

- Network Policies: Define network policies that restrict traffic between services, ensuring that only necessary communication is allowed. By isolating services, you can prevent lateral movement in case of a breach.

- Encryption in Transit: Ensure that all communication between nodes and services is encrypted. Use Transport Layer Security (TLS) to protect data integrity and confidentiality while in transit.

- Service Mesh: Implement a service mesh to control traffic flow, apply security policies, and manage secure service-to-service communication within the platform.
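
A network policy that restricts traffic between services might look like the sketch below, which allows ingress to one application only from pods carrying a specific label. The `app` label convention and the helper name `allow_from` are assumptions. Enforcement also depends on the cluster's network plugin supporting NetworkPolicy.

```python
def allow_from(namespace: str, target_app: str, client_app: str, port: int) -> dict:
    """NetworkPolicy: only pods labelled app=<client_app> may reach app=<target_app> on port."""
    return {
        "apiVersion": "networking.k8s.io/v1",
        "kind": "NetworkPolicy",
        "metadata": {"name": f"allow-{client_app}-to-{target_app}", "namespace": namespace},
        "spec": {
            # Pods the policy applies to.
            "podSelector": {"matchLabels": {"app": target_app}},
            "policyTypes": ["Ingress"],
            "ingress": [{
                # Permitted sources; all other ingress to the selected pods is denied.
                "from": [{"podSelector": {"matchLabels": {"app": client_app}}}],
                "ports": [{"protocol": "TCP", "port": port}],
            }],
        },
    }
```

Once any policy selects a pod for ingress, traffic not explicitly allowed is dropped, which is what limits lateral movement after a breach.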

Container and Pod Security

Securing containers and pods from the inside out is critical for maintaining the integrity of the applications running on the system. Proper configuration of security policies, limits, and restrictions can reduce the risk of compromise within containers and pods.

- Security Context: Define security contexts for pods to control privileges such as user IDs, group IDs, and file system access. Ensure that containers run with the least privileges necessary to perform their tasks.

- Image Scanning: Regularly scan container images for vulnerabilities using automated tools to ensure that they do not contain known security flaws or malware.

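- Pod Security Standards: Enforce security standards with the built-in Pod Security Admission controller, which replaced PodSecurityPolicy (removed in Kubernetes v1.25). The standards cover restrictions such as limiting privileged access, disallowing host network access, or restricting the use of certain types of volumes.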
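
Pod-level hardening can be sketched as a container `securityContext` plus a namespace label that asks the built-in Pod Security Admission controller to enforce the `restricted` standard. The UID value and helper names are assumptions chosen for illustration.

```python
def hardened_container(name: str, image: str, uid: int = 10001) -> dict:
    """Container spec that runs as a non-root user with minimal privileges."""
    return {
        "name": name,
        "image": image,
        "securityContext": {
            "runAsNonRoot": True,
            "runAsUser": uid,  # arbitrary non-zero UID for the example
            "allowPrivilegeEscalation": False,
            "readOnlyRootFilesystem": True,
            "capabilities": {"drop": ["ALL"]},
            "seccompProfile": {"type": "RuntimeDefault"},
        },
    }


def restricted_namespace(name: str) -> dict:
    """Namespace whose pods must satisfy the 'restricted' Pod Security Standard."""
    return {
        "apiVersion": "v1",
        "kind": "Namespace",
        "metadata": {
            "name": name,
            "labels": {"pod-security.kubernetes.io/enforce": "restricted"},
        },
    }
```

An image that expects to write to its root filesystem will fail with `readOnlyRootFilesystem` set. Mounting an `emptyDir` at the writable path is the usual workaround.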

Monitoring and Auditing

Continuous monitoring and auditing are essential to identify and respond to potential security incidents. By implementing logging, monitoring, and alerting systems, you can detect unusual behavior and act swiftly to mitigate risks.

- Audit Logs: Enable auditing to track all actions performed in the cluster, providing a detailed record of who did what and when. These logs help to detect unauthorized actions and facilitate incident investigations.

- Security Monitoring: Use security monitoring tools to continuously monitor the system for signs of unusual activity or vulnerabilities. Tools such as Falco, Sysdig, or Prometheus can be valuable for this purpose.

- Regular Updates: Regularly update the system to patch vulnerabilities.

Helm and Managing Kubernetes Deployments

Managing containerized applications at scale requires a streamlined approach to deployment, configuration, and updates. One powerful tool to automate and simplify these tasks is Helm, a package manager for Kubernetes that helps define, install, and upgrade applications in a consistent manner. By using Helm, operators can manage application deployments effectively, improving reproducibility and reducing configuration drift across environments.

Helm allows developers and system administrators to define complex applications with reusable templates, making it easier to deploy, manage, and version applications within a containerized environment. Through simple commands, users can install, upgrade, and roll back deployments, streamlining operational workflows and keeping applications deployed with the correct configurations.

One of the key benefits of Helm is its ability to package applications into reusable charts, which are easy to share, update, and maintain. These charts contain all necessary configuration details, allowing users to deploy applications with minimal setup. By using this approach, scaling applications, rolling back updates, or ensuring consistency across multiple clusters becomes a much more manageable task.
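
The install, upgrade, and rollback cycle described above corresponds to three Helm CLI subcommands. The sketch below builds the argument lists without running them. The release name, chart path, and value keys used in the demo are hypothetical.

```python
import shlex
from typing import Dict, List, Optional


def helm_install(release: str, chart: str, namespace: str,
                 values_file: Optional[str] = None) -> List[str]:
    """Install a chart as a named release, creating the namespace if needed."""
    cmd = ["helm", "install", release, chart, "--namespace", namespace, "--create-namespace"]
    if values_file:
        cmd += ["--values", values_file]
    return cmd


def helm_upgrade(release: str, chart: str, namespace: str,
                 overrides: Dict[str, object]) -> List[str]:
    """Upgrade a release, overriding individual chart values with --set."""
    cmd = ["helm", "upgrade", release, chart, "--namespace", namespace]
    for key, value in sorted(overrides.items()):
        cmd += ["--set", f"{key}={value}"]
    return cmd


def helm_rollback(release: str, revision: int, namespace: str) -> List[str]:
    """Roll a release back to an earlier revision number."""
    return ["helm", "rollback", release, str(revision), "--namespace", namespace]


if __name__ == "__main__":
    print(shlex.join(helm_install("web", "./mychart", "prod", "values-prod.yaml")))
    print(shlex.join(helm_upgrade("web", "./mychart", "prod", {"replicaCount": 3})))
    print(shlex.join(helm_rollback("web", 1, "prod")))
```

`helm history <release>` lists the revision numbers that `helm rollback` accepts.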

Problem Solving with Kubernetes Logs

Diagnosing and troubleshooting issues in containerized environments often begins with logs. These logs provide valuable insights into the behavior of applications, services, and system components. By analyzing logs effectively, administrators and developers can identify problems, trace errors, and improve the overall performance of the system. Logs act as the first line of defense when it comes to resolving issues that might arise during application deployment or runtime.

Accessing and interpreting logs from various sources, including pods, nodes, and containers, is crucial for understanding system health and debugging issues. Whether it’s an unexpected crash, poor performance, or communication failures between services, logs can provide detailed information about the root cause. Having a systematic approach to log management ensures that critical information is captured and available for analysis when needed.

In addition to local log files, centralized log management solutions can aggregate and analyze logs from across the system, providing a comprehensive view of the environment. This makes it easier to spot patterns, detect anomalies, and identify potential issues early on. By leveraging the power of log data, teams can troubleshoot problems faster and ensure that their applications run smoothly and efficiently.
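
A first pass over captured log output often just counts severity levels and finds the earliest error. The sketch below assumes the application writes a level keyword such as `INFO` or `ERROR` on each line, which is a convention and not something Kubernetes enforces. The input would typically come from `kubectl logs <pod>` or, for a crashed container, `kubectl logs <pod> --previous`.

```python
import re
from collections import Counter
from typing import Optional

# Assumed log convention: each line carries one of these level keywords.
LEVEL = re.compile(r"\b(DEBUG|INFO|WARN(?:ING)?|ERROR|FATAL)\b")


def summarize(log_text: str) -> Counter:
    """Count log lines per severity level, treating WARNING and WARN as the same level."""
    counts: Counter = Counter()
    for line in log_text.splitlines():
        match = LEVEL.search(line)
        if match:
            level = "WARN" if match.group(1).startswith("WARN") else match.group(1)
            counts[level] += 1
    return counts


def first_error(log_text: str) -> Optional[str]:
    """Return the first line whose level is ERROR or FATAL, if any."""
    for line in log_text.splitlines():
        match = LEVEL.search(line)
        if match and match.group(1) in ("ERROR", "FATAL"):
            return line
    return None
```

The first error is usually more informative than the last, because later errors are often consequences of the original failure.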

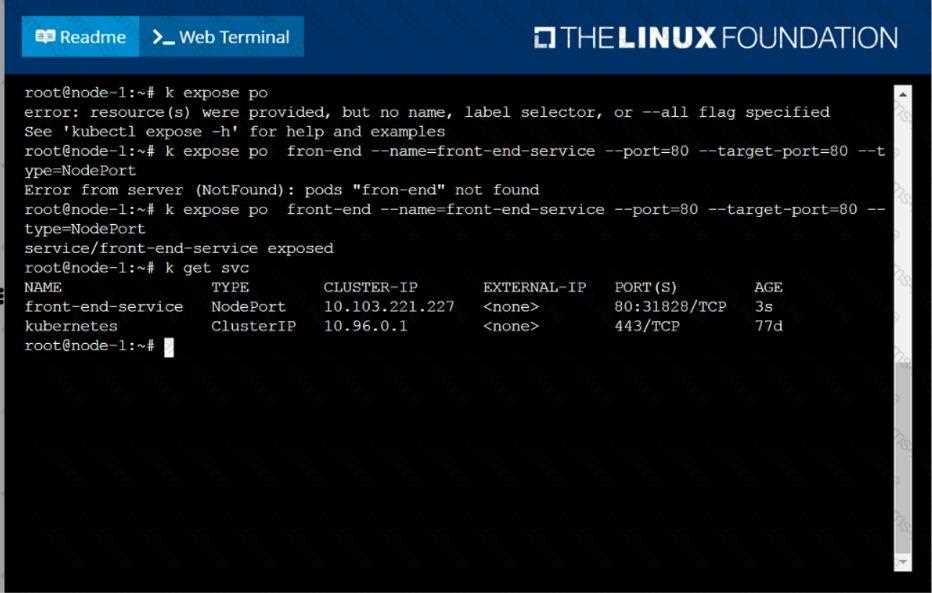

Essential Kubernetes Commands and Tools

When working with container orchestration platforms, having the right set of commands and tools at your disposal is essential for managing deployments, scaling applications, and ensuring smooth operations. These utilities provide the functionality needed to interact with the system, retrieve information, and make changes quickly. A deep understanding of these tools allows for more efficient management and troubleshooting of containerized environments.

Some of the most commonly used tools include the command-line interface (CLI) that facilitates interaction with clusters, as well as various utilities for monitoring, logging, and managing configurations. These tools offer various options for querying system status, controlling deployments, inspecting logs, and modifying system settings. Mastering these commands not only improves productivity but also enhances the ability to diagnose issues and make updates swiftly.

Additionally, automating common tasks using these utilities can significantly reduce the complexity of operations, enabling smoother workflows and better system reliability. Whether you’re managing configurations, scaling workloads, or troubleshooting application issues, knowing which commands to use and when to use them can make a significant difference in the overall efficiency of managing containerized environments.

Managing Namespaces and Resources

In containerized environments, effectively organizing and allocating resources is crucial for maintaining system performance and scalability. By segmenting the system into logical units, users can isolate workloads, ensure efficient resource usage, and simplify management tasks. The practice of organizing applications and services into isolated groups helps prevent conflicts, optimize resource allocation, and enhance overall operational efficiency.

One of the key concepts in this environment is the use of namespaces, which act as virtual clusters within a larger system. They provide a mechanism for grouping resources and controlling access to those resources, allowing administrators to apply specific policies or limits. Managing these namespaces properly ensures that each part of the system operates within its required limits and has access only to the resources it needs.

Optimizing Resource Usage

Resource management is another critical aspect. Setting up proper resource quotas and limits ensures that no individual workload consumes excessive amounts of CPU or memory, potentially degrading the performance of other services. By defining limits for CPU, memory, and storage, administrators can prevent resource hogging and maintain a balanced environment. This also helps in optimizing costs, particularly when dealing with cloud-based infrastructures.
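
Namespace-level limits are expressed with two objects: a ResourceQuota that caps the namespace total and a LimitRange that supplies per-container defaults. The sketch below builds both. The object names and default values are assumptions, and the fields follow the core `v1` API.

```python
def resource_quota(namespace: str, cpu: str, memory: str, pods: int) -> dict:
    """Cap the total CPU/memory requests and the pod count in a namespace."""
    return {
        "apiVersion": "v1",
        "kind": "ResourceQuota",
        "metadata": {"name": "compute-quota", "namespace": namespace},
        "spec": {"hard": {
            "requests.cpu": cpu,
            "requests.memory": memory,
            "pods": str(pods),
        }},
    }


def limit_range(namespace: str) -> dict:
    """Give containers default requests and limits when they declare none."""
    return {
        "apiVersion": "v1",
        "kind": "LimitRange",
        "metadata": {"name": "container-defaults", "namespace": namespace},
        "spec": {"limits": [{
            "type": "Container",
            "defaultRequest": {"cpu": "100m", "memory": "128Mi"},
            "default": {"cpu": "500m", "memory": "256Mi"},  # default limits
        }]},
    }
```

The two work well together: once a quota on `requests.cpu` exists, pods without CPU requests are rejected, and the LimitRange defaults keep such pods admissible.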

Efficient Allocation of Resources

Additionally, using tools to monitor the usage of resources within namespaces allows teams to track consumption patterns, identify inefficiencies, and adjust resource allocation as needed. Whether it’s scaling up resources for high-demand applications or scaling down idle ones, proper resource allocation ensures that the system operates efficiently and cost-effectively.

Cluster Maintenance and Troubleshooting

Maintaining a healthy cluster and ensuring its continuous performance are fundamental aspects of managing containerized environments. Regular monitoring, updating, and troubleshooting are essential to prevent downtime and resolve issues before they escalate. Efficient maintenance ensures that the system remains stable, secure, and scalable while minimizing the risk of disruptions to services and applications.

Cluster maintenance includes tasks such as updating software, managing nodes, checking the health of services, and ensuring that configurations remain optimal. Effective troubleshooting involves identifying and resolving issues related to resource allocation, networking, or application failures. Being able to quickly diagnose and address problems ensures minimal downtime and consistent availability.

Key Maintenance Practices

To keep the cluster running smoothly, it’s vital to regularly check the system’s health using monitoring tools. This includes tracking the status of nodes, inspecting logs for errors, and reviewing the performance metrics of the system. It’s also important to implement rolling updates to ensure that the cluster’s components stay up to date without affecting running services.

Troubleshooting Common Issues

When problems arise, having a structured approach to troubleshooting can help quickly pinpoint the root cause. Key areas to focus on include:

- Resource Allocation: Ensure that applications have the necessary CPU, memory, and storage resources.

- Networking: Check for issues with service discovery or communication between components.

- Pod Failures: Investigate pod crashes, restarts, or liveness/readiness probe failures.

Utilizing diagnostic tools such as logs, events, and command-line queries can significantly expedite troubleshooting efforts, ensuring that problems are resolved promptly.
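
A structured approach can be as simple as a lookup from the status shown by `kubectl get pods` to the next command worth running. The mapping below is a starting point based on common practice, not an exhaustive or official decision tree. The helper name `next_step` is hypothetical.

```python
# Status or reason string -> suggested next diagnostic command.
NEXT_STEP = {
    "Pending": "kubectl describe pod {pod}  # look for scheduling events",
    "ImagePullBackOff": "kubectl describe pod {pod}  # check image name and pull secrets",
    "ErrImagePull": "kubectl describe pod {pod}  # check image name and pull secrets",
    "CrashLoopBackOff": "kubectl logs {pod} --previous",
    "OOMKilled": "kubectl describe pod {pod}  # compare memory limit with actual usage",
}

# Fallback when the status is not recognized: review recent cluster events.
DEFAULT_STEP = "kubectl get events --sort-by=.metadata.creationTimestamp"


def next_step(pod: str, status: str) -> str:
    """Suggest the next diagnostic command for a pod in the given status."""
    return NEXT_STEP.get(status, DEFAULT_STEP).format(pod=pod)


if __name__ == "__main__":
    for status in ("Pending", "CrashLoopBackOff", "Unknown"):
        print(f"{status}: {next_step('web-1', status)}")
```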

Performance Tuning and Optimization Tips

Optimizing the performance of containerized environments is crucial to ensure applications run efficiently, scaling seamlessly with increased demands. Performance tuning involves identifying potential bottlenecks, enhancing resource utilization, and ensuring that both the infrastructure and applications are configured to achieve peak efficiency. By applying best practices, you can improve response times, reduce resource consumption, and maintain system stability under varying workloads.

To enhance overall performance, several strategies can be applied across different levels of the infrastructure, from network optimization to fine-tuning application configurations. Regular monitoring and adjustments based on metrics are essential to achieve continuous optimization. Additionally, balancing resource allocation and prioritizing critical workloads can ensure that performance remains stable even under high load.

Here are some key tips for optimizing performance:

- Resource Requests and Limits: Properly configure CPU and memory requests and limits to ensure containers get the resources they need while preventing over-provisioning and under-provisioning.

- Horizontal Scaling: Use horizontal scaling to add more instances of applications when needed, improving performance during traffic spikes.

- Efficient Load Balancing: Implement optimal load balancing strategies to distribute traffic evenly across services and prevent overload on individual nodes or pods.

- Storage Optimization: Use persistent storage volumes efficiently and monitor I/O performance to prevent latency issues during data access.

By following these best practices and continuously monitoring key performance indicators, you can ensure that the environment remains performant, responsive, and able to scale efficiently as demands increase.

Effective Preparation Strategies for the CKA Exam

Preparing for a certification test in a complex containerized environment requires a structured approach, blending practical hands-on experience with theoretical understanding. A comprehensive study plan, focused on both core concepts and advanced configurations, can significantly boost your readiness. Understanding the exam format and identifying key topics are essential to ensure that your preparation is focused and efficient. In addition, practicing real-world scenarios can help reinforce your knowledge and improve your confidence.

Effective preparation is not solely about memorizing commands or concepts but about gaining a deeper understanding of how everything works together in a production-grade environment. Below are some strategies that can help you excel:

Hands-on Practice

The best way to reinforce your knowledge is through practical experience. Set up your own environments and work through different tasks, focusing on various components such as networking, storage, scaling, and security. The more you practice, the easier it will be to navigate the actual test.

Study Resources

Utilize a variety of resources to expand your knowledge base. Official documentation, online courses, and books can provide valuable insights into complex topics. Forums and study groups also allow you to exchange knowledge with others and solve problems together, simulating real-world troubleshooting.

By focusing on practical skills, leveraging multiple study resources, and organizing your preparation, you’ll be better equipped to tackle challenges and succeed in achieving certification. Consistent practice, time management, and a deep dive into critical topics will significantly enhance your chances of success.