In order to succeed in your certification journey, it’s important to familiarize yourself with the core concepts and practical skills required. Understanding key topics and mastering essential tools will ensure you’re ready for any challenge that may arise during the assessment process.

Practical knowledge and the ability to apply it efficiently are the foundations of achieving a high score. By reviewing relevant subjects and practicing problem-solving techniques, you’ll build the confidence needed to excel. Focusing on common scenarios that appear in evaluations can significantly enhance your performance.

Strategic preparation involves not only theoretical understanding but also hands-on experience. Through dedicated study and practice, you can solidify your grasp of critical systems and operations, making your path to success clearer and more achievable.

Preparation for Certification Success

To achieve success in your upcoming evaluation, it is crucial to develop a comprehensive understanding of the key concepts and tools required for the assessment. The journey involves mastering essential practices, troubleshooting techniques, and theoretical knowledge that are frequently tested in such evaluations.

Focus on Core Skills

Start by reviewing the most common topics that tend to appear in tests. Concentrating on system management, user configuration, file handling, and security protocols will lay a strong foundation for your preparation. A good grasp of command-line operations and system processes will significantly improve your efficiency during the test.

Practical Experience and Mock Tests

Hands-on practice is essential to internalize the concepts you study. Engage in practical exercises, simulations, and mock scenarios that replicate real-world challenges. This will not only enhance your technical expertise but also help you feel more confident and comfortable when facing actual problems during the assessment.

Common Topics in Certification Assessments

In preparation for your upcoming certification, there are several core areas that are frequently covered during the evaluation process. Focusing on these essential subjects will greatly enhance your ability to navigate the test successfully. The majority of assessments center around practical knowledge of system operations, file management, and security protocols, all of which are vital for any IT-related role.

Understanding system processes, memory management, network configuration, and troubleshooting techniques is crucial. Familiarity with command-line tools, package management, and user and group management is also commonly tested. Mastery of these topics prepares you for a wide range of tasks that may arise in a professional environment.

Essential Commands for Certification Success

Mastering a set of core commands is key to achieving success in any technical evaluation. These commands are fundamental for managing systems, files, and processes efficiently. Understanding their proper usage will allow you to navigate tasks with confidence and speed, making it easier to handle complex challenges during your assessment.

System Management Commands

Managing system resources is a crucial aspect of any evaluation. Here are some essential commands you should be familiar with:

- top – Displays real-time system performance and process information.

- ps – Shows currently running processes on the system.

- df – Checks disk space usage across file systems.

- free – Displays memory usage statistics.

- uptime – Shows system uptime and load averages.
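As a rough illustration of how one of these commands can feed a simple check, the sketch below reads the usage percentage of the root filesystem from df. The helper name disk_use_percent and the 90 percent threshold are assumptions made for this example, and the parsing relies on the POSIX output format requested with -P.

```shell
# Report how full a filesystem is, using the POSIX output format of df.
# disk_use_percent is a hypothetical helper name, not a standard utility.
disk_use_percent() {
    # df -P prints one header line and one data line; field 5 is the capacity.
    df -P "$1" | awk 'NR == 2 { sub(/%/, "", $5); print $5 }'
}

usage=$(disk_use_percent /)
limit=90    # assumed alert threshold, adjust as needed

if [ "$usage" -ge "$limit" ]; then
    echo "warning: / is ${usage}% full"
else
    echo "/ is ${usage}% full"
fi
```

The same pattern works for any mount point passed as the argument.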

File and Directory Management

Efficient file handling is vital in many technical assessments. The following commands are essential for managing files and directories:

- ls – Lists the contents of a directory.

- cp – Copies files or directories.

- mv – Moves or renames files and directories.

- rm – Removes files or directories.

- chmod – Changes file permissions.

- chown – Changes file ownership.
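A minimal sketch of the copy, rename, list, and remove commands, run inside a scratch directory so that nothing important is touched. The file names are made up for the example.

```shell
# Work in a throwaway directory created just for this demonstration.
workdir=$(mktemp -d)
cd "$workdir" || exit 1

echo "draft" > notes.txt      # create a sample file (hypothetical name)
cp notes.txt notes.bak        # copy it
mv notes.txt report.txt       # rename it
ls -l                         # list the directory with permissions and owners
rm notes.bak                  # remove the copy

remaining=$(ls)
echo "$remaining"             # report.txt
```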

Understanding File System Structure

Grasping the organization of a computer’s file system is a fundamental skill for managing data and system operations. The structure defines how files are stored, accessed, and arranged in directories, allowing the system to efficiently organize large amounts of information. A solid understanding of this organization is essential for troubleshooting, configuring, and managing resources effectively.

Root Directory and Its Importance

The root directory is the foundation of the entire file system hierarchy. It is the starting point from which all other directories branch out. Everything within the system, including system files, configuration files, and user data, is contained within this structure.

- / – Represents the root directory itself.

- /bin – Contains essential command binaries.

- /etc – Stores system-wide configuration files.

Commonly Used Directories

Several directories are critical for both system administration and user tasks. Understanding their locations and purposes will help ensure that the system operates smoothly:

- /home – Contains personal user directories.

- /var – Stores variable files such as logs and caches.

- /usr – Contains user applications and utilities.

- /tmp – Holds temporary files created by the system and by applications.

Key Networking Concepts

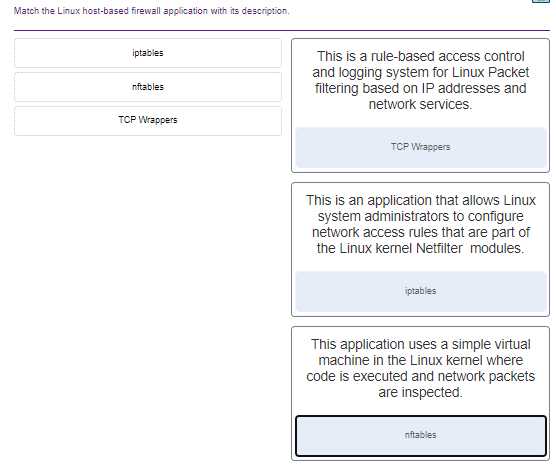

Understanding the fundamental networking concepts is essential for managing and troubleshooting communication between systems. These concepts lay the groundwork for connecting devices, configuring network interfaces, and ensuring secure data transfer. Mastery of networking tools and principles is indispensable for anyone aiming to work with modern systems.

Basic Network Configuration

Setting up and configuring network connections is a critical skill. Below are some key tasks you should be familiar with:

- IP Addressing – Configuring static and dynamic IP addresses for network interfaces.

- Subnetting – Dividing networks into smaller sub-networks to improve organization and efficiency.

- Routing – Defining how data packets are forwarded between systems on different networks.

- DNS Configuration – Setting up Domain Name System settings to resolve hostnames to IP addresses.
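Subnetting is easier to follow with a worked calculation. The sketch below derives the network address from an IPv4 address and a prefix length using shell arithmetic. The function name network_address is an assumption for this example, and the code expects octets without leading zeros.

```shell
# Compute the network address for an IPv4 address and prefix length.
# network_address is a hypothetical helper name, not a standard utility.
network_address() (
    ip=$1
    prefix=$2

    # Split the dotted quad into four octets.
    old_ifs=$IFS
    IFS=.
    set -- $ip
    IFS=$old_ifs
    a=$1; b=$2; c=$3; d=$4

    # Pack the octets into one 32-bit integer.
    addr=$(( (a << 24) | (b << 16) | (c << 8) | d ))

    # Build the netmask: the top $prefix bits set, the rest clear.
    mask=$(( (0xFFFFFFFF << (32 - prefix)) & 0xFFFFFFFF ))

    net=$(( addr & mask ))
    echo "$(( (net >> 24) & 255 )).$(( (net >> 16) & 255 )).$(( (net >> 8) & 255 )).$(( net & 255 ))"
)

network_address 192.168.10.77 26   # prints 192.168.10.64
```

A /26 prefix leaves 6 host bits, so each subnet spans 64 addresses and 192.168.10.77 falls in the block that starts at 192.168.10.64.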

Networking Tools and Utilities

Several utilities are indispensable when diagnosing network-related issues. Below are a few tools commonly used for troubleshooting and network configuration:

- ping – Tests network connectivity between devices.

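- ifconfig – Displays and configures network interfaces. On many current distributions it has been superseded by the ip command (for example, ip addr).

- netstat – Shows active connections and listening ports. Its modern counterpart is ss.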

- traceroute – Traces the path of packets between systems, helping to diagnose routing issues.

- iptables – Configures firewall rules for security and traffic filtering.

Important Shell Scripting Skills

Shell scripting is an essential skill for automating tasks and managing system operations efficiently. By writing scripts, users can automate repetitive processes, manage system configurations, and handle complex workflows without manual intervention. Mastering this skill allows for greater flexibility and control over system administration tasks.

Core Scripting Concepts

When creating effective shell scripts, there are several core concepts to understand. These elements form the foundation for any script you write, whether for automation, maintenance, or monitoring purposes. Below is a table outlining key concepts:

| Concept | Description |
|---|---|
| Variables | Used to store data that can be referenced or modified throughout the script. |
| Loops | Allow for the repetition of commands or operations over a set range or condition. |
| Conditionals | Used to execute commands based on specific conditions, such as if-else statements. |
| Functions | Reusable blocks of code that perform specific tasks within the script. |
| Input/Output | Handling data from files or user input and outputting results to files or the terminal. |
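The concepts in the table can be seen together in one short script. This is only a sketch: the function name classify, the threshold value, and the scratch file are assumptions chosen for illustration.

```shell
# Variables: store values for later use.
threshold=3
scratch=$(mktemp)

# Functions: a reusable block; classify is a hypothetical name.
classify() {
    # Conditionals: choose a branch based on a test.
    if [ "$1" -gt "$threshold" ]; then
        echo "$1 is above the threshold"
    else
        echo "$1 is at or below the threshold"
    fi
}

# Loops: repeat the same commands for each value in a list.
# Output: the loop's results are redirected to a file.
for n in 1 2 3 4 5; do
    classify "$n"
done > "$scratch"

# Input: read the file back line by line and count the lines.
count=0
while IFS= read -r line; do
    count=$((count + 1))
done < "$scratch"

echo "wrote $count lines to $scratch"
```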

Key Tools and Commands for Scripting

Several tools and commands are commonly used in shell scripting to enhance functionality and automate tasks effectively. Here are some of the most frequently used:

- echo – Prints output to the terminal or to a file.

- grep – Searches for patterns within files or input streams.

- awk – Processes and analyzes text files and data streams.

- sed – Performs text transformations and editing within files.

- crontab – Schedules tasks to be executed at specific intervals.
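To make the text-processing tools more concrete, here is a small sketch that runs grep, awk, and sed over the same sample file. The file contents (user, status code, path) are invented purely for the example.

```shell
# Build a small sample file; the names and values are made up for illustration.
sample=$(mktemp)
cat > "$sample" <<'EOF'
alice 200 /index.html
bob 404 /missing.html
carol 200 /about.html
dave 500 /api
EOF

# grep: keep only the lines containing " 200 ".
ok_lines=$(grep ' 200 ' "$sample")

# awk: print the first field of every line whose second field is not 200.
failed_users=$(awk '$2 != 200 { print $1 }' "$sample")

# sed: edit the stream; here a trailing ".html" is stripped from each line.
trimmed=$(sed 's/\.html$//' "$sample")

echo "$ok_lines"
echo "$failed_users"
echo "$trimmed"
```

grep selects whole lines, awk works field by field, and sed rewrites text as it passes through, which is why the three are so often combined in pipelines.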

Managing Users and Groups

Efficient user and group management is a key aspect of system administration. Properly managing access controls, permissions, and user accounts ensures that system resources are secure and accessible only to authorized individuals. By creating and maintaining users and groups, administrators can organize the system and enforce security policies effectively.

Creating and Modifying Users

To manage user accounts, administrators must be familiar with several commands used to create, modify, and delete users. Key tasks include assigning user IDs, setting passwords, and defining home directories. Below are some essential commands:

- useradd – Creates a new user account.

- usermod – Modifies an existing user account.

- userdel – Deletes a user account.

- passwd – Changes a user’s password.

Group Management

Groups are used to manage permissions for a set of users. By grouping users, administrators can assign permissions to the entire group rather than to individuals. This simplifies the management of access controls and ensures consistency. Some useful group management commands include:

- groupadd – Creates a new group.

- groupdel – Deletes a group.

- gpasswd – Administers /etc/group and /etc/gshadow: sets group passwords and adds or removes group members.

- vigr – Edits group configurations in the system’s group file.

Managing User Permissions

Once users and groups are created, it’s important to manage their access to system resources. Permissions control who can read, write, or execute files. The following commands and concepts are crucial for assigning and managing permissions:

- chmod – Changes file permissions for users, groups, and others.

- chown – Changes file ownership.

- chgrp – Changes group ownership of files.

System Monitoring and Performance Tools

Monitoring system performance is critical for ensuring smooth operation and identifying potential issues before they affect functionality. By leveraging various tools, administrators can track resource usage, identify bottlenecks, and optimize overall system efficiency. Understanding how to use these tools effectively can drastically improve system reliability and performance.

Key Monitoring Tools

There are several tools available for monitoring various aspects of system performance, from CPU and memory usage to disk I/O and network traffic. Below is a table highlighting some of the most commonly used tools:

| Tool | Description |
|---|---|
| top | Displays real-time system information, including CPU, memory, and process usage. |
| htop | A more advanced version of top with a user-friendly interface and additional features. |
| vmstat | Reports on virtual memory statistics, processes, CPU activity, and system performance. |
| iostat | Provides input/output statistics for devices and partitions, helping to monitor disk performance. |
| netstat | Displays active network connections and network statistics. |
| sar | Collects, reports, and saves system activity information, providing historical data analysis. |

Performance Optimization

Once you’ve identified performance issues, the next step is optimizing the system. Several tools help to tweak system parameters, improve resource utilization, and enhance performance:

- sysctl – Modifies kernel parameters to optimize system performance.

- nice – Starts a process with an adjusted scheduling priority (niceness) so that CPU time is shared more efficiently; renice changes the priority of a process that is already running.

- ionice – Modifies I/O scheduling to prioritize disk operations.

- ulimit – Sets limits on system resources for processes, such as file sizes and memory usage.

Package Management Essentials

Managing software packages is an integral part of maintaining and configuring any system. Proper package management allows users to easily install, update, and remove software, ensuring that the system remains secure and up to date. It also simplifies the management of dependencies and helps prevent potential issues related to conflicting software versions.

Key Package Management Commands

To effectively manage software on your system, you will need to use various package management commands. These commands are specific to the system’s package manager and allow administrators to handle software installations, removals, and updates. Below are some common commands:

- apt-get – A command-line tool used to handle packages in Debian-based systems, including installing and updating software.

- yum – Used for managing packages in Red Hat-based distributions, it helps install, remove, and update software packages.

- dnf – A more modern package manager for Red Hat-based systems, replacing yum with improved features and performance.

- zypper – The command-line tool for managing packages in SUSE Linux distributions, similar to yum and apt.

- pacman – A package manager for Arch Linux, used for installing, updating, and managing software packages.

Package Management Operations

Below is a list of essential package management operations and their corresponding commands. These commands help you manage your system’s software efficiently:

- Install a Package – Use the package manager to install new software:
  - apt-get install [package]
  - yum install [package]
  - dnf install [package]
  - zypper install [package]
  - pacman -S [package]

- Update a Package – Keep packages up to date:
  - apt-get update && apt-get upgrade
  - yum update
  - dnf update
  - zypper update
  - pacman -Syu

- Remove a Package – Uninstall software that is no longer needed:
  - apt-get remove [package]
  - yum remove [package]
  - dnf remove [package]
  - zypper remove [package]
  - pacman -R [package]
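Because the right command depends on the distribution, a script can first check which package manager is present. The following is a minimal sketch under that assumption. The helper name detect_pkg_manager is hypothetical, and the script only prints the matching install command rather than running it.

```shell
# Print the name of the first package manager found on PATH.
# detect_pkg_manager is a hypothetical helper, not part of any distribution.
detect_pkg_manager() {
    for candidate in apt-get dnf yum zypper pacman; do
        if command -v "$candidate" >/dev/null 2>&1; then
            echo "$candidate"
            return 0
        fi
    done
    echo "unknown"
}

pm=$(detect_pkg_manager)
case "$pm" in
    apt-get) echo "install with: sudo apt-get install [package]" ;;
    dnf|yum) echo "install with: sudo $pm install [package]" ;;
    zypper)  echo "install with: sudo zypper install [package]" ;;
    pacman)  echo "install with: sudo pacman -S [package]" ;;
    *)       echo "no supported package manager found" ;;
esac
```

dnf is checked before yum because systems that ship dnf often keep a yum command for compatibility.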

Understanding these essential tools and commands will help ensure that your system is always running the most up-to-date software while allowing you to manage resources effectively and securely.

Understanding Permissions and Ownership

Managing access control and ownership of files and directories is crucial for maintaining system security and ensuring that users only have the permissions necessary for their tasks. By understanding how file permissions and ownership work, administrators can protect sensitive data, manage user access, and troubleshoot potential security issues effectively.

File Permissions

Each file and directory has specific access rights that determine who can read, write, or execute them. These permissions are assigned to three types of users: the file owner, the group associated with the file, and others. Permissions are typically represented using a combination of letters (r, w, x) or numbers (0-7).

- r (read) – Allows the user to view the contents of the file.

- w (write) – Allows the user to modify the contents of the file.

- x (execute) – Allows the user to execute the file as a program or script.

Permissions can be modified using the chmod command, which allows users to grant or revoke specific rights for the owner, group, or others. For example, chmod 755 file.txt assigns read, write, and execute permissions to the owner, and read and execute permissions to the group and to others.
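Each octal digit is the sum of read (4), write (2), and execute (1), so 7 means rwx and 5 means r-x. The sketch below applies a numeric mode and then a symbolic one to a scratch file and reads the result back. It assumes the GNU or BusyBox form of stat (the -c option) found on typical Linux systems.

```shell
# Demonstrate numeric and symbolic modes on a scratch file.
demo=$(mktemp)

chmod 755 "$demo"                      # rwxr-xr-x
mode_numeric=$(stat -c '%a' "$demo")   # stat -c is the GNU/BusyBox syntax

chmod g-x,o-rx "$demo"                 # drop execute for group, read+execute for others
mode_symbolic=$(stat -c '%a' "$demo")

echo "$mode_numeric"    # 755
echo "$mode_symbolic"   # 740
```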

File Ownership

Every file and directory is owned by a user and a group. The owner is typically the creator of the file, while the group defines a collection of users who share access rights to the file. You can view the ownership details of a file by using the ls -l command, which displays the owner and group alongside the file’s permissions.

Ownership can be changed with the chown command. For example, chown user:group file.txt changes the file’s owner to user and its group to group. Changing a file’s owner normally requires root privileges.

Understanding and managing file permissions and ownership are fundamental skills for ensuring data security and efficient system administration. Properly configured permissions prevent unauthorized access while allowing legitimate users to perform their tasks effectively.

Handling Disk Management and Partitions

Effective management of storage devices and partitions is essential for optimizing system performance and ensuring efficient use of available space. By understanding how to handle disk drives, create partitions, and format file systems, administrators can maintain system integrity and improve resource management. Disk management allows users to organize their storage according to their needs while ensuring data safety and accessibility.

Disk Partitioning

Partitioning is the process of dividing a physical disk into multiple sections, each of which can be formatted and used independently. Partitioning helps organize data, manage system resources, and isolate different types of data or applications. Common tools used for partitioning include:

- fdisk – A command-line utility for creating and managing partitions. It has traditionally been used with MBR (Master Boot Record) disks; recent versions also handle GPT.

- parted – A more flexible tool that can handle both MBR and GPT (GUID Partition Table) formatted disks, useful for larger disks and advanced partition schemes.

- gparted – A graphical partition editor that makes partition management more accessible for users who prefer a visual interface.

To create a partition, you would typically select the disk, choose the size, and define the type (e.g., primary, extended). Once the partition is created, it can be formatted with a file system such as ext4, xfs, or btrfs, depending on your needs.

Mounting Partitions

Once partitions are created and formatted, they need to be mounted to make them accessible to the operating system. Mounting is the process of linking a file system to a directory, making it available for use. The mount command is used to attach partitions to specific directories in the system’s file tree.

For example, mount /dev/sda1 /mnt would mount the first partition on the first disk to the /mnt directory. For permanent mounting, users can add entries to the /etc/fstab file, which allows partitions to be automatically mounted at boot time.

Proper disk management and partitioning practices ensure that systems are well-organized, with adequate space allocated for various tasks, and protect data integrity. Understanding these concepts is essential for system administrators who need to configure, optimize, and maintain storage efficiently.

Basic Security Practices for Linux

Securing a system is crucial for protecting sensitive data, maintaining privacy, and ensuring that the system runs smoothly. Security practices range from configuring user access rights to implementing encryption techniques that safeguard information. By following basic security principles, users and administrators can minimize the risk of unauthorized access and ensure the overall integrity of the environment.

System Updates and Patch Management

Regular updates and patching are key components of any effective security strategy. Vulnerabilities in software are frequently discovered, and patches or updates are released to fix them. Ensuring that the system is regularly updated helps protect against known exploits and reduces the risk of attacks.

- apt-get update – On Debian-based systems, this command refreshes the package index. It does not install anything by itself; follow it with apt-get upgrade to apply the available updates.

- yum update – On Red Hat-based systems, this command updates all installed packages to their latest versions.

- unattended-upgrades – On Debian-based systems, this tool can automatically install security updates without manual intervention.

Securing User Access

Limiting user access and using strong authentication methods are essential to prevent unauthorized access. This includes setting up secure passwords, using multi-factor authentication, and restricting user privileges based on the principle of least privilege.

- Use strong passwords – Passwords should be complex and unique to resist brute force attacks.

- Limit user privileges – Assign users only the permissions necessary for their tasks.

- Use sudo – Grant temporary administrative rights through the sudo command instead of logging in as root.

Following these practices ensures that the system remains secure and reduces the likelihood of being compromised by external threats. The combination of routine updates and effective user access control provides a strong foundation for a secure environment.

Configuration Files Every Linux User Should Know

Configuration files play a vital role in controlling system behavior and user preferences. These files allow users and administrators to tailor the system to specific needs, whether it’s managing network settings, customizing user environments, or adjusting security policies. Understanding the most important configuration files can help users make informed adjustments and troubleshoot effectively.

Key Configuration Files

Here are some essential configuration files that every user should be familiar with:

- /etc/passwd – This file stores basic user information, including the username, user ID (UID), group ID (GID), home directory, and default shell.

- /etc/fstab – It contains information about disk drives and partitions, and it specifies how they should be mounted at boot time.

- /etc/hostname – This file defines the system’s hostname, which is used to identify the machine on a network.

- /etc/network/interfaces – On Debian-based systems, this file controls network interfaces and their configurations, such as IP address settings and DNS servers.

- /etc/hosts – It maps IP addresses to hostnames, allowing the system to resolve hostnames without needing a DNS server.

- /etc/sudoers – This configuration file controls user permissions for using the sudo command, allowing certain users to execute commands as superuser. Edit it with visudo, which checks the syntax before saving.

- ~/.bashrc – This file holds user-specific environment settings for the Bash shell, such as aliases, functions, and prompt configurations.

- /etc/ssh/sshd_config – This file configures Secure Shell (SSH) settings for remote login, including encryption methods, authentication methods, and access restrictions.
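Many of these files are plain text with a fixed field layout, which makes them easy to query. As an illustration, the sketch below filters records in the /etc/passwd format, where fields are separated by colons. The sample records are invented, and the UID cutoff of 1000 for regular accounts is a common convention rather than a rule, so treat it as an assumption.

```shell
# Sample records in /etc/passwd format (made-up accounts, not real ones):
# name:password:UID:GID:comment:home:shell
passwd_sample=$(mktemp)
cat > "$passwd_sample" <<'EOF'
root:x:0:0:root:/root:/bin/bash
alice:x:1001:1001:Alice Example:/home/alice:/bin/bash
svc:x:999:999::/var/lib/svc:/usr/sbin/nologin
EOF

# -F: splits on colons; print name and shell for accounts with UID >= 1000.
regular_users=$(awk -F: '$3 >= 1000 { print $1 ":" $7 }' "$passwd_sample")

echo "$regular_users"   # alice:/bin/bash
```

The same awk command can be pointed at /etc/passwd itself, which is readable by ordinary users.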

Editing Configuration Files

To modify configuration files, users typically need administrative privileges. It is important to use caution when editing these files, as incorrect changes can cause system instability or security vulnerabilities. Use text editors like nano, vim, or vi to safely edit configuration files:

- nano – A simple, easy-to-use text editor suitable for beginners.

- vim – A powerful text editor that provides advanced features for experienced users.

- vi – The classic editor on which vim (Vi IMproved) is based; it is available on nearly every Unix-like system.

Having a strong understanding of these configuration files is essential for system administration, troubleshooting, and customization. By knowing where to look and what to change, users can ensure their system runs smoothly and meets their specific needs.

Kernel and Module Management

The core component of any operating system is its kernel, which acts as the bridge between the hardware and software layers. Kernel management ensures that the system is optimized for performance, stability, and security. In addition, modules allow for the dynamic loading of specific functionalities without requiring a reboot. Understanding how to manage the kernel and its modules is crucial for maintaining a reliable system.

Kernel management typically involves tasks such as checking the current version, upgrading to newer versions, and configuring settings to match the system’s requirements. Modules, on the other hand, extend the kernel’s capabilities by adding support for various hardware devices, filesystems, or networking protocols. These can be loaded and unloaded dynamically, providing flexibility and efficiency.

Managing Kernel Versions

To ensure the system is using the appropriate kernel version, users can check the current version with the command:

uname -r

If an update or upgrade is needed, the system may require the installation of a newer kernel package. This can often be achieved through the package manager or by downloading the kernel source code, compiling, and installing it manually.

Working with Modules

Modules are an essential part of the operating system, allowing additional functionalities to be added without the need to restart the system. Some common tasks include:

- Loading a module: To load a module into the kernel, use the modprobe command followed by the module name.

- Removing a module: To remove a module, use the rmmod command. This will unload the module from the kernel.

- Listing loaded modules: To view all currently loaded modules, use the lsmod command.

- Module information: For detailed information about a specific module, use the modinfo command.

For example, to load the nfs module, use:

sudo modprobe nfs
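Before loading a module it can be useful to check whether it is already present. The sketch below reads /proc/modules, the kernel's list of loaded modules. The helper name is_module_loaded is an assumption for this example, and its optional second argument exists only so the function can be tried against a sample file.

```shell
# Report whether a kernel module appears in a /proc/modules-style listing.
# is_module_loaded is a hypothetical helper; the second argument defaults
# to /proc/modules.
is_module_loaded() {
    # The module name is the first field of each line.
    awk -v name="$1" '$1 == name { found = 1 } END { exit !found }' \
        "${2:-/proc/modules}" 2>/dev/null
}

if is_module_loaded nfs; then
    echo "nfs is already loaded"
else
    echo "nfs is not loaded; load it with: sudo modprobe nfs"
fi
```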

By understanding and managing the kernel and its modules effectively, users can optimize the system’s performance, add necessary drivers or capabilities, and ensure that the operating environment remains stable and secure.

Backup and Recovery Strategies

Effective data protection involves planning for both the prevention of data loss and the ability to recover quickly in case of a system failure. A comprehensive backup and recovery strategy is essential to safeguard critical files and system configurations. This process ensures that users can recover from hardware failures, accidental deletions, or other unforeseen events that might disrupt normal operations.

There are several approaches to creating a robust backup strategy, including full, incremental, and differential backups. Each method serves a different purpose and can be selected based on specific needs. Additionally, recovery planning involves establishing procedures for restoring lost data quickly and reliably, minimizing downtime, and ensuring business continuity.

Types of Backup Strategies

There are three main types of backup strategies used to protect data:

- Full Backup: A complete copy of all data on the system. While it provides the most comprehensive protection, it can be time-consuming and requires significant storage space.

- Incremental Backup: Only the data that has changed since the last backup is saved. This method is efficient in terms of storage and time but may require multiple restoration steps.

- Differential Backup: This method captures data changes since the last full backup. It strikes a balance between storage and restoration time, making it a common choice for many users.

Recovery Procedures

Once data has been backed up, it’s essential to have a clear process for restoring it when needed. Recovery procedures typically include:

- Identifying Critical Files: Before starting the recovery, determine which files need to be restored, whether it’s individual files or the entire system.

- Recovery Tools: Utilize tools such as rsync, tar, or specialized backup software to retrieve data from backup media.

- Testing Restores: Periodically test recovery procedures to ensure they work as expected in a real emergency.
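A backup is only useful if it restores cleanly. The sketch below archives a small directory tree with tar, extracts it to a separate location, and compares the two. Every path is a scratch location created for the demonstration, and the file names are invented.

```shell
# Create a small source tree, archive it, restore it elsewhere, and compare.
src=$(mktemp -d)
restore=$(mktemp -d)
archive=$(mktemp)

mkdir -p "$src/etc"
echo "setting=1" > "$src/etc/app.conf"
echo "hello" > "$src/readme.txt"

# Back up: -c create, -z gzip, -f archive file, -C change into the source first.
tar -czf "$archive" -C "$src" .

# Restore: -x extract, -C unpack into a different directory.
tar -xzf "$archive" -C "$restore"

# Test the restore: diff -r exits 0 only if both trees match.
if diff -r "$src" "$restore" >/dev/null; then
    restore_ok=yes
else
    restore_ok=no
fi
echo "restore verified: $restore_ok"
```

Restoring into a separate directory rather than over the original makes it safe to rehearse recovery on a live system.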

By implementing a strategic backup plan and recovery procedures, users can mitigate the risks associated with data loss and ensure that their systems remain functional even after unexpected disruptions.

Common Troubleshooting Techniques

Troubleshooting system issues requires a systematic approach to diagnose and resolve problems effectively. Whether dealing with software malfunctions, network connectivity issues, or hardware failures, following a structured process helps identify the root cause and implement an appropriate solution. The key is to break down complex problems into manageable steps while remaining methodical and focused on the symptoms presented.

There are several techniques that can be used to troubleshoot problems efficiently. By gathering relevant information, using diagnostic tools, and isolating variables, one can narrow down potential causes and address them one by one. A logical, step-by-step approach often yields the best results and ensures that no underlying issues are overlooked.

Step-by-Step Troubleshooting Approach

The following steps outline a general troubleshooting methodology:

- Identify the Problem: Carefully observe the issue and collect any error messages, logs, or user reports. Understanding the symptoms fully helps to define the scope of the problem.

- Reproduce the Issue: If possible, try to recreate the problem to see under which conditions it occurs. This can help isolate whether it’s related to specific actions, settings, or environmental factors.

- Check for External Factors: Ensure that external systems or network issues aren’t contributing to the problem. For example, a connectivity issue may be caused by faulty cables or an ISP outage.

- Test Possible Solutions: Once you have a hypothesis about the cause, implement potential solutions one at a time. Testing each change allows you to assess whether the solution resolves the issue.

- Verify and Confirm: After applying a solution, verify that the system is functioning as expected. Confirm that the problem has been fully resolved and check that no new issues have emerged.

Useful Diagnostic Tools

Several tools can assist in diagnosing issues more efficiently. These tools range from system monitoring utilities to network analysis programs. Some of the most commonly used diagnostic tools include:

- top: Displays system resource usage in real-time, helpful for monitoring CPU, memory, and process performance.

- ping: Tests network connectivity and can help determine if a remote system is reachable.

- traceroute: Maps the route packets take across the network to help diagnose network delays or interruptions.

- netstat: Provides information about network connections, routing tables, and interface statistics.

- Log Files: System logs are invaluable for understanding what’s happening behind the scenes. Common logs include system logs, application logs, and security logs.
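Searching logs for error messages is often the quickest way to gather evidence. The sketch below counts and extracts error lines from a sample log. The log entries are invented, and on a real system the file location varies by distribution, so the path would need to be adjusted.

```shell
# Count and show error lines in a log; the content is invented sample data.
log=$(mktemp)
cat > "$log" <<'EOF'
Jan 10 10:00:01 host sshd[811]: Accepted password for alice
Jan 10 10:02:17 host kernel: ERROR: disk I/O failure on sdb
Jan 10 10:02:18 host app[920]: error: cannot write /var/data/out
Jan 10 10:05:44 host cron[300]: job finished
EOF

# -i ignores case, -c counts matching lines.
error_count=$(grep -ic 'error' "$log")

# Show the most recent matching line for context.
last_error=$(grep -i 'error' "$log" | tail -n 1)

echo "$error_count error lines; latest: $last_error"
```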

By using these troubleshooting techniques and tools, you can effectively diagnose and resolve a wide range of system issues, minimizing downtime and ensuring smooth operations. A methodical approach, coupled with a solid understanding of system behavior, makes troubleshooting a more predictable and manageable process.

Exam Strategies for Certification

Successfully obtaining a certification requires a strategic approach to preparation and execution. It’s not just about mastering the content, but also about understanding how to approach the testing process effectively. This includes time management, prioritizing topics, and knowing the tools and resources available during the assessment. By employing a clear strategy, you can maximize your chances of passing and demonstrating proficiency in key areas.

Before diving into preparation, it’s important to create a structured study plan. A good plan balances depth of knowledge with time constraints. It also involves practicing real-world scenarios, ensuring that you’re not only familiar with theory but also capable of applying it in practical situations.

Key Strategies for Preparation

To excel in the certification process, focus on the following strategies:

- Understand the Exam Format: Knowing the layout of the test, including the number of sections, types of tasks (practical vs. theoretical), and scoring methods, is crucial for planning your approach.

- Review Official Study Materials: Use official resources as they are designed to cover the necessary topics comprehensively. Supplement these with additional materials for a broader perspective.

- Focus on Practical Skills: Hands-on practice is often the most effective form of study. Set up labs or virtual environments to experiment with configurations and commands.

- Take Practice Tests: Simulate exam conditions by taking practice tests. This will help familiarize you with the format and improve your ability to manage time under pressure.

- Study Key Areas: Some topics are more heavily weighted than others. Be sure to review the core concepts thoroughly, especially those most likely to appear in the assessment.

Time Management During the Assessment

Managing your time effectively during the certification process can make a significant difference in your success. The following tips can help:

| Tip | Description |
|---|---|
| Allocate Time for Each Section | Ensure that you spend an appropriate amount of time on each part of the test, focusing more on areas with heavier point allocation. |
| Don’t Get Stuck on Difficult Tasks | If you encounter a tough question, move on and return to it later. Don’t waste valuable time when you can tackle easier questions first. |
| Review Before Submitting | If time permits, review your responses or configurations before finalizing your submission to ensure everything is accurate. |

By following these strategies, you can boost your preparation, manage your time effectively, and improve your chances of obtaining your certification. With a focused approach and the right resources, you’ll be well on your way to success.

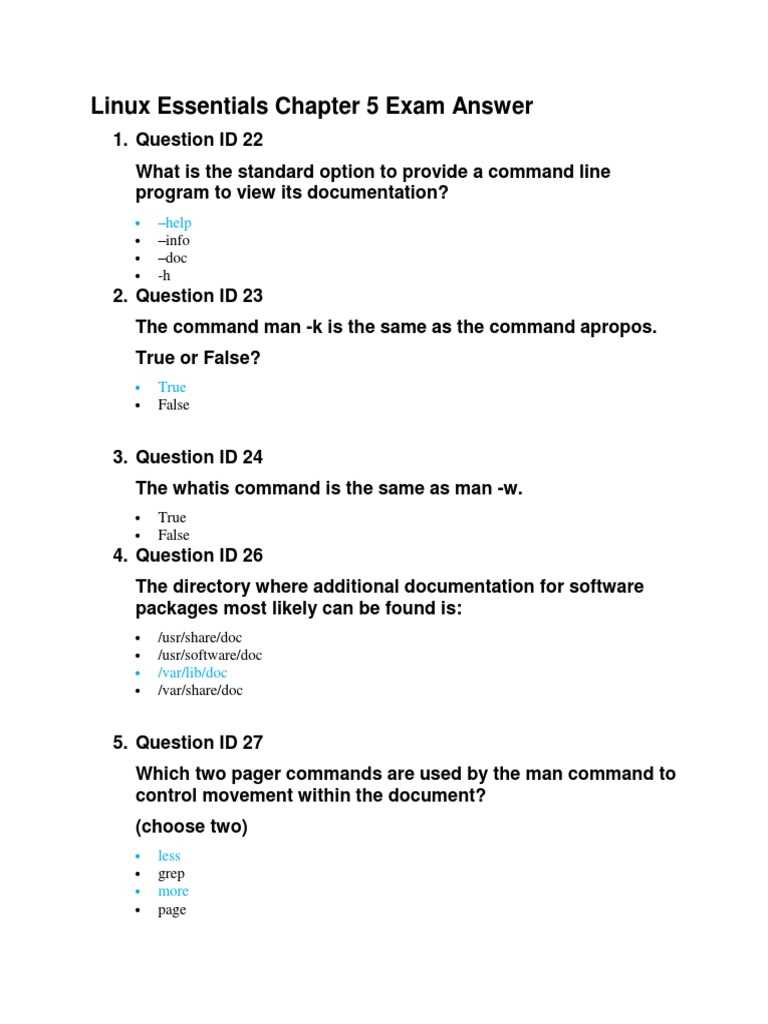

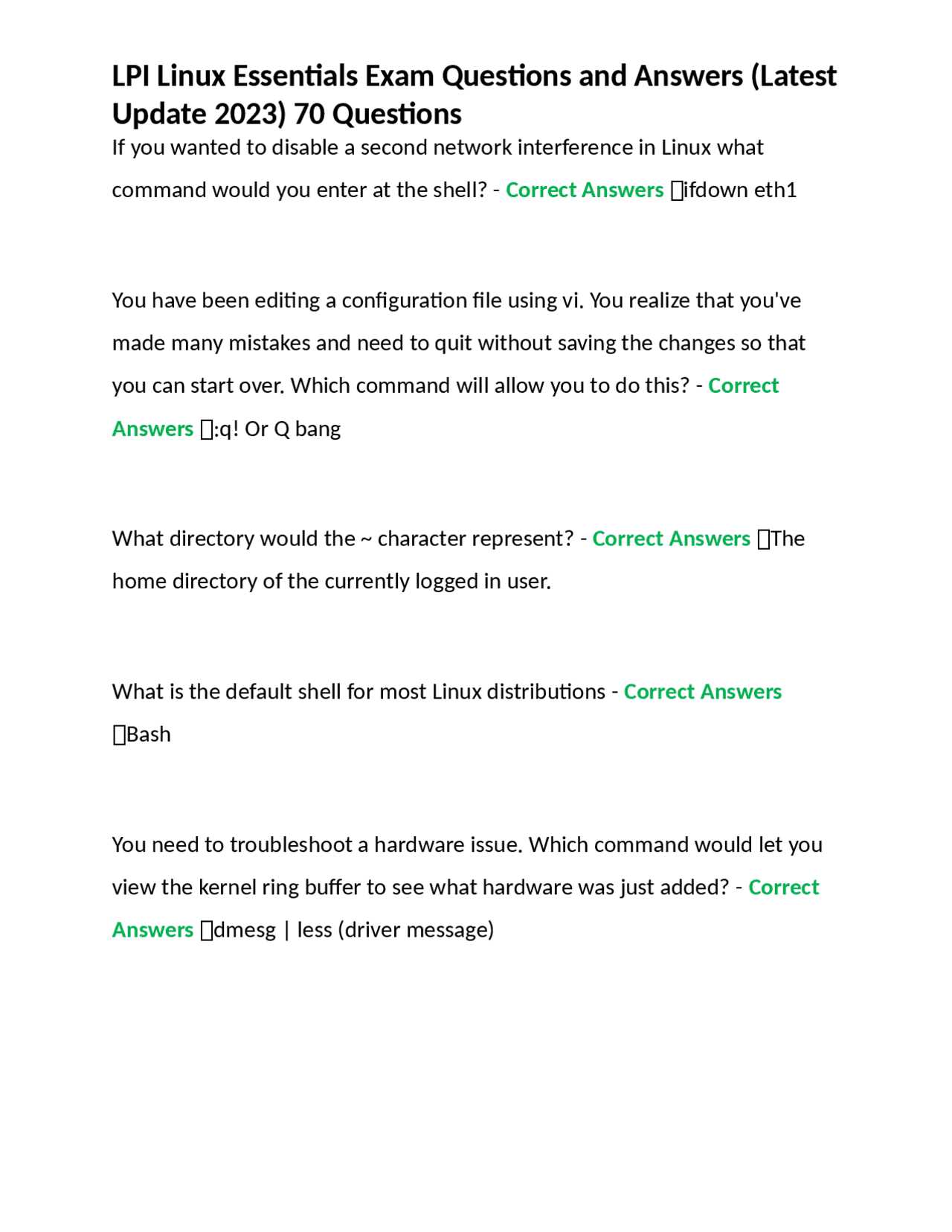

Reviewing Practice Questions for Certification

Reviewing practice scenarios is an essential part of preparing for any assessment. These exercises not only help reinforce your understanding of core concepts but also familiarize you with the types of challenges you might encounter during the actual evaluation. By working through these practice scenarios, you can identify areas where you need improvement and develop the critical thinking skills necessary to solve complex tasks under time constraints.

When preparing for an assessment, it’s important to focus on various aspects of the topics being tested. Practicing through realistic scenarios allows you to apply theoretical knowledge to practical situations. This hands-on approach will enhance your retention and boost your confidence when faced with similar tasks in the real evaluation.

Benefits of Practicing with Realistic Scenarios

Working through exercises that mirror the structure of the test offers several advantages:

- Familiarity with Format: Understanding the structure of the questions or tasks helps reduce anxiety and increases your comfort level.

- Problem-Solving Skills: Practicing real-world tasks enhances your ability to troubleshoot and think critically under pressure.

- Time Management: By timing yourself, you’ll learn to pace yourself effectively and ensure that you can complete all tasks within the allotted time.

- Identifying Knowledge Gaps: Working through exercises helps pinpoint areas that require further review, enabling you to target specific topics for improvement.

Tips for Effective Practice Sessions

Maximize the value of your practice sessions with these strategies:

- Start with the Basics: Begin by mastering the fundamental concepts before moving on to more complex scenarios. A strong foundation will make tackling advanced tasks easier.

- Simulate Test Conditions: Try to recreate the test environment by limiting distractions and adhering to time limits, just as you would during the actual assessment.

- Review Mistakes: After completing a practice session, thoroughly review your mistakes. Understanding why a solution didn’t work will help you avoid making the same errors during the actual evaluation.

- Focus on Weak Areas: If you consistently struggle with certain topics, dedicate additional time to those areas until you feel more confident.

By consistently reviewing realistic scenarios and implementing these strategies, you can improve your preparedness and increase your chances of success. This targeted approach will ensure you’re fully equipped to face the challenges that lie ahead.