Preparing for assessments in the field of artificial intelligence requires a deep understanding of key concepts and techniques. With a variety of topics to cover, it’s essential to focus on the most relevant areas that test your knowledge and application skills. From algorithms to model evaluation, each aspect plays a crucial role in demonstrating expertise.

Whether you’re tackling theoretical principles or practical applications, a structured approach is necessary. A solid grasp of important methodologies will help you navigate through complex problems, ensuring you are ready for any challenge that might arise during an evaluation. Emphasizing critical thinking and problem-solving will allow you to showcase your proficiency effectively.

The goal is not only to memorize concepts but also to understand how they interconnect and how they can be applied in real-world scenarios. This section will guide you through essential topics, offering insights into how best to approach a variety of subjects and helping you build a strong foundation for any upcoming assessment.

Machine Learning Exam Questions and Answers

In any rigorous assessment focused on artificial intelligence, understanding core principles and practical applications is key to achieving success. Whether you’re dealing with theoretical foundations or hands-on problem-solving, it’s essential to familiarize yourself with the types of challenges you may face. By mastering fundamental concepts, you can effectively demonstrate your knowledge and analytical abilities.

Strategic preparation involves identifying the most commonly tested topics and practicing how to approach them. It’s not just about recalling definitions, but understanding how to apply techniques to real-world data and problems. Being able to explain methods, justify choices, and recognize limitations will set you apart during the evaluation.

Focusing on key areas such as algorithms, model assessment, and performance metrics is critical. A thorough understanding of these elements will not only help you tackle specific problems but also enhance your ability to think critically when faced with new scenarios. This section highlights essential topics you need to be familiar with and offers insights into how best to prepare for various types of inquiries you might encounter in a professional context.

Understanding Key Machine Learning Concepts

Grasping the foundational principles is essential for navigating the complexities of artificial intelligence. To excel, it’s vital to not only know the theoretical aspects but also understand how these concepts translate into practical applications. This section explores some of the most critical ideas that form the backbone of any advanced study in the field.

Core Principles and Methods

Every advanced system relies on specific methods designed to process data, make predictions, and improve over time. These techniques range from statistical models to more complex approaches like neural networks, each with its own strengths and limitations. Understanding these methods is the first step toward mastering the material and confidently tackling problems in the field.

Model Evaluation and Optimization

Equally important is the ability to assess the effectiveness of models once they have been developed. Evaluating performance through various metrics allows practitioners to refine their systems, enhancing accuracy and efficiency. This continuous improvement process is at the heart of practical applications in numerous industries.

Common Types of Machine Learning Models

In the field of artificial intelligence, there are various approaches to building systems that can process data, recognize patterns, and make decisions. Each model type has its own unique way of handling data and solving problems. Understanding these different models is crucial for selecting the right approach depending on the task at hand.

Some models focus on predicting outcomes based on historical data, while others work by grouping similar data points together. Regardless of the method, each model comes with its own set of advantages and limitations. Choosing the right one requires careful consideration of the problem’s complexity, the amount of data available, and the desired outcome.

Essential Algorithms You Should Know

Mastering the key algorithms is fundamental for tackling a variety of tasks in the realm of artificial intelligence. These algorithms enable systems to make decisions, identify patterns, and optimize processes. Understanding how they work, and when to apply them, is crucial for solving complex problems effectively.

From classification to regression models, each algorithm serves a specific purpose depending on the type of data you’re working with and the desired result. Some algorithms are designed to find the best-fit line in datasets, while others group similar data points together. Developing a deep knowledge of these techniques ensures that you can select the most efficient one for any given task.

For example, algorithms like decision trees, k-nearest neighbors, and support vector machines are foundational tools that can be applied across various scenarios. Learning the intricacies of these algorithms will improve your ability to handle data in a meaningful way, ultimately driving better outcomes.
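To make one of these algorithms concrete, here is a short k-nearest neighbors classifier in plain Python. It is a minimal sketch that makes three assumptions: distance is Euclidean, the label is chosen by a simple majority vote, and the 2-D dataset is a small hypothetical one. The function names `euclidean` and `knn_predict` are illustrative and do not come from any library.

```python
from collections import Counter
import math


def euclidean(a, b):
    """Straight-line distance between two equal-length feature vectors."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def knn_predict(train_X, train_y, query, k=3):
    """Label `query` by majority vote among its k nearest training points."""
    # Sort training indices by distance to the query and keep the k closest.
    nearest = sorted(range(len(train_X)), key=lambda i: euclidean(train_X[i], query))[:k]
    votes = Counter(train_y[i] for i in nearest)
    # most_common(1) returns [(label, count)] for the most frequent label.
    return votes.most_common(1)[0][0]


# Hypothetical 2-D data: two well-separated groups labelled "a" and "b".
X = [(1.0, 1.0), (1.5, 2.0), (2.0, 1.0), (8.0, 8.0), (8.5, 9.0), (9.0, 8.0)]
y = ["a", "a", "a", "b", "b", "b"]

print(knn_predict(X, y, (1.2, 1.5)))  # → a
print(knn_predict(X, y, (8.7, 8.4)))  # → b
```

The main design decision is the value of `k`. A small `k` tracks the training data closely, noise included. A larger `k` smooths the decision boundary.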

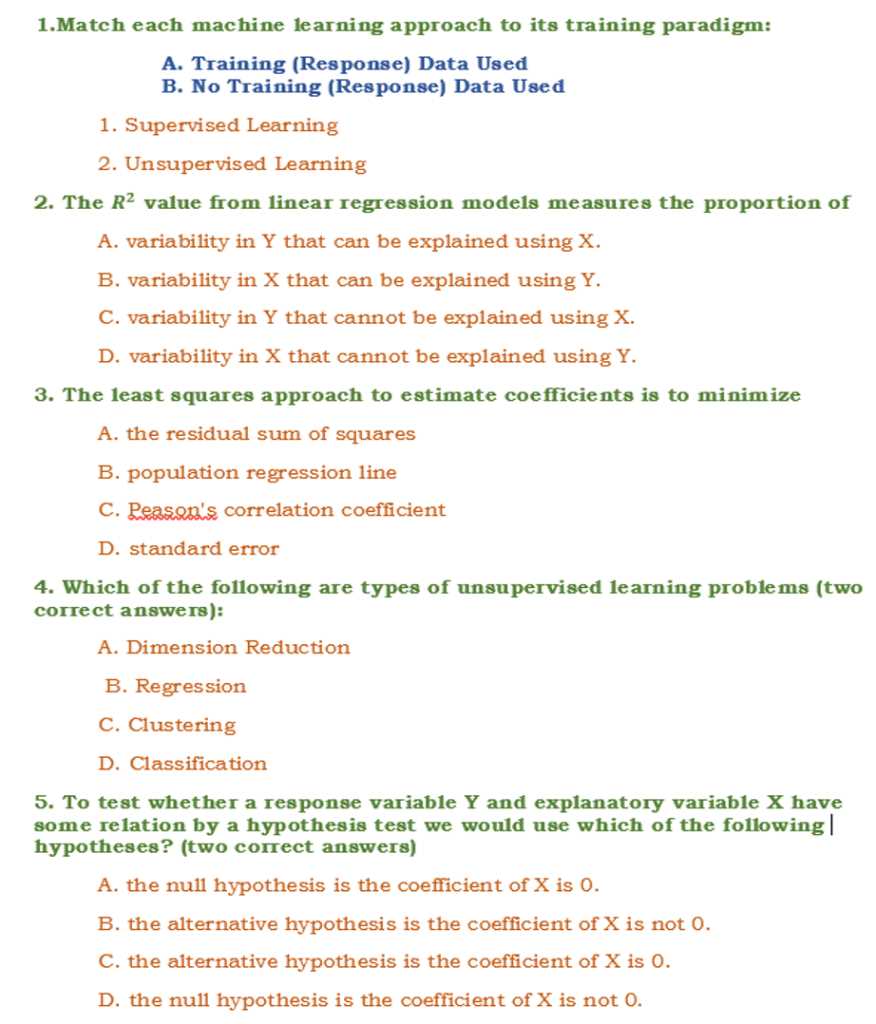

Commonly Asked Questions in ML Exams

In the field of artificial intelligence, certain topics frequently come up during assessments, testing your understanding of both fundamental concepts and practical applications. These inquiries often require a combination of theoretical knowledge and the ability to apply techniques to solve real-world challenges. Familiarity with the types of questions can help you prepare for these challenges effectively.

Questions typically revolve around model selection, performance evaluation, and algorithm understanding, with a focus on how different techniques can be applied to various datasets. Being able to explain key concepts, such as overfitting, underfitting, or the bias-variance trade-off, is often essential to demonstrate a comprehensive understanding of the subject.

Furthermore, practical scenarios may test your ability to choose the most suitable model for a given problem, taking into account factors such as data type, expected outcomes, and resource constraints. By preparing for these types of inquiries, you can build a solid foundation for tackling any challenges you may face during an assessment.

Preparing for Supervised Learning Topics

When it comes to supervised approaches, preparation involves understanding both the theoretical foundations and practical techniques that drive predictive modeling. These methods rely on using labeled data to train models, allowing them to make accurate predictions based on new inputs. A strong grasp of the underlying concepts is crucial for applying these techniques to solve real-world problems effectively.

Key Concepts and Techniques

One of the most important aspects is understanding the difference between classification and regression tasks. While both involve making predictions, the nature of the output varies. Classification tasks deal with categorical outcomes, whereas regression focuses on continuous values. Mastering these distinctions will help you choose the right approach depending on the problem at hand.

Evaluation and Optimization Methods

Equally vital is the ability to assess model performance using various metrics, such as accuracy, precision, recall, and F1 score. Understanding how to optimize models, reduce overfitting, and fine-tune hyperparameters will allow you to build more effective and efficient systems. This knowledge is essential for tackling challenges related to model evaluation and improvement.

Unsupervised Learning and Its Applications

Unsupervised approaches are fundamental for discovering hidden patterns and structures within data. Unlike supervised techniques, which rely on labeled data, these methods analyze datasets without predefined outcomes. They focus on identifying relationships, clustering similar data points, and reducing complexity, which can uncover valuable insights in large datasets.

Clustering and Dimensionality Reduction

One of the most common applications of unsupervised methods is clustering, where data points are grouped based on shared characteristics. Techniques like k-means and hierarchical clustering help in segmenting data, making it easier to analyze large volumes of information. These methods are widely used in customer segmentation, anomaly detection, and image segmentation.
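k-means can be sketched with Lloyd's algorithm, which alternates between two steps: assign each point to its nearest centroid, then move each centroid to the mean of the points assigned to it. This is a minimal sketch. It assumes numeric coordinate tuples and random initialization from the data points (not k-means++), and it runs on a hypothetical toy dataset with two obvious groups.

```python
import math
import random


def kmeans(points, k, iterations=100, seed=0):
    """Lloyd's algorithm for k-means on a list of coordinate tuples."""
    rng = random.Random(seed)
    # Initialize the centroids with k distinct points drawn from the data.
    centroids = rng.sample(points, k)
    for _ in range(iterations):
        # Assignment step: put each point in the cluster of its nearest centroid.
        clusters = [[] for _ in range(k)]
        for p in points:
            nearest = min(range(k), key=lambda c: math.dist(p, centroids[c]))
            clusters[nearest].append(p)
        # Update step: move each centroid to the mean of its cluster
        # (an empty cluster keeps its old centroid).
        new_centroids = [
            tuple(sum(axis) / len(cl) for axis in zip(*cl)) if cl else centroids[i]
            for i, cl in enumerate(clusters)
        ]
        if new_centroids == centroids:  # converged: centroids stopped moving
            break
        centroids = new_centroids
    return centroids, clusters


# Hypothetical 2-D data with two obvious groups.
points = [(1.0, 1.0), (1.5, 2.0), (2.0, 1.0), (8.0, 8.0), (8.5, 9.0), (9.0, 8.0)]
centroids, clusters = kmeans(points, k=2)
```

The result depends on the starting centroids. For that reason, practical implementations usually run k-means several times from different initializations and keep the best solution.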

Dimensionality Reduction and Real-World Use Cases

Beyond clustering, dimensionality reduction techniques like PCA (Principal Component Analysis) are crucial for simplifying datasets with numerous features. These methods help retain the most important information while reducing noise, making data easier to visualize and analyze. From recommender systems to natural language processing, unsupervised approaches offer powerful tools for solving a wide range of real-world problems.
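The next sketch makes PCA less abstract. It finds only the first principal component, which is the direction of greatest variance, by running power iteration on the covariance matrix. It then projects each row onto that direction, which reduces two features to one. Several assumptions apply. The dataset is small and hypothetical. Its covariance matrix has a single dominant eigenvalue. The starting vector of ones is not orthogonal to the leading eigenvector. In practice you would more typically call an SVD or eigendecomposition routine from a numerical library.

```python
import math


def first_principal_component(rows, iterations=200):
    """Approximate the direction of greatest variance in `rows` (lists of floats)."""
    n, d = len(rows), len(rows[0])
    # PCA works on mean-centered data, so subtract each feature's mean first.
    means = [sum(r[j] for r in rows) / n for j in range(d)]
    centered = [[r[j] - means[j] for j in range(d)] for r in rows]
    # Sample covariance matrix: cov[i][j] = covariance of feature i and feature j.
    cov = [[sum(r[i] * r[j] for r in centered) / (n - 1) for j in range(d)] for i in range(d)]
    # Power iteration: repeatedly multiply by cov and renormalize; the vector
    # converges to the eigenvector with the largest eigenvalue.
    v = [1.0] * d
    for _ in range(iterations):
        w = [sum(cov[i][j] * v[j] for j in range(d)) for i in range(d)]
        norm = math.sqrt(sum(x * x for x in w))
        v = [x / norm for x in w]
    return means, v


def project(rows, means, component):
    """Reduce each row to a single number: its coordinate along `component`."""
    return [sum((r[j] - means[j]) * component[j] for j in range(len(r))) for r in rows]


# Hypothetical data: two strongly correlated features.
rows = [[1.0, 1.1], [2.0, 1.9], [3.0, 3.2], [4.0, 3.9], [5.0, 5.1]]
means, pc1 = first_principal_component(rows)
reduced = project(rows, means, pc1)  # five 2-D rows become five single values
```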

How to Tackle Regression Questions

When faced with tasks that involve predicting continuous values, it’s crucial to approach them systematically. Regression tasks require selecting the appropriate model, preparing the data, and applying the right evaluation metrics to ensure accurate predictions. Understanding these core aspects will guide you through solving such challenges effectively.

Choosing the Right Model

One of the first steps in tackling regression tasks is identifying which model is most suitable for the data. Linear regression is often the go-to approach, but more complex models such as decision trees, random forests, or support vector machines may be more appropriate depending on the problem’s complexity. Understanding the strengths and limitations of each model is essential for making the right choice.
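With a single feature, linear regression has a closed-form least-squares solution. The slope is the covariance of x and y divided by the variance of x. The intercept is chosen so that the line passes through the point of means. Here is a minimal sketch, using hypothetical data that lies exactly on y = 2x + 1 so the result is easy to check:

```python
def fit_line(xs, ys):
    """Ordinary least squares for one feature: returns (slope, intercept)."""
    n = len(xs)
    x_mean = sum(xs) / n
    y_mean = sum(ys) / n
    # slope = covariance(x, y) / variance(x); the 1/n factors cancel out.
    sxy = sum((x - x_mean) * (y - y_mean) for x, y in zip(xs, ys))
    sxx = sum((x - x_mean) ** 2 for x in xs)
    slope = sxy / sxx
    # The least-squares line always passes through (x_mean, y_mean).
    intercept = y_mean - slope * x_mean
    return slope, intercept


xs = [1.0, 2.0, 3.0, 4.0]
ys = [3.0, 5.0, 7.0, 9.0]  # exactly y = 2x + 1
slope, intercept = fit_line(xs, ys)
print(slope, intercept)  # → 2.0 1.0
```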

Evaluation Metrics and Model Improvement

Once a model is selected, evaluating its performance using appropriate metrics is key. Common evaluation metrics for regression tasks include Mean Absolute Error (MAE), Mean Squared Error (MSE), and R-squared. These metrics help assess how well the model fits the data and where improvements might be needed. Tuning hyperparameters and addressing overfitting are additional steps to improve the model’s accuracy and generalization.
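Each of the three regression metrics named above can be computed directly from its definition. The sample values in this sketch are hypothetical.

```python
def mae(y_true, y_pred):
    """Mean Absolute Error: average size of the errors, in the target's units."""
    return sum(abs(t - p) for t, p in zip(y_true, y_pred)) / len(y_true)


def mse(y_true, y_pred):
    """Mean Squared Error: squares each error, so large misses weigh more."""
    return sum((t - p) ** 2 for t, p in zip(y_true, y_pred)) / len(y_true)


def r_squared(y_true, y_pred):
    """R^2 = 1 - SS_res / SS_tot: share of the target's variance explained."""
    mean = sum(y_true) / len(y_true)
    ss_res = sum((t - p) ** 2 for t, p in zip(y_true, y_pred))
    ss_tot = sum((t - mean) ** 2 for t in y_true)
    return 1 - ss_res / ss_tot


y_true = [3.0, 5.0, 7.0, 9.0]
y_pred = [2.5, 5.0, 7.5, 9.0]
print(mae(y_true, y_pred))                  # → 0.25
print(mse(y_true, y_pred))                  # → 0.125
print(round(r_squared(y_true, y_pred), 3))  # → 0.975
```

The three metrics behave differently:

- MAE is in the same units as the target and is less sensitive to outliers.
- MSE squares each error, so a few large misses dominate it.
- R² compares the model against always predicting the mean, so a value of 0 means no improvement over that baseline.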

Classification Methods and Their Importance

Classification techniques play a crucial role in categorizing data into distinct groups based on predefined criteria. These methods are widely applied in various fields, from finance to healthcare, where making accurate predictions and sorting data into meaningful categories is essential. Understanding these techniques is vital for solving problems that require decision-making based on input data.

Types of Classification Methods

There are several classification methods, each with its own strengths depending on the data characteristics. Some of the most commonly used approaches include:

- Logistic Regression – A simple yet effective method for binary classification tasks, where the outcome is categorical with two possible results.

- Decision Trees – These models split the data based on feature values to make decisions, providing a clear interpretation of how each decision is made.

- Random Forests – An ensemble method that combines multiple decision trees to improve accuracy and reduce overfitting.

- Support Vector Machines (SVM) – SVMs find the separating hyperplane with the largest margin between classes; with kernel functions, they also handle nonlinear classification problems.

- K-Nearest Neighbors (KNN) – A non-parametric method that classifies data based on proximity to other data points.
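Logistic regression, the first method in this list, models the probability of the positive class as the sigmoid of a linear function of the input. The sketch below fits a single input feature by plain batch gradient descent on the log-loss. The learning rate, the epoch count, and the toy data are all hypothetical choices. Real libraries use more robust optimizers and usually add regularization.

```python
import math


def sigmoid(z):
    """Squash any real number into a probability between 0 and 1."""
    return 1.0 / (1.0 + math.exp(-z))


def train_logistic(xs, ys, lr=0.1, epochs=2000):
    """Fit p(y=1 | x) = sigmoid(w*x + b) by batch gradient descent on log-loss."""
    w, b = 0.0, 0.0
    n = len(xs)
    for _ in range(epochs):
        # For log-loss, the gradient is the mean of (predicted prob - label) * input.
        errors = [sigmoid(w * x + b) - y for x, y in zip(xs, ys)]
        w -= lr * sum(e * x for e, x in zip(errors, xs)) / n
        b -= lr * sum(errors) / n
    return w, b


def predict(w, b, x, threshold=0.5):
    """Convert the predicted probability into a 0/1 class label."""
    return 1 if sigmoid(w * x + b) >= threshold else 0


# Hypothetical 1-D data: small values are class 0, large values are class 1.
xs = [0.5, 1.0, 1.5, 2.0, 4.0, 4.5, 5.0, 5.5]
ys = [0, 0, 0, 0, 1, 1, 1, 1]
w, b = train_logistic(xs, ys)
print(predict(w, b, 1.0), predict(w, b, 5.0))
```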

Why Classification Matters

Accurate classification enables better decision-making and can have a profound impact on various industries. Whether it’s diagnosing diseases based on medical data, classifying emails as spam or not spam, or predicting customer behaviors, these methods are foundational to creating intelligent systems that automate tasks and improve outcomes. In many cases, the effectiveness of these techniques directly influences the quality and reliability of predictions.

Neural Networks in Machine Learning

Neural networks are powerful tools that are used to model complex patterns and make predictions. These systems, inspired by the human brain’s architecture, consist of layers of interconnected nodes that process information and generate outputs based on input data. They are particularly effective for tasks such as image recognition, natural language processing, and predictive analytics.

Structure and Functionality

At the core of neural networks are layers of neurons, each performing mathematical operations to transform input data into useful information. The basic structure typically includes:

- Input Layer – The first layer that receives the raw data.

- Hidden Layers – Intermediate layers where data is processed through weights and activation functions to extract patterns.

- Output Layer – The final layer that produces the model’s prediction or classification result.
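A forward pass through a tiny network illustrates the layered structure above. The network has two inputs, one hidden layer of three neurons with ReLU activations, and a single sigmoid output. The weights and biases are hypothetical, hand-picked values. In practice they are learned during training, typically by backpropagation, and this sketch does not show that step.

```python
import math


def relu(z):
    """Rectified linear unit: passes positive values, zeroes out negatives."""
    return max(0.0, z)


def sigmoid(z):
    """Squash any real number into (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))


def dense(inputs, weights, biases, activation):
    """One fully connected layer: activation(weights . inputs + bias) per neuron."""
    return [
        activation(sum(w * x for w, x in zip(row, inputs)) + b)
        for row, b in zip(weights, biases)
    ]


def forward(x):
    # Hidden layer: 3 neurons, each with one weight per input feature.
    hidden = dense(x, weights=[[0.5, -0.2], [0.3, 0.8], [-0.6, 0.1]],
                   biases=[0.0, 0.1, 0.2], activation=relu)
    # Output layer: 1 neuron reading the 3 hidden activations.
    output = dense(hidden, weights=[[1.0, -0.5, 0.7]],
                   biases=[-0.1], activation=sigmoid)
    return hidden, output


hidden, output = forward([1.0, 2.0])  # input layer: the raw feature values
```

For the input `[1.0, 2.0]`, the weighted sum for the third hidden neuron is negative, so ReLU outputs 0 for it. The final sigmoid output is about 0.27.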

Applications of Neural Networks

Neural networks have revolutionized many fields by providing solutions to problems that were previously too complex for traditional models. Some of the key applications include:

- Image Classification – Neural networks are widely used in identifying objects and patterns in images, as seen in facial recognition software and autonomous vehicles.

- Speech Recognition – These systems allow devices to convert spoken language into text, powering virtual assistants like Siri and Alexa.

- Predictive Modeling – Neural networks are used in forecasting trends, such as stock market predictions and demand forecasting.

Evaluating Model Performance in Exams

Assessing the effectiveness of a model is a crucial part of the process, especially when tasked with analyzing its ability to make accurate predictions. A well-evaluated model provides insights into how well it generalizes to unseen data, and identifying potential areas of improvement is key to refining its performance. Various metrics are used to quantify success and help understand the model’s strengths and weaknesses.

Key Evaluation Metrics

Common performance measures used to evaluate predictive models include accuracy, precision, recall, and F1 score. Each metric serves a specific purpose and helps determine how well the model performs in different aspects:

- Accuracy – The percentage of correct predictions made by the model. While simple, it may not always be the best indicator, especially in imbalanced datasets.

- Precision – This measure focuses on the proportion of true positive predictions among all positive predictions, helping to assess the model’s reliability in positive classifications.

- Recall – Recall measures the proportion of actual positive cases that were correctly identified, making it important when missed positives (false negatives) are costly.

- F1 Score – The harmonic mean of precision and recall, this metric is useful when false positives and false negatives both matter and need to be balanced.
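The following sketch computes these four metrics from the counts of true positives, false positives, and false negatives. It assumes binary labels with `1` as the positive class. When a denominator is zero, it returns 0 for precision, recall, or F1, which is one common convention. The first example uses a hypothetical imbalanced dataset: a model that always predicts the negative class still gets high accuracy there.

```python
def confusion_counts(y_true, y_pred, positive=1):
    """Count true positives, false positives and false negatives."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == positive and p == positive)
    fp = sum(1 for t, p in pairs if t != positive and p == positive)
    fn = sum(1 for t, p in pairs if t == positive and p != positive)
    return tp, fp, fn


def classification_metrics(y_true, y_pred, positive=1):
    tp, fp, fn = confusion_counts(y_true, y_pred, positive)
    accuracy = sum(1 for t, p in zip(y_true, y_pred) if t == p) / len(y_true)
    # Precision: of everything predicted positive, the share that really is positive.
    precision = tp / (tp + fp) if tp + fp else 0.0
    # Recall: of everything that really is positive, the share the model found.
    recall = tp / (tp + fn) if tp + fn else 0.0
    # F1: harmonic mean of precision and recall.
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return {"accuracy": accuracy, "precision": precision, "recall": recall, "f1": f1}


# Hypothetical imbalanced data: 2 positives out of 10, and a model that always says 0.
y_true = [1, 1, 0, 0, 0, 0, 0, 0, 0, 0]
always_negative = [0] * 10
print(classification_metrics(y_true, always_negative))
# accuracy is 0.8, yet precision, recall and F1 are all 0.0

y_true_2 = [1, 1, 1, 0, 0, 0, 0, 0, 0, 0]
y_pred_2 = [1, 1, 0, 1, 0, 0, 0, 0, 0, 0]
print(classification_metrics(y_true_2, y_pred_2))
```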

Cross-Validation for Robust Assessment

Cross-validation is another powerful technique for evaluating a model’s robustness. The data is divided into multiple subsets (folds), and the model is trained on all but one fold and tested on the held-out fold, once for each fold. This helps reveal whether the model is overfitting or performing poorly on particular data splits. Averaging the results gives a more reliable estimate of the model’s performance than a single train/test split.
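Below is a minimal sketch of a k-fold cross-validation loop. The `fit` and `score` arguments are hypothetical callables that you would supply. To keep the example self-contained, they are filled in with a deliberately trivial baseline: predict the mean of the training targets, scored by mean squared error. Passing a `seed` shuffles the indices first, which matters when the data is stored in a meaningful order.

```python
import random


def k_fold_indices(n_samples, k, seed=None):
    """Split sample indices into k folds of near-equal size (shuffled if seed is given)."""
    indices = list(range(n_samples))
    if seed is not None:
        random.Random(seed).shuffle(indices)
    # The first n_samples % k folds get one extra sample.
    sizes = [n_samples // k + (1 if i < n_samples % k else 0) for i in range(k)]
    folds, start = [], 0
    for size in sizes:
        folds.append(indices[start:start + size])
        start += size
    return folds


def cross_validate(xs, ys, k, fit, score, seed=None):
    """Train on k-1 folds, score on the held-out fold, repeat k times, average."""
    scores = []
    for held_out in k_fold_indices(len(xs), k, seed):
        held = set(held_out)
        train_idx = [i for i in range(len(xs)) if i not in held]
        model = fit([xs[i] for i in train_idx], [ys[i] for i in train_idx])
        scores.append(score(model, [xs[i] for i in held_out], [ys[i] for i in held_out]))
    return sum(scores) / len(scores), scores


def fit_mean(train_x, train_y):
    """Hypothetical baseline 'model': the mean of the training targets."""
    return sum(train_y) / len(train_y)


def mse_score(model, test_x, test_y):
    """Mean squared error of the constant prediction `model` on the held-out fold."""
    return sum((model - y) ** 2 for y in test_y) / len(test_y)


xs = [float(i) for i in range(10)]
ys = [2.0 * x + 1.0 for x in xs]
mean_mse, fold_mses = cross_validate(xs, ys, k=5, fit=fit_mean, score=mse_score, seed=42)
```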

Important ML Terminologies to Remember

In the field of data analysis, understanding key terms is essential for comprehending concepts and applying them effectively. Various technical terms define the methods, processes, and results associated with data modeling, and having a solid grasp of these concepts is necessary for tackling complex tasks. Below are some of the most important terms to familiarize yourself with when working in this domain.

- Overfitting – Occurs when a model is too complex and fits the training data too closely, resulting in poor performance on unseen data.

- Underfitting – Happens when a model is too simple and fails to capture important patterns in the data, leading to low accuracy both on training and testing datasets.

- Bias – The systematic error that comes from a model’s simplifying assumptions; high bias causes the model to miss relevant patterns and leads to underfitting.

- Variance – The model’s sensitivity to fluctuations in the training data. High variance leads to overfitting; models with very low variance are often simple enough to have high bias, which leads to underfitting.

- Feature Engineering – The process of selecting, modifying, or creating new features from raw data to improve model performance.

- Training Data – The data used to train a model, allowing it to learn patterns and make predictions.

- Testing Data – The held-out dataset used to evaluate a model’s performance after it has been trained, estimating how well it generalizes to new data.

- Hyperparameters – Settings chosen before training rather than learned from the data, such as the learning rate or the number of layers in a neural network.

Having a strong foundation in these terms will help you better understand the underlying principles and challenges faced during data analysis and model development.

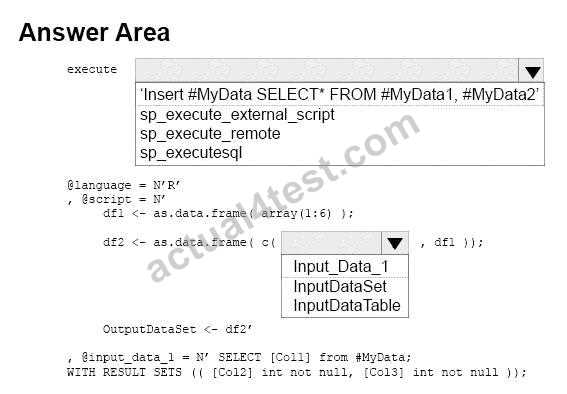

Handling Data Preprocessing Questions

When preparing data for model training, proper handling of preprocessing tasks is essential for obtaining reliable results. Preprocessing is the process of transforming raw data into a format that can be efficiently used by a model. Understanding key techniques for cleaning, transforming, and normalizing data is crucial for ensuring that the model learns from the most relevant features without being affected by noise or irrelevant information.

Common Data Preprocessing Steps

Several critical steps are involved in preparing data for use in predictive models. Below are the key actions typically taken:

- Handling Missing Values – It’s important to address any gaps in the data by either imputing missing values or removing incomplete records to maintain the quality of the dataset.

- Encoding Categorical Data – Categorical variables, such as labels or names, need to be converted into numerical format through techniques like one-hot encoding or label encoding.

- Feature Scaling – Standardization or normalization helps to bring features to a comparable scale, preventing bias towards variables with larger ranges and improving model performance.

- Outlier Detection – Identifying and handling outliers is vital to prevent them from distorting the model’s predictions and ensuring the robustness of the learning process.

- Data Transformation – Some models perform better when data is transformed, for example by applying a log transformation to reduce skew and make relationships more linear, or by adding polynomial features so a linear model can capture nonlinear relationships.
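Three of these steps are sketched below on a hypothetical toy dataset: mean imputation for missing values, one-hot encoding, and standardization (z-score scaling). The sketch assumes that missing values are represented as `None`.

```python
import math


def impute_mean(values):
    """Replace None entries with the mean of the observed values."""
    observed = [v for v in values if v is not None]
    mean = sum(observed) / len(observed)
    return [mean if v is None else v for v in values]


def one_hot(categories):
    """Encode each category as a 0/1 vector; columns are the sorted category names."""
    columns = sorted(set(categories))
    return columns, [[1 if c == col else 0 for col in columns] for c in categories]


def standardize(values):
    """Z-score scaling: subtract the mean, divide by the (population) std deviation."""
    mean = sum(values) / len(values)
    std = math.sqrt(sum((v - mean) ** 2 for v in values) / len(values))
    return [(v - mean) / std for v in values]


ages = [25.0, None, 35.0, 45.0]
cities = ["Paris", "Lima", "Paris", "Oslo"]

ages_filled = impute_mean(ages)          # → [25.0, 35.0, 35.0, 45.0]
columns, city_vectors = one_hot(cities)  # columns → ['Lima', 'Oslo', 'Paris']
ages_scaled = standardize(ages_filled)   # mean 0, standard deviation 1
```

In a real pipeline, compute the means, standard deviations, and category lists on the training data only, then reuse them to transform the validation and test data. Computing them on the full dataset leaks information from the test set into training.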

Challenges in Data Preprocessing

While preprocessing can significantly enhance model performance, it also presents challenges. Selecting the right method and ensuring that the transformed data remains meaningful requires experience and understanding of the underlying domain. Balancing between over-processing and under-processing the data is a key consideration in this phase.

Best Practices for Hyperparameter Tuning

Tuning the parameters that control the behavior of a model is a crucial step in optimizing its performance. By carefully adjusting these settings, you can significantly enhance the model’s ability to generalize to unseen data. Understanding the underlying principles of hyperparameter optimization helps in achieving better outcomes without overfitting or underfitting the model. Below are key practices to consider when tuning the parameters of your predictive models.

| Practice | Description |
|---|---|
| Grid Search | Systematically tests every combination of the specified hyperparameter values to find the best set. |
| Random Search | Samples hyperparameter values at random, exploring a wider range of combinations; often more efficient than grid search. |
| Bayesian Optimization | Uses a probabilistic model of past results to predict the most promising hyperparameters to test next, reducing the number of trials needed. |
| Early Stopping | Monitors validation performance during training and halts when it stops improving, avoiding unnecessary computation and overfitting. |
| Cross-validation | Evaluates each hyperparameter setting across different subsets of the data, giving a more reliable estimate of how well it generalizes. |

By applying these best practices, you can fine-tune your model more effectively, achieving optimal results with a better understanding of how different parameter values impact the predictive power. Properly managing the trade-offs between computational cost and model accuracy is essential in this process.
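Grid search and random search from the table above can be sketched as follows. The `validation_score` function is a hypothetical stand-in for "train a model with these hyperparameters and return its validation score". It is built to peak at a learning rate of 0.1 and a depth of 4 so the result is easy to check. Higher scores are assumed to be better.

```python
import itertools
import random


def validation_score(params):
    """Hypothetical stand-in for training a model and scoring it on validation data."""
    return -((params["learning_rate"] - 0.1) ** 2) - 0.01 * (params["max_depth"] - 4) ** 2


def grid_search(score_fn, grid):
    """Evaluate every combination in `grid`; return the best (score, params)."""
    names = list(grid)
    best = None
    for values in itertools.product(*(grid[n] for n in names)):
        params = dict(zip(names, values))
        s = score_fn(params)
        if best is None or s > best[0]:
            best = (s, params)
    return best


def random_search(score_fn, space, n_trials, seed=0):
    """Evaluate `n_trials` randomly sampled combinations; return the best (score, params)."""
    rng = random.Random(seed)
    best = None
    for _ in range(n_trials):
        params = {name: sample(rng) for name, sample in space.items()}
        s = score_fn(params)
        if best is None or s > best[0]:
            best = (s, params)
    return best


grid = {"learning_rate": [0.01, 0.1, 1.0], "max_depth": [2, 4, 8]}
print(grid_search(validation_score, grid)[1])  # → {'learning_rate': 0.1, 'max_depth': 4}

space = {
    "learning_rate": lambda rng: 10 ** rng.uniform(-3, 0),  # log-uniform in [0.001, 1]
    "max_depth": lambda rng: rng.randint(2, 8),
}
best_score, best_params = random_search(validation_score, space, n_trials=20)
```

The random-search space above samples the learning rate on a log scale. This is a common choice, because useful learning rates often span several orders of magnitude.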

Key Differences Between Deep Learning and ML

Though both deep models and traditional predictive techniques belong to the same field of data science, they differ in various aspects of their architecture, data handling, and complexity. Understanding these distinctions is essential for determining which approach to apply in a given problem, as each excels in different scenarios.

Data Dependency and Complexity

Traditional algorithms often rely on manual feature extraction and domain expertise to create the right set of features for predictions. In contrast, deep architectures automatically learn complex features from raw data, often eliminating the need for explicit feature engineering. However, this ability to work with large amounts of unstructured data comes with increased computational demands.

Model Architecture

Traditional models, like decision trees or support vector machines, typically involve simpler structures and require less computational power compared to deep neural networks. These models tend to operate on a smaller scale and focus on direct input-output relationships. Deep neural networks, on the other hand, utilize multiple layers of interconnected nodes, making them highly effective at capturing intricate patterns within large, high-dimensional datasets.

Key differences to consider include:

- Feature extraction: In classical approaches, manual intervention is necessary to define relevant features, while deep models extract features automatically.

- Data volume: Deep architectures generally require massive datasets to perform well, unlike traditional models, which can often produce satisfactory results with smaller datasets.

- Computation: Deep models demand significantly more computational resources, especially when working with large datasets and intricate structures.

- Performance: For tasks involving unstructured data, such as image or speech recognition, deep models typically outperform traditional models due to their ability to process raw data effectively.

Choosing between deep models and traditional methods ultimately depends on the task at hand, the size and quality of available data, and computational resources. Each has its place in modern data science workflows, with deep models being the go-to for large-scale, complex problems and traditional models being ideal for smaller datasets or situations requiring faster execution.

Examining Overfitting and Underfitting Concepts

In predictive modeling, it’s essential to create models that can generalize well to unseen data. However, two common pitfalls often arise during model development: overfitting and underfitting. Both scenarios prevent the model from performing optimally, but in different ways. Understanding these concepts is critical for building robust models that provide accurate predictions on new data.

Overfitting Explained

Overfitting happens when a model becomes too tailored to the training data, capturing not just the true underlying patterns but also the noise or random fluctuations. This results in a model that performs very well on the training set but struggles to generalize to new, unseen data. Overfitting is often a consequence of using an overly complex model with too many parameters relative to the amount of data available.

Underfitting Explained

Underfitting, in contrast, occurs when a model is too simplistic to capture the patterns in the data. This happens when the model lacks the necessary complexity to learn from the data adequately. Underfitting can result in poor performance on both the training set and the test set, as the model fails to learn the important relationships between features and outcomes.

Key Differences Between Overfitting and Underfitting:

| Overfitting | Underfitting |
|---|---|
| Model is too complex, capturing noise along with patterns. | Model is too simple, missing important patterns in the data. |
| Excellent performance on training data but poor performance on new data. | Poor performance on both training and test data. |
| Low bias, high variance. | High bias, low variance. |
| Occurs when the model memorizes the data instead of generalizing. | Occurs when the model does not have enough complexity to learn from the data. |

To avoid these issues, various techniques such as cross-validation, regularization, and model simplification can be employed. The goal is to find the right balance between model complexity and generalizability, ensuring that the model can make accurate predictions on both seen and unseen data.
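A small experiment reproduces the contrast in the table. The data follows y = 2x plus noise, and three models of different complexity are fitted to it:

- A constant model, which always predicts the training mean, underfits.
- A 1-nearest-neighbor model memorizes the training points and overfits.
- A least-squares line sits in between.

The noise is a deterministic +1/-1 pattern rather than random noise, so the example is reproducible. The data is hypothetical.

```python
def noise(i):
    """Deterministic stand-in for random noise: +1, -1, +1, ..."""
    return 1.0 if i % 2 == 0 else -1.0


# Hypothetical data: true relationship y = 2x, observed with noise.
train = [(float(i), 2.0 * i + noise(i)) for i in range(10)]
test = [(i + 0.25, 2.0 * (i + 0.25) + noise(i + 1)) for i in range(9)]

mean_x = sum(x for x, _ in train) / len(train)
mean_y = sum(y for _, y in train) / len(train)
# Least-squares slope and intercept for the "balanced" model.
slope = sum((x - mean_x) * (y - mean_y) for x, y in train) / sum((x - mean_x) ** 2 for x, _ in train)
intercept = mean_y - slope * mean_x


def constant_model(x):
    """Underfits: ignores x and always predicts the training mean."""
    return mean_y


def memorizer(x):
    """Overfits: 1-nearest neighbor returns the closest training target, noise included."""
    return min(train, key=lambda pair: abs(pair[0] - x))[1]


def line(x):
    """Balanced: the least-squares straight line."""
    return slope * x + intercept


def mse(model, data):
    """Mean squared error of `model` over (x, y) pairs."""
    return sum((model(x) - y) ** 2 for x, y in data) / len(data)


for name, model in [("constant", constant_model), ("1-NN", memorizer), ("line", line)]:
    print(f"{name:8s} train MSE = {mse(model, train):6.2f}   test MSE = {mse(model, test):6.2f}")
```

When you run it, each model shows its expected pattern:

- The constant model has a high error on both sets.
- The 1-nearest-neighbor model has zero training error but a higher test error than the line.
- The line has a low error on both sets.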

Strategies for Time Management in ML Exams

Effective time management is crucial when tackling assessments related to predictive modeling and data analysis tasks. With limited time to complete all the required sections, it is important to allocate your resources efficiently to ensure that you can answer all tasks while maintaining accuracy. The following strategies can help optimize your performance by focusing on key areas without rushing through complex problems.

Prioritize Tasks

When you first receive your test, take a moment to quickly scan through all the sections. Identify which tasks require more time and which ones are simpler. This will help you determine which questions should be prioritized. A strategic approach to tackling the assessment involves spending the right amount of time on each part based on its difficulty level.

- Start with easier tasks: Begin with tasks you can complete quickly and confidently. This will help build momentum and give you more time for the harder sections later.

- Tackle complex problems second: After completing easier sections, focus on the more complex questions. Since these typically require deeper analysis, they may take longer.

- Leave difficult sections for last: If a particular question is too time-consuming or unclear, move on and come back to it later. Sometimes, a fresh perspective helps solve tricky problems.

Time Allocation

Another key strategy is to allocate a specific amount of time to each section. By setting time limits for each task, you ensure that you do not dwell too long on any one section. Be realistic with your expectations, considering the time required for reading, problem-solving, and reviewing answers.

- Estimate the time per section: Divide your total available time by the number of sections to estimate how much time you should spend on each.

- Use a timer: Setting a timer for each section can help you stay on track and prevent spending too much time on any one part.

- Monitor progress: Regularly check how much time remains and adjust if you’re spending too much time on a particular task.

By staying organized, focusing on key areas first, and adhering to time constraints, you can maximize your ability to complete all tasks with accuracy. Managing time effectively also allows for sufficient time to review your work before submitting, which can help catch small mistakes and improve your overall score.