As computational linguistics grows, a working knowledge of its core techniques and models has become essential for anyone preparing for tests in the field. Success depends on being able to analyze text data, build models, and interpret results. This section walks through the topics that come up most often and helps you sharpen your skills for upcoming evaluations.

Preparation is key when facing any challenge in this area, whether you’re dealing with theoretical principles or practical implementations. By familiarizing yourself with relevant concepts, you can approach your test confidently and efficiently. The ability to break down complex topics into manageable chunks will not only enhance your understanding but also improve your problem-solving capabilities.

Throughout this article, we’ll explore various aspects of the field, providing insight into common problems and offering strategies for overcoming them. Whether you are tackling algorithms, model evaluation, or practical coding tasks, these guidelines will help you stay on track and perform well in any assessment.

Mastering Key Topics for NLP Assessments

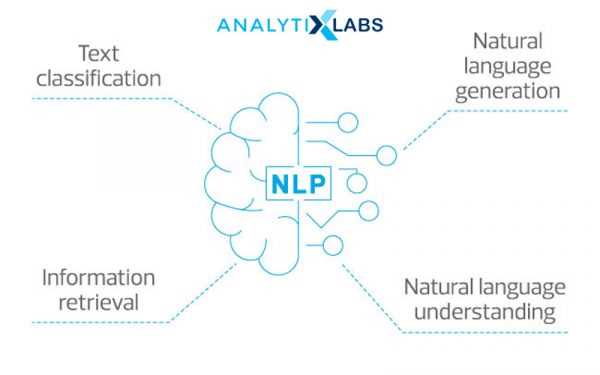

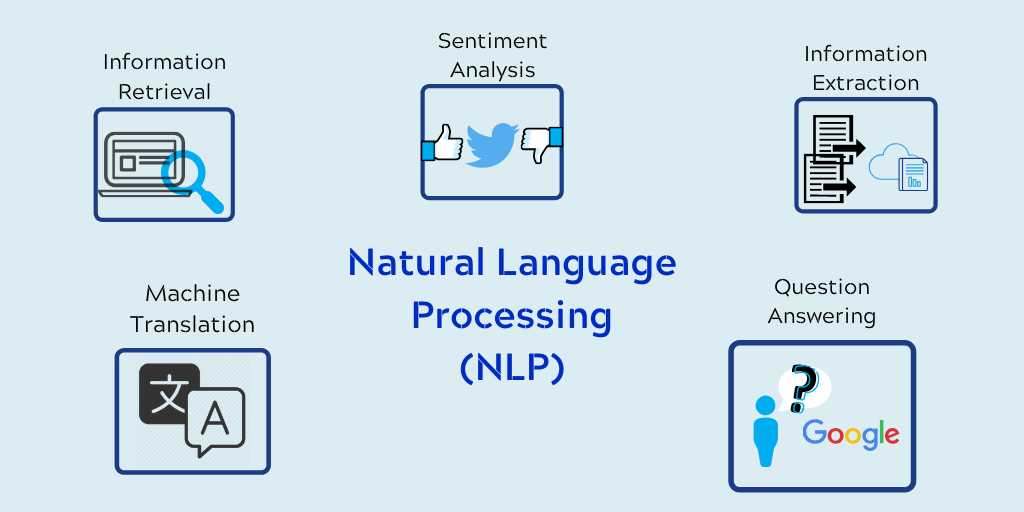

When preparing for evaluations in the field of computational text analysis, it’s important to understand both the theoretical foundations and practical applications. Key topics often cover a range of methods and algorithms used to analyze, interpret, and manipulate written data. Gaining familiarity with these concepts will help you tackle different kinds of problems, whether you are working with text classification, sentiment analysis, or machine learning models.

Core topics typically include understanding various techniques, such as tokenization, part-of-speech tagging, and named entity recognition. These methods are fundamental to breaking down large text datasets and extracting meaningful information. Another critical area is model training and evaluation, where you’ll learn how to measure the accuracy of different approaches and optimize them for better performance.

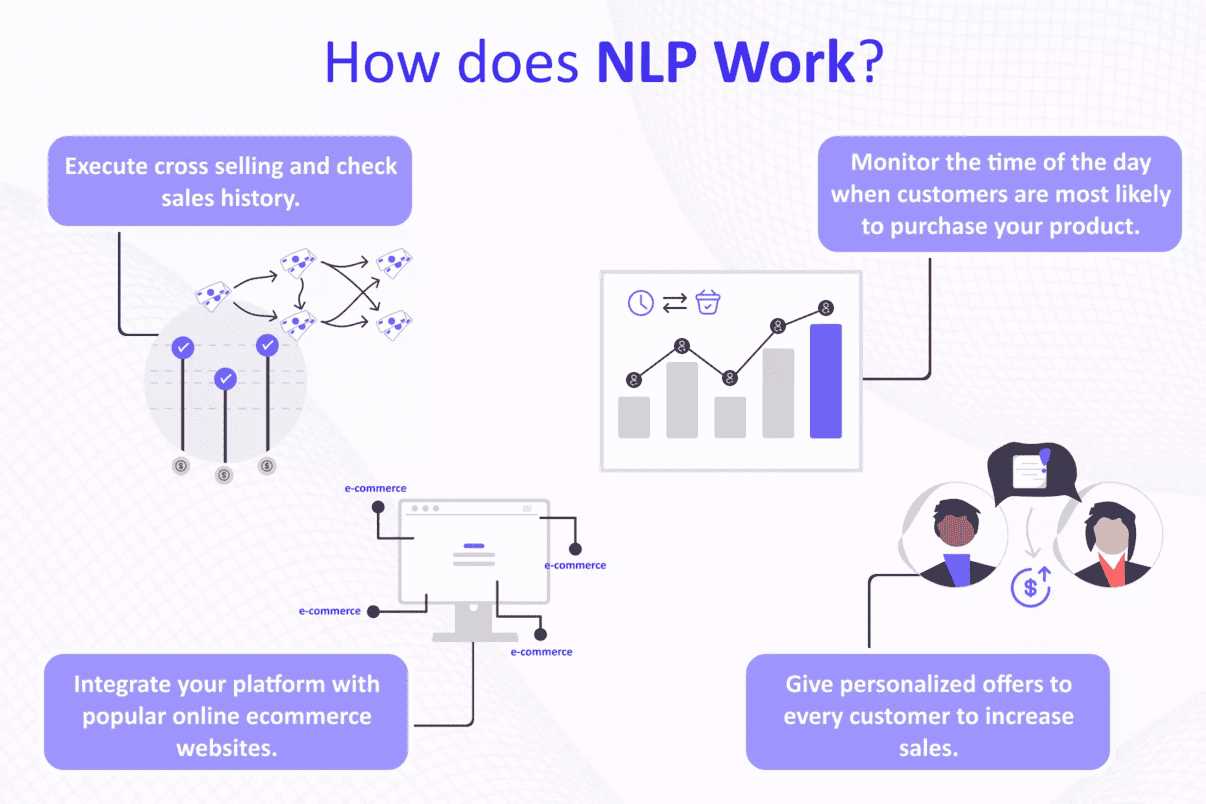

Additionally, practical skills in implementing models are equally essential. For example, you may be asked to demonstrate how a certain algorithm can be applied to solve real-world challenges, like identifying patterns in customer feedback or classifying documents by topic. Mastering both theory and practice will prepare you for any task that may appear on an evaluation in this field.

Understanding NLP Concepts for Assessments

To succeed in any test related to computational text analysis, it’s essential to build a solid understanding of the foundational concepts. These core ideas often revolve around how text data can be represented, processed, and analyzed through various methods. A deep grasp of these topics allows you to approach complex problems confidently and efficiently, whether they involve machine learning models or algorithmic techniques.

Among the most important concepts are methods like tokenization, which breaks down text into smaller, meaningful units, and vectorization, which transforms text into numerical data for model training. Another critical area is syntactic structure analysis, which helps in understanding sentence construction and relationships between words. Being familiar with these core principles ensures that you’re well-equipped to tackle a variety of challenges in any evaluation.

As you delve into these concepts, it’s also helpful to consider how they are applied in real-world scenarios. This practical approach will deepen your understanding and provide the tools needed to implement solutions effectively, whether you’re working on predictive models or text classification tasks. Mastery of these basic ideas is key to performing well in assessments that focus on computational methods for text analysis.

Common Challenges in NLP Assessments

When facing evaluations in the field of text analysis, many individuals encounter specific obstacles that test both their theoretical understanding and practical skills. These challenges often stem from the complexity of working with unstructured data, where subtle nuances in meaning or structure can lead to difficulties in interpretation and processing. Understanding these common hurdles can significantly improve your approach to solving problems efficiently.

One major difficulty is handling ambiguity in text, where words or phrases can have multiple meanings depending on context. Another challenge is working with noisy or incomplete data, which is often encountered in real-world scenarios. Preprocessing tasks like cleaning and normalizing data can be time-consuming and error-prone, but they are crucial for building accurate models. Additionally, the computational cost of training sophisticated models, especially deep learning networks, can be a limiting factor for many learners.

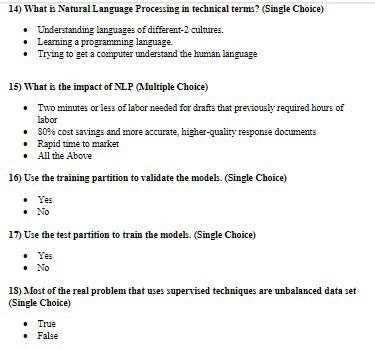

In addition to these technical challenges, there’s also the need to understand the performance metrics used to evaluate models. Determining how well a model generalizes to new data, versus overfitting to the training set, is another common area of difficulty. Overcoming these obstacles requires both a solid theoretical foundation and practical experience in implementing effective solutions.

Key Techniques in Text Analysis

In the field of computational text analysis, various methods are used to extract meaning from written content. These techniques enable the conversion of unstructured data into actionable insights. A strong understanding of these core methods is essential for tackling tasks ranging from sentiment detection to document classification.

Tokenization and Text Segmentation

One of the foundational techniques in this field is breaking down text into smaller, manageable units. This process, known as tokenization, is often the first step in preparing text for further analysis. Common sub-tasks in tokenization include:

- Dividing text into words or phrases

- Handling punctuation and special characters

- Splitting text based on whitespace or delimiters

Once tokenized, the next step is often text segmentation, which groups tokens into larger units such as sentences, paragraphs, or topical sections. Sentence boundary detection, for example, has to decide whether a period ends a sentence or belongs to an abbreviation such as “Dr.”
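The two steps described above can be sketched with regular expressions. This is a minimal illustration rather than a production tokenizer: the pattern and the function names `tokenize` and `split_sentences` are assumptions made for this example, and the sentence splitter is deliberately naive.

```python
import re

# A token is a run of word characters (optionally with an internal
# apostrophe, as in "isn't") or a single punctuation character.
TOKEN_PATTERN = re.compile(r"\w+(?:'\w+)?|[^\w\s]")


def tokenize(text):
    """Split text into word and punctuation tokens."""
    return TOKEN_PATTERN.findall(text)


def split_sentences(text):
    """Naive sentence boundary detection: split after '.', '!' or '?'
    when it is followed by whitespace."""
    return [s for s in re.split(r"(?<=[.!?])\s+", text.strip()) if s]


text = "Tokenization isn't hard. It is the first step!"
sentences = split_sentences(text)
tokens = [tokenize(sentence) for sentence in sentences]

print(sentences)  # ["Tokenization isn't hard.", 'It is the first step!']
print(tokens[0])  # ['Tokenization', "isn't", 'hard', '.']
```

Because the splitter treats every period followed by a space as a boundary, abbreviations would be split incorrectly. Library tokenizers handle such cases with additional rules or trained models.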

Text Representation and Vectorization

Another essential technique is transforming text into a numerical format that algorithms can process. Text representation methods, such as:

- Bag-of-words (BoW)

- TF-IDF (Term Frequency-Inverse Document Frequency)

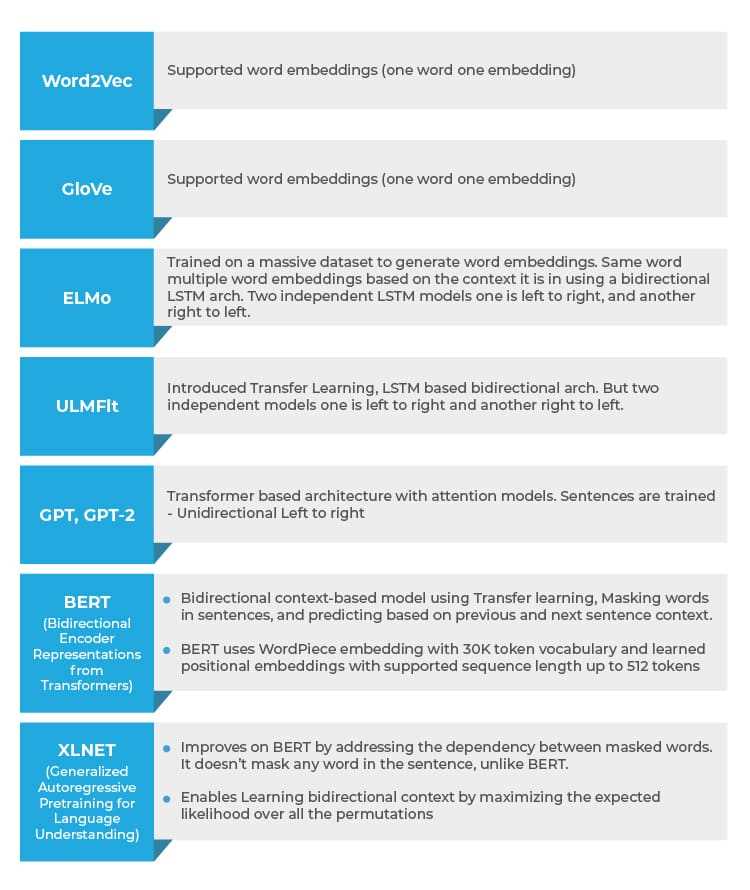

- Word embeddings (e.g., Word2Vec, GloVe)

These approaches allow machines to analyze and compare textual data. They capture different levels of meaning and contextual information, from the frequency of individual words to complex relationships between them.
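To make the first two representations concrete, here is a small sketch that builds bag-of-words and TF-IDF vectors by hand. The three documents are made up for illustration, and the weighting is the plain textbook form (term frequency times log of inverse document frequency).

```python
import math
from collections import Counter

# Toy corpus (hypothetical documents for illustration only).
docs = [
    "the cat sat on the mat",
    "the dog sat on the log",
    "cats and dogs",
]
tokenized = [doc.split() for doc in docs]
vocab = sorted({word for doc in tokenized for word in doc})


def bag_of_words(tokens):
    """Count how often each vocabulary word occurs in one document."""
    counts = Counter(tokens)
    return [counts[word] for word in vocab]


def idf(term):
    """Inverse document frequency: log(N / number of docs containing term)."""
    doc_freq = sum(1 for doc in tokenized if term in doc)
    return math.log(len(tokenized) / doc_freq)


def tf_idf(tokens):
    """Relative term frequency weighted by inverse document frequency."""
    counts = Counter(tokens)
    return [(counts[word] / len(tokens)) * idf(word) for word in vocab]


bow = [bag_of_words(doc) for doc in tokenized]
tfidf = [tf_idf(doc) for doc in tokenized]

print(vocab)
print(bow[0])
```

In the first document, "the" occurs twice but also appears in another document, so its TF-IDF weight ends up lower than that of "cat", which occurs once and only there. Library implementations such as scikit-learn's `TfidfVectorizer` apply smoothing and normalization by default, so their numbers will differ from this sketch.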

Mastering these techniques is vital for successfully implementing more advanced models and ensuring that your analysis is both accurate and efficient.

Machine Learning Approaches in NLP

In the realm of text analysis, machine learning techniques play a crucial role in automating tasks such as classification, prediction, and clustering. These approaches allow models to learn from data, improve over time, and tackle complex challenges that would be difficult to solve manually. By training algorithms on large datasets, machine learning models can discern patterns and make decisions with minimal human intervention.

Supervised Learning

Supervised learning is one of the most commonly used methods in this field. It involves training a model on labeled data, where the input data is paired with the correct output. The goal is to teach the model to generalize from the training examples so that it can predict the outcomes for unseen data. Some popular supervised learning algorithms include:

| Algorithm | Use Case |
|---|---|
| Logistic Regression | Text classification, sentiment analysis |
| Support Vector Machines | Document categorization |
| Random Forest | Document classification with hand-crafted features |
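As a rough illustration of learning from labeled data, the sketch below trains a logistic regression classifier with stochastic gradient descent on bag-of-words counts. The four-word vocabulary, the training examples, and the hyperparameters are all hypothetical, and the training loop is a bare-bones version with no regularization.

```python
import math

# Hypothetical vocabulary; each feature is the count of one word.
VOCAB = ["great", "love", "bad", "boring"]

# Labeled training data: 1 = positive review, 0 = negative review.
X = [[1, 1, 0, 0], [1, 0, 0, 0], [0, 0, 1, 1], [0, 0, 1, 0]]
y = [1, 1, 0, 0]


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def predict_proba(weights, bias, features):
    """Probability that the example belongs to the positive class."""
    return sigmoid(bias + sum(w * f for w, f in zip(weights, features)))


def train_logistic_regression(X, y, lr=0.5, epochs=200):
    """Fit weights with per-example gradient updates on the log loss."""
    weights = [0.0] * len(X[0])
    bias = 0.0
    for _ in range(epochs):
        for features, label in zip(X, y):
            error = predict_proba(weights, bias, features) - label
            weights = [w - lr * error * f for w, f in zip(weights, features)]
            bias -= lr * error
    return weights, bias


weights, bias = train_logistic_regression(X, y)
predicted = [1 if predict_proba(weights, bias, x) >= 0.5 else 0 for x in X]
print(dict(zip(VOCAB, (round(w, 2) for w in weights))))
print(predicted)
```

The learned weights are positive for "great" and "love" and negative for "bad" and "boring", which is what makes this kind of model easy to inspect. In practice you would evaluate on held-out data rather than on the training examples.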

Unsupervised Learning

Unsupervised learning, on the other hand, involves training models on data that does not have predefined labels. This approach is useful for discovering hidden patterns or structures within text data. Common techniques in unsupervised learning include:

- Clustering algorithms (e.g., K-means)

- Dimensionality reduction (e.g., PCA, t-SNE)

- Topic modeling (e.g., Latent Dirichlet Allocation)

By leveraging unsupervised methods, models can uncover relationships within large datasets and provide insights that might otherwise remain hidden.
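The clustering idea can be sketched with a small K-means implementation. The document vectors below are hypothetical word counts, and the centroids are initialized from the first `k` points to keep the run deterministic; real implementations usually use randomized or k-means++ initialization.

```python
def squared_distance(a, b):
    return sum((x - y) ** 2 for x, y in zip(a, b))


def mean(points):
    """Component-wise mean of a non-empty list of vectors."""
    return [sum(column) / len(points) for column in zip(*points)]


def k_means(points, k, iterations=10):
    """Alternate between assigning points to the nearest centroid
    and moving each centroid to the mean of its members."""
    centroids = [list(p) for p in points[:k]]  # assumption: simple fixed init
    assignments = [0] * len(points)
    for _ in range(iterations):
        assignments = [
            min(range(k), key=lambda c: squared_distance(p, centroids[c]))
            for p in points
        ]
        for c in range(k):
            members = [p for p, a in zip(points, assignments) if a == c]
            if members:  # keep the old centroid if a cluster is empty
                centroids[c] = mean(members)
    return assignments, centroids


# Hypothetical counts of the words ["goal", "match", "vote", "election"].
doc_vectors = [
    [3, 2, 0, 0],
    [0, 0, 2, 3],
    [2, 3, 0, 1],
    [0, 1, 3, 2],
]
labels, centroids = k_means(doc_vectors, k=2)
print(labels)  # [0, 1, 0, 1]
```

No labels were provided, yet the sports-like and politics-like documents end up in separate clusters because their count vectors are close to each other.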

Important Algorithms for Language Processing

In text analysis, algorithms serve as the backbone for many tasks, from categorizing documents to extracting meaningful features from raw data. These computational techniques enable systems to interpret large volumes of unstructured information and produce accurate, actionable insights. Understanding the key algorithms in this domain is essential for building effective models that can handle complex linguistic data.

Text Classification Algorithms

Text classification is a common task in computational linguistics, where the goal is to assign predefined labels to text. Several algorithms are frequently used to solve this problem, including:

| Algorithm | Application |
|---|---|
| Naive Bayes | Spam detection, sentiment analysis |
| Support Vector Machine (SVM) | Document categorization, topic classification |
| Decision Trees | Text classification, feature selection |
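To show how the first algorithm in the table works, here is a minimal multinomial Naive Bayes sketch for spam detection with add-one (Laplace) smoothing. The training messages are invented for illustration, and words not seen during training are simply ignored at prediction time, which is one of several possible conventions.

```python
import math
from collections import Counter, defaultdict


def train_naive_bayes(examples):
    """examples: list of (tokens, label) pairs. Returns the counts
    needed to score new documents."""
    label_counts = Counter()
    word_counts = defaultdict(Counter)
    vocab = set()
    for tokens, label in examples:
        label_counts[label] += 1
        word_counts[label].update(tokens)
        vocab.update(tokens)
    return label_counts, word_counts, vocab


def predict(model, tokens):
    """Return the label with the highest log posterior score."""
    label_counts, word_counts, vocab = model
    total_docs = sum(label_counts.values())
    best_label, best_score = None, float("-inf")
    for label in label_counts:
        score = math.log(label_counts[label] / total_docs)  # log prior
        total_words = sum(word_counts[label].values())
        for token in tokens:
            if token not in vocab:
                continue  # assumption: skip words never seen in training
            # Add-one smoothing avoids zero probabilities.
            score += math.log(
                (word_counts[label][token] + 1) / (total_words + len(vocab))
            )
        if score > best_score:
            best_label, best_score = label, score
    return best_label


# Hypothetical training messages.
training = [
    ("win cash prize now".split(), "spam"),
    ("free prize claim now".split(), "spam"),
    ("meeting agenda for monday".split(), "ham"),
    ("lunch on monday".split(), "ham"),
]
model = train_naive_bayes(training)

print(predict(model, "claim free cash".split()))  # spam
print(predict(model, "monday meeting".split()))   # ham
```

The independence assumption shows up in the inner loop: each word contributes its own log probability, with no interaction between words.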

Sequence Labeling Algorithms

Sequence labeling involves assigning labels to elements of a sequence, such as words in a sentence. Some widely used algorithms include:

- Hidden Markov Models (HMM)

- Conditional Random Fields (CRF)

- Recurrent Neural Networks (RNN)

These algorithms are essential for tasks like part-of-speech tagging, named entity recognition, and chunking (shallow syntactic parsing), where context is important for accurately labeling each element in the sequence.
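As an illustration of how a Hidden Markov Model labels a sequence, the sketch below runs the Viterbi algorithm over a two-tag model. All probabilities are hand-picked, hypothetical values rather than estimates from a corpus, and every input word must appear in the emission table because the sketch has no unknown-word handling.

```python
import math

STATES = ["NOUN", "VERB"]

# Hypothetical model parameters, chosen by hand for illustration.
START_P = {"NOUN": 0.7, "VERB": 0.3}
TRANS_P = {
    "NOUN": {"NOUN": 0.3, "VERB": 0.7},
    "VERB": {"NOUN": 0.8, "VERB": 0.2},
}
EMIT_P = {
    "NOUN": {"dogs": 0.5, "bark": 0.1, "cats": 0.4},
    "VERB": {"dogs": 0.05, "bark": 0.9, "cats": 0.05},
}


def viterbi(words):
    """Return the most probable tag sequence for the given words."""
    # Each column maps state -> (best log-probability, previous state).
    columns = [{
        s: (math.log(START_P[s]) + math.log(EMIT_P[s][words[0]]), None)
        for s in STATES
    }]
    for word in words[1:]:
        column = {}
        for s in STATES:
            prev, score = max(
                ((p, columns[-1][p][0] + math.log(TRANS_P[p][s])) for p in STATES),
                key=lambda pair: pair[1],
            )
            column[s] = (score + math.log(EMIT_P[s][word]), prev)
        columns.append(column)

    # Backtrack from the best final state.
    state = max(STATES, key=lambda s: columns[-1][s][0])
    path = [state]
    for column in reversed(columns[1:]):
        state = column[state][1]
        path.append(state)
    return list(reversed(path))


print(viterbi(["dogs", "bark"]))  # ['NOUN', 'VERB']
```

Working in log space avoids numerical underflow on longer sentences, since products of many small probabilities become sums.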

Data Preprocessing for NLP Exams

Before any meaningful analysis or model building can take place, raw text data must be prepared and cleaned. This initial step is crucial for improving the performance of models and ensuring accurate results. Proper data preparation involves removing noise, handling inconsistencies, and transforming text into a structured format that machine learning algorithms can process effectively.

The preprocessing pipeline typically involves several stages, such as:

- Text cleaning: Removing unwanted characters, punctuation, and special symbols.

- Tokenization: Splitting text into words or phrases to facilitate analysis.

- Lowercasing: Converting all text to lowercase to ensure uniformity.

- Stopword removal: Eliminating common words (e.g., “the”, “is”) that don’t add meaningful information.

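- Stemming and Lemmatization: Reducing words to a common base form so that variations are grouped together. Stemming strips suffixes heuristically, while lemmatization maps each word to its dictionary form (lemma).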

By applying these preprocessing techniques, raw data is transformed into a clean, structured form, ready for use in machine learning models or further analysis. Mastering this essential step can greatly enhance the accuracy and efficiency of your work in the field of text analysis.
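The stages listed above can be chained into a single function. The following is a simplified sketch: the stopword list is a tiny illustrative subset, and `crude_stem` is a toy suffix stripper written for this example, not the Porter algorithm or any library stemmer.

```python
import re

# Tiny illustrative stopword list; real lists contain many more entries.
STOPWORDS = {"the", "is", "a", "an", "and", "of", "to", "in", "are"}


def crude_stem(word):
    """Toy stemmer: strip one common suffix if at least three letters remain."""
    for suffix in ("ing", "ed", "es", "s"):
        if word.endswith(suffix) and len(word) - len(suffix) >= 3:
            return word[: -len(suffix)]
    return word


def preprocess(text):
    text = text.lower()                                  # lowercasing
    text = re.sub(r"[^a-z0-9\s]", " ", text)             # text cleaning
    tokens = text.split()                                # tokenization
    tokens = [t for t in tokens if t not in STOPWORDS]   # stopword removal
    return [crude_stem(t) for t in tokens]               # stemming


print(preprocess("The cats are chasing the dogs!"))  # ['cat', 'chas', 'dog']
```

The output "chas" shows a typical side effect of stemming: stems are not always real words. A lemmatizer would return "chase" instead, at the cost of needing a dictionary or a trained model.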

Text Classification Methods You Should Know

Text classification is a fundamental task in computational linguistics, aimed at categorizing text into predefined categories. Whether for spam detection, sentiment analysis, or topic categorization, the ability to accurately classify text is essential for many applications. There are several key methods used to perform text classification, each with its unique advantages and challenges.

Here are some of the most widely used approaches in text categorization:

- Logistic Regression: A simple yet effective algorithm for binary and multiclass classification tasks. It is easy to implement and easy to interpret.

- Naive Bayes: Based on probabilistic models, this method assumes independence between features and is particularly effective for text classification in spam filtering and sentiment analysis.

- Support Vector Machines (SVM): Known for its robustness, SVM is effective for both linear and nonlinear text classification tasks. It works well with high-dimensional data such as text.

- Decision Trees: A tree-based model that splits data based on feature values to classify text. It’s easy to understand and visualize but may suffer from overfitting without proper tuning.

- Deep Learning (Neural Networks): Advanced models, such as convolutional or recurrent neural networks, are particularly effective in complex classification tasks involving large amounts of unstructured data.

Each of these methods has its strengths, and choosing the right approach depends on the dataset, the complexity of the task, and the computational resources available. Understanding these classification techniques can significantly improve the accuracy and efficiency of your models.

Deep Learning Applications in NLP

Deep learning techniques have revolutionized the field of text analysis, offering powerful models that can learn from large amounts of unstructured data. By utilizing layered neural networks, these models are capable of understanding intricate patterns in textual data, making them invaluable for tasks that were previously challenging or infeasible with traditional methods.

Some of the most notable applications of deep learning in text-based tasks include:

- Sentiment Analysis: Deep neural networks can effectively predict the sentiment of a text, whether positive, negative, or neutral, by learning complex relationships between words and context.

- Machine Translation: Deep learning models, particularly recurrent neural networks (RNNs) and transformer models, have made significant advancements in translating text between languages with high accuracy.

- Named Entity Recognition (NER): These models identify and classify key entities in text, such as names, locations, dates, and organizations, which is crucial for understanding and structuring information.

- Text Summarization: Using encoder-decoder architectures, deep learning models can generate concise summaries of long documents while preserving important information.

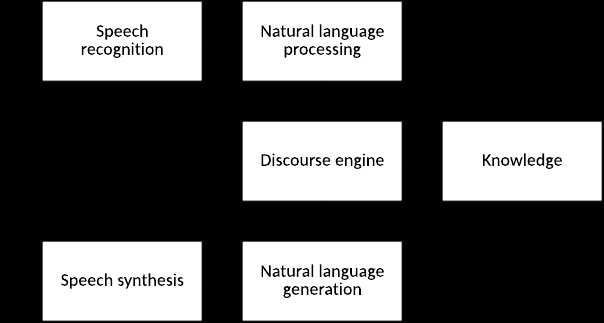

- Speech Recognition: Deep learning models, particularly those utilizing convolutional neural networks (CNNs) and long short-term memory (LSTM) networks, enable accurate speech-to-text conversion.

By leveraging the power of deep learning, these applications are not only more efficient but also provide more nuanced and accurate results, contributing to the continued evolution of text analysis and related fields.

Understanding Syntax and Semantics

In text analysis, two critical components shape how we interpret and understand textual data: structure and meaning. The structure of sentences governs how words are arranged to form coherent thoughts, while meaning focuses on the interpretation of these arrangements. Together, these elements help models derive contextual understanding, enabling accurate analysis of content.

Understanding both the structural organization and the conveyed meaning is essential in building systems that can interpret complex data. The relationship between structure (syntax) and meaning (semantics) plays a vital role in tasks like machine translation, question answering, and sentiment analysis.

Syntax: The Structure of Sentences

Syntax refers to the rules that dictate the arrangement of words and phrases to create well-formed sentences. By analyzing syntax, machines can identify sentence components and their relationships. Common syntactical structures include:

| Structure Type | Description |
|---|---|
| Phrase Structure Grammar | Organizes sentences into hierarchically structured phrases, such as noun and verb phrases. |
| Dependency Parsing | Identifies relationships between words based on syntactic dependencies, like subject-verb-object relationships. |

Semantics: The Meaning Behind the Words

Semantics deals with the meaning conveyed by words and their arrangement. Unlike syntax, which focuses on form, semantics is concerned with the interpretation of content. Some important areas of semantics include:

- Word Sense Disambiguation: Determining the meaning of words with multiple possible interpretations based on context.

- Named Entity Recognition: Identifying and classifying entities such as people, places, and organizations in text.

- Sentiment Analysis: Extracting subjective information, such as the sentiment or emotional tone conveyed in the text.

By combining insights from both syntax and semantics, we can develop more robust models capable of understanding complex texts, enhancing accuracy in tasks ranging from machine translation to content summarization.

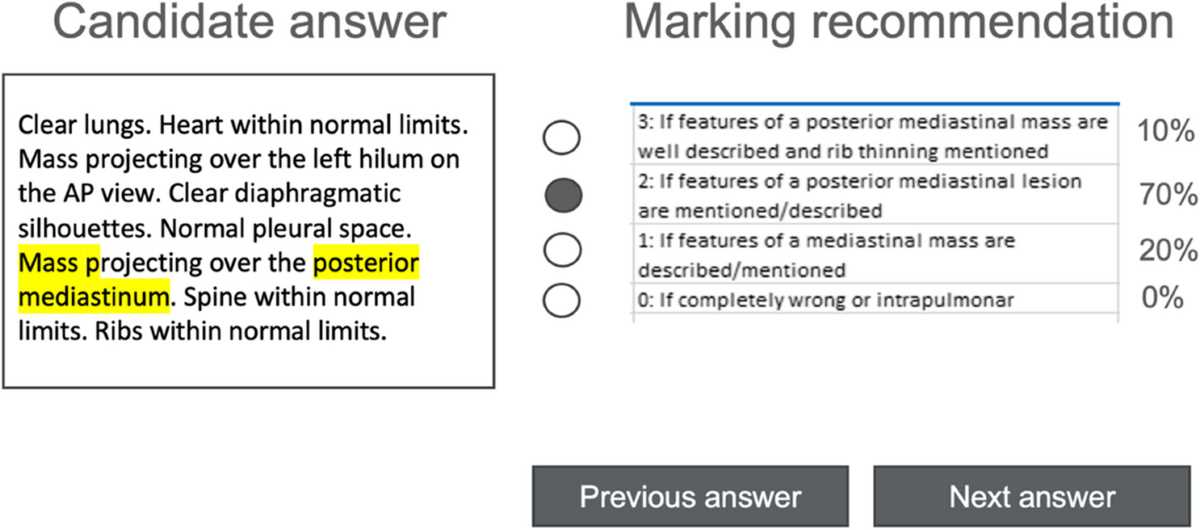

Evaluating NLP Model Performance

To assess the effectiveness of models designed for text analysis, it is essential to use proper evaluation metrics. These metrics help in determining how well a model is performing and highlight areas that require improvement. By measuring accuracy, precision, recall, and other relevant metrics, one can understand how well the model interprets and processes the data.

The evaluation process involves comparing the model’s predictions to the actual outcomes. Depending on the task (classification, extraction, or generation), different metrics may be more or less useful. Below are some common methods for evaluating model performance:

- Accuracy: The percentage of correct predictions made by the model. It is simple and works well for balanced datasets, but it can be misleading when one class dominates.

- Precision: Measures the ratio of correctly predicted positive observations to the total predicted positives. It’s particularly important in tasks where false positives are costly.

- Recall: Also known as sensitivity, it calculates the ratio of correctly predicted positive observations to all actual positives. It is crucial when false negatives need to be minimized.

- F1 Score: The harmonic mean of precision and recall. This metric is used when the balance between precision and recall is important, especially in imbalanced datasets.

- Confusion Matrix: A table used to describe the performance of a classification model, showing true positives, true negatives, false positives, and false negatives.

For tasks that involve multiple classes or require ranking, more advanced evaluation methods are necessary. It is important to select metrics based on the specific characteristics of the model and task to get a true sense of its performance.
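For the binary case, the metrics listed above can be computed directly from the four confusion matrix cells. The labels and predictions below are made up for illustration, and the helper names are assumptions for this sketch.

```python
from collections import Counter


def confusion_counts(y_true, y_pred, positive):
    """Count true/false positives and negatives for one positive class."""
    counts = Counter()
    for truth, pred in zip(y_true, y_pred):
        if pred == positive:
            counts["tp" if truth == positive else "fp"] += 1
        else:
            counts["fn" if truth == positive else "tn"] += 1
    return counts


def classification_metrics(y_true, y_pred, positive):
    c = confusion_counts(y_true, y_pred, positive)
    tp, fp, fn, tn = c["tp"], c["fp"], c["fn"], c["tn"]
    accuracy = (tp + tn) / len(y_true)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = (2 * precision * recall / (precision + recall)
          if precision + recall else 0.0)
    return {"accuracy": accuracy, "precision": precision,
            "recall": recall, "f1": f1}


# Hypothetical gold labels and model predictions.
y_true = ["spam", "spam", "ham", "ham", "spam", "ham", "ham", "ham"]
y_pred = ["spam", "ham", "ham", "spam", "spam", "ham", "ham", "ham"]

result = classification_metrics(y_true, y_pred, positive="spam")
print(result)
```

Here the model makes two correct spam predictions, one false positive, and one false negative, so accuracy is 0.75 while precision, recall, and F1 are each 2/3. The zero-division guards return 0.0 when a metric is undefined, which is one common convention.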

Commonly Asked NLP Exam Questions

When preparing for assessments in text analysis, it’s essential to understand the kinds of inquiries that often arise. These questions typically focus on core concepts, algorithms, applications, and challenges in the field. Familiarity with common topics can help in effectively addressing tasks and scenarios presented during the evaluation.

Below are some examples of common topics that tend to be tested in assessments related to this field. They provide a solid foundation for understanding the key areas you’ll likely encounter.

Conceptual Questions

- What is the difference between supervised and unsupervised methods in text analysis?

- Explain the role of tokenization in text processing.

- What are the key challenges when dealing with ambiguity in text?

- Describe how stemming differs from lemmatization and provide examples.

Application-Oriented Questions

- How would you approach building a sentiment analysis model for product reviews?

- What techniques would you use for named entity recognition in a news article?

- Discuss the advantages and limitations of using deep learning for text classification tasks.

- What preprocessing steps would be necessary before applying a machine learning model to text data?

By preparing for such topics, you’ll be better equipped to demonstrate both your theoretical understanding and practical application of various techniques in text analysis.

How to Solve NLP Problems Effectively

To tackle challenges in the field of text analysis, it is crucial to adopt a structured approach. The process often involves understanding the problem, preparing the data, selecting appropriate techniques, and evaluating the results. By following a clear methodology, you can improve both the accuracy and efficiency of your solutions.

Here are some key strategies to effectively address problems in this domain:

Understanding the Problem

- Begin by clearly defining the task. Are you working on classification, extraction, or generation? Each task has different requirements and methods.

- Identify the specific goals. For example, are you trying to improve model performance, reduce errors, or enhance interpretability?

- Understand the challenges associated with the problem. Text data can be messy, ambiguous, and high-dimensional, so it’s important to anticipate difficulties that may arise during model building.

Data Preparation and Preprocessing

- Clean and preprocess your data. This includes removing irrelevant content, normalizing text, handling missing values, and splitting the data into training and testing sets.

- Tokenize and vectorize the text. This step transforms raw text into a format suitable for machine learning algorithms.

- Consider applying techniques like lemmatization or stemming to improve consistency in the text.
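The train/test split mentioned in the first step can be sketched in a few lines. The function name, the 80/20 ratio, and the fixed seed are assumptions for this example; the point is that the data is shuffled once and the two parts never overlap.

```python
import random


def train_test_split(examples, test_fraction=0.2, seed=0):
    """Shuffle a copy of the data and hold out a fraction for testing."""
    rng = random.Random(seed)  # fixed seed makes the split reproducible
    shuffled = list(examples)
    rng.shuffle(shuffled)
    n_test = max(1, int(len(shuffled) * test_fraction))
    return shuffled[n_test:], shuffled[:n_test]


# Hypothetical dataset of (text, label) pairs.
dataset = [(f"document {i}", i % 2) for i in range(10)]
train, test = train_test_split(dataset)
print(len(train), len(test))  # 8 2
```

Any statistics used in preprocessing, such as the vocabulary or IDF weights, should be computed from the training portion only, so that the test set remains a fair estimate of performance on unseen data.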

By carefully following these steps, you can effectively solve problems in text analysis, yielding more accurate and robust models for a variety of applications.

Preparing for NLP Practical Tests

Practical tests in the field of text analysis often require not just theoretical knowledge, but also the ability to apply algorithms and techniques to real-world problems. Preparing for these assessments involves building hands-on experience and being ready to demonstrate your skills in coding, model implementation, and problem-solving. A well-prepared candidate should be familiar with the tools and methods commonly used in the field, as well as be able to optimize and troubleshoot models effectively.

Here are some key steps to help you prepare for practical assessments:

Master the Key Tools and Libraries

- Familiarize yourself with popular libraries such as scikit-learn, TensorFlow, PyTorch, and spaCy.

- Learn how to manipulate datasets using Pandas and NumPy.

- Understand how to build pipelines for data preprocessing, feature extraction, and model evaluation.

Build Strong Problem-Solving Skills

- Practice solving problems related to text classification, sentiment analysis, named entity recognition, and clustering.

- Work with datasets of varying complexity, ensuring you can handle noisy, unstructured text data.

- Develop a deep understanding of model tuning, including selecting the right hyperparameters and understanding evaluation metrics such as accuracy, precision, recall, and F1 score.

Simulate Real-World Scenarios

- Take part in coding challenges and competitions, such as those on Kaggle, to improve your practical skills.

- Work on small projects that involve end-to-end implementation, from data collection and cleaning to model evaluation and deployment.

By following these steps and practicing regularly, you’ll be well-prepared to perform effectively in practical tests, demonstrating both your technical expertise and your ability to solve real-world text analysis problems.

Study Resources for NLP Exams

Effective preparation for assessments in text analysis involves utilizing a range of study materials that cover both theoretical concepts and practical skills. These resources will help you understand core topics such as algorithms, techniques, and common challenges. A variety of books, online courses, and research papers can provide a comprehensive learning experience, while hands-on practice will reinforce your understanding.

Here are some key resources to help you prepare:

Books

- Speech and Language Processing by Jurafsky and Martin – A thorough textbook covering the foundations and modern advancements in the field.

- Python Machine Learning by Sebastian Raschka – A great resource for learning machine learning techniques with Python, focusing on implementation.

- Deep Learning with Python by François Chollet – This book provides insight into deep learning techniques, including their application to text data.

Online Courses

- Coursera: Applied Text Mining in Python – A practical course that focuses on text data analysis using Python.

- edX: Introduction to Artificial Intelligence (AI) – A comprehensive introduction to AI, with several sections focused on text and speech data processing.

- Udemy: NLP with Deep Learning in Python – A highly rated course offering in-depth training on advanced methods for working with text data.

Research Papers and Journals

- ACL Anthology – A collection of papers from the Association for Computational Linguistics that covers cutting-edge research in text analysis.

- Journal of Machine Learning Research – Features high-quality articles on machine learning techniques applicable to text data.

- arXiv: cs.CL – A preprint repository where you can find the latest research on computational linguistics.

Practical Platforms

- Kaggle – A platform that offers hands-on challenges, competitions, and datasets to practice text analysis techniques.

- GitHub – Explore repositories with open-source projects, code snippets, and tutorials related to text data analysis.

- Google Colab – A cloud-based environment that allows you to practice coding in Python with pre-installed libraries for text analysis.

By utilizing these diverse resources, you can gain a solid understanding of the subject and prepare effectively for any assessment related to text analysis techniques.

Importance of Tokenization in NLP

Tokenization is a critical initial step in the analysis of textual data. It involves breaking down text into smaller, manageable components, known as tokens, which can be words, phrases, or other meaningful units. This process is essential as it prepares the data for further analysis, making it easier to identify patterns, extract information, and perform various machine learning tasks.

Key Benefits of Tokenization

- Facilitates Text Analysis – By dividing text into tokens, it becomes easier to process and analyze individual elements such as words or phrases, aiding in tasks like sentiment analysis, classification, and clustering.

- Improves Model Accuracy – Proper tokenization helps improve the performance of algorithms by providing clear, distinct units of data for learning models to work with, enhancing the accuracy of predictions and results.

- Handles Different Data Structures – Tokenization allows for flexible handling of various data formats, from simple sentences to complex documents, ensuring that the system can work with both structured and unstructured text.

- Reduces Data Noise – Tokenization is usually combined with cleaning steps that drop stray symbols and extra whitespace, so later stages work with cleaner input and run more efficiently.

Types of Tokenization Techniques

- Word Tokenization – Splits the text into individual words, which is often the most straightforward approach.

- Sentence Tokenization – Breaks down a passage into sentences, which is useful for understanding the structure and flow of the text.

- Subword Tokenization – Divides words into smaller units, such as frequent character sequences learned from data (e.g., byte-pair encoding) or prefixes, stems, and suffixes, which helps in handling rare or unseen words.

- Character Tokenization – Involves breaking text down into individual characters, which is particularly useful for tasks like spelling correction or handling languages with complex scripts.

Tokenization, while simple in concept, lays the groundwork for much more sophisticated text analysis tasks. Without effective tokenization, it’s challenging to apply more advanced techniques such as part-of-speech tagging, named entity recognition, or machine translation.
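Word and sentence tokenization are straightforward to picture, so the sketch below focuses on the character and subword cases. The subword part is a toy version of byte-pair encoding merge learning; the word frequencies are hypothetical, and real implementations add end-of-word markers and far larger vocabularies.

```python
from collections import Counter


def most_frequent_pair(words):
    """Find the adjacent symbol pair with the highest total frequency."""
    pairs = Counter()
    for symbols, freq in words.items():
        for a, b in zip(symbols, symbols[1:]):
            pairs[(a, b)] += freq
    return max(pairs, key=pairs.get) if pairs else None


def merge_pair(words, pair):
    """Replace every occurrence of the pair with one merged symbol."""
    merged = {}
    for symbols, freq in words.items():
        out, i = [], 0
        while i < len(symbols):
            if i + 1 < len(symbols) and (symbols[i], symbols[i + 1]) == pair:
                out.append(symbols[i] + symbols[i + 1])
                i += 2
            else:
                out.append(symbols[i])
                i += 1
        key = tuple(out)
        merged[key] = merged.get(key, 0) + freq
    return merged


def learn_bpe(word_freqs, num_merges):
    """Start from characters and repeatedly merge the most frequent pair."""
    words = {tuple(word): freq for word, freq in word_freqs.items()}
    merges = []
    for _ in range(num_merges):
        pair = most_frequent_pair(words)
        if pair is None:
            break
        words = merge_pair(words, pair)
        merges.append(pair)
    return merges, words


# Character tokenization is simply a split into single characters.
print(list("lower"))  # ['l', 'o', 'w', 'e', 'r']

# Hypothetical word frequencies from a tiny corpus.
merges, segmented = learn_bpe({"low": 5, "lower": 2, "lowest": 3}, num_merges=2)
print(merges)
print(list(segmented))
```

After two merges, the frequent sequence "low" has become a single symbol, while the rarer endings of "lower" and "lowest" are still split into characters. This is how subword vocabularies keep common words whole and break uncommon ones into reusable pieces.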

Challenges with Ambiguity in Language

Ambiguity is a pervasive issue in textual data that poses significant challenges when attempting to interpret or analyze meaning. Words, phrases, or sentences can often be understood in multiple ways depending on context, leading to confusion or misinterpretation. Such uncertainty is inherent in many forms of communication and can severely impact the accuracy of computational models designed to understand or generate text.

Types of Ambiguity

| Type of Ambiguity | Description |
|---|---|
| Lexical Ambiguity | Occurs when a word has multiple meanings, such as the word “bank” (financial institution or the side of a river). |
| Syntactic Ambiguity | Happens when a sentence can be parsed in multiple ways. For example, “I saw the man with the telescope” could imply either the man had a telescope or the observer used a telescope. |
| Semantic Ambiguity | Refers to situations where a sentence has more than one meaning even when its structure is clear, such as “Every student read a book” (one shared book, or a different book for each student). |
| Pragmatic Ambiguity | Emerges when the context in which something is said leaves room for various interpretations, affecting the understanding of the intended message. |

Addressing Ambiguity in Models

Dealing with ambiguity requires robust techniques in text analysis. Some common methods include:

- Contextual Analysis: Understanding the surrounding text to clarify the intended meaning of ambiguous elements.

- Disambiguation Algorithms: Using machine learning models to predict the correct meaning of ambiguous terms based on training data and context.

- Part-of-Speech Tagging: Assigning grammatical categories to words in a sentence to reduce ambiguity in interpretation.

- Semantic Role Labeling: Identifying the roles that words or phrases play within a sentence to disambiguate meanings.

While complete elimination of ambiguity may not always be possible, these techniques help to minimize its impact, leading to more accurate understanding and processing of textual data.
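One classic way to combine contextual analysis with disambiguation is a simplified Lesk approach: pick the sense whose dictionary definition shares the most words with the surrounding context. The sketch below assumes a hypothetical two-sense inventory for "bank" with hand-written glosses; real systems draw senses from a lexicon such as WordNet or use trained models.

```python
# Small illustrative stopword list.
STOPWORDS = {"the", "a", "an", "of", "on", "to", "that", "and", "or",
             "i", "my", "at", "in"}

# Hypothetical sense inventory with hand-written glosses.
SENSES = {
    "bank/finance": "an institution that accepts deposits of money and makes loans",
    "bank/river": "the sloping land beside a river or stream of water",
}


def content_words(text):
    """Lowercase, split on whitespace, and drop stopwords."""
    return {word for word in text.lower().split() if word not in STOPWORDS}


def simplified_lesk(context, senses):
    """Choose the sense whose gloss overlaps most with the context."""
    context_words = content_words(context)
    return max(
        senses,
        key=lambda sense: len(context_words & content_words(senses[sense])),
    )


print(simplified_lesk("I deposited money at the bank", SENSES))       # bank/finance
print(simplified_lesk("We fished from the bank of the river", SENSES))  # bank/river
```

The method relies on exact word overlap, so it fails when the context and the gloss use different words for the same idea. That weakness is one reason embedding-based and supervised approaches are often preferred.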

Tips for Mastering NLP Concepts

Successfully mastering key concepts in computational text analysis requires a combination of understanding theoretical principles, practicing with real-world data, and honing technical skills. The ability to apply these concepts effectively often comes with experience, but there are certain strategies that can accelerate learning and deepen comprehension. These strategies range from strengthening foundational knowledge to engaging with practical applications that reinforce theoretical lessons.

Here are some essential tips for mastering the key ideas in this field:

- Understand Core Theories: Ensure a solid grasp of fundamental topics, such as syntactic structures, semantic interpretation, and statistical models. These are the building blocks for more advanced concepts.

- Practice Regularly: Apply theoretical concepts to hands-on tasks, such as tokenization, part-of-speech tagging, and machine learning model training. Real-world experience helps reinforce abstract concepts.

- Engage with Open-Source Tools: Use widely adopted libraries and frameworks, such as NLTK, spaCy, or TensorFlow, to implement algorithms and workflows. This will deepen your understanding of how these models work in practice.

- Review Case Studies: Examine case studies or research papers on applied tasks like sentiment analysis or information extraction. This provides insight into how concepts are implemented to solve complex challenges.

- Collaborate with Peers: Joining study groups or online forums can provide opportunities for discussion, problem-solving, and feedback. Explaining difficult concepts to others can also help reinforce your own understanding.

- Stay Current: The field evolves quickly, so it’s important to follow the latest research, methodologies, and technological developments. Engage with blogs, online courses, and academic journals.

By incorporating these strategies into your study routine, you can develop a deep and practical understanding of the most important concepts in text analysis, equipping you for success in both academic and professional settings.