In any technical field, understanding core principles is essential for mastering the subject and excelling in evaluations. This section aims to explore fundamental topics related to artificial intelligence models, focusing on how these concepts are commonly tested. A solid grasp of these principles is crucial for answering typical problems presented in assessments.

Various methods of model training, architecture choices, and optimization techniques play a significant role in shaping the performance of these systems. By addressing common challenges, the section helps you prepare for tackling related problems effectively. It is not only about memorizing formulas but also understanding their application in solving real-world tasks.

Key areas to focus on include the mechanics of building systems that can learn from data, the challenges of model evaluation, and the process of enhancing accuracy through various strategies. With practice, these concepts become intuitive and easier to apply to unfamiliar problems.

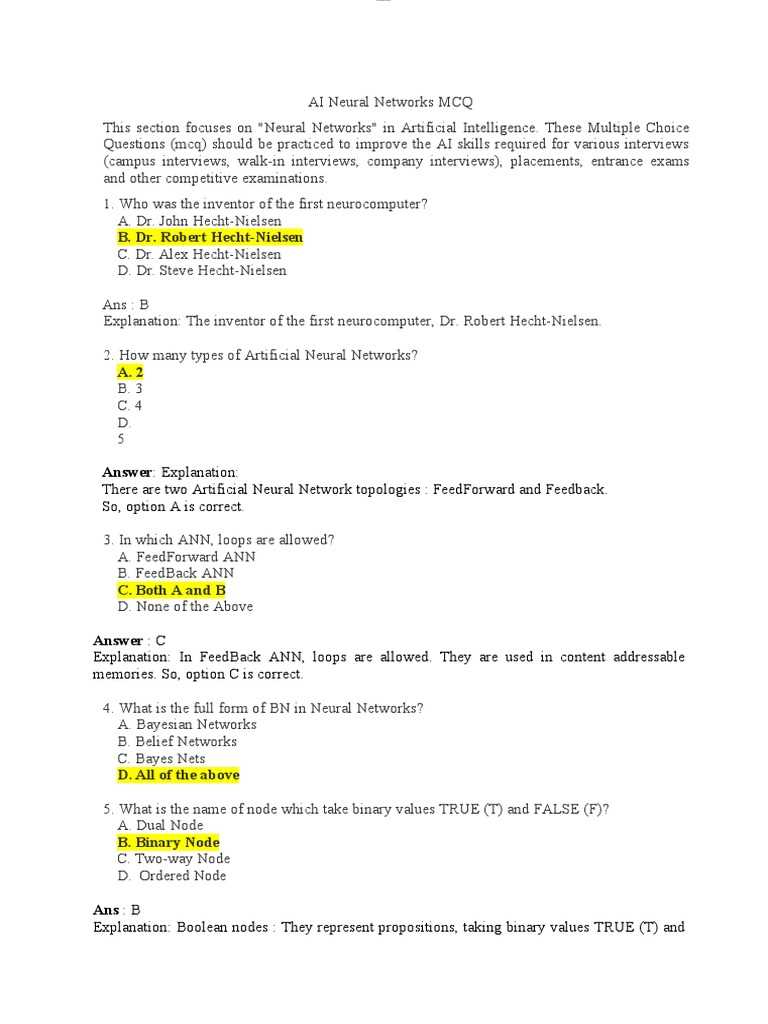

Neural Network Exam Questions and Answers

Preparing for assessments related to artificial intelligence systems involves understanding key principles and techniques that are frequently evaluated. A deep knowledge of how these intelligent models function, learn, and make predictions is vital for solving problems efficiently. This section will cover typical challenges you may encounter, providing insights into the types of topics commonly tested.

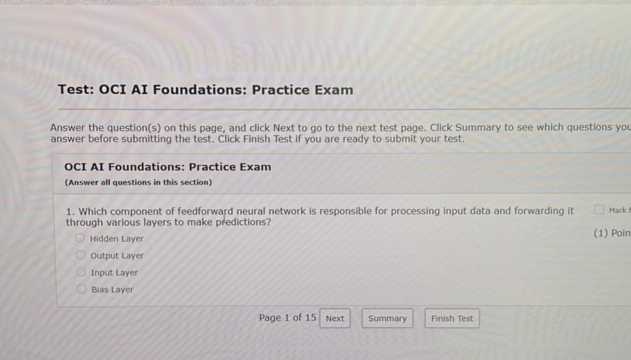

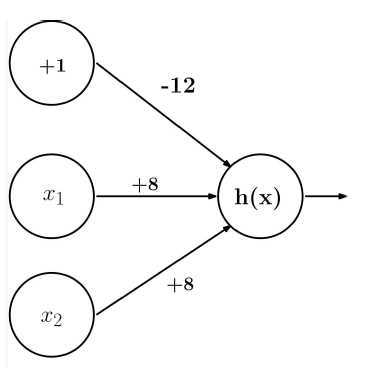

One common area often explored in evaluations is the architecture of different models. Understanding the structure of computational systems and how they process data is critical. Being able to explain concepts such as the layers, the flow of information, and the impact of various parameters on the output will help you tackle a wide range of problems.

In addition to architectural knowledge, optimization techniques play a central role in boosting the effectiveness of these models. Expect questions that involve methods to fine-tune system performance, from adjusting learning rates to implementing advanced algorithms for faster convergence.

Lastly, it is crucial to grasp the concept of overfitting and underfitting. These challenges arise when a model becomes too specific or too general, leading to suboptimal predictions. Recognizing the signs of each and understanding how to address them are common themes in related assessments.

Understanding the Basics of Neural Networks

At the core of many intelligent systems lies a way of processing data that is loosely inspired by the human brain. The idea is to connect many simple units so that, together, they can recognize patterns, make predictions, or classify data. Grasping the basic structure and function of these systems is essential for building a solid foundation in artificial intelligence.

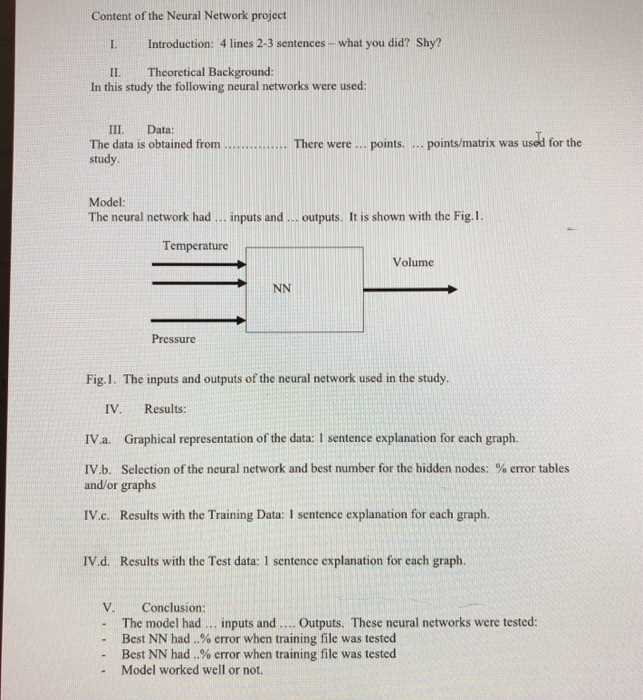

The Architecture of Intelligent Models

The structure of these systems typically consists of multiple layers that each serve a specific purpose. These include the input layer, hidden layers, and output layer. Each layer contains units that are responsible for processing information and passing it to the next layer. Understanding how data flows through these layers and how weights are adjusted during training is a key concept.
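The flow of data through the layers can be illustrated with a minimal NumPy sketch. The layer sizes, the random weights, and the `forward` helper are assumptions chosen for illustration, not a reference implementation.

```python
import numpy as np

def forward(x, params):
    """Pass a batch of inputs through one hidden layer and an output layer."""
    W1, b1, W2, b2 = params
    hidden = np.maximum(0.0, x @ W1 + b1)  # hidden layer: weighted sum, then ReLU
    return hidden @ W2 + b2                # output layer: raw scores

rng = np.random.default_rng(0)

# Hypothetical sizes: 4 input features, 8 hidden units, 3 outputs.
params = (
    rng.normal(size=(4, 8)), np.zeros(8),
    rng.normal(size=(8, 3)), np.zeros(3),
)

batch = rng.normal(size=(5, 4))  # 5 examples with 4 features each
outputs = forward(batch, params)
print(outputs.shape)
```

Each row of `batch` moves from the input layer through the hidden layer to the output layer, so the result has one row per example and one column per output unit.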

Learning Process and Training Methods

Training these systems involves adjusting the weights of the units based on the data they process. The goal is to minimize the difference between predicted and actual outcomes. This is achieved through various optimization techniques that help fine-tune the system’s parameters, allowing it to learn from past data and improve over time. Familiarity with these learning processes is crucial for mastering more advanced topics.

Key Concepts to Focus on for Exams

Mastering the fundamental principles behind intelligent systems is essential for success in evaluations. Focusing on core topics will ensure you are well-prepared to tackle a wide range of challenges. This section highlights key areas to concentrate on, providing a clear understanding of the most critical aspects that are frequently tested.

Core Algorithms and Techniques

One of the primary areas to focus on is the variety of algorithms used to train models effectively. These include optimization techniques such as gradient descent and methods for fine-tuning system parameters. A solid understanding of how these algorithms adjust based on feedback is crucial for answering more advanced queries.

Performance Evaluation and Metrics

Another important concept to review is the evaluation of system performance. Familiarize yourself with key metrics such as accuracy, precision, recall, and F1 score, as these are commonly used to assess the effectiveness of a model. Understanding how to interpret these metrics will help in formulating well-informed solutions to practical problems.
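As a small worked example, the four classification metrics can be computed by hand from the counts of true and false positives and negatives. The labels below are made up for illustration, and `classification_metrics` is a hypothetical helper rather than a library function.

```python
def classification_metrics(y_true, y_pred):
    """Compute accuracy, precision, recall and F1 for binary labels (0 or 1)."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == 1 and p == 1)  # true positives
    fp = sum(1 for t, p in pairs if t == 0 and p == 1)  # false positives
    fn = sum(1 for t, p in pairs if t == 1 and p == 0)  # false negatives
    tn = sum(1 for t, p in pairs if t == 0 and p == 0)  # true negatives

    accuracy = (tp + tn) / len(pairs)
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    f1 = (2 * precision * recall / (precision + recall)
          if (precision + recall) else 0.0)
    return {"accuracy": accuracy, "precision": precision,
            "recall": recall, "f1": f1}

# Made-up labels: 3 true positives, 1 false positive,
# 1 false negative, 3 true negatives.
y_true = [1, 0, 1, 1, 0, 0, 1, 0]
y_pred = [1, 0, 0, 1, 0, 1, 1, 0]
metrics = classification_metrics(y_true, y_pred)
print(metrics)
```

With these labels all four metrics come out to 0.75, which is a coincidence of the chosen counts; on imbalanced data the metrics usually diverge, which is why they are reported together.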

Common Questions on Neural Network Models

When preparing for assessments on artificial intelligence systems, it is important to be familiar with the types of challenges that typically arise. Understanding how these systems function, how they are structured, and how they can be applied to real-world problems is crucial for answering questions effectively. This section focuses on some of the most common topics that may appear in evaluations.

Architecture and Structure

One area that is often tested involves the different layers and components of intelligent systems. Expect to encounter questions about how the input, hidden, and output layers work together, as well as the role each layer plays in data processing. Understanding the flow of information through these layers is essential for explaining how a system learns and makes decisions.

Training and Optimization Techniques

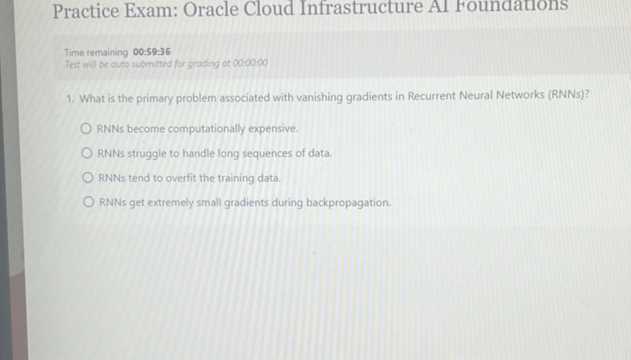

Another common topic centers around the process of training models and improving their accuracy. Be prepared to discuss various techniques, such as gradient descent and backpropagation, which are used to adjust model parameters during training. Knowing how to optimize a model’s performance and prevent issues like overfitting is a key area of focus.

Important Algorithms in Neural Networks

In the realm of intelligent systems, algorithms are the backbone that drives learning and decision-making processes. These computational methods allow models to adapt, improve, and make predictions based on data. This section highlights key algorithms that are essential for building effective models and ensuring they can learn efficiently from the provided input.

Among the most significant algorithms is gradient descent, which is commonly used for optimizing models by adjusting parameters in response to the error between predicted and actual values. Additionally, backpropagation is another fundamental algorithm, enabling systems to learn from mistakes by propagating errors backward through the layers to adjust weights and minimize discrepancies.
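A minimal sketch of gradient descent, assuming a one-variable linear model and a mean squared error loss. The data, learning rate, and step count are illustrative choices, not recommended settings.

```python
import numpy as np

# Targets generated from a known line (slope 2, intercept 1), so we can
# see whether gradient descent recovers those values.
x = np.linspace(-1.0, 1.0, 20)
y = 2.0 * x + 1.0

w, b = 0.0, 0.0        # parameters start far from the solution
learning_rate = 0.1    # illustrative value

for step in range(500):
    pred = w * x + b
    error = pred - y                   # difference between predicted and actual
    grad_w = 2.0 * np.mean(error * x)  # d(MSE)/dw
    grad_b = 2.0 * np.mean(error)      # d(MSE)/db
    w -= learning_rate * grad_w        # step against the gradient
    b -= learning_rate * grad_b

print(round(float(w), 3), round(float(b), 3))
```

Each iteration moves the parameters a small step in the direction that reduces the error, so `w` and `b` approach the slope and intercept used to generate the data.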

Other important algorithms are variants of these optimization techniques, such as stochastic gradient descent (SGD) and Adam, each offering different trade-offs between speed and stability. These methods are crucial for fine-tuning systems to perform well on a wide range of tasks.

Training Techniques for Neural Networks

Effective training is essential for ensuring that intelligent systems can learn from data and make accurate predictions. Several techniques help improve the learning process, allowing models to generalize well on unseen data while avoiding issues like overfitting. This section outlines some of the most important training strategies used in artificial intelligence development.

- Supervised Learning: This approach involves training a model on labeled data, where both the input and the correct output are provided. The model learns to map inputs to outputs by minimizing the difference between its predictions and actual values.

- Unsupervised Learning: In this case, the model learns patterns from data without predefined labels. This method is useful for clustering, dimensionality reduction, and discovering hidden structures in data.

- Reinforcement Learning: This technique is based on reward-based learning, where the model takes actions within an environment and learns from the consequences of those actions, improving its behavior over time.

In addition to these basic approaches, several other training methods help enhance model performance:

- Batch Gradient Descent: The entire dataset is used to compute the gradient at each step. Every update follows the exact gradient of the training loss, which makes training stable but expensive on large datasets.

- Stochastic Gradient Descent (SGD): A variant that uses only a single sample at each iteration. Each update is much cheaper, so parameters are updated more often, though the gradient estimates are noisier.

- Mini-Batch Gradient Descent: A compromise between the previous two methods, using a small subset of data for each step, balancing efficiency and stability.
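The three gradient descent variants above differ only in how many samples feed each update. The sketch below makes that concrete with a hypothetical `iterate_batches` helper; the toy arrays and batch sizes are assumptions for illustration.

```python
import numpy as np

def iterate_batches(X, y, batch_size, rng):
    """Yield shuffled (inputs, targets) batches covering the dataset once."""
    order = rng.permutation(len(X))
    for start in range(0, len(X), batch_size):
        idx = order[start:start + batch_size]
        yield X[idx], y[idx]

rng = np.random.default_rng(0)
X = np.arange(20, dtype=float).reshape(10, 2)  # 10 toy samples
y = np.arange(10, dtype=float)

# Number of parameter updates per pass over the data for each variant.
updates_per_epoch = {
    "batch": len(list(iterate_batches(X, y, len(X), rng))),  # whole dataset
    "stochastic": len(list(iterate_batches(X, y, 1, rng))),  # one sample
    "mini-batch": len(list(iterate_batches(X, y, 4, rng))),  # small subset
}
print(updates_per_epoch)
```

With 10 samples, batch gradient descent makes one update per pass, stochastic gradient descent makes ten, and a mini-batch size of 4 makes three (the last batch holds the two remaining samples).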

By understanding and applying these techniques, you can enhance the performance of artificial systems and ensure they are capable of learning complex tasks efficiently.

Overfitting and Underfitting in Neural Networks

When training artificial intelligence systems, two common issues arise: the model fits the training data but fails to generalize, or it fails to capture the underlying patterns in the data at all. These problems, overfitting and underfitting, can significantly impact a model’s performance, making it essential to understand and address them during the training process.

Overfitting: Learning Too Much

Overfitting occurs when a model learns the details and noise of the training data to the extent that it negatively impacts its performance on new, unseen data. Essentially, the model becomes too tailored to the training set, capturing patterns that are not applicable outside that specific data. This often happens when the model is too complex, with too many parameters relative to the amount of data available.

Underfitting: Not Learning Enough

Underfitting, on the other hand, happens when the model is too simple or not trained long enough, failing to capture important patterns in the data. In this case, the model’s performance is poor on both the training set and new data. It lacks the complexity needed to understand the underlying structure of the problem, often resulting in an overly simplistic model that cannot perform well.

To address these issues, it’s essential to strike a balance. Regularization techniques and data augmentation help counter overfitting, while increasing model capacity or training for longer helps counter underfitting. Careful selection of model complexity keeps the system between the two extremes, so that it generalizes well across different datasets.
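The effect of model complexity can be sketched without a neural network at all. In the example below, polynomial degree stands in for model capacity; that substitution, the noisy sine data, and the chosen degrees are all assumptions made for illustration.

```python
import numpy as np
from numpy.polynomial import Polynomial

rng = np.random.default_rng(0)

def make_data(n):
    """Noisy samples of a sine curve (an illustrative toy problem)."""
    x = rng.uniform(0.0, 1.0, size=n)
    y = np.sin(2 * np.pi * x) + rng.normal(scale=0.2, size=n)
    return x, y

x_train, y_train = make_data(15)   # small training set
x_val, y_val = make_data(200)      # held-out data

def mse(model, x, y):
    return float(np.mean((model(x) - y) ** 2))

results = {}
for degree in (1, 3, 9):           # too simple, moderate, very flexible
    model = Polynomial.fit(x_train, y_train, degree)
    results[degree] = (mse(model, x_train, y_train), mse(model, x_val, y_val))

for degree, (train_err, val_err) in results.items():
    print(f"degree {degree}: train MSE {train_err:.3f}, "
          f"validation MSE {val_err:.3f}")
```

Training error can only go down as the degree grows, so it is the gap between training and validation error that signals overfitting. A high error on both sets, as a straight line fit to a sine curve tends to give, signals underfitting.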

Activation Functions Explained for Exams

Activation functions are a crucial component in the functioning of intelligent systems, as they determine the output of each unit or node in the model. These functions introduce non-linearity, allowing the system to learn from data in a more complex way and solve problems that cannot be addressed through simple linear equations. This section will explore the most commonly used activation functions and their roles in training models effectively.

Activation functions work by taking the weighted sum of a unit’s inputs (plus a bias term) and passing it through a mathematical function, which generates an output used for the next layer or decision-making step. By incorporating non-linearity, these functions enable the model to capture more intricate patterns in the data, which is essential for tasks such as classification, regression, and pattern recognition.

Several types of activation functions are commonly used in practice. Some of the most important include:

- Sigmoid: This function produces an output between 0 and 1, so it can be read as a probability. It is commonly used in the output layer for binary classification tasks.

- ReLU (Rectified Linear Unit): A popular function that outputs the input directly if it is positive, and zero otherwise. It is widely used due to its simplicity and efficiency in training deep models.

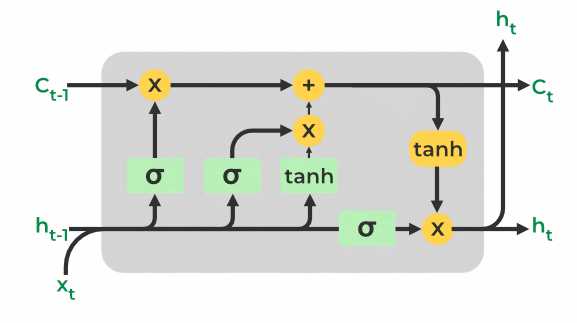

- Tanh (Hyperbolic Tangent): Similar to the sigmoid, but with an output range between -1 and 1. It is often used in recurrent models and in situations where zero-centered outputs are helpful.

- Softmax: Typically used in the output layer for multi-class classification, as it converts raw output values into probabilities.

Each activation function has its strengths and weaknesses, making it important to choose the appropriate one based on the task at hand. Understanding how each function works and its impact on model performance is essential for mastering artificial intelligence systems.
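The four functions listed above are short enough to write out directly. This is a minimal NumPy sketch; subtracting the maximum inside `softmax` is a common numerical-stability step added here and is not something the text above prescribes.

```python
import numpy as np

def sigmoid(z):
    """Squash values into the range (0, 1)."""
    return 1.0 / (1.0 + np.exp(-z))

def relu(z):
    """Pass positive values through unchanged and replace the rest with zero."""
    return np.maximum(0.0, z)

def tanh(z):
    """Squash values into the range (-1, 1)."""
    return np.tanh(z)

def softmax(z):
    """Convert raw scores into probabilities that sum to 1 along the last axis."""
    shifted = z - np.max(z, axis=-1, keepdims=True)  # avoids overflow in exp
    exp = np.exp(shifted)
    return exp / np.sum(exp, axis=-1, keepdims=True)

scores = np.array([-2.0, 0.0, 3.0])
print(sigmoid(scores))
print(relu(scores))
print(tanh(scores))
print(softmax(scores))
```

Sigmoid, ReLU, and tanh act on each value independently, while softmax depends on the whole vector of scores, which is why it is applied to the output layer as a unit.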

Neural Network Optimization Methods

Optimizing models is crucial for improving their performance and ensuring that they make accurate predictions. The process of optimization involves adjusting the model’s parameters to minimize errors and enhance learning efficiency. This section explores several key methods that help in fine-tuning models, making them more effective in handling complex tasks.

One of the most commonly used techniques is gradient descent, which helps find the optimal parameters by iteratively adjusting them in the direction that minimizes the loss function. Variants of this method, such as stochastic gradient descent (SGD) and mini-batch gradient descent, offer different trade-offs between speed and stability during training.

Another important approach is the use of regularization methods like L2 and L1 regularization, which prevent the model from becoming too complex and overfitting the data. These techniques add penalty terms to the loss function, discouraging the model from learning overly intricate patterns that may not generalize well to new data.
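To make the penalty terms concrete, the sketch below adds L1 and L2 penalties to a mean squared error loss. The `regularized_loss` helper, the coefficient names `l1` and `l2`, and the sample values are assumptions for illustration.

```python
import numpy as np

def regularized_loss(pred, target, weights, l1=0.0, l2=0.0):
    """Mean squared error plus optional L1 and L2 penalties on the weights."""
    mse = np.mean((pred - target) ** 2)
    l1_penalty = l1 * np.sum(np.abs(weights))  # encourages sparse weights
    l2_penalty = l2 * np.sum(weights ** 2)     # discourages large weights
    return float(mse + l1_penalty + l2_penalty)

pred = np.array([1.0, 2.0])
target = np.array([1.0, 4.0])
weights = np.array([1.0, -2.0])

plain = regularized_loss(pred, target, weights)            # MSE only
with_l1 = regularized_loss(pred, target, weights, l1=0.1)  # MSE + 0.1 * 3
with_l2 = regularized_loss(pred, target, weights, l2=0.1)  # MSE + 0.1 * 5
print(plain, with_l1, with_l2)
```

Because the penalty grows with the size of the weights, minimizing the combined loss trades a little training accuracy for smaller weights and, in many cases, better generalization.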

Additionally, optimization can be enhanced through techniques like learning rate schedules and adaptive learning rates. A schedule changes the learning rate as training progresses, while methods such as Adam or Adagrad adapt the step size for each parameter based on the history of its gradients, which often improves convergence speed.
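The Adam update rule can be sketched in a few lines. The version below follows the commonly published form of the algorithm with its usual default decay rates; the `adam_step` helper, the toy objective, and the learning rate of 0.1 are assumptions chosen to keep the example short.

```python
import numpy as np

def adam_step(w, grad, state, lr=0.1, beta1=0.9, beta2=0.999, eps=1e-8):
    """Apply one Adam update to w and return the new value."""
    state["t"] += 1
    # Running averages of the gradient and of the squared gradient.
    state["m"] = beta1 * state["m"] + (1 - beta1) * grad
    state["v"] = beta2 * state["v"] + (1 - beta2) * grad ** 2
    # Bias correction compensates for the zero initialization.
    m_hat = state["m"] / (1 - beta1 ** state["t"])
    v_hat = state["v"] / (1 - beta2 ** state["t"])
    return w - lr * m_hat / (np.sqrt(v_hat) + eps)

# Toy objective: f(w) = (w - 3)^2, with its minimum at w = 3.
w = 0.0
state = {"t": 0, "m": 0.0, "v": 0.0}
first_step = None
for _ in range(500):
    grad = 2.0 * (w - 3.0)
    w = adam_step(w, grad, state)
    if first_step is None:
        first_step = w  # parameter value after the first update

print(first_step, w)
```

The first update moves the parameter by almost exactly the learning rate regardless of the gradient’s magnitude, because the step is the gradient average divided by the square root of the squared-gradient average. That normalization is what makes the method adaptive.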

By applying these methods, the model can become more efficient in learning from data, ultimately leading to better performance and generalization on unseen tasks.

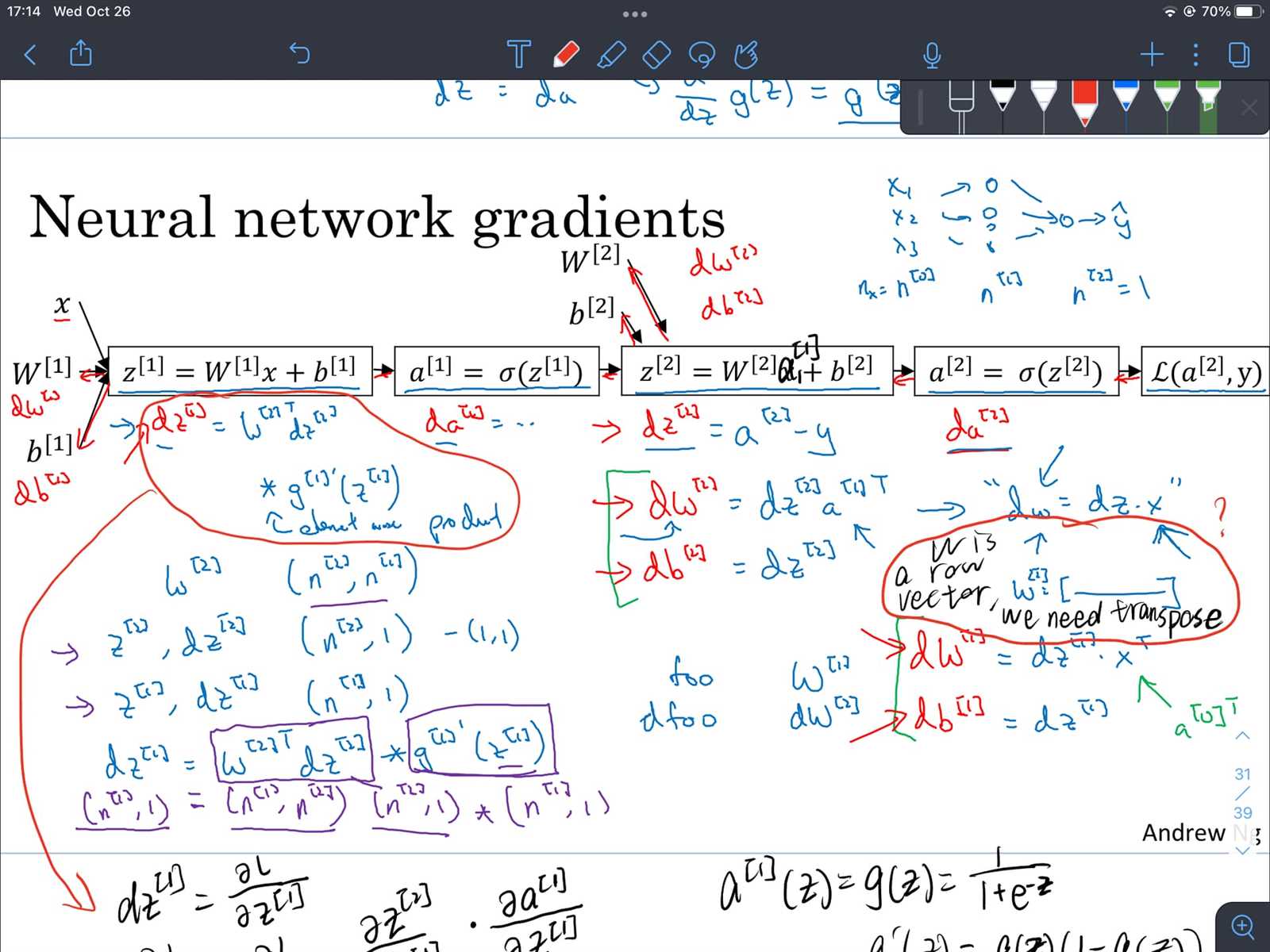

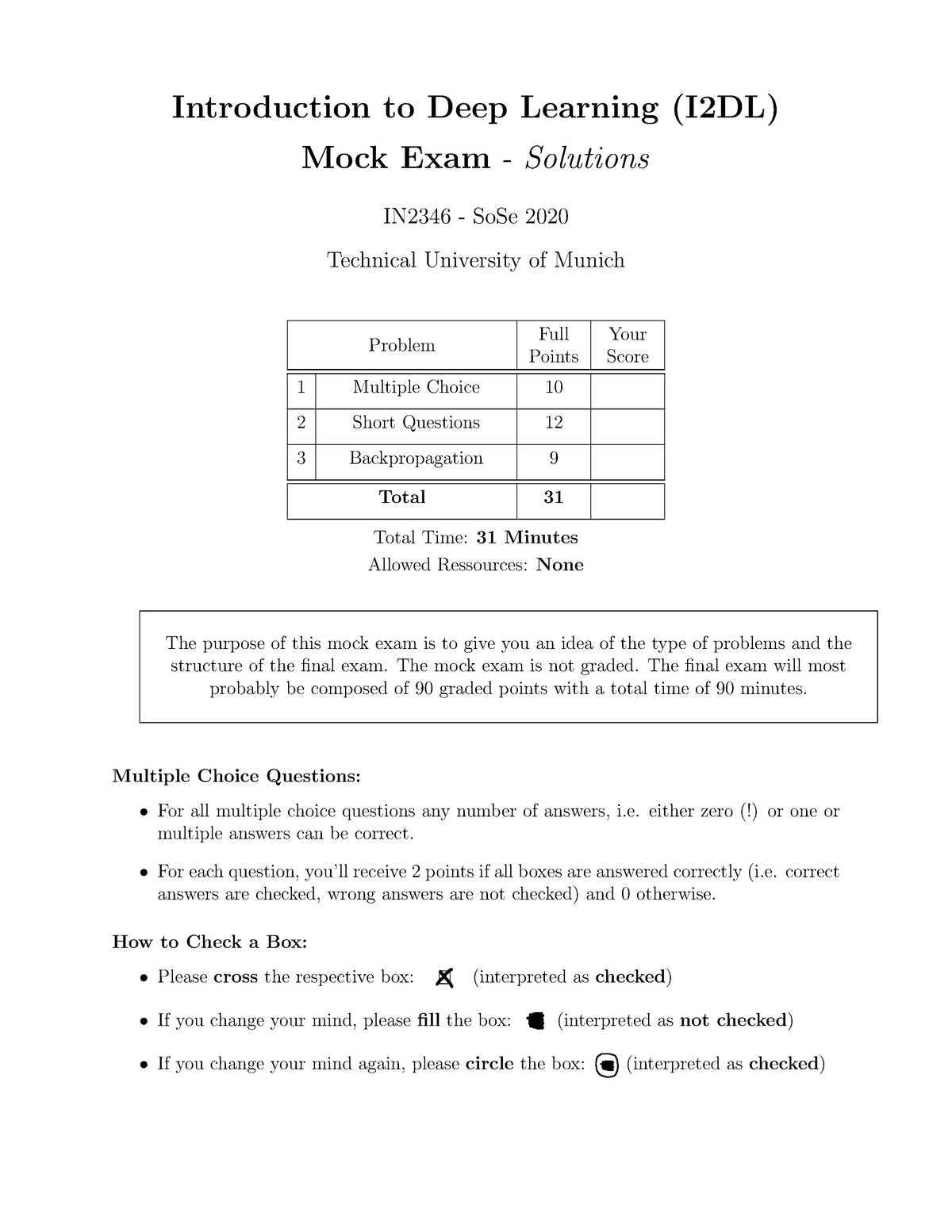

Backpropagation Process in Neural Networks

The backpropagation process is a fundamental technique used to optimize artificial systems by adjusting their internal parameters based on errors made during predictions. This process allows a model to learn by comparing its predictions to the actual results, identifying areas where improvement is necessary. The key idea behind backpropagation is to propagate the error backward through the layers of the model, computing how much each weight contributed to it, so that the weights can be updated to minimize discrepancies between the predicted and true outcomes.

Steps in the Backpropagation Process

Backpropagation involves several steps, all aimed at adjusting the model’s parameters for better accuracy:

- Forward Pass: The input data is passed through the layers, producing an output based on the current weights and biases of the model.

- Error Calculation: The difference between the predicted output and the actual target is calculated using a loss function, providing a measure of how far off the model’s predictions are.

- Backward Pass: The error is propagated backward through the layers. Gradients of the error with respect to each parameter are calculated using the chain rule of calculus.

- Weight Update: Using optimization techniques like gradient descent, the weights and biases are adjusted to reduce the error in future predictions.
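The four steps above can be traced in a small NumPy sketch. The network (one tanh hidden layer, mean squared error loss), the random data, and the learning rate are assumptions chosen to keep the example short; `loss_and_grads` is a hypothetical helper.

```python
import numpy as np

rng = np.random.default_rng(0)
X = rng.normal(size=(16, 3))   # 16 toy examples with 3 features
y = rng.normal(size=(16, 1))   # toy regression targets

W1 = rng.normal(scale=0.5, size=(3, 4)); b1 = np.zeros(4)
W2 = rng.normal(scale=0.5, size=(4, 1)); b2 = np.zeros(1)

def loss_and_grads(X, y, W1, b1, W2, b2):
    # 1. Forward pass: compute the output from the current weights.
    h = np.tanh(X @ W1 + b1)
    pred = h @ W2 + b2
    # 2. Error calculation: mean squared error loss.
    loss = float(np.mean((pred - y) ** 2))
    # 3. Backward pass: apply the chain rule layer by layer.
    d_pred = 2.0 * (pred - y) / pred.size  # dLoss/dpred
    dW2 = h.T @ d_pred
    db2 = d_pred.sum(axis=0)
    d_h = d_pred @ W2.T                    # error sent back to the hidden layer
    d_z = d_h * (1.0 - h ** 2)             # derivative of tanh
    dW1 = X.T @ d_z
    db1 = d_z.sum(axis=0)
    return loss, (dW1, db1, dW2, db2)

initial_loss, _ = loss_and_grads(X, y, W1, b1, W2, b2)

learning_rate = 0.1
for _ in range(200):
    loss, (dW1, db1, dW2, db2) = loss_and_grads(X, y, W1, b1, W2, b2)
    # 4. Weight update: plain gradient descent.
    W1 -= learning_rate * dW1; b1 -= learning_rate * db1
    W2 -= learning_rate * dW2; b2 -= learning_rate * db2

final_loss, _ = loss_and_grads(X, y, W1, b1, W2, b2)
print(initial_loss, final_loss)
```

Note that the backward pass only computes gradients. The update in step 4 is a separate choice, and swapping in a different optimizer would leave steps 1 to 3 unchanged.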

Importance of Backpropagation

Backpropagation is essential for training deep models and improving their performance over time. It allows models to learn from errors, gradually enhancing their ability to generalize to new, unseen data. This process, when combined with techniques like regularization and optimization algorithms, ensures that the system can handle complex tasks and provide accurate results across a wide range of inputs.

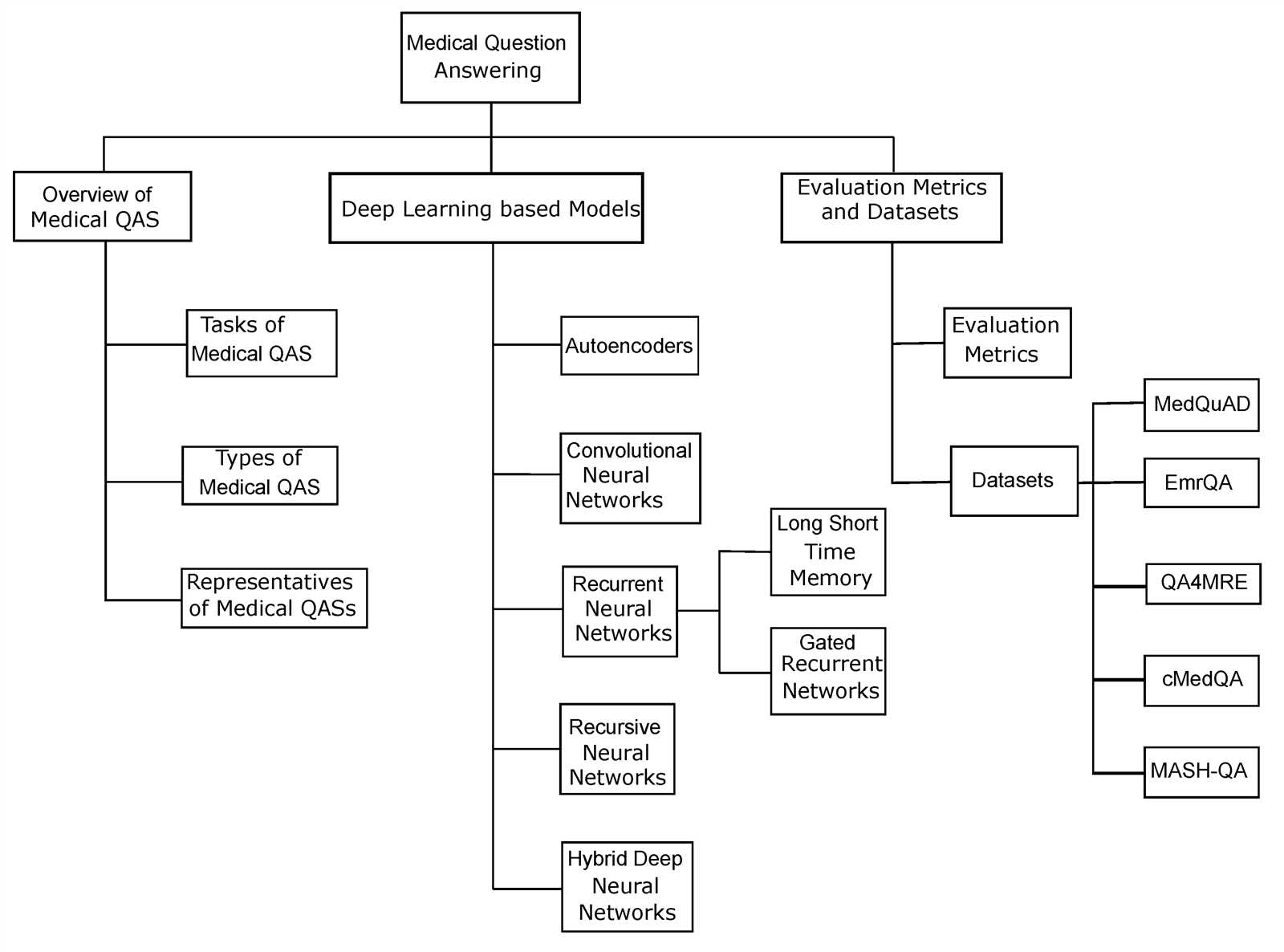

Types of Neural Networks to Study

In the field of artificial intelligence, various models are designed to solve different types of problems. Each model has its own strengths and is suited for specific tasks, from simple pattern recognition to complex decision-making systems. Understanding the different types of models and their applications is crucial for anyone looking to gain a deeper insight into the mechanics of intelligent systems.

Here are some key models to study:

- Feedforward Model: This is one of the simplest architectures, where data moves in a single direction from input to output without loops. It is commonly used in classification tasks and regression problems.

- Convolutional Model: Primarily used in image processing and pattern recognition, this model applies convolutional filters to detect features in input data, making it ideal for tasks like object recognition and computer vision.

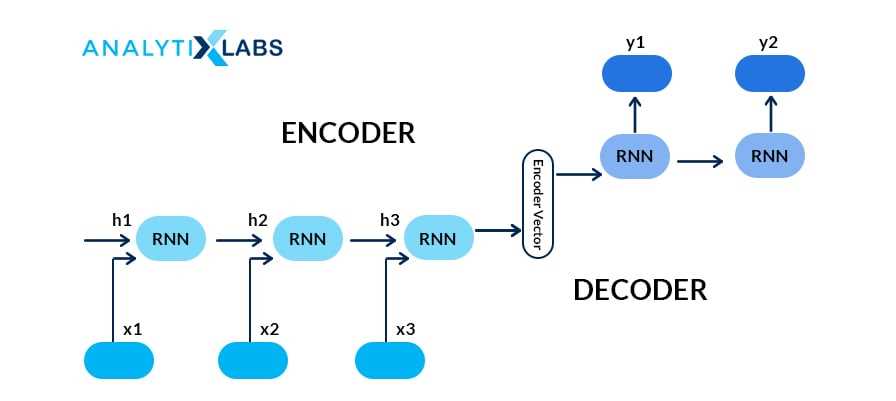

- Recurrent Model: Designed to handle sequential data, such as time series or text, this model processes information in a loop, allowing it to retain memory of past inputs. It is widely used in natural language processing and speech recognition.

- Generative Model: These models are designed to generate new data based on the patterns they have learned. They are used in tasks like image generation and data augmentation.

- Autoencoders: Typically used for unsupervised learning, these models compress input data into a lower-dimensional space and then reconstruct it. They are useful in tasks such as anomaly detection and feature extraction.

By studying these various models, one can gain a comprehensive understanding of how intelligent systems operate and how to choose the right approach for specific tasks.
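To make the idea of processing information in a loop concrete, here is a minimal sketch of a plain recurrent layer. The weight names, the sizes, and the `rnn_forward` helper are illustrative assumptions; practical recurrent models usually add gating and an output layer.

```python
import numpy as np

def rnn_forward(inputs, W_xh, W_hh, b_h):
    """Run a simple recurrent layer over a sequence and return every hidden state."""
    h = np.zeros(W_hh.shape[0])  # the memory starts empty
    states = []
    for x_t in inputs:
        # The new state mixes the current input with the previous state.
        h = np.tanh(x_t @ W_xh + h @ W_hh + b_h)
        states.append(h)
    return np.stack(states)

rng = np.random.default_rng(0)
input_size, hidden_size, steps = 3, 5, 6  # hypothetical sizes
W_xh = rng.normal(scale=0.5, size=(input_size, hidden_size))
W_hh = rng.normal(scale=0.5, size=(hidden_size, hidden_size))
b_h = np.zeros(hidden_size)

sequence = rng.normal(size=(steps, input_size))
states = rnn_forward(sequence, W_xh, W_hh, b_h)
print(states.shape)
```

The same weights are reused at every time step, and the hidden state `h` is the only channel through which earlier inputs can influence later outputs.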

How to Evaluate Neural Network Performance

Assessing the performance of a model is critical to understanding its effectiveness and identifying areas for improvement. Evaluating a model involves measuring how accurately it performs on a given task and how well it generalizes to new, unseen data. There are various metrics and methods that can be used to quantify model performance, depending on the type of task being performed, such as classification, regression, or generation.

Here are some common evaluation metrics used to measure the performance of intelligent systems:

| Metric | Application | Description |
|---|---|---|
| Accuracy | Classification | The proportion of correct predictions made by the model compared to the total predictions. |
| Precision | Classification | Measures the accuracy of positive predictions. It is the ratio of true positive predictions to the total number of positive predictions. |
| Recall | Classification | Measures the ability of the model to identify all relevant instances. It is the ratio of true positives to the total number of actual positive instances. |
| F1 Score | Classification | The harmonic mean of precision and recall, providing a balanced measure between them. |
| Mean Squared Error (MSE) | Regression | A measure of the average squared differences between predicted and actual values. |
| Root Mean Squared Error (RMSE) | Regression | The square root of the mean squared error, providing an error metric with the same units as the output variable. |
| R-squared | Regression | A measure of how well the model explains the variability in the data. A value closer to 1 indicates better performance. |

By using these metrics, one can quantitatively assess the model’s performance and make adjustments to improve its accuracy and generalization capabilities. Additionally, cross-validation and testing on separate datasets are vital steps to ensure that the model performs well on unseen data and is not overfitting to the training set.
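The regression metrics in the table follow directly from their definitions. The sketch below computes them for a handful of made-up values; `regression_metrics` is a hypothetical helper, not a library function.

```python
import numpy as np

def regression_metrics(y_true, y_pred):
    """Compute MSE, RMSE and R-squared for two equally sized arrays."""
    y_true = np.asarray(y_true, dtype=float)
    y_pred = np.asarray(y_pred, dtype=float)
    residuals = y_true - y_pred
    mse = float(np.mean(residuals ** 2))
    rmse = float(np.sqrt(mse))
    ss_res = float(np.sum(residuals ** 2))                   # unexplained variation
    ss_tot = float(np.sum((y_true - np.mean(y_true)) ** 2))  # total variation
    r2 = 1.0 - ss_res / ss_tot
    return {"mse": mse, "rmse": rmse, "r2": r2}

# Made-up values: two predictions are off by 1, two are exact.
scores = regression_metrics([3, 5, 7, 9], [2, 5, 8, 9])
print(scores)
```

Here the squared errors sum to 2 over four points, giving an MSE of 0.5, and the targets’ total variation around their mean is 20, giving an R-squared of 0.9.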

Convolutional Neural Networks and Their Uses

In recent years, deep learning models designed for processing visual data have significantly advanced the field of artificial intelligence. These models, capable of automatically extracting complex features from input data, have revolutionized tasks like image recognition and classification. Their primary strength lies in their ability to identify patterns and structures within data, especially when dealing with high-dimensional inputs such as images and videos.

One of the key models in this area is the convolutional approach, which has been widely adopted for tasks related to computer vision. By using specialized layers that apply filters to detect specific features, these systems can recognize objects, faces, and even nuances in text or sound. The power of this method comes from its ability to work efficiently with large amounts of visual data, making it particularly useful in industries like healthcare, security, and entertainment.
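What it means for a filter to detect a feature can be shown with a naive sliding-window sketch. The `conv2d_valid` helper, the toy image, and the edge-detecting kernel are illustrative assumptions; deep learning libraries implement the same operation far more efficiently and typically learn the kernel values during training.

```python
import numpy as np

def conv2d_valid(image, kernel):
    """Slide a kernel over a 2D image without padding (cross-correlation)."""
    kh, kw = kernel.shape
    out_h = image.shape[0] - kh + 1
    out_w = image.shape[1] - kw + 1
    out = np.zeros((out_h, out_w))
    for i in range(out_h):
        for j in range(out_w):
            # Multiply the patch by the filter and sum the result.
            out[i, j] = np.sum(image[i:i + kh, j:j + kw] * kernel)
    return out

# Toy 5x5 image: dark on the left (0), bright on the right (1).
image = np.zeros((5, 5))
image[:, 2:] = 1.0

# Hand-written filter that responds to dark-to-bright vertical edges.
kernel = np.array([[-1.0, 0.0, 1.0],
                   [-1.0, 0.0, 1.0],
                   [-1.0, 0.0, 1.0]])

feature_map = conv2d_valid(image, kernel)
print(feature_map)
```

The output is large where the window straddles the edge and zero where the patch is uniform, which is the sense in which a filter "detects" a feature. The same small set of weights is reused at every position.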

Applications of Convolutional Models:

- Image Classification: Automatically categorizing images based on their content, such as identifying animals, plants, or people in photos.

- Object Detection: Locating and identifying multiple objects within an image, which is commonly used in self-driving cars for obstacle detection.

- Facial Recognition: Analyzing facial features to recognize individuals, which has applications in security and personalized experiences.

- Medical Imaging: Identifying diseases and abnormalities in medical scans like X-rays and MRIs, aiding doctors in diagnosis and treatment planning.

- Video Analysis: Tracking objects and events over time, such as monitoring behavior in surveillance footage or analyzing motion in sports.

By leveraging these powerful models, industries can extract valuable insights from vast amounts of visual data, enhancing decision-making and improving efficiency across various applications. With continuous advancements in this area, the potential uses of convolutional systems continue to expand, making them an essential tool for modern AI development.

Common Neural Network Architectures in Exams

In the field of artificial intelligence, various architectures are used to design models that can perform complex tasks. Each architecture is tailored for different types of data or specific tasks, offering distinct advantages depending on the problem being addressed. Understanding the most common structures is essential for those preparing for assessments in this field, as they form the foundation of many test scenarios and real-world applications.

Different architectures focus on distinct challenges, such as handling sequential data, image processing, or time-series predictions. Recognizing the strengths and applications of each model can provide critical insight when studying for tests or analyzing systems in professional environments.

Popular Architectures to Focus On

Below is a table summarizing key architectures commonly encountered in assessments:

| Architecture | Primary Use | Advantages |
|---|---|---|
| Convolutional Models | Image classification, object detection | Efficient with spatial data, reduces computational load |
| Recurrent Models | Time-series forecasting, speech recognition | Handles sequential data, remembers previous inputs |
| Feedforward Models | General-purpose tasks, classification, regression | Simpler design, easy to implement and train |
| Generative Models | Data generation, image synthesis | Creates new data similar to training data |

Key Points to Remember

When preparing for assessments, it is important to grasp the functionality, use cases, and design principles behind each model. This knowledge not only aids in answering theoretical questions but also helps in practical problem-solving scenarios. Knowing how each structure works in various environments will provide a strong foundation for tackling both conceptual and application-based tasks.

Neural Network Applications in Real Life

In recent years, advanced computational models have significantly impacted various industries, demonstrating their capabilities to solve complex problems and improve everyday processes. These models are increasingly being integrated into real-world applications, making tasks more efficient and enabling innovations that were once considered impossible. Their use spans a wide range of fields, from healthcare to entertainment, transforming the way businesses operate and individuals interact with technology.

Understanding the practical uses of these models is crucial, as they continue to evolve and contribute to breakthroughs across many sectors. In this section, we explore some of the most impactful and widespread applications of these technologies in real-world scenarios.

Key Applications in Various Industries

- Healthcare: Predictive analytics and diagnostic tools assist in early disease detection, personalized treatment plans, and drug discovery, improving patient care and outcomes.

- Finance: Fraud detection systems and risk management strategies help prevent financial crimes and optimize investment portfolios for better returns.

- Autonomous Vehicles: Self-driving cars rely on these models for real-time decision-making, navigation, and obstacle avoidance, making transportation safer and more efficient.

- Entertainment: Recommendation algorithms in streaming platforms personalize content suggestions, enhancing user experience and engagement.

- Retail: Personalized shopping experiences are powered by predictive analytics, allowing businesses to tailor marketing and product offerings to individual preferences.

Impact on Daily Life

The presence of these advanced models in daily life is becoming increasingly noticeable. From voice assistants that help manage tasks to facial recognition systems that enhance security, their influence is growing. As technology continues to progress, the integration of such models into even more areas of life promises further improvements and efficiencies.

Tips for Answering Neural Network Questions

When tackling complex inquiries about computational models and their applications, it is important to approach them with a clear strategy. Mastery of the concepts and the ability to apply them correctly are key to providing precise and well-structured responses. In this section, we provide some essential tips for answering questions related to these advanced algorithms and their workings.

Key Strategies to Follow

- Understand the Terminology: Ensure you grasp the fundamental terms and concepts. Misunderstanding key terms can lead to incorrect answers, so clarify definitions before proceeding.

- Break Down the Problem: Simplify complex scenarios by breaking them down into smaller, manageable parts. Addressing one aspect at a time helps structure your response logically.

- Use Clear Examples: Concrete examples or real-life applications can enhance the clarity of your explanation. They show your understanding and provide practical context for theoretical concepts.

- Focus on Core Concepts: Concentrate on the core principles behind the model you are discussing. This could include optimization methods, common architectures, or training techniques.

- Practice Problem-Solving: Regularly solve practical problems or case studies to solidify your grasp of key topics. This also helps you recognize the patterns and frameworks for answering similar inquiries in the future.

Approach to Complex Theories

- Clarify the Process: When explaining processes, such as training algorithms or model evaluation, clearly outline each step. Use diagrams or flowcharts where possible to visualize the steps involved.

- Check Your Logic: For theoretical or algorithmic questions, ensure that your reasoning is sound. Double-check that your steps logically follow one another and lead to the correct outcome.

- Be Concise but Detailed: Offer detailed explanations without over-explaining. Provide just enough information to demonstrate your knowledge while keeping your answers focused and to the point.

By employing these tips, you can improve your ability to answer questions accurately and confidently, demonstrating a solid understanding of the subject matter.