Doing well on your upcoming assessment requires a solid understanding of core operating system (OS) concepts. By mastering key topics, you can approach the test with confidence and demonstrate your knowledge effectively. This section covers essential themes that will be addressed, along with helpful tips for preparation.

In this guide, you’ll explore common challenges, critical terminologies, and essential theories that are fundamental to the subject matter. Whether you’re reviewing key areas or refining your expertise, this content is designed to enhance your readiness. With the right approach, you can improve your performance and tackle even the most complex scenarios.

Preparation for the Practical Assessment

To excel in your upcoming evaluation, it’s crucial to develop a deep understanding of the core concepts that govern the functionality of computing frameworks. A comprehensive approach to studying will allow you to confidently tackle a wide range of topics, from foundational theories to advanced applications. Focus on honing your grasp of key subjects and practical scenarios that are often tested.

One of the most effective strategies is to break down the material into manageable sections. Begin by reviewing the most fundamental principles, ensuring you have a strong foundation. Then, gradually move on to more complex topics, exploring real-world examples to understand how theoretical knowledge is applied. Use a variety of study tools, including practice problems, to test your understanding and reinforce key ideas.

Time management plays a vital role in ensuring you’re well-prepared. Make sure to allocate enough time for each topic, balancing theory with hands-on practice. Don’t hesitate to revisit challenging areas to gain more clarity. Focus on key terms, processes, and methods that are frequently covered, and ensure you’re familiar with the common formats in which the material may appear.

Additionally, consider engaging in discussions with peers or instructors to clarify doubts and exchange insights. This collaborative approach can provide new perspectives and help you identify any gaps in your knowledge. With a systematic and focused preparation plan, you’ll be ready to confidently demonstrate your expertise when the time comes.

Key Topics to Focus On

For successful performance in your upcoming assessment, concentrating on the most relevant concepts is essential. Understanding the fundamental principles and mastering critical topics will not only help you tackle the challenges ahead but also provide a strong foundation for advanced problem-solving. Focus on areas that frequently appear and are crucial to the overall structure of the subject matter.

Critical Areas to Master

Below are some of the essential themes that are commonly tested. By thoroughly reviewing these subjects, you will be well-equipped to handle various scenarios and questions with confidence:

| Topic | Key Concepts |
|---|---|
| Resource Management | Memory Allocation, Process Scheduling, Deadlock Prevention |
| File Handling | File Structures, File Access Methods, Disk Organization |
| Security and Protection | Authentication, Encryption, Access Control Mechanisms |
| Virtualization | Hypervisors, Virtual Machines, Resource Allocation in Virtualized Environments |
| System Architecture | Hardware Interfaces, System Calls, Kernel Modes |

Focus on Practical Scenarios

It’s equally important to study practical applications of these topics. Understanding how each concept applies in real-world scenarios will improve your ability to analyze and solve problems effectively. Practice by solving case studies or exploring simulation exercises that reflect real-time challenges faced in various computing environments.

Essential Concepts for OS Exams

Understanding the foundational ideas behind the operation of modern computing environments is crucial for achieving success in your assessment. These core concepts form the backbone of the subject, and mastering them will ensure you’re equipped to address a wide range of topics. Focusing on the most vital principles will enhance both your theoretical knowledge and practical skills.

Core Areas to Focus On

Below are several critical ideas that should be well understood before the test. These concepts are central to many of the challenges presented in your evaluation:

- Processes and Threads: Understanding the difference between processes and threads, along with process control, synchronization, and communication methods.

- Memory Management: Key techniques such as paging, segmentation, and memory allocation strategies.

- File Management: Grasping file structures, access methods, and file system organization is essential.

- Input/Output Systems: Understanding I/O devices, buffering, and device drivers.

- Security and Protection: Concepts like authentication, authorization, encryption, and access control.

Practical Applications to Master

It’s not enough to know the theory behind each of these concepts; you must also be able to apply them in practical situations. Whether you’re asked to analyze a scenario or solve a problem, having hands-on experience with these topics will make a significant difference. Here are some areas where practical knowledge is key:

- Process scheduling algorithms and their real-time application.

- Memory management in virtual environments.

- File organization and how operating environments handle storage.

- Common troubleshooting techniques for resource allocation issues.

By strengthening your grasp of these concepts and practicing their application, you’ll improve your ability to tackle any challenges that come your way during the assessment.

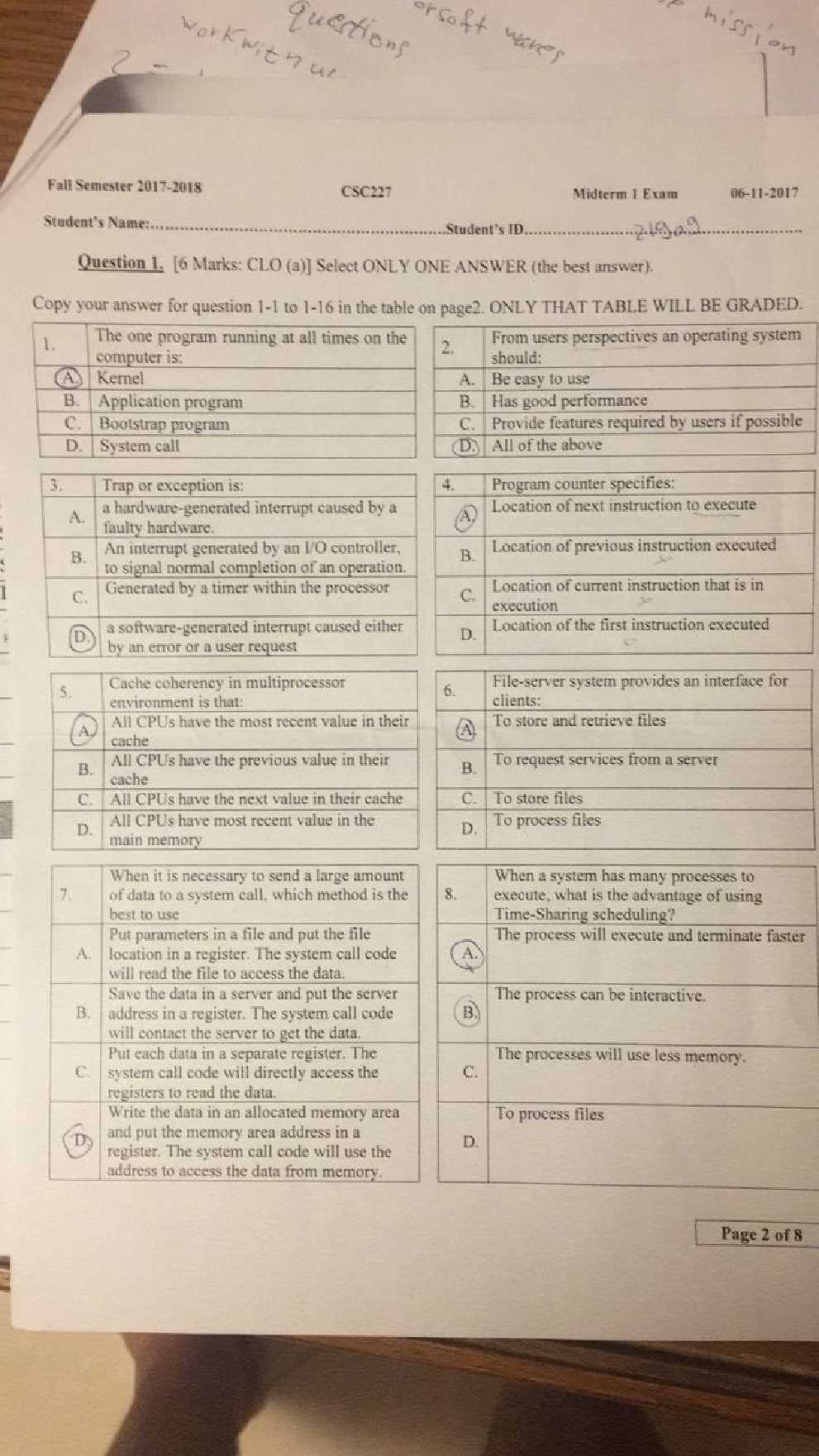

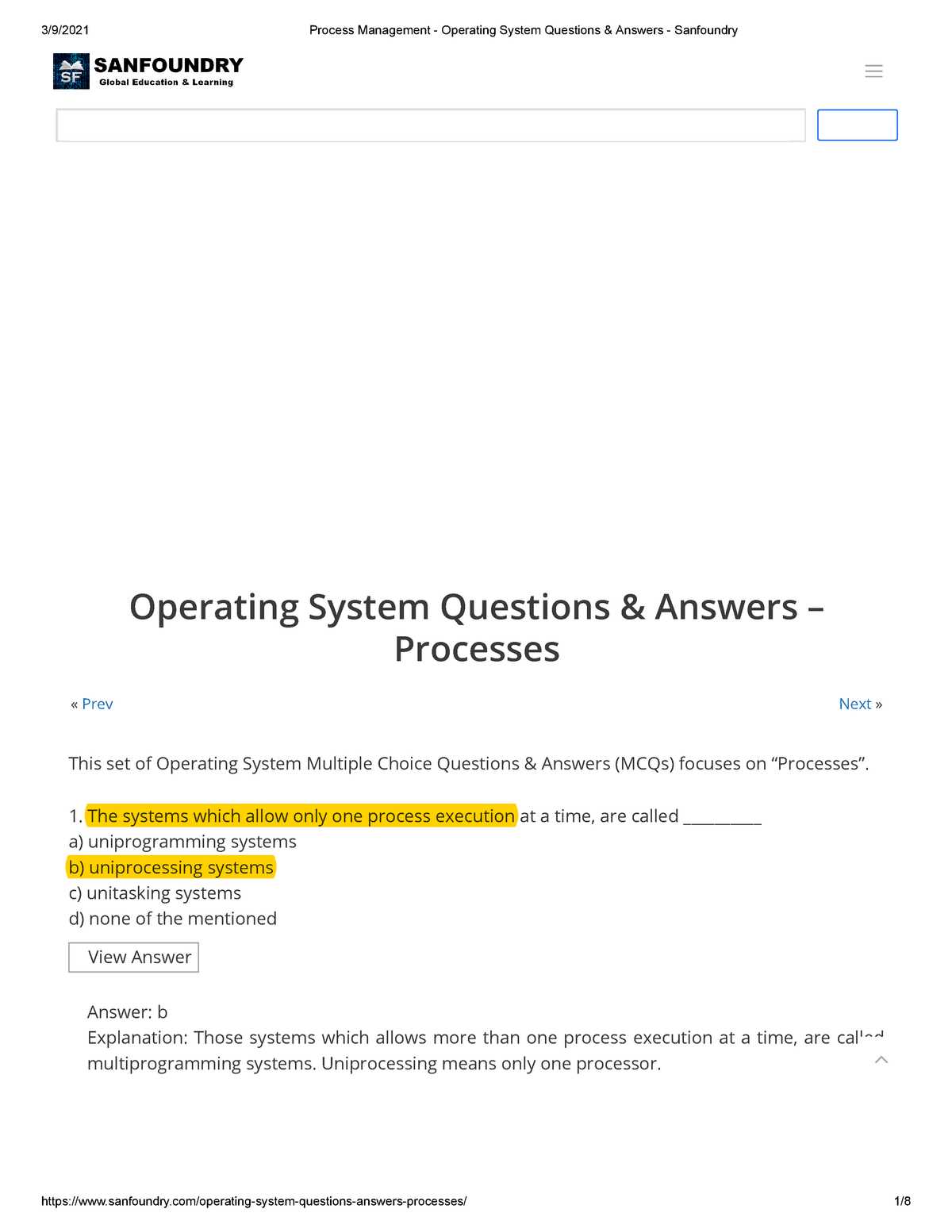

Common Questions on Process Management

One of the most critical areas to understand for your assessment involves how tasks are created, managed, and terminated within a computing environment. Efficient management of processes is key to ensuring optimal performance and resource utilization. Focusing on the most frequently tested aspects of this topic will give you a strong advantage when tackling related scenarios.

Key Topics in Process Control

Several essential concepts are frequently explored when discussing the management of active processes. It’s important to familiarize yourself with the following:

- Process Creation: How are new processes initiated, and what resources are allocated?

- Process Scheduling: What methods are used to prioritize and allocate processor time?

- Inter-process Communication: What mechanisms allow processes to communicate and synchronize?

- Process Termination: What steps are involved in safely ending a process?

- Context Switching: How does the environment handle switching between active tasks?
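The creation and termination steps listed above can be sketched with Python's standard `subprocess` module. This is only an illustration: the helper name `run_child` is hypothetical, and the example assumes a Python interpreter is reachable at `sys.executable`.

```python
import subprocess
import sys


def run_child(exit_code: int) -> int:
    """Start a child Python process that exits with exit_code and return its status."""
    # Process creation: the OS sets up a new process for the child interpreter.
    child = subprocess.Popen(
        [sys.executable, "-c", f"import sys; sys.exit({exit_code})"]
    )
    # The parent blocks until the child terminates, then collects its exit status.
    return child.wait()


if __name__ == "__main__":
    print("child exited with status", run_child(3))
```

The parent only learns how the child ended by waiting for it, which is why termination questions often ask about exit statuses and what happens when a parent never collects them.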

Real-World Applications of Process Management

Understanding the theory behind these concepts is important, but being able to apply them in practical situations is equally essential. Below are some common scenarios where process management plays a significant role:

- Handling multiple applications running concurrently in a multi-user environment.

- Managing the allocation of CPU time in a real-time processing system.

- Optimizing the efficiency of background processes versus foreground processes.

- Resolving issues when processes are stuck in a deadlock state.

By becoming proficient in these topics, you will be well-prepared to answer questions related to process management and showcase your ability to apply theoretical knowledge in real-world settings.
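For the deadlock scenario mentioned above, one common way to reason about it is a wait-for graph: if process A waits for a resource held by B, draw an edge from A to B, and a cycle means deadlock. The sketch below assumes that model; the function name `has_deadlock` and the process names are hypothetical.

```python
def has_deadlock(wait_for):
    """Return True if the wait-for graph (process -> processes it waits on) has a cycle."""
    UNVISITED, IN_PROGRESS, DONE = 0, 1, 2
    nodes = set(wait_for) | {p for targets in wait_for.values() for p in targets}
    state = {p: UNVISITED for p in nodes}

    def visit(process):
        state[process] = IN_PROGRESS
        for blocker in wait_for.get(process, []):
            # Reaching a process that is still on the current path closes a cycle.
            if state[blocker] == IN_PROGRESS:
                return True
            if state[blocker] == UNVISITED and visit(blocker):
                return True
        state[process] = DONE
        return False

    return any(state[p] == UNVISITED and visit(p) for p in nodes)


if __name__ == "__main__":
    print(has_deadlock({"P1": ["P2"], "P2": ["P1"]}))  # P1 and P2 wait on each other
    print(has_deadlock({"P1": ["P2"], "P2": ["P3"]}))  # a chain, no cycle
```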

Memory Management Challenges Explained

Effective management of memory resources is essential for the smooth operation of any computing environment. The ability to allocate, track, and release memory efficiently can significantly impact performance. In this section, we will discuss the common challenges encountered in memory handling, as well as the methods used to address them.

Key Challenges in Memory Allocation

Managing memory requires balancing several competing priorities, such as speed, efficiency, and resource utilization. Below are some common issues that arise in memory management:

- Fragmentation: Over time, memory can become fragmented, making it difficult to allocate large blocks of contiguous memory even if the total free memory is sufficient.

- Memory Leaks: Improper allocation or failure to release memory can result in memory leaks, which decrease available memory and affect system performance.

- Over-allocation: Allocating more memory than necessary can lead to inefficient resource use, potentially affecting other processes or applications.

- Under-allocation: Allocating insufficient memory can cause programs to crash or slow down due to resource shortages.
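The fragmentation item above can be made concrete with a small sketch. It assumes a simple first-fit allocator over a list of free holes; the hole sizes and the function name `first_fit` are made up for illustration.

```python
def first_fit(holes, request):
    """Return the index of the first free hole large enough for request, or None."""
    for index, size in enumerate(holes):
        if size >= request:
            return index
    return None


if __name__ == "__main__":
    holes_kb = [100, 50, 120]  # free holes scattered between allocated blocks
    request_kb = 200
    print("total free:", sum(holes_kb), "KB")
    print("hole chosen for 200 KB:", first_fit(holes_kb, request_kb))
```

Here 270 KB is free in total, yet a 200 KB request fails because no single hole is large enough. That is external fragmentation.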

Techniques to Overcome Memory Issues

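Several strategies and techniques are employed to address memory management challenges. Below are some common approaches:

- Paging and Segmentation: Dividing memory into pages or segments means a program no longer needs one large contiguous block, which reduces the impact of fragmentation.

- Compaction: Relocating allocated blocks so that free memory is merged into a single larger region.

- Prompt Deallocation: Releasing memory as soon as it is no longer needed, whether manually or through garbage collection, to prevent leaks.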
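Paging, one of the memory-management techniques named earlier in this guide, avoids the need for large contiguous blocks by mapping fixed-size pages to arbitrary physical frames. The sketch below shows the address arithmetic only. The 4096-byte page size, the dictionary-based page table, and the name `translate` are assumptions for illustration.

```python
PAGE_SIZE = 4096  # assumed page size in bytes


def translate(virtual_address, page_table):
    """Map a virtual address to a physical one using a page -> frame table."""
    page_number, offset = divmod(virtual_address, PAGE_SIZE)
    if page_number not in page_table:
        # A real system would trap to the OS here to handle the page fault.
        raise LookupError(f"page fault: page {page_number} is not mapped")
    return page_table[page_number] * PAGE_SIZE + offset


if __name__ == "__main__":
    table = {0: 5, 1: 2}  # page 0 lives in frame 5, page 1 in frame 2
    print(translate(4100, table))  # page 1, offset 4
```

Address 4100 falls in page 1 at offset 4, so it maps to frame 2 at the same offset: 2 × 4096 + 4 = 8196.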

Understanding File Systems in Detail

The way data is organized and stored on digital storage devices is crucial to how effectively it can be accessed and managed. Different methods of organizing files determine the efficiency of operations, such as saving, retrieving, or modifying data. In this section, we explore the underlying principles and structures that guide data storage, focusing on the most common formats and their functionalities.

Types of File Storage Methods

Various storage methods are used to organize files on a device, each offering different features, advantages, and limitations. Some of the most widely used storage formats include:

- FAT (File Allocation Table): An older storage structure that maps file locations in a table format. While simple and easy to use, it lacks scalability and efficiency when handling larger files or volumes of data.

- NTFS (New Technology File System): A modern, advanced structure commonly used in Windows environments. It provides support for large file sizes, file encryption, and data integrity, making it suitable for handling complex tasks.

- ext4 (Fourth Extended File System): A widely used file system for Linux-based environments. It offers journaling, good performance, and efficient storage management for large data volumes.

- HFS+ (Hierarchical File System Plus): Previously the default format for Apple devices, offering good support for file management and data integrity, although being replaced by newer formats in more recent devices.

- APFS (Apple File System): The latest file management format for macOS and iOS, optimized for modern storage devices like SSDs. It emphasizes performance, security, and efficiency in managing files across devices.

Key Concepts in File Organization

The organization of files within a storage medium is critical for efficient data access. Some key concepts to understand include:

- File Naming: Each file is given a name that serves as a unique identifier, allowing for easy retrieval and organization within directories.

- Directories or Folders: These act as containers that group related files, making it easier to manage large numbers of files.

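- Inodes: These are data structures used by certain file systems (such as ext4) to store metadata about a file, for example its size, ownership, permissions, and the location of its data blocks.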
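Where a file system uses inodes, some of the metadata kept for a file can be inspected from user space. The sketch below uses Python's standard `os.stat`; the helper name `file_metadata` is hypothetical, and the values reported depend on the platform and file system.

```python
import os
import tempfile


def file_metadata(path):
    """Return a few metadata fields that the file system keeps for path."""
    info = os.stat(path)
    return {
        "inode": info.st_ino,    # identifier of the file's metadata record
        "size": info.st_size,    # size in bytes
        "links": info.st_nlink,  # number of directory entries pointing at the file
    }


if __name__ == "__main__":
    with tempfile.NamedTemporaryFile(delete=False) as handle:
        handle.write(b"hello")
    print(file_metadata(handle.name))
    os.remove(handle.name)
```

Note that the file name is not part of this record: names live in directories, which is why one file can have several names (hard links).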

Types of Operating Systems You Must Know

Understanding the various types of operating systems, the platforms that control hardware, coordinate tasks, and allow user interaction, is essential for anyone interested in computing. Each platform is tailored to different needs, offering specific features to support a range of applications. Whether for personal use, enterprise-level tasks, or specialized functions, knowing the key types is crucial for both technical professionals and casual users alike.

Categories of Management Platforms

These platforms come in different forms, each designed to handle specific functions. The most common categories include:

- Batch Platforms: Designed to handle tasks that are processed in batches, these platforms execute jobs without user intervention. They are efficient for large-scale data processing.

- Time-Sharing Platforms: These allow multiple users to access the machine simultaneously. Resources are shared efficiently, providing each user with the illusion of having exclusive access.

- Real-Time Platforms: These are used in environments where tasks must complete within strict time limits. They are commonly found in industries like aerospace, automotive, and healthcare.

- Embedded Platforms: Found in dedicated devices like appliances and industrial machines, these platforms are optimized for a particular function with limited resources.

- Distributed Platforms: These manage multiple devices connected over a network, enabling them to function as a cohesive unit while sharing resources and data.

Popular Examples You Should Recognize

Here are some of the most commonly used platforms you should be familiar with:

- Windows: Known for its user-friendly interface, it is widely used in personal computing and business environments.

- Linux: A versatile, open-source platform often used for server environments, development, and programming tasks due to its flexibility and stability.

- macOS: This is the platform found on Apple computers, valued for its seamless integration with hardware and a design-focused interface.

- Android: Built on Linux, it is the dominant platform for mobile devices, offering an open ecosystem for app developers and users.

- RTOS (Real-Time Operating System): Aimed at environments requiring strict timing, this type of platform ensures tasks are completed within specific deadlines, crucial in industries like healthcare or automotive.

Familiarizing yourself with these platforms will provide a deeper understanding of how different environments cater to various needs. Each has its strengths, making them suitable for specific tasks and industries.

Real-World OS Applications and Use Cases

The platforms that manage hardware resources are not just theoretical concepts. They play a crucial role in nearly every aspect of our daily lives. From managing large data centers to controlling everyday gadgets, these platforms are embedded in various applications, each tailored to a specific need or environment. Understanding their real-world use cases is key to grasping their importance in both personal and professional settings.

Common Applications Across Industries

These management platforms are utilized in diverse fields, providing essential functionality across different types of environments. Some key areas where these platforms are deployed include:

- Mobile Devices: Smartphones, tablets, and wearables rely on efficient platforms to manage user interaction, communication, and resource usage. Platforms like Android and iOS are optimized for these tasks, ensuring smooth performance on mobile hardware.

- Enterprise Servers: Businesses use dedicated management platforms for their data centers and servers to handle everything from databases to web services. Platforms like Linux are often preferred in these environments for their scalability and reliability.

- Embedded Systems: Embedded platforms power devices like smart TVs, industrial machinery, automotive systems, and IoT devices. These systems require specialized, lightweight platforms that can perform specific tasks efficiently.

- Healthcare Devices: Medical equipment, from patient monitors to diagnostic machines, rely on real-time management platforms. These ensure timely processing and accurate results in critical situations.

Examples in Everyday Technology

Beyond specialized use cases, these platforms are also a cornerstone of many consumer products. Here are a few everyday examples:

- Personal Computers: The platform on a personal computer provides the essential interface for users to interact with applications, manage files, and perform tasks. Whether running Windows, macOS, or Linux, users rely on these platforms to run software and manage hardware.

- Smart Home Devices: From smart speakers to thermostats, these devices are powered by lightweight management platforms that handle communication, sensor data, and cloud interaction, providing seamless control and automation.

- Cloud Computing: On a larger scale, cloud platforms manage vast networks of servers and storage, enabling businesses and individuals to access data and services remotely. These platforms ensure that cloud services run efficiently, handling everything from resource allocation to security.

As technology continues to evolve, the role of these management platforms will only grow, making them an integral part of the modern digital landscape. Understanding their applications in real-world scenarios will help illuminate how they shape everything from the smallest gadgets to the largest data centers.

Reviewing System Calls and API Basics

For software to communicate with hardware and perform necessary tasks, it must rely on standardized methods. These methods enable applications to access the resources they need, such as memory, files, and input/output operations, through structured requests. This process is managed by two core concepts: system calls and application programming interfaces (APIs). Understanding how these tools function is key to understanding the inner workings of a platform.

System Calls Explained

System calls act as the intermediary between user-level programs and the core functions of the underlying environment. These calls allow programs to request services that are typically beyond their direct control, such as accessing files, managing processes, or allocating memory. By invoking specific functions in the kernel, system calls allow applications to perform complex tasks securely and efficiently. Some of the most common categories include:

- File Handling: Operations like opening, reading, writing, and closing files.

- Process Management: Creating, terminating, or controlling the execution of processes.

- Memory Allocation: Requesting and freeing memory blocks for program execution.

- Device Communication: Interacting with hardware peripherals such as printers or storage devices.
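The file-handling category above can be tried out with Python's low-level `os` functions, which are thin wrappers over the operating system's own open, read, write, and close calls. This is a minimal sketch; the helper name `write_then_read` is hypothetical, and it assumes a small payload written to a regular file.

```python
import os
import tempfile


def write_then_read(path, payload):
    """Write payload to path and read it back using file-descriptor calls."""
    # Ask the kernel for a file descriptor opened for writing.
    fd = os.open(path, os.O_WRONLY | os.O_CREAT | os.O_TRUNC, 0o600)
    try:
        os.write(fd, payload)  # a small write to a regular file completes in one call
    finally:
        os.close(fd)           # always give the descriptor back to the kernel

    fd = os.open(path, os.O_RDONLY)
    try:
        return os.read(fd, len(payload))
    finally:
        os.close(fd)


if __name__ == "__main__":
    target = os.path.join(tempfile.mkdtemp(), "demo.txt")
    print(write_then_read(target, b"hello kernel"))
```

Each `os.open`, `os.write`, `os.read`, and `os.close` call crosses from user mode into the kernel and back, which is the boundary this section describes.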

API Basics

APIs provide a set of tools that enable applications to interact with other software and services. Unlike system calls, which access the core functionalities of the platform, APIs allow for higher-level interactions. These interfaces define a set of commands or protocols that applications can use to request services from external systems or libraries. APIs are fundamental for building scalable applications, integrating third-party services, and ensuring cross-platform compatibility. Important elements of APIs include:

- Endpoints: Specific functions that an API exposes for developers to use.

- Data Handling: APIs allow for structured communication between systems, handling inputs and outputs efficiently.

Interrupts and Their Role in OS

Interrupts are crucial mechanisms that keep a computing environment efficient and responsive. When an interrupt occurs, it signals the processor to temporarily suspend its current work and attend to a more immediate task. This concept is vital for the smooth functioning of software applications and ensures that the system remains responsive to both user inputs and hardware events.

How Interrupts Work

An interrupt is essentially a signal sent by hardware or software to get the attention of the processor. It causes the CPU to suspend its ongoing tasks and execute a predefined routine to handle the interrupt. Once the interrupt is processed, the CPU resumes its previous operation. Interrupts can come from a variety of sources, including:

- Hardware Interrupts: Generated by hardware devices such as keyboards, mice, network interfaces, or timers.

- Software Interrupts: Triggered by software or programs requesting specific services, such as system calls or exceptions.
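The suspend, handle, resume cycle described above can be modelled in a few lines. This is a toy simulation, not how real hardware is programmed: the class `TinyCPU`, its vector table, and the handler name are all hypothetical.

```python
class TinyCPU:
    """Toy model of a CPU that checks for pending interrupts before each instruction."""

    def __init__(self):
        self.pc = 0             # program counter of the running program
        self.vector_table = {}  # interrupt number -> handler function
        self.pending = []       # interrupts raised but not yet serviced
        self.trace = []         # record of what ran, in order

    def register(self, irq, handler):
        self.vector_table[irq] = handler

    def raise_interrupt(self, irq):
        self.pending.append(irq)

    def step(self):
        while self.pending:
            irq = self.pending.pop(0)
            saved_pc = self.pc            # save the interrupted program's context
            self.vector_table[irq](self)  # run the predefined handler routine
            self.pc = saved_pc            # restore context and resume
        self.trace.append(f"instruction {self.pc}")
        self.pc += 1


def keyboard_handler(cpu):
    cpu.trace.append("handle keyboard")


if __name__ == "__main__":
    cpu = TinyCPU()
    cpu.register(1, keyboard_handler)
    cpu.step()
    cpu.raise_interrupt(1)
    cpu.step()
    print(cpu.trace)
```

The trace shows the handler running between instruction 0 and instruction 1, after which the program continues as if nothing had happened.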

Types of Interrupts

Interrupts can be categorized based on their source and how they are handled. The two primary types are:

| Type of Interrupt | Description |
|---|---|
| Maskable Interrupts | These can be delayed or ignored by the processor if necessary. They are used for non-urgent tasks. |
| Non-Maskable Interrupts | These cannot be ignored or delayed, ensuring that critical events such as hardware failures are addressed immediately. |

Interrupt handling is key to multitasking environments, where multiple processes are running concurrently. By efficiently managing interrupts, the processor can ensure that high-priority tasks are handled promptly, with minimal disruption to the ongoing work of other applications. This concept is foundational in maintaining the responsiveness and reliability of a computing platform.
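The difference between the two types can be sketched as a simple filter. The model below assumes a single global "interrupts enabled" flag; the function name `deliverable` and the interrupt names are hypothetical.

```python
def deliverable(pending, interrupts_enabled):
    """Split pending (name, maskable) interrupts into serviced-now and deferred lists."""
    serviced, deferred = [], []
    for name, maskable in pending:
        if maskable and not interrupts_enabled:
            deferred.append(name)  # masked: held back until interrupts are re-enabled
        else:
            serviced.append(name)  # non-maskable, or masking is currently off
    return serviced, deferred


if __name__ == "__main__":
    pending = [("timer", True), ("memory parity error", False)]
    print(deliverable(pending, interrupts_enabled=False))
```

With interrupts disabled, the timer is deferred while the non-maskable error is still serviced.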

Scheduling Algorithms You Should Learn

Efficient task management is essential for ensuring that processes are executed in an optimal manner. Scheduling algorithms determine the order in which processes are executed, affecting system performance and resource utilization. Understanding these algorithms is critical for anyone involved in system-level programming or performance optimization. Different algorithms are designed to handle specific requirements such as fairness, response time, and throughput, making them essential tools for managing computational workloads.

Types of Scheduling Algorithms

There are several key algorithms, each with its own strengths and use cases. The most common ones include:

- First-Come, First-Served (FCFS): A simple approach where processes are executed in the order they arrive. While straightforward, it can lead to long waiting times when short processes queue behind a long one (the convoy effect).

- Shortest Job Next (SJN): Also known as Shortest Job First (SJF), this algorithm selects the process with the shortest burst time, which minimizes the average waiting time when burst times are known in advance. Long processes may starve if short ones keep arriving.

- Round Robin (RR): This approach allocates a fixed time slice (quantum) to each process, ensuring that all processes receive equal CPU time in a cyclic order.
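A worked comparison helps when a question asks for average waiting time. The sketch below assumes all processes arrive at time 0, ignores context-switch cost, and uses non-preemptive SJF; the function names and burst times are chosen for illustration.

```python
from collections import deque


def fcfs_waits(bursts):
    """Waiting time of each process when run in arrival order."""
    waits, clock = [], 0
    for burst in bursts:
        waits.append(clock)
        clock += burst
    return waits


def sjf_waits(bursts):
    """Waiting times under non-preemptive Shortest Job First."""
    waits, clock = [0] * len(bursts), 0
    for i in sorted(range(len(bursts)), key=lambda i: bursts[i]):
        waits[i] = clock
        clock += bursts[i]
    return waits


def rr_waits(bursts, quantum):
    """Waiting times under Round Robin with a fixed time quantum."""
    remaining = list(bursts)
    finish = [0] * len(bursts)
    queue, clock = deque(range(len(bursts))), 0
    while queue:
        i = queue.popleft()
        run = min(quantum, remaining[i])
        clock += run
        remaining[i] -= run
        if remaining[i] > 0:
            queue.append(i)  # not finished: go to the back of the queue
        else:
            finish[i] = clock
    # With arrival at time 0, waiting time = completion time - burst time.
    return [finish[i] - bursts[i] for i in range(len(bursts))]


if __name__ == "__main__":
    bursts = [24, 3, 3]
    for name, waits in [("FCFS", fcfs_waits(bursts)),
                        ("SJF", sjf_waits(bursts)),
                        ("RR q=4", rr_waits(bursts, 4))]:
        print(name, waits, round(sum(waits) / len(waits), 2))
```

For bursts of 24, 3, and 3 time units, the average wait is 17.0 under FCFS, 3.0 under SJF, and about 5.67 under Round Robin with a quantum of 4.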

Advanced Scheduling Techniques

For more complex environments, other techniques are often used to optimize performance based on specific criteria:

- Priority Scheduling: Processes are assigned priorities, with the highest priority process being executed first. This can be either preemptive or non-preemptive.

- Multilevel Queue Scheduling: This method uses multiple queues, each dedicated to a different priority level. Processes are categorized and handled accordingly, allowing better management of varying process types.

- Fair Share Scheduling: Ensures that CPU time is fairly distributed among users or applications, prioritizing equity over efficiency.
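Non-preemptive priority scheduling, the first technique in the list above, can be sketched with a priority queue. The example assumes that a lower number means a higher priority and that ties are broken by arrival order; both conventions, and the job names, are assumptions.

```python
import heapq


def priority_order(jobs):
    """Return job names in execution order; jobs is a list of (name, priority)."""
    # The sequence number breaks ties so equal-priority jobs run in arrival order.
    heap = [(priority, seq, name) for seq, (name, priority) in enumerate(jobs)]
    heapq.heapify(heap)
    order = []
    while heap:
        _, _, name = heapq.heappop(heap)
        order.append(name)
    return order


if __name__ == "__main__":
    jobs = [("backup", 3), ("ui", 1), ("logger", 3), ("network", 2)]
    print(priority_order(jobs))
```

A pure priority scheme like this one can starve low-priority jobs; exam answers often mention aging (gradually raising the priority of waiting jobs) as the usual remedy.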

Mastering these algorithms helps in analyzing system performance and ensuring that resources are used efficiently while minimizing delays and bottlenecks. A solid understanding of scheduling techniques is essential for anyone interested in building reliable and high-performing systems.

Examining OS Security and Protection

Ensuring the safety and privacy of data is a fundamental aspect of modern computing. Protection mechanisms within a system are designed to prevent unauthorized access, maintain confidentiality, and ensure the integrity of processes and stored data. Security is not just about preventing breaches but also about maintaining a stable and reliable environment, where users and resources are properly controlled and safeguarded from threats.

Key Security Features

Several essential elements contribute to the protection and security of computing environments. These features include access control, authentication, encryption, and more. They work in tandem to form a robust defense against external and internal threats:

| Security Feature | Description |
|---|---|
| Access Control | Restricts access to resources based on user roles and permissions, ensuring that only authorized individuals can perform certain actions. |
| Authentication | Verifies the identity of users or systems, typically using passwords, biometric data, or security tokens. |
| Encryption | Secures data by converting it into an unreadable format, which can only be decrypted with a proper key. |
| Auditing | Monitors and records system activity to detect any suspicious or unauthorized behavior. |

Protection Mechanisms

In addition to security features, protection mechanisms are employed to isolate processes and prevent them from interfering with one another. These mechanisms can be categorized into several types:

- Memory Protection: Ensures that processes do not access memory locations allocated to other processes, reducing the risk of data corruption.

- Process Isolation: Prevents processes from affecting one another, ensuring that one malfunctioning process does not compromise the whole environment.

- Privilege Separation: Limits the access rights of different processes to prevent escalation of privileges and misuse of resources.

- Sandboxing: Runs processes in isolated environments, where they can perform actions without affecting other parts of the system.
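Access control and privilege separation both come down to checking a request against what the requester is allowed to do. The sketch below assumes a simple role-based model; the roles, actions, and the name `is_allowed` are invented for illustration.

```python
# Hypothetical role table: each role is granted only the actions it needs.
ROLE_PERMISSIONS = {
    "admin": {"read", "write", "delete"},
    "editor": {"read", "write"},
    "viewer": {"read"},
}


def is_allowed(role, action):
    """Return True only if the role has been explicitly granted the action."""
    # Unknown roles get an empty permission set, so access is denied by default.
    return action in ROLE_PERMISSIONS.get(role, set())


if __name__ == "__main__":
    print(is_allowed("viewer", "read"))
    print(is_allowed("viewer", "delete"))
```

Denying by default, rather than listing what is forbidden, is the design choice that keeps a forgotten entry from becoming an accidental grant.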

By understanding these core concepts, one can appreciate the complexity and importance of maintaining secure and protected environments, whether for individual systems or large-scale networks. These security mechanisms, when implemented properly, help in mitigating the risks posed by malicious attacks, ensuring the safety of both users and data.
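Password-based authentication, mentioned among the security features above, is usually implemented by storing a salted hash rather than the password itself. The sketch below uses Python's standard `hashlib.pbkdf2_hmac` and `hmac.compare_digest`. The iteration count and function names are assumptions for illustration, not a recommended configuration.

```python
import hashlib
import hmac
import os

ITERATIONS = 100_000  # assumed work factor, chosen only for this example


def hash_password(password, salt=None):
    """Return (salt, digest) for password; a fresh random salt is made if none is given."""
    if salt is None:
        salt = os.urandom(16)
    digest = hashlib.pbkdf2_hmac("sha256", password.encode("utf-8"), salt, ITERATIONS)
    return salt, digest


def verify_password(password, salt, expected):
    """Re-hash the candidate password and compare it in constant time."""
    _, digest = hash_password(password, salt)
    return hmac.compare_digest(digest, expected)


if __name__ == "__main__":
    salt, stored = hash_password("correct horse")
    print(verify_password("correct horse", salt, stored))
    print(verify_password("wrong guess", salt, stored))
```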

How Virtualization Works in OS

Virtualization technology enables the creation of multiple simulated environments or virtual instances from a single physical machine. It allows for efficient resource management by enabling different software to run independently and securely within the same system. This separation of virtual environments helps optimize performance, increase flexibility, and reduce costs by maximizing the utilization of hardware resources.

Types of Virtualization

There are different types of virtualization that serve specific purposes. Each type allows for the isolation and management of resources, ensuring that multiple environments can operate on the same physical machine without interfering with one another:

- Hardware Virtualization: This involves creating virtual machines that can run their own operating environments. The virtual machines function as if they are independent systems, though they share the underlying hardware resources.

- Software Virtualization: Allows one software application to run in different operating environments, providing a controlled environment to execute programs without directly affecting the host system.

- Network Virtualization: This divides a physical network into multiple virtual networks, each with its own set of resources, allowing for better traffic management and improved security.

- Storage Virtualization: This aggregates physical storage from multiple devices into a single, virtual storage unit. It simplifies management and improves data accessibility across virtual environments.

How Virtualization Functions

The core of virtualization lies in a software layer known as the hypervisor or virtual machine monitor (VMM). The hypervisor sits between the hardware and the virtual machines, acting as an intermediary that allocates resources and ensures proper isolation of environments. There are two primary types of hypervisors:

- Type 1 Hypervisor: Runs directly on the physical hardware, managing virtual machines independently of a host operating environment. It is generally more efficient and offers a smaller attack surface, as it does not rely on a host system.

- Type 2 Hypervisor: Runs on top of an existing operating environment and uses the host machine’s resources to create and manage virtual machines. While less efficient than Type 1, it is more flexible for personal or smaller-scale uses.

Through these mechanisms, virtualization enables better resource allocation, improved system management, and increased scalability. By abstracting the underlying hardware, it allows multiple operating environments to share resources in an efficient and secure manner, ultimately providing flexibility and cost savings for users and organizations alike.

Comparing Different OS Architectures

Various platforms are designed using different architectural models, each tailored to meet specific needs such as performance, reliability, scalability, or ease of maintenance. These designs shape how the core components interact, manage tasks, and allocate resources. Understanding the distinctions between these models helps in determining their advantages and drawbacks depending on the intended use.

Monolithic Architecture

This approach places all essential services, such as scheduling, memory management, file systems, and device drivers, in a single large kernel running in one privileged address space. Components can call one another directly, which often makes execution faster, but the lack of modularity can make the structure difficult to modify or maintain over time.

- Advantages: Simple design, with efficient communication between components, which may result in better performance for specific tasks.

- Disadvantages: The system can become complex and difficult to troubleshoot. A failure in one area might affect the entire platform.

Microkernel Architecture

Unlike monolithic setups, the microkernel architecture divides the platform into smaller, independent modules. Only the most basic functions, such as process communication and hardware interaction, reside within the microkernel itself. Other services run as separate, user-level components. This structure enhances flexibility and fault tolerance.

- Advantages: Increased modularity allows for easier updates and better fault isolation, improving overall stability.

- Disadvantages: Performance may be slightly impacted due to the additional overhead required for communication between modules.

Each architectural design brings its own set of strengths and weaknesses. Monolithic setups are often favored for performance-heavy applications, while microkernel models excel in environments where reliability and isolation are top priorities. Choosing the right approach depends on the specific requirements of the platform in question.
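The fault-isolation argument for microkernels can be illustrated with a toy message-passing model. Nothing here reflects a real kernel's interface: the class `ToyMicrokernel`, its `send` method, and both services are hypothetical, and ordinary Python exceptions stand in for a crashing user-level server.

```python
class ToyMicrokernel:
    """Toy kernel that only routes messages to separately registered services."""

    def __init__(self):
        self.services = {}

    def register(self, name, handler):
        self.services[name] = handler

    def send(self, service, message):
        handler = self.services.get(service)
        if handler is None:
            return {"ok": False, "error": f"no such service: {service}"}
        try:
            return {"ok": True, "reply": handler(message)}
        except Exception as exc:
            # The failure is reported to the caller; the kernel itself keeps running.
            return {"ok": False, "error": str(exc)}


def file_service(message):
    return f"contents of {message['name']}"


def buggy_driver(message):
    raise RuntimeError("driver crashed")


if __name__ == "__main__":
    kernel = ToyMicrokernel()
    kernel.register("fs", file_service)
    kernel.register("driver", buggy_driver)
    print(kernel.send("driver", {}))
    print(kernel.send("fs", {"name": "notes.txt"}))
```

Every request pays for a trip through `send`, which mirrors the communication overhead listed as the microkernel's main disadvantage. In a monolithic design the same request would be a direct function call, and the driver's failure would not be contained.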

Tools and Techniques for Troubleshooting

Effective problem resolution often requires a combination of the right tools and techniques to identify, analyze, and resolve issues efficiently. Troubleshooting can range from simple checks to more advanced diagnostic processes, depending on the complexity of the problem. Understanding the various available resources helps ensure quicker identification and mitigation of underlying issues.

One of the first steps in troubleshooting is recognizing the nature of the problem. Tools such as system monitors and logs can provide valuable insights into what is happening behind the scenes. These tools often display resource usage, error messages, and process states, helping to narrow down the scope of the issue. A solid understanding of the environment is crucial for interpreting these results effectively.

In addition to standard diagnostic tools, specific techniques such as isolating variables, performing stress tests, or even using debugging software may be necessary. These methods help simulate different scenarios to understand the behavior of processes under various conditions, allowing for more targeted fixes.

Ultimately, troubleshooting is a systematic process that involves gathering information, analyzing it for patterns, testing hypotheses, and applying solutions. By using the right combination of tools and strategies, most issues can be addressed effectively and with minimal disruption.

Tips for Answering OS Exam Questions

Successfully addressing assessments related to system concepts requires a strategic approach. Understanding the underlying principles, as well as how to structure responses, can significantly improve performance. It’s essential to grasp both theoretical foundations and practical applications to ensure comprehensive and well-rounded replies.

Master the Key Concepts

Before diving into specific questions, it is crucial to familiarize yourself with the fundamental topics of the subject. Concepts such as process management, memory handling, file organization, and security mechanisms should be at the forefront of your preparation. A solid understanding of these areas provides a strong foundation for tackling various types of queries. Focus on the major theories and real-world applications related to each topic.

Structured Responses

When responding to inquiries, clarity and organization are key. Begin with a brief introduction that addresses the main points of the question. Follow this with a detailed explanation, using examples wherever possible. Be concise yet thorough, ensuring you answer all parts of the question. Conclude with a summary or key takeaway if necessary. By presenting information in a structured manner, you demonstrate both knowledge and analytical ability.

Lastly, practicing time management is essential. Read through all questions before starting, and allocate time based on their complexity. Review your responses for accuracy and completeness, ensuring that each point is supported by logical reasoning or evidence where applicable.

Time Management During OS Exams

Efficient time management is crucial when tackling assessments focused on system concepts. Without proper planning, it’s easy to run out of time or fail to allocate enough attention to each topic. The key is to organize your approach to ensure that every section receives adequate focus, allowing you to maximize your performance within the given time frame.

Prioritize Your Tasks

At the start of the assessment, quickly review all tasks to get an overview of the scope. Identify questions that seem more straightforward or familiar, as they can be answered quickly, providing a solid foundation to build on. Set aside more challenging inquiries for later, once you’ve secured the easier ones. This will help to reduce anxiety and ensure that you’re not rushing through more complex sections at the end.

Allocate Time Wisely

Assign specific time limits to each question or section based on its complexity. For example, allocate less time for questions requiring short responses and more time for those requiring detailed analysis or multiple steps. Always leave a few minutes at the end for review to catch any mistakes or omissions in your responses. Sticking to these time boundaries helps maintain a steady pace throughout the assessment.

By practicing good time management, you ensure that all aspects of the assessment are covered effectively and with enough depth, ultimately improving the quality of your responses.

Resources for OS Exam Success

Achieving success in assessments focused on core concepts requires the right tools and materials to reinforce understanding. A well-rounded approach includes study guides, practice materials, and online resources that offer valuable insights into key topics. These resources help solidify knowledge, clarify difficult areas, and provide hands-on experience with common types of tasks encountered during evaluations.

Books and reference guides are invaluable for deep dives into theory and definitions. They offer comprehensive explanations and serve as excellent review tools. In addition to books, practice tests are indispensable for gauging knowledge retention and identifying weak points. Online platforms with interactive exercises or mock assessments offer simulated environments that help sharpen problem-solving skills.

Engaging with forums and online study groups can also provide a sense of community and peer support. By discussing challenging topics and learning from others, you gain new perspectives that can enhance your overall preparation. Ultimately, combining these diverse resources increases the likelihood of mastering the necessary material and performing well in any evaluation.