When preparing for roles that involve data analysis, understanding key concepts and methodologies is crucial. Employers expect candidates to demonstrate proficiency in various techniques and the ability to handle complex challenges. A strong grasp of these skills can set you apart from others in a competitive job market.

During the selection process, you may face challenges designed to assess your technical knowledge, problem-solving abilities, and analytical thinking. It’s important to be ready to discuss your approach to handling different scenarios, as well as your experience with relevant tools and methods. Being confident in your ability to explain complex topics clearly will leave a positive impression on potential employers.

Each assessment provides an opportunity to showcase your expertise and show that you can apply your knowledge in real-world situations. Mastering the basics, while also being able to tackle advanced problems, can help you stand out and increase your chances of success.

Essential Skills for Data-Related Job Assessments

In any role requiring data analysis, employers look for candidates who can think critically, solve problems efficiently, and communicate their findings clearly. A strong foundation in key concepts is vital for navigating challenges that may arise during the selection process. These abilities are essential for demonstrating your capability to handle data-driven tasks in a professional setting.

Among the most important competencies are proficiency with analytical techniques, familiarity with common tools, and the ability to interpret complex datasets. Moreover, being able to present your methodology and insights in a structured manner ensures that your approach is both understandable and compelling to others. These skills are often the deciding factors between candidates with similar technical backgrounds.

To stand out, candidates should also demonstrate adaptability. Being able to apply your knowledge across a variety of real-world problems shows versatility and the potential to thrive in dynamic environments. Mastering these essential skills will help you navigate assessments with confidence and highlight your strengths as a data professional.

Key Statistical Concepts to Know

Having a solid understanding of fundamental ideas is crucial for excelling in data-driven roles. These core principles form the foundation for analyzing, interpreting, and drawing conclusions from complex information. Mastering these concepts will enhance your ability to approach various tasks with precision and confidence.

Descriptive Analysis

This area involves summarizing data to uncover patterns and trends. It helps you make sense of large datasets by presenting them in a more manageable form. Key concepts include:

- Mean: The average value of a dataset.

- Median: The middle value when the data is sorted (the average of the two middle values when the count is even).

- Mode: The value that appears most frequently.

- Standard Deviation: A measure of how spread out the values are.

Inferential Methods

These techniques allow you to draw conclusions or make predictions based on sample data. This is essential for making informed decisions in situations where gathering full data is impractical. Key areas include:

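- Hypothesis Testing: Assessing whether sample data provide enough evidence to reject a stated assumption, the null hypothesis.

- Confidence Intervals: Estimating a range of plausible values for an unknown population parameter. A 95% interval comes from a procedure that captures the true value in about 95% of repeated samples.

- Correlation: Measuring the strength and direction of the relationship between two variables; Pearson’s correlation coefficient captures linear association.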
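A confidence interval for a mean can be computed with Python’s standard library alone. This is a minimal sketch that assumes a large-sample normal approximation; for small samples, a t quantile (for example from `scipy.stats.t.ppf`, if SciPy is available) gives a wider and more appropriate interval. The function name and the simulated data are hypothetical.

```python
import math
import random
from statistics import NormalDist, fmean, stdev

# Illustrative sketch: approx_mean_ci is a hypothetical helper, not a library function.


def approx_mean_ci(sample, confidence=0.95):
    """Normal-approximation confidence interval for a population mean."""
    n = len(sample)
    if n < 2:
        raise ValueError("need at least two observations")
    if not 0 < confidence < 1:
        raise ValueError("confidence must be strictly between 0 and 1")
    mean = fmean(sample)
    standard_error = stdev(sample) / math.sqrt(n)  # estimated SD of the sample mean
    z = NormalDist().inv_cdf(0.5 + confidence / 2)  # about 1.96 for 95%
    margin = z * standard_error
    return mean - margin, mean + margin


# Hypothetical data: 50 simulated measurements, for illustration only.
rng = random.Random(7)
sample = [rng.gauss(100, 15) for _ in range(50)]

low, high = approx_mean_ci(sample)
print(f"mean = {fmean(sample):.1f}, 95% CI = ({low:.1f}, {high:.1f})")
```

Raising the confidence level widens the interval: a 99% interval from the same data is always wider than the 95% one, because it must capture the true mean in a larger share of repeated samples.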

These concepts form the core of data analysis, enabling professionals to interpret information effectively and make sound, data-driven decisions. Mastering these areas is essential for anyone looking to advance in a role that involves working with data.

Preparing for Data Analysis Questions

When preparing for challenges related to analyzing datasets, it’s crucial to develop a deep understanding of various methods used to extract valuable insights. These types of problems often require you to demonstrate your proficiency with analytical techniques and your ability to apply them to real-world scenarios. Being ready to approach these tasks methodically will help you stand out during the selection process.

Understanding Key Methods

Familiarity with different techniques for examining data is vital. From basic exploration to advanced analysis, the following methods are frequently tested:

| Technique | Description |
|---|---|
| Data Cleaning | Identifying and correcting errors or inconsistencies within datasets. |
| Exploratory Data Analysis | Using visualizations and summary statistics to uncover patterns and relationships. |
| Regression Analysis | Modeling the relationship between variables to make predictions or understand correlations. |
| Time Series Forecasting | Analyzing time-ordered data to predict future values. |

Real-World Application

It’s important to apply these methods to real-world datasets to gain hands-on experience. Practice solving problems using actual data, whether it’s through online platforms, case studies, or previous projects. This practical knowledge will help you explain your process confidently and demonstrate your skills effectively.

Mastering these techniques ensures you’re well-equipped to tackle complex tasks and shows your readiness for any analytical role.

Common Hypothesis Testing Questions

When preparing for challenges related to evaluating assumptions, it is important to understand the different ways in which such problems are framed. These problems often test your ability to assess the validity of claims based on sample data. A strong grasp of key concepts, such as significance levels and test statistics, will help you approach these challenges with confidence.

Understanding Significance Levels

One of the most fundamental concepts to grasp is the significance level, denoted α: the probability of rejecting the null hypothesis when it is actually true. It sets the threshold a p-value must fall below for the null hypothesis to be rejected. The conventional choice is α = 0.05, but other values (such as 0.01 or 0.10) may be used depending on the context. Questions often involve determining whether a result is statistically significant at a given level, based on sample data.
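To make the significance-level decision concrete, the following minimal Python sketch runs a two-sided one-sample z-test. It assumes the population standard deviation is known (the textbook z-test setting); the function name and the numbers are hypothetical, chosen for illustration.

```python
import math
from statistics import NormalDist

# Illustrative sketch: one_sample_z_test is a hypothetical helper, not a library function.


def one_sample_z_test(sample_mean, mu0, sigma, n, alpha=0.05):
    """Two-sided z-test of H0: mu == mu0 when the population SD sigma is known."""
    z = (sample_mean - mu0) / (sigma / math.sqrt(n))
    # Probability, under H0, of a standard normal at least as extreme as |z|.
    p_value = 2 * (1 - NormalDist().cdf(abs(z)))
    return z, p_value, p_value < alpha


# Hypothetical numbers: n = 36 observations with mean 51.5; H0 says mu = 50; sigma = 6.
z, p, reject = one_sample_z_test(sample_mean=51.5, mu0=50, sigma=6, n=36)
print(f"z = {z:.2f}, p = {p:.4f}, reject H0 at alpha = 0.05: {reject}")
```

With these inputs z = 1.5 and p ≈ 0.134, so the result is not statistically significant at α = 0.05, although it would be at α = 0.15. The same evidence can lead to different conclusions depending on the significance level chosen in advance.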

Key Test Types

Another frequent area of focus is the ability to choose and apply the correct hypothesis test for a given scenario. Some common tests include:

- t-test: Used to compare the means of two groups (or one sample mean against a hypothesized value) to assess whether the difference is statistically significant.

- Chi-squared test: Used for categorical data to evaluate how observed frequencies compare to expected frequencies.

- ANOVA: Applied when comparing the means of three or more groups to determine if at least one differs significantly.
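As an example of the chi-squared test in practice, here is a minimal goodness-of-fit sketch using only Python’s standard library, with hypothetical coin-flip counts. The p-value shortcut works only for one degree of freedom (two categories); for other cases, `scipy.stats.chisquare`, if SciPy is available, computes both the statistic and the p-value.

```python
import math
from statistics import NormalDist

# Illustrative sketch: both function names are hypothetical, not library functions.


def chi_squared_statistic(observed, expected):
    """Pearson's chi-squared statistic: sum of (O - E)^2 / E over all categories."""
    if len(observed) != len(expected):
        raise ValueError("observed and expected must have the same length")
    return sum((o - e) ** 2 / e for o, e in zip(observed, expected))


def p_value_one_df(statistic):
    """Upper-tail p-value for a chi-squared statistic with exactly 1 degree of freedom.

    A chi-squared(1) variable is the square of a standard normal variable,
    so P(X >= s) = 2 * (1 - Phi(sqrt(s))).
    """
    return 2 * (1 - NormalDist().cdf(math.sqrt(statistic)))


# Hypothetical data: 100 coin flips gave 60 heads and 40 tails; H0 is a fair coin.
observed = [60, 40]
expected = [50, 50]
stat = chi_squared_statistic(observed, expected)
p = p_value_one_df(stat)  # 2 categories - 1 = 1 degree of freedom
print(f"chi-squared = {stat:.2f}, p = {p:.4f}")
```

Here the statistic is 4.0 and p ≈ 0.046, so at α = 0.05 the fair-coin hypothesis would be rejected.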

Being able to explain when and why each test should be applied is essential for success in addressing these challenges.

How to Approach Probability Queries

When tackling challenges related to the likelihood of events, a systematic approach is key to finding accurate solutions. These problems often require a combination of logic, mathematical formulas, and clear reasoning to determine the chance of a specific outcome. Developing a structured method will help you effectively navigate these queries, even when faced with complex scenarios.

Understand the Problem Context

Before applying any formulas or models, ensure that you fully grasp the scenario presented. Carefully identify all possible outcomes and distinguish between independent and dependent events. For example, if dealing with events that are independent, the probability of multiple events happening can be calculated by multiplying their individual probabilities.

Key Probability Rules to Remember

Several fundamental rules guide how probabilities are calculated and interpreted:

- Addition Rule: If two events are mutually exclusive, the probability of either occurring is the sum of their individual probabilities.

- Multiplication Rule: For independent events, the probability of both occurring is the product of their individual probabilities.

- Complementary Events: The probability of an event not occurring is 1 minus the probability of the event occurring.
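The three rules can be checked with exact fractions in Python. This is a sketch with textbook dice-and-coin examples chosen for illustration; the simulation function is a hypothetical helper that estimates the complement-rule result by Monte Carlo, a useful sanity check when working a probability problem.

```python
import random
from fractions import Fraction

# Addition rule (mutually exclusive events): roll a 1 or a 2 with one fair die.
p_one_or_two = Fraction(1, 6) + Fraction(1, 6)

# Multiplication rule (independent events): two fair coin flips both land heads.
p_two_heads = Fraction(1, 2) * Fraction(1, 2)

# Complement rule: P(at least one six in four rolls) = 1 - P(no six in four rolls).
p_at_least_one_six = 1 - Fraction(5, 6) ** 4


def simulate_at_least_one_six(trials=50_000, seed=0):
    """Hypothetical helper: Monte Carlo estimate of P(at least one six in four rolls)."""
    rng = random.Random(seed)
    hits = sum(
        any(rng.randint(1, 6) == 6 for _ in range(4))
        for _ in range(trials)
    )
    return hits / trials


simulated = simulate_at_least_one_six()
print(p_one_or_two, p_two_heads, p_at_least_one_six)  # 1/3 1/4 671/1296
print(f"exact {float(p_at_least_one_six):.4f} vs simulated {simulated:.4f}")
```

The complement rule is usually the fastest route for "at least one" questions: computing P(no six) = (5/6)^4 directly avoids enumerating every case with one, two, three, or four sixes.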

By mastering these essential rules, you will be well-equipped to approach probability-related problems with confidence and accuracy.

Understanding Regression Analysis Questions

When exploring the relationship between variables, it’s essential to grasp how certain factors influence others. This often involves modeling how one or more predictors affect a dependent variable. Having a solid understanding of the core concepts of regression allows you to analyze trends, predict outcomes, and make data-driven decisions with confidence.

The key to solving problems involving regression models is knowing how to interpret coefficients, assess model fit, and handle potential complications like multicollinearity. By being familiar with these aspects, you can accurately determine the strength and direction of relationships between variables.

It’s also important to understand the different types of regression techniques, such as linear, multiple, and logistic regression, as each has its own set of applications and assumptions. Mastery of these concepts ensures that you can approach any related problem effectively and select the right method for analysis.
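As a minimal illustration of fitting and interpreting a simple linear regression, the sketch below computes the least-squares slope, intercept, and R² by hand on hypothetical toy data. It covers only one predictor; multiple and logistic regression would typically be fitted with a library such as statsmodels or scikit-learn.

```python
from statistics import fmean

# Illustrative sketch: simple_linear_regression is a hypothetical helper name.


def simple_linear_regression(x, y):
    """Ordinary least squares fit of y = intercept + slope * x; also returns R^2."""
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("x and y must have the same length (at least 2)")
    x_bar, y_bar = fmean(x), fmean(y)
    sxx = sum((xi - x_bar) ** 2 for xi in x)
    if sxx == 0:
        raise ValueError("x must not be constant")
    sxy = sum((xi - x_bar) * (yi - y_bar) for xi, yi in zip(x, y))
    slope = sxy / sxx
    intercept = y_bar - slope * x_bar
    # R^2 = 1 - (residual sum of squares) / (total sum of squares)
    ss_res = sum((yi - (intercept + slope * xi)) ** 2 for xi, yi in zip(x, y))
    ss_tot = sum((yi - y_bar) ** 2 for yi in y)
    return slope, intercept, 1 - ss_res / ss_tot


# Hypothetical toy data.
x = [1, 2, 3, 4, 5]
y = [2, 4, 5, 4, 5]
slope, intercept, r_squared = simple_linear_regression(x, y)
print(f"y = {intercept:.2f} + {slope:.2f} x, R^2 = {r_squared:.2f}")
```

Here the fitted line is y = 2.2 + 0.6x with R² = 0.6: each one-unit increase in x is associated with an average increase of 0.6 in y, and the model explains about 60% of the variance in y. On Python 3.10 or later, `statistics.linear_regression(x, y)` returns the same slope and intercept.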

Mastering Descriptive Statistics for Interviews

When preparing for challenges that require summarizing data, it’s essential to be familiar with the fundamental methods used to describe key features of a dataset. This area focuses on techniques that allow for quick insights into the data, such as measures of central tendency, spread, and the overall distribution. A strong grasp of these methods is vital for making sense of large datasets and clearly communicating findings.

Key Measures to Understand

Understanding the core concepts in this area is crucial for any data-related task. Here are some of the most common measures:

- Mean: The average of the dataset, providing a central value.

- Median: The middle value that separates the higher and lower halves of the data.

- Mode: The most frequently occurring value in the dataset.

- Standard Deviation: A measure of how spread out the values are from the mean.

Practical Application

Being able to calculate and interpret these values is a critical skill. Here’s an example table to demonstrate how these measures apply to a simple dataset:

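| Data Points | Mean | Median | Mode | Standard Deviation (sample) |
|---|---|---|---|---|
| 2, 4, 4, 6, 8 | 4.8 | 4 | 4 | 2.28 |

The sample standard deviation divides the sum of squared deviations from the mean (20.8) by n − 1 = 4 before taking the square root; dividing by n = 5 instead gives the population standard deviation, about 2.04.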
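Python’s built-in `statistics` module can reproduce these summaries for the same five data points. Note that `statistics.stdev` uses the sample formula (dividing by n − 1) while `statistics.pstdev` uses the population formula (dividing by n), which is a common source of mismatched answers.

```python
import statistics

# The five data points from the example table.
data = [2, 4, 4, 6, 8]

print(statistics.fmean(data))             # 4.8
print(statistics.median(data))            # 4
print(statistics.mode(data))              # 4
print(round(statistics.stdev(data), 2))   # 2.28 (sample SD, divides by n - 1)
print(round(statistics.pstdev(data), 2))  # 2.04 (population SD, divides by n)
```

In an interview, stating which standard deviation you are reporting, and why, shows that you understand the difference between describing a sample and estimating a population.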

Mastering these concepts enables you to efficiently summarize data and present clear, actionable insights, which is critical in any data-driven role.

Time Series Analysis Interview Tips

When tackling problems related to sequential data, it’s crucial to understand how patterns evolve over time. The ability to identify trends, seasonal effects, and cyclic patterns is a key part of answering questions about time-ordered datasets. Mastering these techniques demonstrates proficiency in handling temporal data and can set you apart as a candidate capable of interpreting complex time-dependent information.

Before diving into specific methods, make sure you are comfortable with foundational concepts such as stationarity, autocorrelation, and the decomposition of time series into components. These concepts are often integral to solving related tasks and form the foundation for more advanced analysis techniques.

It’s important to practice applying these concepts to real-world examples, such as forecasting stock prices, weather patterns, or sales data. Being able to not only explain but also perform tasks like smoothing, trend analysis, and model selection will showcase your practical expertise during any assessment.
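Two of the building blocks mentioned above, smoothing and autocorrelation, fit in a few lines of Python. The sketch below is illustrative only: the function names are hypothetical, the sales series is made up, and a real analysis would more likely use a library such as pandas or statsmodels.

```python
from statistics import fmean

# Illustrative sketch: both helpers are hypothetical, not library functions.


def moving_average(series, window):
    """Simple moving average over a sliding window of `window` consecutive points."""
    if not 1 <= window <= len(series):
        raise ValueError("window must be between 1 and len(series)")
    return [fmean(series[i:i + window]) for i in range(len(series) - window + 1)]


def autocorrelation(series, lag=1):
    """Sample autocorrelation at `lag`, using deviations from the overall mean."""
    n = len(series)
    if not 1 <= lag < n:
        raise ValueError("lag must be between 1 and len(series) - 1")
    mean = fmean(series)
    denominator = sum((x - mean) ** 2 for x in series)
    if denominator == 0:
        raise ValueError("series must not be constant")
    numerator = sum((series[t] - mean) * (series[t + lag] - mean) for t in range(n - lag))
    return numerator / denominator


# Hypothetical sales figures alternating between a high and a low period.
sales = [10, 14, 10, 14, 10, 14, 10, 14]
print(moving_average(sales, 2))  # a window equal to the period smooths the cycle out
print(round(autocorrelation(sales, 1), 3), round(autocorrelation(sales, 2), 3))
```

Because the series alternates between high and low values, the lag-1 autocorrelation is strongly negative (-0.875) and the lag-2 autocorrelation is positive (0.75), a signature of a two-period seasonal pattern; a moving average whose window matches that period flattens the series to a constant 12.0.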

Explaining Statistical Software Proficiency

Proficiency in data analysis tools is essential for anyone handling complex datasets or performing advanced modeling tasks. Being able to confidently navigate software platforms is a critical skill, as these tools are designed to streamline the process of data manipulation, analysis, and visualization. Knowledge of the most widely used software not only improves efficiency but also helps ensure accurate and reliable results.

Key Software to Master

There are several prominent software tools that are frequently used in data analysis. Here are some of the most commonly used platforms:

- R: Ideal for statistical analysis and visualization. It’s widely recognized for its versatility in modeling and data exploration.

- Python: Known for its extensive libraries such as pandas, NumPy, and SciPy, Python is a favorite for data wrangling and machine learning applications.

- SPSS: Popular in the social sciences and market research, SPSS offers a user-friendly, menu-driven interface with built-in statistical tests that simplify complex analyses.

- Excel: While basic, Excel is still a powerful tool for handling smaller datasets, performing basic analyses, and creating simple visualizations.

Demonstrating Software Skills

When discussing your software proficiency, it’s important to highlight specific tasks you’ve successfully executed. This could include building regression models, conducting hypothesis tests, or creating visualizations. It’s also beneficial to discuss your comfort with automating tasks, cleaning data, and handling large datasets. Being able to walk through a typical analysis pipeline in your chosen tool demonstrates both your expertise and problem-solving ability.

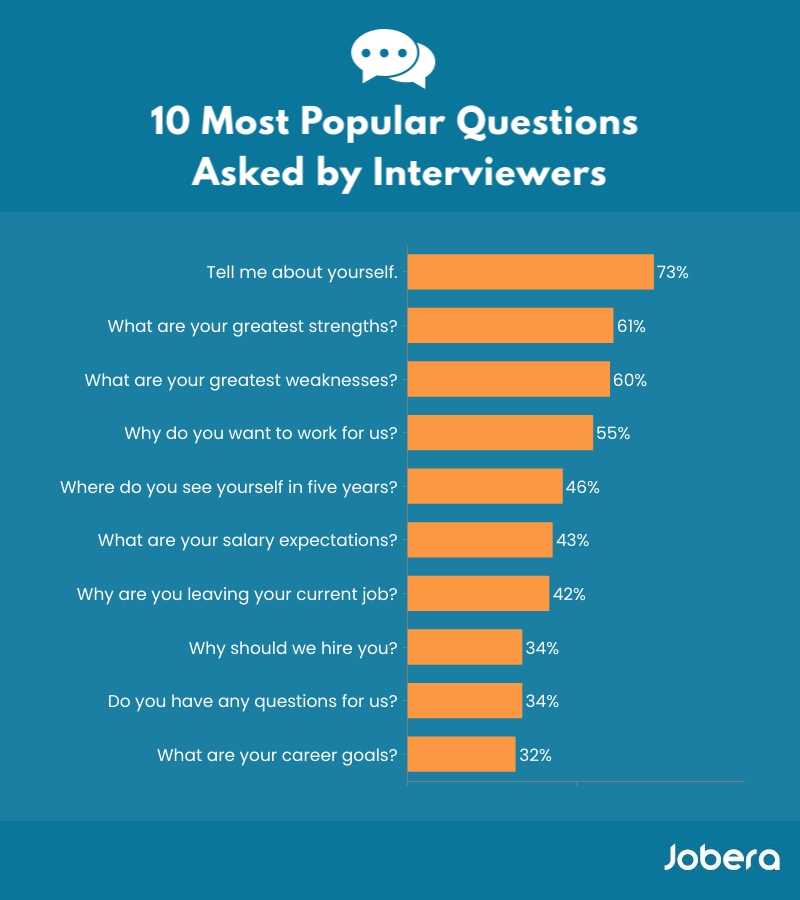

Behavioral Questions for Statisticians

During the selection process, employers often assess how a candidate will perform in real-world scenarios beyond just technical expertise. These types of inquiries help determine how individuals approach challenges, work in teams, manage pressure, and communicate complex ideas. It’s essential to showcase problem-solving skills, adaptability, and the ability to collaborate effectively with others.

Common questions often revolve around past experiences and behaviors in various situations. Responding to these types of questions with concrete examples allows candidates to highlight not only their technical proficiency but also their ability to work effectively under different conditions. Demonstrating how you’ve handled challenges in previous roles can help interviewers gauge your potential success in a new position.

Some of the most valuable insights can come from discussions about conflict resolution, dealing with tight deadlines, or adapting to shifting priorities. Interviewers may also ask how you handle setbacks or difficult situations, as these provide an opportunity to display your resilience and approach to problem-solving in the face of adversity.

Dealing with Complex Data Interpretation

Interpreting intricate datasets often requires a keen understanding of patterns, relationships, and anomalies. Whether you’re working with large volumes of information or many interrelated variables, the key lies in extracting meaningful insights that can drive decision-making. The ability to break down complex data and present it in an accessible manner is a critical skill in this field.

Here are some effective strategies for handling complex data interpretation:

- Data Cleaning: Before beginning the analysis, correct errors and inconsistencies in the dataset and decide how to handle missing values (for example, by removing or imputing them). This step can significantly affect the accuracy of your insights.

- Visualization: Use charts, graphs, or heatmaps to highlight key trends and relationships. Visual tools make it easier to identify patterns that may not be immediately obvious in raw data.

- Segment Analysis: Break the data into smaller, manageable sections. This approach allows for focused analysis of specific variables or groups, making it easier to draw conclusions.

- Comparative Analysis: Compare different sets of data to identify correlations or differences. This can reveal deeper insights about how various factors influence each other.
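Cleaning, segment analysis, and comparative analysis often follow one another in practice. The following sketch uses hypothetical records and only the standard library. The cleaning policy (dropping rows without a numeric value and removing exact duplicates) is one assumption among several reasonable choices; real data would usually be deduplicated on a record identifier rather than on field values.

```python
from collections import defaultdict
from statistics import fmean

# Hypothetical raw records showing common problems: inconsistent labels,
# a blank value, a non-numeric entry, and an exact duplicate row.
raw_records = [
    {"region": "North", "sales": "120"},
    {"region": " north", "sales": "135"},
    {"region": "South", "sales": ""},
    {"region": "South", "sales": "90"},
    {"region": "South", "sales": "n/a"},
    {"region": "North", "sales": "120"},
]


def clean(records):
    """Hypothetical helper: normalize labels, drop non-numeric rows and exact duplicates."""
    seen = set()
    cleaned = []
    for record in records:
        region = record["region"].strip().title()  # " north" -> "North"
        try:
            sales = float(record["sales"])
        except ValueError:
            continue  # drop missing or non-numeric values; imputation is an alternative
        key = (region, sales)
        if key in seen:
            continue  # exact duplicate after normalization
        seen.add(key)
        cleaned.append({"region": region, "sales": sales})
    return cleaned


def segment_means(records):
    """Hypothetical helper for segment analysis: mean sales per region."""
    groups = defaultdict(list)
    for record in records:
        groups[record["region"]].append(record["sales"])
    return {region: fmean(values) for region, values in groups.items()}


cleaned = clean(raw_records)
means = segment_means(cleaned)
print(means)
# Comparative analysis: how far apart are the segments?
print(means["North"] - means["South"])
```

The cleaned data keep three rows, giving mean sales of 127.5 for North and 90.0 for South, a difference of 37.5. With only one valid South observation, that comparison would deserve a strong caveat in any real report.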

In many cases, it’s also beneficial to employ statistical models and techniques to handle complex relationships between variables. By applying the right methodologies, you can enhance the reliability of your interpretations and provide a clearer view of the data’s significance.

Finally, effective communication of these insights is essential. Being able to explain complex findings to stakeholders with varying levels of expertise requires clarity and precision. Tailor your explanations to the audience, focusing on the most relevant insights while avoiding unnecessary technical jargon.

Answering Questions on Sampling Methods

When answering questions about how individuals or items are selected from a larger population, it is crucial to demonstrate a strong understanding of the techniques available. Collecting data with minimal bias is fundamental to drawing reliable conclusions from any study. These methods produce representative subsets that can then be analyzed to infer broader trends.

Here are key concepts to keep in mind when discussing various approaches to sampling:

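- Simple Random Sampling: Every member of the population has an equal chance of being selected. Because selection depends only on chance, this method avoids systematic selection bias and produces samples that are representative on average, although any single sample can still differ from the population by chance.

- Systematic Sampling: Items are selected at a fixed interval (every k-th item) from an ordered list, starting at a randomly chosen position. This approach is convenient when a full random draw is impractical, but care must be taken that the list order does not contain a periodic pattern that lines up with the interval and skews the results.

- Stratified Sampling: The population is divided into subgroups, or strata, based on a shared characteristic, and a random sample is drawn from each subgroup. With proportional allocation (sampling the same fraction from every stratum), each group is represented in the final sample in proportion to its size in the population.

- Cluster Sampling: The population is divided into clusters (for example, schools or city blocks), a random sample of whole clusters is chosen, and the units in those clusters are studied. This method is practical when the population is large and dispersed, but because units within a cluster tend to resemble each other, it usually yields less precise estimates than a simple random sample of the same size.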
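The four designs can be sketched in Python with the standard `random` module. The function names, the 100-unit population, the two strata, and the ten blocks of ten units are all hypothetical, chosen only to illustrate how each method selects units; a fixed seed makes the draws reproducible.

```python
import random

# Illustrative sketch: all function names are hypothetical, not library functions.


def simple_random_sample(population, n, rng):
    """Every unit has the same chance of selection."""
    return rng.sample(population, n)


def systematic_sample(population, n, rng):
    """Take every k-th unit from an ordered list, starting at a random offset."""
    if not 1 <= n <= len(population):
        raise ValueError("n must be between 1 and len(population)")
    k = len(population) // n  # sampling interval
    start = rng.randrange(k)  # random start within the first interval
    return population[start::k][:n]


def stratified_sample(population, stratum_of, fraction, rng):
    """Proportional allocation: sample the same fraction from every stratum."""
    if not 0 < fraction <= 1:
        raise ValueError("fraction must be in (0, 1]")
    strata = {}
    for unit in population:
        strata.setdefault(stratum_of(unit), []).append(unit)
    sample = []
    for members in strata.values():
        size = max(1, round(fraction * len(members)))  # at least one unit per stratum
        sample.extend(rng.sample(members, size))
    return sample


def cluster_sample(clusters, n_clusters, rng):
    """Randomly choose whole clusters, then keep every unit in the chosen ones."""
    chosen = rng.sample(sorted(clusters), n_clusters)  # sorted for reproducibility
    return [unit for name in chosen for unit in clusters[name]]


# Hypothetical population of 100 numbered units; units 0-69 form stratum "A", 70-99 stratum "B".
rng = random.Random(42)
population = list(range(100))
clusters = {f"block{i}": population[i * 10:(i + 1) * 10] for i in range(10)}

srs = simple_random_sample(population, 10, rng)
sys_sample = systematic_sample(population, 10, rng)
strat = stratified_sample(population, lambda u: "A" if u < 70 else "B", 0.1, rng)
clus = cluster_sample(clusters, 3, rng)
print(srs, sys_sample, strat, clus, sep="\n")
```

The stratified sample always contains 7 units from stratum A and 3 from stratum B, matching the 70/30 split in the population, whereas a simple random sample of 10 only matches that split on average.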

Each of these techniques has its advantages and drawbacks depending on the context of the data collection process. In response to questions, be ready to explain not only how each method works but also when it is appropriate to use each one. It is essential to demonstrate a deep understanding of how these methods impact the accuracy and relevance of the data collected.

Additionally, be prepared to discuss potential challenges such as sampling bias, which can occur if certain groups are underrepresented or overrepresented, and how to mitigate these risks to ensure valid results. Being able to identify the strengths and weaknesses of each method will showcase your analytical skills and knowledge of how to approach data collection effectively.

Tips for Answering Model Validation Queries

When discussing the process of evaluating the accuracy and effectiveness of a predictive model, it is important to showcase both theoretical knowledge and practical understanding. Model validation ensures that the predictions made by a model are reliable and can be generalized to new, unseen data. During discussions on this topic, focus on demonstrating your familiarity with different evaluation techniques and how they contribute to the reliability of the model’s results.

Consider the following strategies when addressing model validation inquiries:

- Cross-validation: The data are partitioned into k subsets (folds); the model is trained on k − 1 folds and tested on the remaining one, rotating until every fold has served once as the test set. Averaging the k test scores gives a more stable estimate of how the model performs on unseen data than a single split, and it helps detect overfitting when comparing candidate models.

- Holdout Method: Divide the dataset into two parts: one for training the model and the other for testing. This approach is simple, but the key is to ensure that the test set is large enough to produce reliable results.

- Confusion Matrix: This tool is crucial for evaluating classification models. It shows the number of true positives, true negatives, false positives, and false negatives, helping you understand the performance of the model more comprehensively.

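- ROC Curve and AUC: The receiver operating characteristic (ROC) curve plots the true positive rate (sensitivity) against the false positive rate (1 − specificity) across classification thresholds, showing the trade-off between the two. The area under the curve (AUC) summarizes the model’s ability to distinguish between classes: 0.5 corresponds to random guessing and 1.0 to perfect separation.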

- Bias-Variance Tradeoff: Discuss how bias and variance are balanced during model training. A model with high bias may underfit the data, while one with high variance may overfit. Understanding how to manage this tradeoff is critical in achieving a well-performing model.
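Several of these techniques are short enough to write from scratch, which is a good way to show you understand them. This standard-library sketch (hypothetical function names and toy data) generates k-fold splits, counts confusion-matrix cells, and computes AUC as the probability that a randomly chosen positive is scored above a randomly chosen negative. In practice, scikit-learn provides `KFold`, `confusion_matrix`, and `roc_auc_score` for the same tasks.

```python
# Illustrative sketch: all function names are hypothetical, not library functions.


def k_fold_indices(n, k):
    """Yield (train, test) index lists for k-fold cross-validation on n rows.

    Folds are contiguous and differ in size by at most one; shuffle the rows
    first if their order carries information (e.g. sorted by date or label).
    """
    if not 2 <= k <= n:
        raise ValueError("need 2 <= k <= n")
    fold_sizes = [n // k + (1 if i < n % k else 0) for i in range(k)]
    start = 0
    for size in fold_sizes:
        test = list(range(start, start + size))
        train = list(range(0, start)) + list(range(start + size, n))
        yield train, test
        start += size


def confusion_counts(y_true, y_pred):
    """Counts for a binary classifier where 1 is the positive class."""
    pairs = list(zip(y_true, y_pred))
    return {
        "tp": sum(t == 1 and p == 1 for t, p in pairs),
        "tn": sum(t == 0 and p == 0 for t, p in pairs),
        "fp": sum(t == 0 and p == 1 for t, p in pairs),
        "fn": sum(t == 1 and p == 0 for t, p in pairs),
    }


def roc_auc(y_true, scores):
    """AUC as P(random positive scores higher than random negative); ties count 1/2."""
    positives = [s for t, s in zip(y_true, scores) if t == 1]
    negatives = [s for t, s in zip(y_true, scores) if t == 0]
    if not positives or not negatives:
        raise ValueError("need at least one positive and one negative example")
    wins = sum(
        1.0 if p > q else 0.5 if p == q else 0.0
        for p in positives
        for q in negatives
    )
    return wins / (len(positives) * len(negatives))


# Hypothetical labels, predictions, and scores.
folds = list(k_fold_indices(10, 3))
print([len(test) for _, test in folds])  # [4, 3, 3]
print(confusion_counts([1, 0, 1, 1, 0], [1, 0, 0, 1, 1]))  # {'tp': 2, 'tn': 1, 'fp': 1, 'fn': 1}
print(roc_auc([0, 0, 1, 1], [0.1, 0.4, 0.35, 0.8]))  # 0.75
```

The pairwise definition of AUC is quadratic in the number of examples, which is fine for explaining the idea but slower than the sort-based computation libraries use on large datasets.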

Additionally, be ready to explain the concepts of overfitting and underfitting, as these are common issues when validating models. Overfitting occurs when the model is too complex and captures noise from the training data, while underfitting happens when the model is too simple to capture important patterns. Demonstrating how to use techniques like regularization or cross-validation to mitigate these issues can highlight your depth of knowledge.

Lastly, when discussing performance metrics, emphasize how you interpret and apply them in the context of the specific problem you are working to solve. Whether you are dealing with classification, regression, or clustering tasks, be prepared to justify why you selected a particular validation method and how it aligns with the goals of your model.

What Interviewers Expect from You

When you’re participating in a professional selection process, employers are not only looking for technical expertise but also for problem-solving abilities, communication skills, and your capacity to apply knowledge in real-world situations. It’s essential to demonstrate a combination of analytical thinking and practical experience that can help you navigate the complexities of the role. Beyond just answering inquiries, interviewers want to see how well you handle challenges and adapt to new information.

Here are key expectations interviewers typically have:

- Clarity in Communication: Being able to explain complex concepts in a simple, clear manner is vital. Whether discussing technical subjects or providing solutions, effective communication ensures your ideas are understood by a diverse audience.

- Problem-Solving Approach: Employers value candidates who can think critically and creatively. Demonstrating how you would approach a problem, break it down, and find a solution is often more important than simply having the right answer.

- Practical Application of Knowledge: Employers expect you to show how you can apply theoretical concepts in practical scenarios. Highlighting past experiences or providing examples where you’ve used your knowledge to solve real-world issues is a significant plus.

- Adaptability: Given the fast-paced nature of most industries, interviewers are looking for individuals who can adapt to change, learn new skills, and apply them quickly. Being open to learning and showcasing your flexibility can set you apart.

- Attention to Detail: Interviewers will assess your ability to spot inconsistencies or subtle details that could make a significant impact on the quality of your work. Precision is critical in many fields, especially when interpreting data or making decisions based on evidence.

- Teamwork and Collaboration: Even if you’re applying for a technical role, the ability to work well with others is often as important as individual contributions. Employers expect you to collaborate effectively, share insights, and be receptive to feedback.

Preparing for a professional evaluation involves more than just brushing up on technical skills. It’s important to understand that interviewers are looking for well-rounded individuals who can contribute to the team, bring new perspectives, and demonstrate a strong work ethic. Be ready to showcase how you meet these expectations and convey how you can add value to the organization.

Common Pitfalls in Statistical Interviews

When preparing for a selection process focused on technical expertise, many candidates inadvertently fall into certain traps. These pitfalls can stem from a lack of preparation, overconfidence, or misunderstanding the expectations of the role. Recognizing and avoiding these challenges can significantly improve your chances of success.

Overlooking Key Concepts

A common mistake is failing to grasp the fundamental principles of the subject matter. Even if advanced techniques are discussed, interviewers often expect candidates to understand core ideas thoroughly. Without this foundational knowledge, more complex problems can become difficult to approach effectively.

- Skipping Basic Theories: It’s easy to focus on more complex methods and forget the basics. Ignoring these core ideas can cause issues during discussions or when making decisions about specific tasks.

- Overcomplicating Solutions: In many cases, simple approaches are more effective. Applying complex methods when a straightforward solution is available may suggest confusion or a lack of understanding.

Failure to Communicate Effectively

Another frequent issue is the inability to clearly explain your thought process. It’s important to convey your approach step-by-step so the interviewer can follow your reasoning. Stumbling through explanations or skipping crucial steps may give the impression of uncertainty or lack of preparation.

- Unclear Explanations: Attempting to explain complex topics without breaking them down into digestible pieces can confuse your audience and negatively affect your evaluation.

- Rushing Through Problems: While time management is important, hurrying through questions without providing adequate explanations can make it seem like you’re not fully engaged or capable of thorough analysis.

Inadequate Real-World Application

Employers often look for how well you can apply theoretical knowledge to practical situations. Failing to provide relevant examples or demonstrate practical application during the discussion may lead to missed opportunities.

- Lack of Contextual Examples: Relying solely on textbook definitions or methods without offering examples from past experiences might suggest a lack of practical exposure.

- Not Showing Problem-Solving Skills: Interviewers typically want to see how you approach challenges and use available resources to solve them. Without showing your problem-solving abilities, your suitability for the role may be questioned.

Avoiding these common mistakes can help present yourself as a capable and well-prepared candidate. Remember to focus on clarity, depth of understanding, and the ability to apply knowledge in real-world contexts. Preparation and communication are key factors in achieving success.

Building Confidence for Statistical Interviews

Approaching a technical discussion with confidence can significantly enhance your performance. Gaining trust in your abilities and knowledge comes with preparation and a clear strategy. Cultivating self-assurance not only boosts your presentation but also helps you navigate complex challenges with ease.

Master the Basics

A strong foundation in the fundamental concepts provides the groundwork for success. When you understand the core principles, you can approach more complex scenarios with confidence. Reviewing the basics will allow you to tackle straightforward questions quickly and move on to more difficult challenges without hesitation.

| Topic | Confidence-Building Activity |
|---|---|
| Probability Theory | Practice simple problems and focus on deriving solutions step-by-step. |
| Data Visualization | Work on creating clear, concise visual representations of data. |
| Model Interpretation | Explain models and results in layman’s terms to ensure a full grasp of the concepts. |

Rehearse with Mock Scenarios

Simulating realistic situations where you are required to apply your knowledge can help reduce anxiety. Practicing with mock exercises or by reviewing case studies allows you to familiarize yourself with the types of challenges that may arise. This proactive approach builds resilience and prepares you to handle pressure during real discussions.

By focusing on the fundamentals, practicing regularly, and simulating real-life challenges, you can approach technical discussions with assurance. Confidence is rooted in preparation, and the more you engage with the material, the more comfortable you will feel in a high-pressure environment.