Achieving success in challenging assessments requires proficiency with the right set of applications. These programs not only streamline problem-solving but also enhance productivity and accuracy. Understanding how to effectively use these resources is a key aspect of preparation for any major evaluation.

From programming environments to cloud-based platforms, each tool offers unique features that support various aspects of the test. Mastery of these resources allows individuals to handle complex tasks more efficiently, making the evaluation process smoother and more manageable. In this section, we’ll explore some of the most effective resources available to ensure optimal performance during your assessment.

Tools for Data Science Final Exam Answers

Effective preparation for assessments requires a deep understanding of the most relevant programs and applications. Knowing how to leverage these platforms can make a significant difference in your ability to solve problems quickly and accurately. Below, we will look into essential resources that can assist in tackling complex questions with ease.

Programming Environments

Programming languages and environments play a vital role in completing complex calculations and models. Some widely used platforms include:

- Python: A versatile language, ideal for solving statistical and algorithmic challenges.

- R: Especially useful for statistical analysis and data visualization.

- MATLAB: Popular for matrix manipulation and numerical computations.

Cloud-Based Platforms

Cloud services provide on-demand computational resources and collaborative tools, which can be indispensable for handling large datasets and sharing insights. Notable platforms include:

- Google Colab: A hosted notebook environment for Python, with a free tier that includes GPU access (subject to availability) and notebook sharing for collaborative work.

- Amazon Web Services (AWS): A powerful platform offering a range of tools for computing, storage, and machine learning.

- Microsoft Azure: Provides advanced analytics and machine learning capabilities in a cloud environment.

By mastering these platforms, you can ensure a smooth and efficient experience when faced with complex tasks during your assessment.

Top Software for Data Science Exams

Having the right software at your disposal is crucial when preparing for an important assessment. These applications are designed to handle complex computations, create visualizations, and facilitate data manipulation with ease. Mastering the following software will greatly enhance your ability to complete tasks accurately and efficiently under time pressure.

Jupyter Notebook is one of the most popular choices, offering an interactive interface where you can combine code, equations, and visualizations in one place. It is particularly useful for running Python scripts and conducting detailed analyses. With its user-friendly environment, it is an essential tool for solving problems during assessments that require coding and model implementation.

RStudio provides a robust environment for statistical computing and graphical output. It is ideal for handling large datasets, performing regression analyses, and visualizing trends. For anyone focused on statistical tasks, mastering RStudio can significantly improve your efficiency during testing.

MATLAB is another powerful platform, particularly favored for its advanced mathematical and numerical capabilities. It excels in matrix manipulation, data analysis, and simulation tasks, making it a top choice for complex mathematical problems that require precise results.

These software packages not only simplify your workflow but also boost your problem-solving speed, ensuring you can focus on analysis rather than technical hurdles during your preparation.

How to Leverage Python in Exams

Python is one of the most effective programming languages when it comes to problem-solving during high-stakes assessments. Its simplicity and flexibility allow you to write and test algorithms, analyze datasets, and visualize results quickly. Understanding how to use Python efficiently can give you a significant advantage in tackling complex challenges under time constraints.

Here are some key strategies to make the most of Python during your assessment:

- Master Libraries: Familiarize yourself with essential libraries like Pandas for data manipulation, NumPy for numerical computations, and Matplotlib for creating visualizations.

- Write Reusable Functions: Create functions to handle repetitive tasks, saving valuable time while ensuring accuracy.

- Use Jupyter Notebooks: This environment allows you to write, test, and debug code all in one place, with immediate visual feedback for your analyses.

- Focus on Efficiency: When dealing with large datasets, prefer vectorized Pandas and NumPy operations over explicit Python loops. Faster code leaves more time to check your results.

By mastering these Python techniques, you will be better prepared to tackle any challenge during your assessment, turning complex problems into manageable tasks.
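
As a minimal sketch of the library, reusable-function, and efficiency strategies above, the snippet below wraps a repetitive group summary in a function and computes z-scores with one vectorized expression. The `summarize` helper, the column names, and the sample values are assumptions made up for illustration.

```python
import numpy as np
import pandas as pd


def summarize(df: pd.DataFrame, group_col: str, value_col: str) -> pd.DataFrame:
    """Return count, mean, and standard deviation of value_col per group."""
    return (
        df.groupby(group_col)[value_col]
        .agg(["count", "mean", "std"])
        .reset_index()
    )


# Hypothetical sample data; a real assessment would load its own dataset.
scores = pd.DataFrame(
    {
        "section": ["A", "A", "B", "B", "B"],
        "score": [70.0, 90.0, 60.0, 80.0, 100.0],
    }
)

# Vectorized z-score: one array expression instead of an explicit loop.
values = scores["score"].to_numpy()
scores["z"] = (values - np.mean(values)) / np.std(values)

summary = summarize(scores, "section", "score")
print(summary)
```

Because `summarize` takes the column names as arguments, the same function can be reused on any other grouped column without rewriting the aggregation.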

R Programming for Exam Success

R is a powerful language designed for statistical analysis and visualization, making it an essential tool when preparing for assessments that involve complex numerical tasks. Its flexibility and wide range of packages allow you to analyze and present data in ways that are both efficient and clear. By mastering R, you can quickly navigate through difficult questions and deliver precise results.

Here are some strategies for using R to boost your performance during your assessment:

- Data Manipulation with dplyr: This package simplifies data cleaning and transformation, allowing you to easily filter, select, and summarize your dataset.

- Visualization with ggplot2: One of the most powerful libraries for creating insightful graphs and charts, ggplot2 enables you to visualize trends and relationships quickly.

- Statistical Functions: R comes with a broad array of statistical tools, including hypothesis tests, regression analysis, and clustering techniques.

- Reproducibility with R Markdown: This format lets you combine code, text, and output in a single document, so your work is clearly documented and can be regenerated from source.

By incorporating these R techniques into your preparation, you will be well-equipped to solve problems effectively and present your findings with confidence.

Using SQL for Data Science Problems

Structured Query Language (SQL) is an essential skill when handling large volumes of organized information. It enables you to quickly extract, manipulate, and analyze data stored in relational databases. SQL’s ability to manage data efficiently makes it invaluable when solving complex problems that require working with vast amounts of structured records.

Key SQL Operations for Problem Solving

Mastering SQL can significantly improve your efficiency in analyzing data, especially when you’re tasked with retrieving and summarizing key information. Some essential SQL operations include:

| SQL Operation | Purpose |
|---|---|
| SELECT | Extracts specific data from one or more tables. |
| JOIN | Combines data from multiple tables based on related columns. |
| GROUP BY | Organizes data into groups for aggregation. |
| ORDER BY | Sorts the result set by one or more columns. |
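
The four operations in the table can be combined in a single query. The sketch below runs one against an in-memory SQLite database through Python's built-in `sqlite3` module. The `students` and `grades` tables and their contents are hypothetical.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript(
    """
    CREATE TABLE students (id INTEGER PRIMARY KEY, name TEXT);
    CREATE TABLE grades (student_id INTEGER, course TEXT, grade REAL);
    INSERT INTO students VALUES (1, 'Ada'), (2, 'Grace');
    INSERT INTO grades VALUES
        (1, 'stats', 90), (1, 'ml', 80),
        (2, 'stats', 70), (2, 'ml', 90);
    """
)

query = """
    SELECT s.name, AVG(g.grade) AS avg_grade   -- SELECT: choose columns
    FROM students AS s
    JOIN grades AS g ON g.student_id = s.id    -- JOIN: match related rows
    GROUP BY s.name                            -- GROUP BY: one row per student
    ORDER BY avg_grade DESC                    -- ORDER BY: highest average first
"""
rows = conn.execute(query).fetchall()
print(rows)  # [('Ada', 85.0), ('Grace', 80.0)]
conn.close()
```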

Optimizing SQL Queries

When faced with large datasets, optimizing your queries can save valuable time. Efficient queries are essential for getting quick results, especially when dealing with multiple joins or large tables. Some tips include:

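- Use Indexes: Index the columns that appear in WHERE, JOIN, and ORDER BY clauses. Indexes speed up retrieval at the cost of slightly slower writes.

- Limit Result Sets: Use LIMIT and WHERE clauses to focus on relevant data, reducing processing time.

- Avoid Unnecessary Subqueries: A correlated subquery may be re-evaluated for every outer row. Where that happens, rewriting it as a join often performs better.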

By mastering SQL, you can efficiently handle tasks that involve manipulating and analyzing large datasets, ensuring quick and accurate results during your assessment.
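
One way to check whether an index helps is to compare the query plan before and after creating it. The sketch below does this with SQLite's `EXPLAIN QUERY PLAN`. The `events` table, the index name, and the `plan` helper are assumptions for illustration, and the exact plan wording varies between SQLite versions.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE events (user_id INTEGER, amount REAL)")
conn.executemany(
    "INSERT INTO events VALUES (?, ?)",
    [(i % 100, float(i)) for i in range(1000)],
)


def plan(sql: str) -> str:
    """Return SQLite's query-plan description for a statement."""
    rows = conn.execute("EXPLAIN QUERY PLAN " + sql).fetchall()
    # The human-readable detail is the last column of each plan row.
    return " | ".join(row[-1] for row in rows)


sql = "SELECT amount FROM events WHERE user_id = 42 LIMIT 5"
before = plan(sql)  # full table scan
conn.execute("CREATE INDEX idx_events_user ON events (user_id)")
after = plan(sql)   # lookup through the new index

print("before:", before)
print("after: ", after)
conn.close()
```

The same habit applies to other databases: most provide an `EXPLAIN` variant that shows whether a filter is served by a scan or by an index.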

Machine Learning Libraries to Know

When tackling problems that require predictive modeling, classification, or clustering, machine learning libraries provide the necessary functions and tools to implement powerful algorithms. These libraries simplify complex tasks, allowing you to focus on the problem at hand rather than spending time on low-level coding. Understanding the most widely used libraries can enhance your ability to build and optimize models efficiently during any challenge.

Some of the most important libraries to familiarize yourself with include:

- scikit-learn: A versatile library that includes a wide range of algorithms for classification, regression, and clustering, as well as tools for model evaluation and selection.

- TensorFlow: A powerful framework often used for deep learning tasks, it allows for easy implementation of neural networks and large-scale machine learning models.

- PyTorch: Known for its flexibility and ease of use, PyTorch is another deep learning framework that offers dynamic computation graphs, making it ideal for research and production models.

- Keras: A high-level neural networks API that simplifies building and training deep learning models. It ships with TensorFlow as tf.keras and, since Keras 3, can also run on JAX and PyTorch backends.

- XGBoost: This library is highly effective for implementing gradient boosting algorithms, offering fast and accurate models for both classification and regression tasks.

By mastering these libraries, you will be able to quickly build, test, and refine models to solve complex problems with precision and efficiency.
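
The build, test, and refine loop typically starts with a baseline like the one sketched below, which uses scikit-learn's bundled iris dataset. The choice of logistic regression, the 25% test split, and the random seed are assumptions for illustration, not recommendations from this article.

```python
from sklearn.datasets import load_iris
from sklearn.linear_model import LogisticRegression
from sklearn.metrics import accuracy_score
from sklearn.model_selection import train_test_split

X, y = load_iris(return_X_y=True)

# Hold out a stratified test set so the score reflects unseen data.
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.25, random_state=0, stratify=y
)

model = LogisticRegression(max_iter=1000)
model.fit(X_train, y_train)

accuracy = accuracy_score(y_test, model.predict(X_test))
print(f"held-out accuracy: {accuracy:.2f}")
```

Swapping in another estimator only changes the `model = ...` line, which makes it quick to compare candidates under time pressure.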

Data Visualization Tools for Exams

Effective visual representation of information is key when presenting findings or interpreting complex datasets. Using the right software to create charts, graphs, and other visual aids can significantly enhance your ability to communicate insights clearly and concisely. Mastering these platforms will allow you to transform raw numbers into meaningful visual formats, making it easier to convey your analysis during any assessment.

Popular Visualization Platforms

There are several programs and libraries that can help you quickly visualize results, from basic charts to intricate interactive dashboards:

- Matplotlib: A comprehensive Python library for creating static, animated, and interactive visualizations. It’s ideal for producing high-quality graphs like line plots, bar charts, and histograms.

- Seaborn: Built on top of Matplotlib, Seaborn simplifies the creation of complex visualizations, such as heatmaps and violin plots, while providing beautiful default themes.

- Tableau: A powerful and user-friendly platform known for its drag-and-drop functionality. It’s particularly effective for building interactive dashboards and data storytelling.

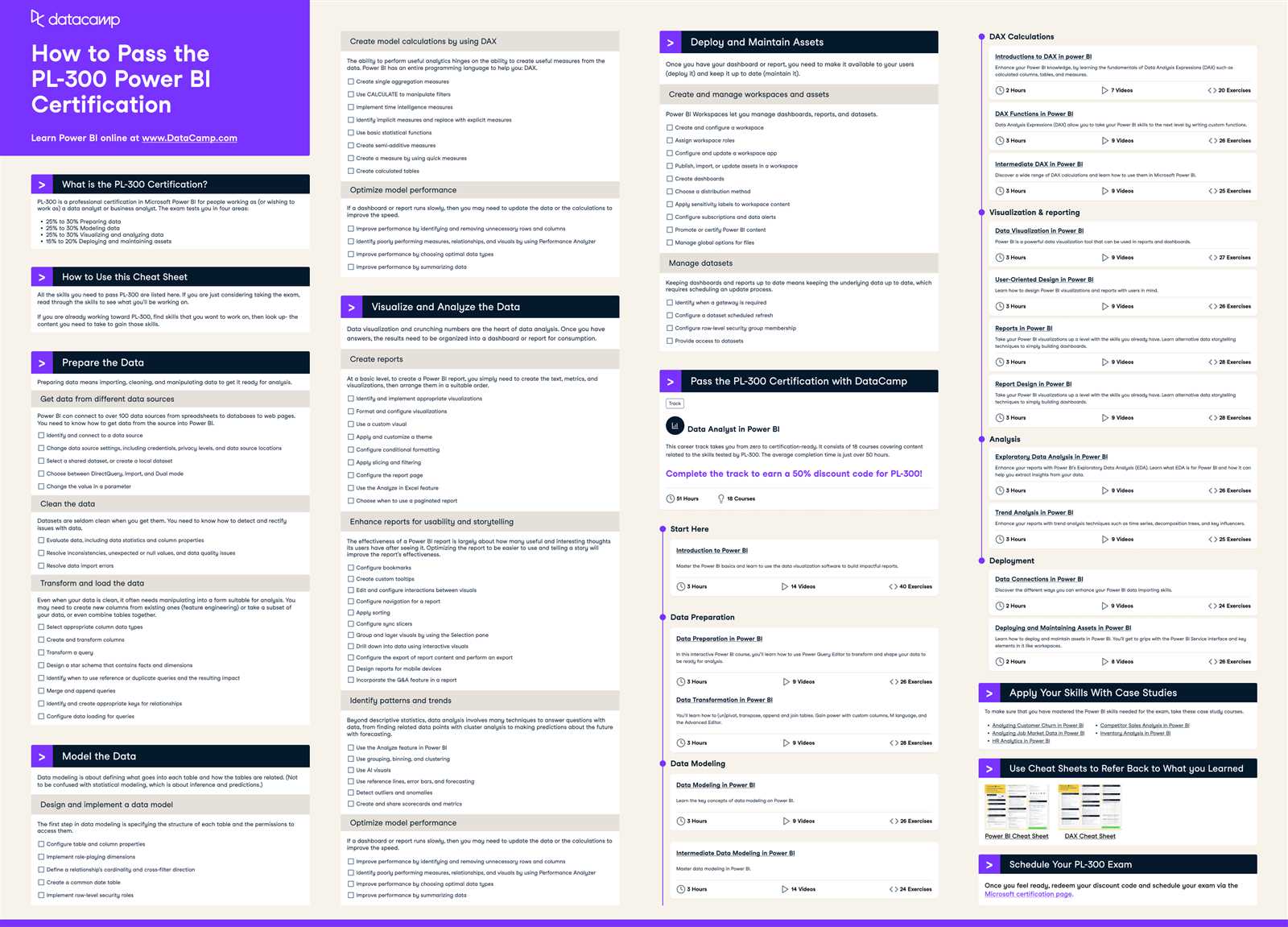

- Power BI: A Microsoft tool that offers similar functionality to Tableau, with deep integration into other Microsoft products, allowing for easy data importation and report generation.

Creating Effective Visuals

To create effective visuals that enhance your analysis, keep these tips in mind:

- Choose the Right Chart Type: Select a chart or graph that best represents the data you are analyzing, whether it’s a line chart for trends or a pie chart for proportions.

- Use Color Effectively: Color can help highlight key insights but should be used sparingly to avoid confusion. Stick to a clear, consistent color scheme.

- Keep it Simple: Avoid cluttering visuals with excessive details. Focus on key trends and insights to make your message clear.

By mastering these platforms and techniques, you will be able to present complex information in a way that is visually appealing and easy to understand, greatly improving your performance during assessments.
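
The tips above can be sketched with Matplotlib: a line chart for a trend, a single color, and no unnecessary decoration. The sales figures, labels, and output file name are hypothetical.

```python
import tempfile
from pathlib import Path

import matplotlib

matplotlib.use("Agg")  # non-interactive backend, so the script runs without a display
import matplotlib.pyplot as plt

months = ["Jan", "Feb", "Mar", "Apr"]
sales = [120, 135, 150, 170]  # hypothetical values

fig, ax = plt.subplots(figsize=(6, 3))
ax.plot(months, sales, marker="o", color="tab:blue")  # line chart suits a trend
ax.set_title("Monthly sales")
ax.set_xlabel("Month")
ax.set_ylabel("Units sold")

# Keep it simple: drop the top and right borders.
for side in ("top", "right"):
    ax.spines[side].set_visible(False)

fig.tight_layout()
out_path = Path(tempfile.gettempdir()) / "monthly_sales.png"
fig.savefig(out_path, dpi=150)
print(f"saved {out_path}")
```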

Cloud Platforms for Data Science Work

Cloud platforms offer scalable, flexible environments that enable users to run complex calculations and store large amounts of information without the need for on-premises infrastructure. These platforms provide powerful computational resources, access to a wide range of libraries, and the ability to collaborate with others in real time. Leveraging the cloud can significantly streamline workflows, especially when working with large datasets or running resource-intensive algorithms.

Some of the most popular cloud platforms that are widely used in analytics and modeling include:

- Google Cloud Platform (GCP): Known for its BigQuery service, GCP offers powerful tools for handling large-scale analytics, as well as machine learning services such as AutoML.

- Amazon Web Services (AWS): AWS provides a comprehensive suite of services, including EC2 instances for computational power, S3 for storage, and SageMaker for building and deploying models.

- Microsoft Azure: With its extensive machine learning tools and integration with other Microsoft services, Azure is a robust option for deploying and managing models at scale.

- IBM Cloud: Known for its AI and machine learning capabilities, IBM Cloud offers various tools for model development, data storage, and collaboration.

By using these cloud platforms, you can take advantage of virtually unlimited resources, enhance collaboration with teams, and scale projects efficiently, making them a key asset for tackling challenging analytical tasks.

Excel Tips for Exam Preparation

Excel is a powerful tool for organizing, analyzing, and visualizing information. It can streamline the process of preparing for assessments by allowing you to manage large datasets, perform complex calculations, and quickly analyze results. Mastering key features and shortcuts in Excel can save time and help you focus on problem-solving, making your preparation more efficient.

Essential Excel Features to Know

Here are some of the most useful Excel features that can enhance your preparation:

- Pivot Tables: Ideal for summarizing large datasets, pivot tables allow you to quickly analyze and compare data in various ways, helping you identify trends and patterns.

- Conditional Formatting: Use conditional formatting to highlight important values or trends in your data, making it easier to spot key insights at a glance.

- Formulas and Functions: Mastering basic formulas (SUM, AVERAGE, IF) and more advanced ones (VLOOKUP, INDEX-MATCH) can automate calculations and reduce errors in your analysis.

Speeding Up Your Workflow

To maximize your efficiency while using Excel, try incorporating these tips:

- Keyboard Shortcuts: Learning shortcuts such as Ctrl + C for copy, Ctrl + V for paste, and Ctrl + Z for undo can save you significant time while working in Excel.

- Data Validation: Use data validation to restrict the type of data that can be entered in cells, ensuring accuracy and reducing errors during input.

- Named Ranges: Assigning named ranges to specific cells or ranges of data makes it easier to reference and manage information throughout your workbook.

With these features and strategies, you can increase your productivity and effectiveness, making Excel a valuable asset in your study sessions.
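
If you want to cross-check an Excel pivot table in code, Pandas offers a comparable operation. The sketch below is only an analogy, with hypothetical region and revenue data; it does not read or write Excel files.

```python
import pandas as pd

# Hypothetical sales records, laid out like rows in a worksheet.
sales = pd.DataFrame(
    {
        "region": ["North", "North", "South", "South"],
        "quarter": ["Q1", "Q2", "Q1", "Q2"],
        "revenue": [100, 150, 80, 120],
    }
)

# Rows = region, columns = quarter, values = summed revenue.
pivot = sales.pivot_table(
    index="region", columns="quarter", values="revenue", aggfunc="sum"
)
print(pivot)
```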

Key Online Resources for Practice

Practicing with real-world problems and honing your skills through interactive platforms can make a significant difference in preparation. The availability of online resources has made it easier than ever to access practice questions, tutorials, and exercises that can help improve your problem-solving abilities. These platforms not only offer hands-on experience but also provide an opportunity to test your knowledge and track your progress over time.

Top Platforms for Skill Enhancement

Here are some highly regarded websites where you can practice and sharpen your skills:

- Kaggle: A well-known platform that offers datasets, competitions, and coding challenges. It’s perfect for practicing analytical skills and collaborating with a community of like-minded individuals.

- HackerRank: Focuses on coding challenges across various domains, including algorithms, data manipulation, and problem-solving. It’s ideal for improving technical proficiency.

- LeetCode: Specializes in algorithmic problems that can be tackled through coding, making it a great place to practice logical thinking and programming techniques.

- Coursera: Offers online courses and practice exercises from top universities and companies, helping you gain practical knowledge in a structured way.

Interactive Learning Communities

Joining active communities where you can interact with other learners and professionals can also help reinforce your learning:

- Stack Overflow: A popular platform for asking questions and finding solutions related to coding and technical challenges.

- Reddit: Subreddits like r/learnprogramming and r/MachineLearning provide discussions, resources, and advice from a diverse group of learners and professionals.

These online resources offer a wealth of opportunities to practice, refine, and apply your knowledge, which can make a significant impact on your preparation.

Text Mining Tools for Data Exams

Extracting valuable insights from large sets of unstructured text is a crucial skill in various assessments. Advanced software can help you process and analyze text efficiently, turning raw information into actionable knowledge. These applications assist in identifying patterns, categorizing information, and deriving key insights, making them indispensable for tackling challenges related to text-heavy tasks in evaluations.

Popular Applications for Text Processing

Here are some widely used platforms designed to help you analyze and mine textual data:

- NLTK (Natural Language Toolkit): A comprehensive library for Python that provides tools for processing human language data, including tokenization, stemming, and part-of-speech tagging.

- spaCy: An open-source library offering industrial-strength natural language processing (NLP) capabilities, including named entity recognition and syntactic parsing.

- Gensim: A library focused on topic modeling and document similarity analysis, widely used for unsupervised learning tasks in text mining.

- TextBlob: A simple Python library built for text processing tasks such as sentiment analysis, part-of-speech tagging, and noun phrase extraction.

Key Features to Look for

When selecting the right platform for text analysis, consider these important features:

| Feature | Description |
|---|---|
| Text Preprocessing | Tools for cleaning and preparing text data, including removing stopwords, normalizing text, and tokenizing sentences. |
| Sentiment Analysis | Identifying the sentiment of the text, which can be crucial in understanding the tone or emotions expressed in the content. |
| Named Entity Recognition (NER) | Detecting named entities, such as people, places, organizations, and dates, to extract structured information from unstructured text. |
| Topic Modeling | Uncovering hidden thematic structures within a collection of documents using unsupervised learning techniques. |

By using these resources, you can gain a deeper understanding of textual data, which will help you approach text-related tasks more efficiently and accurately during your preparation.
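
To make the text preprocessing step concrete, here is a minimal sketch using only the Python standard library. The tiny stopword list and the `preprocess` helper are assumptions for illustration; libraries such as NLTK and spaCy provide far more complete tokenizers and stopword lists.

```python
import re
from collections import Counter

# Tiny illustrative stopword list; real libraries ship much larger ones.
STOPWORDS = {"the", "a", "an", "and", "of", "to", "is", "in", "it"}


def preprocess(text: str) -> list[str]:
    """Lowercase the text, split it into word tokens, and drop stopwords."""
    tokens = re.findall(r"[a-z']+", text.lower())
    return [t for t in tokens if t not in STOPWORDS]


doc = "The model is simple, and the model is fast to train."
tokens = preprocess(doc)
counts = Counter(tokens)

print(tokens)  # ['model', 'simple', 'model', 'fast', 'train']
print(counts.most_common(1))  # [('model', 2)]
```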

Automating Tasks with Jupyter Notebooks

Automating repetitive tasks and processes is a key component of efficient workflows. Using interactive coding environments, you can write and execute code in a structured format, allowing for quick testing, debugging, and iteration. This approach streamlines tasks and makes complex procedures more manageable, especially when working with large datasets or computational models.

Jupyter Notebooks offer a versatile platform for automating a wide range of tasks. Because a notebook combines code, visualizations, and text in one document, it supports both running each step and documenting it. This makes it easier to revisit, modify, and optimize code when necessary.

Advantages of Jupyter Notebooks for Automation

- Code Execution and Documentation: You can write and execute code in a cell-by-cell manner, making it easy to document every step of your automation process.

- Visualization Integration: Jupyter allows you to seamlessly integrate plots and charts, enabling you to visualize the output of automated tasks, such as statistical analysis or model performance.

- Reproducibility: A notebook records code, output, and commentary together, so others can rerun your workflow. Results replicate reliably only when the cells are run top to bottom in an environment with the same package versions.

- Integration with Libraries: The platform supports various libraries and languages, providing flexibility in automating tasks such as data cleaning, manipulation, and even machine learning model deployment.

Common Automated Tasks in Jupyter Notebooks

- Data Cleaning: Automating the process of removing missing values, formatting data, and detecting outliers.

- Model Training: Automating the process of training and evaluating machine learning models by running pre-defined code pipelines.

- Batch Processing: Running repetitive tasks over large datasets, such as parsing or transforming files, without manual intervention.

- Reporting: Generating automated reports with visualizations and summaries based on analysis, saving time and reducing errors.

By incorporating Jupyter Notebooks into your workflow, you can automate complex processes and focus on higher-level problem-solving, boosting both efficiency and productivity.
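
As a sketch of the batch processing task listed above, the following code could live in a single notebook cell. It applies one cleaning function to every CSV file in a folder and reports how many rows were kept. The `clean_file` helper, the folder layout, and the sample files are hypothetical, and the temporary directory stands in for a real data folder.

```python
import csv
import tempfile
from pathlib import Path


def clean_file(src: Path, dst: Path) -> int:
    """Copy src to dst, skipping rows with an empty field. Return rows kept."""
    kept = 0
    with src.open(newline="") as fin, dst.open("w", newline="") as fout:
        reader = csv.reader(fin)
        writer = csv.writer(fout)
        writer.writerow(next(reader))  # copy the header unchanged
        for row in reader:
            if all(field.strip() for field in row):
                writer.writerow(row)
                kept += 1
    return kept


with tempfile.TemporaryDirectory() as tmp:
    raw = Path(tmp) / "raw"
    out = Path(tmp) / "clean"
    raw.mkdir()
    out.mkdir()
    # Hypothetical input files; the second row of a.csv has a missing value.
    (raw / "a.csv").write_text("id,value\n1,10\n2,\n3,30\n")
    (raw / "b.csv").write_text("id,value\n4,40\n")

    # One pass over the folder, with no manual intervention per file.
    report = {p.name: clean_file(p, out / p.name) for p in sorted(raw.glob("*.csv"))}

print(report)  # {'a.csv': 2, 'b.csv': 1}
```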

Using Git for Version Control

Version control is essential for managing and tracking changes in coding projects, especially when multiple contributors are involved. By allowing developers to monitor alterations to files, revert to previous versions, and efficiently merge contributions, version control systems streamline collaboration and ensure project integrity.

Git is a distributed version control system that enables teams to track and manage code changes effectively. It provides a robust framework for organizing files, handling updates, and resolving conflicts. Whether working alone or as part of a team, Git offers significant advantages in maintaining consistency across projects.

Benefits of Using Git

- Track Changes: Git records every committed change, providing a complete history that includes who made each change and when.

- Branching and Merging: Git allows you to create branches for experimental work, which can be merged back into the main project once the changes are reviewed and finalized.

- Collaboration: Git simplifies collaboration by enabling multiple users to work on the same project without overwriting each other’s work.

- Backup and Recovery: By maintaining local and remote repositories, Git provides redundancy and protection against data loss.

Key Git Commands for Version Control

- git init: Initializes a new Git repository in your project directory.

- git add: Stages changes to be committed, allowing you to specify which modifications to track.

- git commit: Saves your changes to the repository with a descriptive message.

- git push: Uploads local commits to a remote repository, making them available to collaborators.

- git pull: Fetches changes from the remote repository and integrates them into your local environment.

By incorporating Git into your workflow, you can ensure seamless version management, promote collaboration, and enhance overall project efficiency. Whether you’re working with large teams or solo, mastering Git is an essential skill for effective code management.
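
The local part of this workflow (`git init`, `git add`, `git commit`) can be scripted. The sketch below drives the commands from Python in a temporary directory. It assumes `git` is installed, uses a throwaway identity so the commit works on an unconfigured machine, and leaves out `git push` and `git pull` because they need a remote repository. The file name and commit message are hypothetical.

```python
import shutil
import subprocess
import tempfile
from pathlib import Path


def git(*args: str, cwd: Path) -> str:
    """Run a git command in cwd and return its standard output."""
    cmd = [
        "git",
        # Throwaway identity and settings, for this demo only.
        "-c", "user.name=Example User",
        "-c", "user.email=user@example.com",
        "-c", "commit.gpgsign=false",
        *args,
    ]
    result = subprocess.run(cmd, cwd=cwd, check=True, capture_output=True, text=True)
    return result.stdout


history = []
if shutil.which("git"):  # skip quietly when git is not installed
    with tempfile.TemporaryDirectory() as tmp:
        repo = Path(tmp)
        git("init", cwd=repo)                              # create the repository
        (repo / "analysis.py").write_text("print('hello')\n")
        git("add", "analysis.py", cwd=repo)                # stage the new file
        git("commit", "-m", "Add analysis script", cwd=repo)
        history = git("log", "--oneline", cwd=repo).splitlines()

print(history)
```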

Important Libraries for Exam Efficiency

When preparing for tests, especially in a technical or programming environment, using the right software packages can significantly boost your productivity and accuracy. Libraries offer pre-built functions and routines that can simplify complex tasks, saving time and reducing the potential for errors. By integrating these resources into your workflow, you can enhance efficiency and streamline the problem-solving process.

Here are some key libraries that can be incredibly useful when working through assignments and practice problems:

| Library | Primary Use | Best For |
|---|---|---|
| NumPy | Numerical operations and array handling | Mathematical and statistical tasks |
| Pandas | Data manipulation and analysis | Handling structured datasets |
| Matplotlib | Data visualization | Creating charts and graphs for insights |
| Seaborn | Statistical plotting | Advanced visualizations and relationships |
| scikit-learn | Machine learning algorithms | Building models for classification and regression |

These libraries provide essential functionality that can save valuable time during assessments. Whether you are working with data structures, running statistical models, or visualizing results, they ensure that you have the tools needed to tackle complex tasks efficiently. Using these pre-built functions allows you to focus on understanding core concepts rather than spending time on low-level implementation details.

Optimizing Data Cleaning Processes

Efficiently preparing and organizing information is crucial for achieving accurate results in any technical analysis. Cleaning data involves removing inaccuracies, handling missing values, and ensuring consistency across datasets. Optimizing these tasks not only saves time but also enhances the reliability of the outcomes. By employing best practices and utilizing the right approaches, you can streamline the entire process, reducing potential errors and enhancing the quality of your work.

The following strategies can significantly improve the efficiency of your cleaning workflow:

- Automating Missing Value Handling: Identifying and filling in missing data automatically can speed up the process and reduce human error. Approaches like imputation or forward/backward filling can be helpful.

- Standardizing Formats: Ensuring uniformity in units, date formats, and text values makes the dataset more manageable and less prone to inconsistencies.

- Removing Duplicates: Identifying and eliminating duplicate entries can prevent skewed analysis and unnecessary repetition in the dataset.

- Outlier Detection: Detecting and addressing outliers is important for ensuring that extreme values do not distort the final results.

- Efficient Data Filtering: Filtering out irrelevant rows or columns can speed up processing and improve clarity during analysis.

By focusing on these core practices, the time spent on cleaning tasks can be significantly reduced, allowing for more attention to other aspects of your project. In addition, employing a systematic approach ensures that the data is consistent, reliable, and ready for advanced analysis.
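
The strategies above can be chained into one short Pandas pipeline. The sketch below is one possible ordering under stated assumptions: the city and temperature records are hypothetical, missing values are filled with the median, and outliers are defined by the common 1.5 × IQR rule. Other imputation and outlier rules may suit a given dataset better.

```python
import pandas as pd

# Hypothetical raw records with typical problems: inconsistent text,
# a duplicate, a missing value, and an implausible reading.
raw = pd.DataFrame(
    {
        "city": [" Paris", "paris", "Lyon", "Nice", "Lille", "Brest", "Metz"],
        "date": ["2024-01-05", "2024-01-05", "2024-01-06", "2024-01-07",
                 "2024-01-08", "2024-01-09", "2024-01-10"],
        "temp_c": [7.0, 7.0, 8.0, None, 9.0, 10.0, 95.0],
    }
)

clean = raw.copy()

# Standardize formats: trim and title-case text, parse dates.
clean["city"] = clean["city"].str.strip().str.title()
clean["date"] = pd.to_datetime(clean["date"])

# Remove duplicates (the two Paris rows are now identical).
clean = clean.drop_duplicates().reset_index(drop=True)

# Handle missing values: fill with the median, which resists outliers.
clean["temp_c"] = clean["temp_c"].fillna(clean["temp_c"].median())

# Detect outliers with the 1.5 * IQR rule and filter them out.
q1, q3 = clean["temp_c"].quantile([0.25, 0.75])
iqr = q3 - q1
in_range = clean["temp_c"].between(q1 - 1.5 * iqr, q3 + 1.5 * iqr)
clean = clean[in_range].reset_index(drop=True)

print(clean)
```

Standardizing before deduplicating matters here: the two Paris rows only become exact duplicates once the text has been normalized.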

Best Practices for Exam Time Management

Effective management of time during any assessment is essential for maximizing performance. With limited hours available, it’s crucial to allocate time wisely, prioritize tasks, and stay focused to ensure each section is completed accurately. A strategic approach helps avoid last-minute panic and allows you to address each challenge systematically. By using proven techniques, you can boost efficiency and reduce stress throughout the process.

The following time management strategies can enhance your performance during assessments:

1. Prioritize and Plan

Before starting, quickly review the questions or tasks, marking those that seem more difficult or time-consuming. Allocate more time for complex problems, while leaving simpler questions for later. Creating a brief plan helps you tackle the assessment in an organized manner, ensuring no part is neglected.

2. Set Time Limits for Each Task

Break down the total time available into segments, setting a specific time limit for each section. This prevents you from getting stuck on one task and ensures that all parts of the assessment are completed. If you’re unable to solve a problem within the allotted time, move on and revisit it later if time permits.
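
One simple way to set these limits is to split the time in proportion to each section's points after holding back a few minutes for review. The sketch below assumes a hypothetical 120-minute exam with three sections; the `allocate_minutes` helper and the 12-minute reserve are illustrative choices only.

```python
def allocate_minutes(
    total_minutes: int, points: dict[str, int], reserve_minutes: int = 12
) -> dict[str, float]:
    """Split the time left after the review reserve in proportion to points."""
    usable = total_minutes - reserve_minutes
    total_points = sum(points.values())
    return {name: round(usable * p / total_points, 1) for name, p in points.items()}


# Hypothetical exam: 120 minutes, three sections worth 100 points in total.
plan = allocate_minutes(120, {"sql": 20, "modeling": 50, "visualization": 30})
print(plan)  # {'sql': 21.6, 'modeling': 54.0, 'visualization': 32.4}
```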

By adopting these strategies, you can approach your tasks with a clear and focused mindset, ensuring that time is used efficiently and nothing is left undone.