This section covers the core concepts of statistics typically tested in Chapter 8: data distributions, probability, and hypothesis testing. Mastering these principles is essential for solving the complex questions that often appear in assessments.

Analyzing problems step by step is crucial for avoiding common pitfalls. By breaking down each task methodically, you can approach challenges with confidence, ensuring clarity and precision in your approach. Understanding the structure of each problem will guide you to the correct conclusion without unnecessary confusion.

Reviewing common strategies and methods helps you improve your performance. Success depends on focusing on the key concepts and applying them correctly. This guide identifies the most effective ways to approach these problems so that you are prepared for the question types you are likely to meet.

Key Concepts in Chapter 8 Statistics

Understanding the essential principles is critical for mastering the content of this section. The material focuses on analyzing data sets, interpreting results, and applying mathematical models to solve real-world problems. These concepts form the foundation for successfully navigating through more complex tasks and tests.

One of the core ideas explored here is data distribution. This involves understanding how values are spread across a set, and how that distribution can impact conclusions. Knowing the different types of distributions helps in choosing the right approach to solve problems effectively.

Another important aspect is probability theory, which is essential for predicting outcomes and making informed decisions based on statistical evidence. By mastering probability rules and their applications, you can better assess the likelihood of various events and refine your analytical skills.

Hypothesis testing is also a focal point, helping to determine the validity of assumptions based on sample data. Being able to test hypotheses properly is vital in making data-driven decisions in various fields, from research to business analytics.

Understanding Statistical Test Methods

Mastering the various methods used to analyze data is crucial for drawing accurate conclusions. These techniques are designed to evaluate hypotheses, assess relationships, and determine the likelihood of specific outcomes. Each method provides a structured approach to solving a particular type of problem efficiently.

Types of Methods

Among the most common approaches are parametric and non-parametric methods. Parametric methods assume a certain distribution in the data, such as normality, and use parameters like mean and variance for analysis. Non-parametric methods, on the other hand, do not rely on such assumptions and are often used when the data does not meet the requirements for parametric testing.

Choosing the Right Approach

It is essential to choose the appropriate method depending on the nature of the data and the objective of the analysis. For example, when comparing two groups, t-tests or Mann-Whitney U tests may be suitable. On the other hand, when analyzing relationships between variables, correlation or regression techniques might be more effective.
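As a rough illustration of the two-group case, the sketch below computes a Welch t statistic (a parametric choice) and a Mann-Whitney U statistic (a non-parametric choice) for the same pair of samples. The data values and the function names `welch_t` and `mann_whitney_u` are made up for this example, and only the test statistics are computed, not p-values.

```python
from math import sqrt
from statistics import mean, variance

def welch_t(a, b):
    """Welch's t statistic for two independent samples (variances may differ)."""
    se = sqrt(variance(a) / len(a) + variance(b) / len(b))
    return (mean(a) - mean(b)) / se

def mann_whitney_u(a, b):
    """U statistic for sample a: count pairs where a beats b; ties count 0.5."""
    return sum(1.0 if x > y else 0.5 if x == y else 0.0 for x in a for y in b)

# Hypothetical scores for two small groups
group_a = [12, 15, 14, 10, 13]
group_b = [18, 20, 17, 16, 19]

t_stat = welch_t(group_a, group_b)
u_stat = mann_whitney_u(group_a, group_b)
print(f"Welch t = {t_stat:.2f}, Mann-Whitney U = {u_stat}")
```

The t statistic relies on means and variances, so it is most trustworthy when the data are roughly normal. The U statistic uses only the ordering of the values, which is why it remains usable when that assumption is doubtful.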

Common Mistakes in Chapter 8 Tests

When working through problems in this section, students often make a few key errors that can lead to incorrect conclusions. These mistakes, though common, can be avoided with careful attention to detail and a solid understanding of the material. Recognizing and addressing these pitfalls will improve both accuracy and efficiency during assessments.

Misunderstanding Problem Requirements

One of the most frequent issues is failing to fully comprehend the problem’s requirements before starting the solution. It’s easy to overlook key details that dictate the correct approach, leading to errors in calculation or interpretation. Common issues include:

- Confusing different types of variables and their relationships.

- Misinterpreting instructions about what needs to be calculated.

- Overlooking conditions that affect the choice of method.

Calculation and Conceptual Errors

Even with a correct understanding of the problem, calculation mistakes are another major stumbling block. These errors can occur at any stage of the process and often result from:

- Rushing through complex steps without double-checking work.

- Confusing formulas or incorrectly applying them to the data.

- Forgetting to adjust for specific conditions, such as sample size or distribution assumptions.

Taking the time to review both the instructions and your work is crucial for avoiding these mistakes and ensuring that every aspect of the problem is handled correctly.

How to Approach Statistics Test Questions

Approaching complex problems effectively requires a structured and logical mindset. When faced with challenging questions, it’s important to break them down into smaller, more manageable parts. This allows you to identify the underlying principles and apply the correct methods with confidence.

Read the instructions carefully before diving into calculations. Often, questions contain key information that guides you on how to handle the data or choose the appropriate technique. Pay attention to the details, such as sample size, variable types, and specific requirements that might affect your approach.

Plan your approach step by step. Start by identifying what is being asked, then determine which formulas or concepts are relevant to solving the problem. This helps to avoid confusion and ensures that you are addressing the correct issue from the start.

Breaking Down Complex Statistical Problems

Solving intricate problems requires a methodical approach that simplifies each component. By deconstructing the problem into smaller, more manageable steps, you can identify the core issues and apply the right techniques to achieve an accurate solution. This process not only helps in reducing errors but also improves overall efficiency when tackling difficult questions.

Step-by-Step Approach

Start by analyzing the given information and identifying the key elements. This might include the data provided, what needs to be calculated, and any conditions or assumptions that influence the solution. A step-by-step breakdown ensures that no critical details are overlooked.

Organizing Data for Clarity

Properly organizing the data can significantly ease the problem-solving process. Grouping similar pieces of information together and setting up clear tables or charts will help you visualize relationships and trends more effectively.

| Data Element | Value | Unit of Measure |
|---|---|---|
| Sample Mean | 50 | Units |
| Sample Size | 30 | Participants |
| Standard Deviation | 10 | Units |

By organizing the data in this way, it becomes easier to apply relevant formulas and compare values in a more systematic manner. This approach reduces confusion and enhances the accuracy of your results.
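Once the values are laid out like this, a derived quantity such as the standard error of the mean follows directly. The snippet below is a minimal sketch using the figures from the table; the variable names are illustrative.

```python
from math import sqrt

# Values taken from the table above
sample_mean = 50.0
sample_size = 30
sample_sd = 10.0

# Standard error of the mean: SD divided by the square root of the sample size
standard_error = sample_sd / sqrt(sample_size)
print(f"Standard error: {standard_error:.3f}")
```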

Tips for Solving Probability Questions

Solving probability-related problems requires both a solid understanding of the core concepts and a logical approach to handling various scenarios. The key to success is breaking down each question, focusing on the conditions provided, and applying the right principles to calculate the likelihood of specific events occurring.

Identify the Type of Problem

Begin by determining whether the question involves independent or dependent events. Independent events do not affect each other’s outcomes, while dependent events are influenced by the occurrence of a previous event. Recognizing this distinction is crucial for applying the correct formula.

Use a Structured Approach

Always follow a clear, step-by-step process when solving probability problems. Start by calculating individual probabilities, then combine them based on the type of event (e.g., addition for mutually exclusive events, multiplication for independent events). Organizing the problem visually can also help you stay focused and avoid mistakes.
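The three combination rules mentioned above can be sketched with exact fractions. The coin, card, and die scenarios are standard textbook examples chosen for illustration, not taken from a specific test.

```python
from fractions import Fraction

# Independent events: two fair coin flips both landing heads (multiply)
p_heads = Fraction(1, 2)
p_two_heads = p_heads * p_heads

# Dependent events: drawing two aces from a 52-card deck without replacement.
# The second probability is conditional on the first draw being an ace.
p_first_ace = Fraction(4, 52)
p_second_ace_given_first = Fraction(3, 51)
p_two_aces = p_first_ace * p_second_ace_given_first

# Mutually exclusive events: rolling a 1 or a 2 on one fair die (add)
p_one_or_two = Fraction(1, 6) + Fraction(1, 6)

print(p_two_heads, p_two_aces, p_one_or_two)
```

Using `Fraction` keeps the answers exact, which makes it easy to compare them with hand calculations.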

Interpreting Test Results Accurately

Interpreting the results of an analysis is as crucial as the process of gathering and calculating the data. To draw accurate conclusions, it’s essential to carefully evaluate the outcomes and understand their implications. Misinterpretation can lead to incorrect decisions, so a thorough understanding of what the results signify is key.

When reviewing results, consider the following factors to ensure accurate interpretation:

- Context: Always consider the context in which the data was collected. What are the conditions or assumptions underlying the study? This will influence how the results should be interpreted.

- Significance: Check whether the results are statistically significant. A result may show a difference, but if it is not statistically significant, the difference may simply reflect sampling variation.

- Margin of Error: Understand the margin of error, as it indicates the degree of uncertainty in the results. Larger margins of error suggest that conclusions drawn from the data should be approached cautiously.

To enhance your interpretation skills, it’s helpful to organize the data visually, such as with graphs or tables, to make relationships clearer and ensure that you aren’t missing critical patterns or anomalies.

- Double-check calculations to avoid errors.

- Consider alternative explanations or interpretations of the results.

- Consult relevant references to confirm your interpretation aligns with established theories or findings.

Explaining Confidence Intervals in Detail

Understanding the concept of confidence intervals is essential for making reliable inferences about a population based on sample data. A confidence interval provides a range of values within which the true parameter of interest is likely to fall, with a specified level of certainty. This range helps quantify the uncertainty inherent in statistical estimates.

Confidence level refers to the degree of certainty we have that the true parameter lies within the calculated range. For example, a 95% confidence interval suggests that if the sampling process were repeated many times, approximately 95% of the intervals calculated would contain the true population parameter.

Interpreting the interval is crucial: the wider the interval, the less precise the estimate. For a given sample, raising the confidence level widens the interval, and lowering it narrows the interval, so a narrower interval from the same data comes at the cost of lower confidence. A larger sample size is the usual way to narrow the interval without giving up confidence. Balancing precision and confidence is key when using confidence intervals in decision-making.
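The trade-off between confidence level and interval width can be seen numerically. The sketch below assumes a normal approximation (a z-interval) and reuses the illustrative values of mean 50, standard deviation 10, and sample size 30; with a sample this small a t-interval would usually be preferred, so treat the numbers as approximate. The helper name `z_interval` is hypothetical.

```python
from math import sqrt
from statistics import NormalDist

def z_interval(mean, sd, n, confidence):
    """Normal-approximation interval: mean +/- z * sd / sqrt(n)."""
    z = NormalDist().inv_cdf(0.5 + confidence / 2)
    margin = z * sd / sqrt(n)
    return mean - margin, mean + margin

# Same data, three confidence levels
intervals = {level: z_interval(50, 10, 30, level) for level in (0.90, 0.95, 0.99)}
for level, (low, high) in intervals.items():
    print(f"{level:.0%} interval: ({low:.2f}, {high:.2f}), width {high - low:.2f}")
```

The printed widths grow as the confidence level rises, which is the pattern described in the paragraph above.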

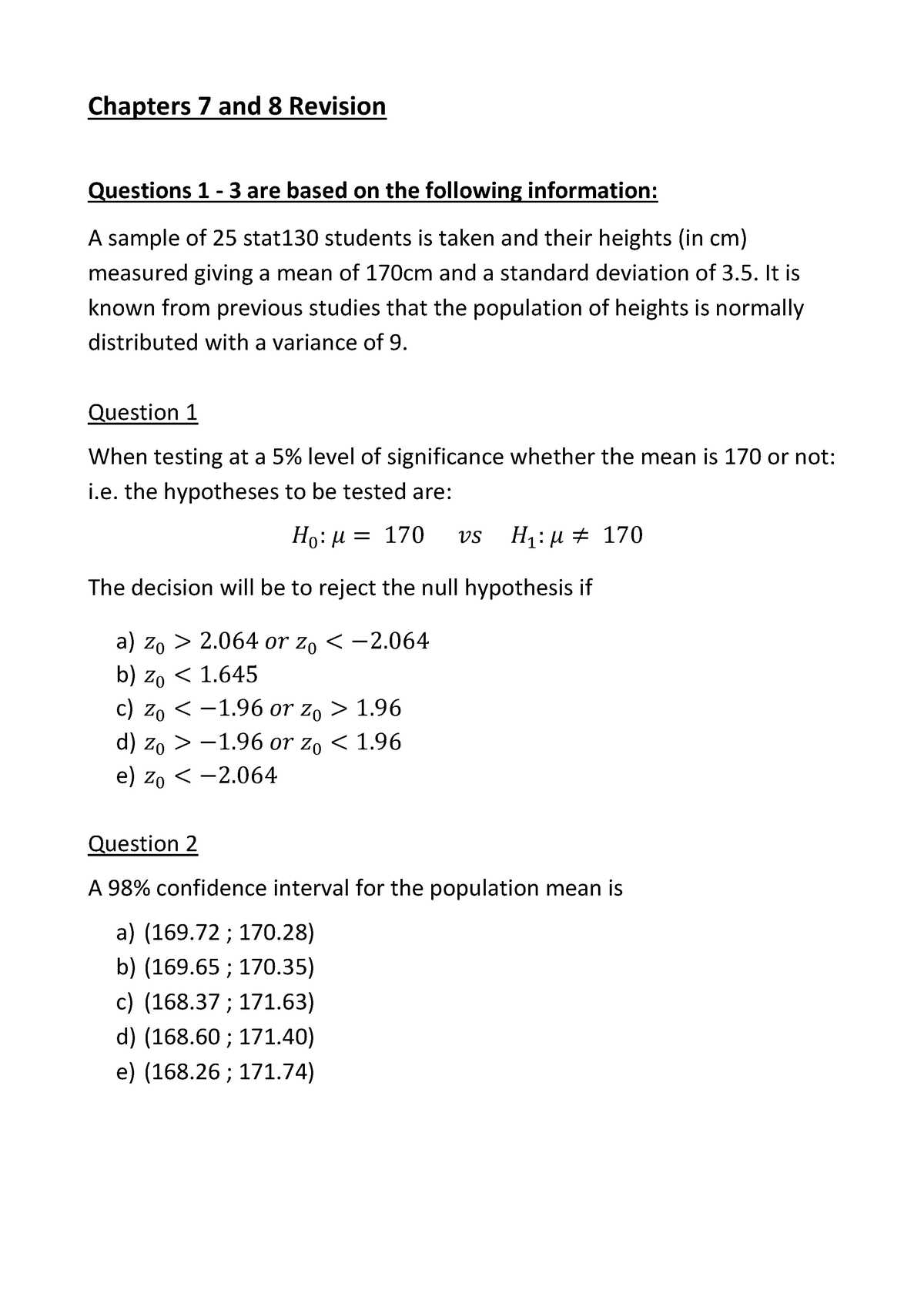

Understanding Hypothesis Testing in Chapter 8

Hypothesis testing is a fundamental technique used to evaluate assumptions or claims about a population based on sample data. It involves making an initial assumption (the null hypothesis) and then determining whether the sample data provide enough evidence against it to favor an alternative hypothesis. This method allows researchers to draw conclusions about a population without examining every individual within it.

In hypothesis testing, the goal is to assess whether the data provide enough evidence to reject the null hypothesis in favor of the alternative hypothesis. The process involves calculating a test statistic, comparing it to a critical value, and determining the probability of observing a test statistic at least as extreme as the one obtained, given that the null hypothesis is true.

| Step | Description |
|---|---|
| Step 1: Define Hypotheses | State the null hypothesis (H₀) and the alternative hypothesis (H₁). |
| Step 2: Select Significance Level | Choose the significance level (α), usually 0.05 or 0.01. |
| Step 3: Compute the Test Statistic | Calculate the statistic based on sample data (e.g., z-score or t-value). |
| Step 4: Compare with Critical Value | Compare the computed test statistic with the critical value from the distribution table. |
| Step 5: Make a Decision | If the test statistic is more extreme than the critical value (for a two-tailed test, if its absolute value exceeds the critical value), reject the null hypothesis; otherwise, fail to reject it. |

By following these steps, researchers can systematically determine whether there is sufficient evidence to support or refute a hypothesis, making it a powerful tool for decision-making in various fields of study.
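The five steps in the table can be sketched as a two-tailed, one-sample z-test. This is a minimal sketch that assumes the population standard deviation is known; the hypothesized mean of 46 and the function name `one_sample_z_test` are invented for illustration.

```python
from math import sqrt
from statistics import NormalDist

def one_sample_z_test(sample_mean, mu0, sigma, n, alpha=0.05):
    """Two-tailed z-test of H0: mu = mu0 against H1: mu != mu0."""
    std_normal = NormalDist()
    # Step 3: test statistic
    z = (sample_mean - mu0) / (sigma / sqrt(n))
    # Step 4: critical value for the chosen significance level
    critical = std_normal.inv_cdf(1 - alpha / 2)
    # Probability of a statistic at least this extreme if H0 is true
    p_value = 2 * (1 - std_normal.cdf(abs(z)))
    # Step 5: decision
    reject = abs(z) > critical
    return z, critical, p_value, reject

# Steps 1 and 2: H0: mu = 46, H1: mu != 46, alpha = 0.05 (hypothetical values)
z, critical, p_value, reject = one_sample_z_test(50, 46, 10, 30)
print(f"z = {z:.2f}, critical = {critical:.2f}, p = {p_value:.4f}, reject H0: {reject}")
```

Comparing `abs(z)` with the critical value and comparing `p_value` with `alpha` always lead to the same decision; they are two views of the same test.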

Examining Key Statistical Formulas

Mathematical formulas are essential tools for solving problems and drawing conclusions based on sample data. These equations allow us to quantify relationships, estimate population parameters, and assess the likelihood of certain outcomes. By understanding these formulas, one can gain a deeper insight into the underlying principles of data analysis and make more informed decisions.

Some of the most commonly used formulas in data analysis include those for calculating averages, variability, and probabilities. These calculations provide a foundation for more complex analyses, allowing researchers to interpret data in a structured and logical way. Below are some of the fundamental formulas that form the basis of statistical analysis:

| Formula | Description |
|---|---|
| Mean (μ) = ΣX / N | Used to calculate the average of a dataset, where ΣX is the sum of all values, and N is the total number of observations. |
| Standard Deviation (σ) = √(Σ(X − μ)² / N) | Measures the dispersion or spread of data around the mean, showing how much individual values typically deviate from the average. This is the population form; for a sample, divide by n − 1 instead of N. |
| Variance (σ²) = Σ(X − μ)² / N | Represents the average squared deviation from the mean, giving insight into the extent of variability in a dataset. This is the population form; for a sample, divide by n − 1 instead of N. |
| P(A) = Favorable outcomes / Total outcomes | Calculates the probability of an event occurring by dividing the number of favorable outcomes by the total number of possible outcomes, assuming all outcomes are equally likely. |

These formulas provide the foundation for much of the data analysis performed in various fields. A solid understanding of how to apply and interpret them is crucial for anyone involved in quantitative research or decision-making processes.
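To see the formulas in action, the sketch below applies them by hand to a small made-up dataset and cross-checks the results against Python's `statistics` module. The data values and the die-roll event are illustrative only.

```python
from fractions import Fraction
from math import sqrt
from statistics import pstdev, pvariance, variance

data = [2, 4, 4, 4, 5, 5, 7, 9]  # hypothetical observations
N = len(data)

# Mean: sum of values divided by the number of observations
mu = sum(data) / N

# Population variance and standard deviation (divide by N)
pop_var = sum((x - mu) ** 2 for x in data) / N
pop_sd = sqrt(pop_var)

# Sample variance divides by N - 1 instead
sample_var = sum((x - mu) ** 2 for x in data) / (N - 1)

# Classical probability: an even number on one roll of a fair die
p_even = Fraction(3, 6)

print(mu, pop_var, pop_sd, round(sample_var, 3), p_even)

# Cross-check against the standard library
assert abs(pop_var - pvariance(data)) < 1e-12
assert abs(pop_sd - pstdev(data)) < 1e-12
assert abs(sample_var - variance(data)) < 1e-12
```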

Strategies for Time Management During Tests

Effective time management is crucial when faced with any form of evaluation, as it ensures that all questions are addressed thoroughly while avoiding unnecessary stress. The ability to prioritize tasks, allocate appropriate time to each section, and stay focused throughout the process can significantly improve performance. Strategic planning and disciplined execution are key to navigating time constraints efficiently.

To maximize your time during an evaluation, consider these strategies:

- Preview the Entire Exam: Before starting, quickly glance through the entire set of questions to get an overview. This allows you to allocate time wisely, deciding which sections to spend more time on and which to answer quickly.

- Allocate Time per Section: Based on the difficulty and length of the sections, set a time limit for each one. Stick to this schedule to avoid spending too much time on any one part.

- Start with Easier Questions: Tackle the questions you feel most confident about first. This boosts your confidence and ensures you collect the easier points early on.

- Don’t Dwell on Stuck Questions: If you encounter a challenging question, move on and return to it later. Spending too much time on one question can waste valuable minutes.

- Keep Track of Time: Regularly check the clock to ensure you’re staying on pace. Consider setting small time checkpoints to help you stay aligned with your plan.

By managing time effectively, you can reduce anxiety, increase efficiency, and ensure that every aspect of the evaluation is completed within the allotted time frame.

How to Review Your Test Answers

Reviewing your work before submitting is an essential step in any evaluation. It offers an opportunity to double-check your calculations, ensure clarity in your responses, and correct any errors you may have missed in the initial pass. The key to an effective review process is being systematic and focused on potential mistakes, rather than rushing through the material again.

Follow these steps to review your responses thoroughly and efficiently:

| Step | Action |
|---|---|
| Step 1: Check for Missed Questions | Ensure every question has been answered. If you skipped any, make sure to complete them before moving forward. |
| Step 2: Verify Key Calculations | Go over any calculations or numerical responses. Double-check your math to ensure accuracy and that you’ve applied the right formulas. |
| Step 3: Look for Inconsistencies | Review your answers for any contradictions or inconsistent responses. If your answers don’t align with each other, reassess your reasoning. |
| Step 4: Review the Clarity of Written Responses | Ensure that your written answers are clear and well-organized. Make sure you’ve fully answered the question and provided the necessary details. |
| Step 5: Manage Your Time Wisely | Allocate enough time to review each section. Don’t rush through the process; take the time to evaluate each answer carefully. |

By following a structured review process, you can catch errors, clarify any misunderstandings, and enhance the overall quality of your responses before final submission.

Improving Your Statistical Analysis Skills

Developing strong analytical abilities is essential for interpreting data accurately and making informed decisions. The process of examining data and drawing meaningful conclusions requires a combination of critical thinking, familiarity with key methods, and practical experience. With consistent effort and a structured approach, anyone can improve their proficiency in data analysis.

Key Strategies for Enhancement

- Master the Fundamentals: Understand the core concepts and techniques used in analysis. This includes knowledge of averages, distributions, correlations, and other essential tools that form the basis of deeper analysis.

- Practice Regularly: Consistent practice with real datasets will help reinforce theoretical knowledge. Look for opportunities to work with different types of data and refine your problem-solving skills.

- Seek Feedback: Collaborate with peers or mentors to get feedback on your approaches and solutions. Constructive criticism can help identify areas for improvement and deepen your understanding.

- Learn New Tools: Familiarize yourself with advanced software tools and techniques used in data analysis, such as programming languages, statistical packages, or data visualization platforms.

Improvement Through Application

Applying your analytical skills to real-world problems is one of the most effective ways to improve. Whether it’s working on case studies, solving practical problems, or analyzing historical data, hands-on experience will allow you to hone your abilities and gain confidence. Moreover, continuous learning and staying updated on new methods and trends will keep you ahead in the field of analysis.

By following these strategies and consistently applying yourself to the learning process, you’ll find that your skills in analyzing data will steadily improve, empowering you to make better decisions and draw accurate conclusions in any scenario.

Using Graphs and Charts in Tests

Visual representations of data, such as graphs and charts, are powerful tools for conveying information clearly and efficiently. In many evaluations, these visuals can be used to simplify complex data sets, highlight key trends, and support analytical conclusions. The ability to interpret and create these visuals is a critical skill for presenting data effectively and understanding underlying patterns.

Advantages of Visual Data Representation

- Clarity: Graphs and charts help present large amounts of data in a more digestible format, making it easier to identify relationships and trends at a glance.

- Insightful Comparisons: Visuals allow for quick comparisons between different data points or categories, helping to highlight significant differences or similarities.

- Improved Recall: Visual learners can retain information more effectively when data is presented graphically, making it easier to remember key points during the evaluation process.

How to Use Graphs and Charts Effectively

When using graphs and charts during an evaluation, it is important to focus on clarity and relevance. Choose the right type of graph (a bar chart, line graph, pie chart, or histogram) based on the kind of data you’re working with. Ensure that all axes, labels, and legends are clearly defined and that the data is presented in a straightforward manner.

Additionally, when interpreting graphs and charts, take time to analyze the key patterns, correlations, and trends. Being able to draw conclusions from visuals can significantly improve your ability to answer questions related to the data and make informed judgments.

Understanding Statistical Significance

In the context of data analysis, a result is statistically significant when it would be unlikely to occur by random chance alone if there were no real effect. Significance helps to determine whether findings reflect a genuine pattern rather than sampling noise. When results are statistically significant, there is enough evidence to reject the assumption of no effect, although this does not prove that the claim or hypothesis is true.

How to Interpret Statistical Significance

Statistical significance is commonly assessed through the p-value, which measures the probability of obtaining results as extreme as, or more extreme than, those observed, under the assumption that there is no actual effect. A smaller p-value (typically less than 0.05) suggests that the results are statistically significant and unlikely to have occurred by chance. However, it is essential to consider the context, sample size, and research design before making final conclusions.

Common Pitfalls to Avoid

While statistical significance is an important tool in data analysis, it is not infallible. A significant result does not necessarily mean that the effect is large or practically important. Furthermore, relying solely on statistical significance without considering the real-world implications or the effect size can lead to misinterpretations. It’s crucial to combine statistical significance with other factors like context, experimental design, and real-world applicability when drawing conclusions.
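The gap between statistical and practical significance can be made concrete. In the sketch below, the same small difference (0.5 units against a standard deviation of 10) is tested at two sample sizes using a two-sided z-test; all numbers and the helper name `two_sided_p` are assumptions chosen for illustration.

```python
from math import sqrt
from statistics import NormalDist

def two_sided_p(diff, sd, n):
    """Two-sided p-value for an observed mean difference under a z-test."""
    z = diff / (sd / sqrt(n))
    return 2 * (1 - NormalDist().cdf(abs(z)))

diff, sd = 0.5, 10.0          # hypothetical observed difference and SD
effect_size = diff / sd       # standardized effect (Cohen's d style)

p_small_sample = two_sided_p(diff, sd, n=30)
p_large_sample = two_sided_p(diff, sd, n=10_000)

print(f"effect size = {effect_size:.2f}")
print(f"n = 30:     p = {p_small_sample:.3f}")
print(f"n = 10000:  p = {p_large_sample:.2e}")
```

The effect size is identical in both cases, yet only the large sample produces a significant p-value. That is why the p-value alone says little about whether an effect matters in practice.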

Real-World Applications of Statistics

Understanding and applying data analysis techniques is essential in a wide variety of fields. These methods allow professionals to make informed decisions based on empirical evidence, rather than assumptions or guesswork. From business to healthcare, the power of data-driven insights is transforming industries and improving outcomes.

Key Areas of Application

Here are some of the most impactful fields where data analysis plays a crucial role:

- Healthcare: Statistical analysis helps medical professionals evaluate treatment effectiveness, predict disease outbreaks, and optimize patient care strategies.

- Business and Marketing: Companies rely on customer behavior data to optimize products, tailor marketing strategies, and improve customer satisfaction.

- Public Policy: Governments use data to analyze social trends, assess policy effectiveness, and make decisions that impact public health, education, and the economy.

- Sports Analytics: Teams and coaches use performance data to improve player training, strategy, and game performance.

- Environmental Studies: Data analysis helps scientists monitor climate change, track pollution, and develop sustainable practices for natural resource management.

Improving Decision-Making

By using quantitative methods, professionals can move beyond intuition to make more accurate predictions and develop better solutions. This scientific approach allows organizations to optimize operations, mitigate risks, and improve outcomes across diverse sectors. Whether making product development decisions, analyzing market trends, or evaluating public health initiatives, the application of data analysis enhances the precision of decision-making and fosters more informed choices.

Preparing for Advanced Statistical Tests

To effectively approach complex data analysis techniques, it is crucial to have a solid understanding of foundational concepts and methods. Advanced procedures often require a deeper knowledge of assumptions, model fitting, and interpreting results. Preparation involves not only grasping these advanced tools but also applying them in real-world scenarios to ensure accurate insights.

Steps for Effective Preparation

Here are key strategies to help prepare for more sophisticated methods of data analysis:

- Master Basic Concepts: Before diving into advanced techniques, ensure a strong grasp of essential concepts like probability distributions, correlation, and regression analysis.

- Understand Assumptions: Many advanced methods rely on specific assumptions about data. Familiarize yourself with these assumptions (e.g., normality, independence) to avoid misapplying methods.

- Practice with Real-World Data: Hands-on experience with datasets allows you to refine skills and understand the nuances of each technique in practical applications.

- Learn Data Cleaning Techniques: Properly preparing data for analysis is key to ensuring reliable results. This includes handling missing values, outliers, and ensuring consistency across datasets.

- Review Statistical Software: Proficiency with tools like R, Python, or SPSS is essential for efficiently implementing complex models and performing detailed analysis.
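As one possible take on the data cleaning step above, the sketch below drops missing values and then flags outliers with the common 1.5 × IQR rule. The readings are invented, and the IQR rule is only one of several conventions; it is used here as an assumption, not as the required method. `statistics.quantiles` needs Python 3.8 or later.

```python
from statistics import quantiles

raw = [10, 12, 11, 13, None, 12, 95, 11]  # hypothetical readings, None = missing

# Step 1: drop missing values
cleaned = [x for x in raw if x is not None]

# Step 2: quartiles and interquartile range
q1, _, q3 = quantiles(cleaned, n=4)
iqr = q3 - q1

# Step 3: keep values inside the 1.5 * IQR fences, flag the rest
lower, upper = q1 - 1.5 * iqr, q3 + 1.5 * iqr
kept = [x for x in cleaned if lower <= x <= upper]
outliers = [x for x in cleaned if x < lower or x > upper]

print(f"fences: ({lower}, {upper}), kept: {kept}, outliers: {outliers}")
```

Flagged values deserve a closer look before they are removed, since an extreme reading can be a recording error or a genuine observation.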

Key Areas to Focus On

While preparing for advanced data analysis, there are specific areas that require attention:

- Multivariate Analysis: Techniques like MANOVA and factor analysis are used when dealing with multiple variables simultaneously. Understanding how to interpret these analyses is crucial for deriving meaningful conclusions.

- Hypothesis Testing: A deep understanding of hypothesis formulation, p-values, and statistical power is essential when performing tests like t-tests, chi-square tests, or ANOVA.

- Model Evaluation: Advanced analysis often involves constructing predictive models. Learn how to evaluate model performance using metrics like R-squared, AUC, and cross-validation.
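For the model evaluation point, R-squared can be computed by hand for a simple least-squares line. The sketch below uses a tiny made-up dataset and hypothetical helper names (`fit_line`, `r_squared`); it evaluates the fit on the same data used for training, so it does not replace cross-validation.

```python
from statistics import fmean

def fit_line(xs, ys):
    """Ordinary least-squares slope and intercept for one predictor."""
    mx, my = fmean(xs), fmean(ys)
    sxx = sum((x - mx) ** 2 for x in xs)
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    slope = sxy / sxx
    return slope, my - slope * mx

def r_squared(xs, ys, slope, intercept):
    """Share of the variance in ys explained by the fitted line."""
    my = fmean(ys)
    ss_res = sum((y - (intercept + slope * x)) ** 2 for x, y in zip(xs, ys))
    ss_tot = sum((y - my) ** 2 for y in ys)
    return 1 - ss_res / ss_tot

xs = [1, 2, 3, 4, 5]   # hypothetical predictor values
ys = [2, 4, 5, 4, 5]   # hypothetical responses

slope, intercept = fit_line(xs, ys)
r2 = r_squared(xs, ys, slope, intercept)
print(f"slope = {slope:.2f}, intercept = {intercept:.2f}, R^2 = {r2:.2f}")
```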

By following these guidelines and ensuring a thorough understanding of underlying principles, you’ll be well-prepared to tackle more advanced analytical challenges and provide meaningful insights from complex data.