Modern information systems play a pivotal role in organizing, storing, and retrieving vital information across various domains. Understanding their core principles is crucial for effectively managing information in today’s digital landscape.

This article delves into key aspects of data frameworks, providing detailed insights to help both beginners and experts enhance their knowledge. From structural design to operational efficiency, every concept is tailored for clarity and usability.

Whether you’re optimizing existing structures or learning about efficient storage strategies, this guide offers practical tips and thorough explanations. Explore innovative solutions and deepen your understanding of how information systems work in diverse environments.

Understanding Data Systems Concepts and Structures

Efficiently managing and organizing information relies on foundational principles that govern the storage, accessibility, and consistency of data. These concepts form the backbone of tools and frameworks used to handle vast amounts of information across industries.

Core elements include methods for structuring data to ensure logical organization, enabling seamless navigation and retrieval. Approaches such as grouping related elements and establishing connections between them play a significant role in maintaining system efficiency and reliability.

Furthermore, understanding the distinctions between various organizational models allows for selecting the most appropriate solution for specific needs. Each model offers unique strengths, making it essential to evaluate their capabilities based on factors like scalability, speed, and complexity.

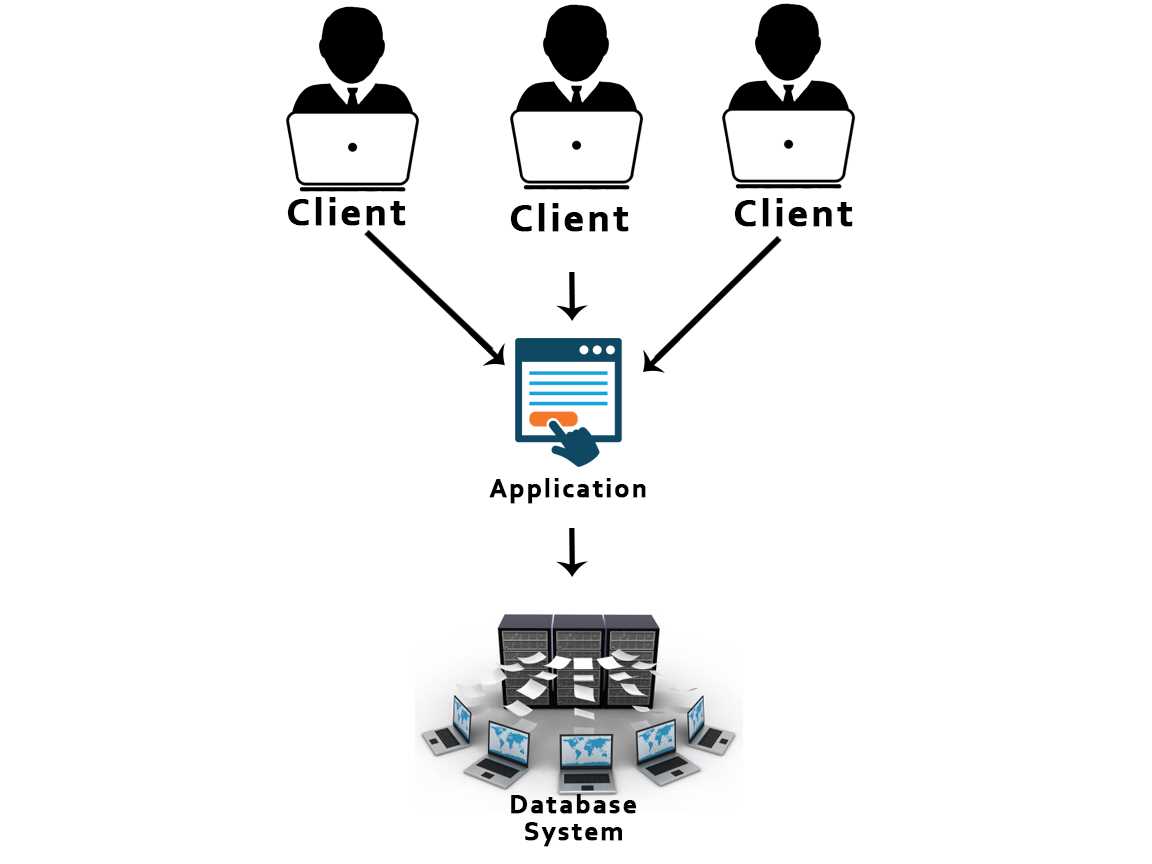

How Information Systems Store and Manage Data

Information management frameworks are designed to handle large volumes of structured and unstructured data. They rely on organized methods to ensure efficiency, security, and quick access to information when needed.

Storage systems operate by arranging data into logical formats that promote ease of use. Common methods include grouping related data points and indexing them for rapid retrieval. These systems also implement techniques to maintain accuracy and prevent conflicts during simultaneous operations.

- Data is often divided into smaller segments to streamline storage and reduce redundancy.

- Indexes are created to enable faster searches, minimizing the time required to locate specific information.

- Structured relationships are established to connect different sets of information logically.

Management techniques focus on maintaining consistency and integrity while supporting multiple users. These include mechanisms for handling updates, preventing unauthorized access, and ensuring reliable backups in case of failures.

- Transaction systems enforce rules to keep information consistent during modifications.

- Security measures, such as authentication and encryption, protect sensitive data.

- Regular backup routines safeguard against data loss caused by unexpected events.

By combining these strategies, information systems provide robust solutions for organizing, storing, and managing complex datasets efficiently.

Choosing the Right Information Storage Type

Selecting the appropriate storage system is a critical decision that impacts both performance and scalability. Different storage models are optimized for various use cases, each offering unique advantages based on the nature of the data and the requirements of the application.

When evaluating potential options, consider factors such as data structure, access speed, and system complexity. The right choice will balance flexibility, speed, and resource utilization while addressing specific business or technical needs.

- Relational models are well-suited for highly structured data, where relationships between entities are critical for organizing information.

- Document-based systems are better for semi-structured data, offering the flexibility to store records with varying fields in formats such as JSON.

- Key-value stores provide high-speed access to simple data structures, ideal for applications requiring fast reads and writes.

It’s important to match the system’s capabilities with project demands, including anticipated traffic volume and data consistency requirements. Some storage solutions are designed to excel at horizontal scaling, while others are better for vertical scaling or complex transactions.

- Consider future scalability to ensure the system can handle growing datasets.

- Evaluate speed requirements to choose an architecture that minimizes delays in accessing critical information.

- Assess consistency needs to determine whether eventual consistency or strict data integrity is required.

By thoroughly understanding these factors, it’s possible to choose the right system that will support long-term goals while optimizing efficiency and performance.

Key Differences Between SQL and NoSQL

Choosing between structured and flexible data models depends on the requirements of the system being developed. The two primary approaches offer distinct methods for handling data, each with its own set of strengths and trade-offs based on the nature of the information and the intended application.

Systems based on structured models rely on predefined schemas and tables, making them ideal for complex relationships and ensuring data consistency. In contrast, flexible models allow for dynamic data structures, providing more freedom in how data is stored, but may lack the strict consistency of traditional systems.

- Structured Models (SQL) require a fixed schema where tables are defined, and relationships between entities are explicitly defined using keys.

- Flexible Models (NoSQL) allow for unstructured or semi-structured data, accommodating various formats like key-value pairs, documents, or graphs.

- Scalability: Structured models have traditionally scaled vertically, whereas many flexible systems are designed to scale horizontally, which suits large-scale distributed applications. Many relational systems also support replication and partitioning.

- Consistency: Structured models emphasize strong consistency and ACID (Atomicity, Consistency, Isolation, Durability) properties, whereas flexible models often provide eventual consistency for better availability.
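The schema difference described above can be illustrated with a minimal sketch. It assumes Python's built-in `sqlite3` module as a stand-in for a structured system and plain dictionaries serialized to JSON as a stand-in for a document store; the `users` table and the sample documents are hypothetical.

```python
import json
import sqlite3

# Structured model: the schema is declared up front and enforced on write.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE users (id INTEGER PRIMARY KEY, name TEXT NOT NULL)")
conn.execute("INSERT INTO users (id, name) VALUES (?, ?)", (1, "Ada"))

try:
    # This row omits the required "name" column, so the insert is rejected.
    conn.execute("INSERT INTO users (id) VALUES (?)", (2,))
    schema_enforced = False
except sqlite3.IntegrityError:
    schema_enforced = True

# Flexible model: each document carries its own shape (hypothetical data).
documents = [
    {"_id": 1, "name": "Ada"},
    {"_id": 2, "name": "Grace", "tags": ["admin"], "profile": {"city": "NYC"}},
]
serialized = [json.dumps(doc) for doc in documents]
```

The structured side rejects malformed rows at write time, while the flexible side accepts any shape and leaves validation to the application.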

Ultimately, the choice between these two approaches depends on the specific use case. If your application needs fast, complex queries with reliable consistency, structured models are preferable. However, for handling large amounts of unstructured data or real-time applications with rapid growth, flexible systems may be a more suitable solution.

Common Data Management Challenges and Solutions

Handling large volumes of information comes with its own set of difficulties, ranging from ensuring fast access to maintaining consistency. Overcoming these challenges requires both a strong understanding of the underlying systems and the implementation of effective strategies for optimization and troubleshooting.

Performance Bottlenecks

As data grows, performance can degrade due to slow retrieval times or inefficient queries. One common cause is missing or poorly chosen indexes, which force the system to scan many records to locate a specific one.

- Implementing proper indexing and optimizing queries can significantly enhance performance.

- Using caching mechanisms reduces the need for frequent data retrieval, improving response times.
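As a rough illustration of the caching point, the sketch below wraps a stand-in for an expensive lookup with `functools.lru_cache` from the Python standard library. The `slow_lookup` function and its return value are hypothetical placeholders for a real query.

```python
from functools import lru_cache

call_count = 0  # counts how often the expensive path actually runs

def slow_lookup(user_id):
    """Hypothetical stand-in for an expensive query against the data store."""
    global call_count
    call_count += 1
    return ("user", user_id)

@lru_cache(maxsize=128)
def cached_lookup(user_id):
    # Repeated calls with the same argument are served from memory.
    return slow_lookup(user_id)

cached_lookup(7)
cached_lookup(7)  # cache hit: slow_lookup is not called again
cached_lookup(8)
```

A cache like this trades freshness for speed, so entries need to be invalidated or expired when the underlying data changes.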

Data Integrity and Consistency

Ensuring that data remains accurate and reliable is crucial, especially when multiple users are accessing and modifying information simultaneously. Conflicts can arise if proper control mechanisms are not in place.

- Implementing strict transactional controls (such as ACID properties) helps maintain consistency during updates.

- Using conflict resolution strategies, like versioning or optimistic concurrency control, can help manage simultaneous changes effectively.
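Optimistic concurrency control is commonly implemented with a version column. The following is a minimal sketch using Python's `sqlite3` module; the `accounts` table, its columns, and the `update_balance` helper are assumptions made for illustration.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE accounts (id INTEGER PRIMARY KEY, balance INTEGER, version INTEGER)"
)
conn.execute("INSERT INTO accounts VALUES (1, 100, 1)")
conn.commit()

def update_balance(conn, account_id, new_balance, expected_version):
    """Apply the update only if the row still has the version we read."""
    cur = conn.execute(
        "UPDATE accounts SET balance = ?, version = version + 1 "
        "WHERE id = ? AND version = ?",
        (new_balance, account_id, expected_version),
    )
    conn.commit()
    return cur.rowcount == 1  # zero rows means someone else changed it first

first = update_balance(conn, 1, 150, expected_version=1)   # applied
second = update_balance(conn, 1, 90, expected_version=1)   # stale version, rejected
```

A caller whose update is rejected would typically re-read the row, reapply its change, and try again.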

By addressing these common issues through well-designed structures and strategies, organizations can improve the efficiency, reliability, and scalability of their systems, ensuring smooth operation even as data demands increase.

Exploring Relational Data Management Systems

Relational systems offer a structured approach to organizing and accessing information, focusing on the logical relationships between different data entities. By utilizing tables and predefined schemas, these systems provide consistency and efficiency for complex queries and transactions.

The key feature of relational systems is the use of structured tables where data is organized into rows and columns. Each table has a primary key to uniquely identify records, while foreign keys establish relationships between different sets of data.

- Data Integrity: Enforces consistency through constraints such as uniqueness, referential integrity, and domain constraints.

- Structured Query Language (SQL): Allows users to query, manipulate, and manage information with standardized commands, making it easier to work with large datasets.

- Normalization: Organizes data to reduce redundancy and ensure data integrity, making it easier to manage large amounts of information.
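The constraints listed above can be sketched with Python's `sqlite3` module. The `customers` and `orders` tables and the `rejected` helper are hypothetical; note that SQLite only enforces foreign keys when the `foreign_keys` pragma is enabled.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("PRAGMA foreign_keys = ON")  # SQLite leaves foreign keys off by default
conn.execute("CREATE TABLE customers (id INTEGER PRIMARY KEY, email TEXT UNIQUE NOT NULL)")
conn.execute("""
    CREATE TABLE orders (
        id INTEGER PRIMARY KEY,
        customer_id INTEGER NOT NULL REFERENCES customers(id),
        total_cents INTEGER CHECK (total_cents >= 0)
    )
""")
conn.execute("INSERT INTO customers (id, email) VALUES (1, 'a@example.com')")
conn.execute("INSERT INTO orders (customer_id, total_cents) VALUES (1, 2500)")

def rejected(sql):
    """Return True if the statement violates a constraint."""
    try:
        conn.execute(sql)
        return False
    except sqlite3.IntegrityError:
        return True

# Referential integrity: no order may point at a missing customer.
orphan_rejected = rejected("INSERT INTO orders (customer_id, total_cents) VALUES (99, 100)")
# Uniqueness: two customers cannot share an email.
duplicate_rejected = rejected("INSERT INTO customers (id, email) VALUES (2, 'a@example.com')")
# Domain constraint: totals cannot be negative.
negative_rejected = rejected("INSERT INTO orders (customer_id, total_cents) VALUES (1, -5)")
```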

While relational systems offer clear advantages in data consistency and management, they may face challenges when dealing with massive, unstructured data or applications requiring high scalability. However, for many businesses and applications, these systems remain the foundation for managing structured data efficiently.

- A standardized query language supports reliable, expressive data retrieval.

- Explicit relationships between entities make data easier to understand and analyze.

- Well suited to transactional systems where data accuracy is critical.

Optimizing Queries for Better Performance

Improving the efficiency of data retrieval processes is essential for maintaining smooth system performance, especially as data grows in volume. Query optimization focuses on refining search and retrieval operations to reduce processing time and ensure faster response times.

Effective optimization requires identifying and addressing bottlenecks that slow down queries. This can involve adjusting how data is accessed, indexed, or retrieved, ensuring that operations are as efficient as possible.

- Indexing: Properly indexing frequently queried columns helps speed up search operations, making data retrieval faster.

- Query Refactoring: Simplifying or restructuring queries can often reduce their complexity, resulting in quicker execution times.

- Limit Data Retrieval: Fetch only the necessary data by using filters and limiting result sets, preventing unnecessary load on the system.

In addition to these techniques, regular performance monitoring can help pinpoint slow operations, allowing for further adjustments and fine-tuning. By implementing these strategies, applications can significantly reduce latency, improving overall performance and user experience.

- Monitor query execution plans to identify slow operations.

- Use caching to store frequently accessed data, reducing the need to query repeatedly.

- Break down complex queries into smaller, more manageable operations.
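To make the execution-plan advice concrete, here is a small sketch that uses SQLite's `EXPLAIN QUERY PLAN` through Python's `sqlite3` module. The `events` table, the index name, and the `plan` helper are hypothetical, and the exact plan wording varies between SQLite versions.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE events (id INTEGER PRIMARY KEY, user_id INTEGER, payload TEXT)")
conn.executemany(
    "INSERT INTO events (user_id, payload) VALUES (?, ?)",
    [(i % 100, "x") for i in range(1000)],
)

# Fetch only the needed column and cap the result set.
query = "SELECT id FROM events WHERE user_id = ? LIMIT 10"

def plan(sql):
    """Return the planner's description of how it would run the query."""
    rows = conn.execute("EXPLAIN QUERY PLAN " + sql, (42,)).fetchall()
    return " | ".join(row[-1] for row in rows)

plan_before = plan(query)  # without an index the table is scanned
conn.execute("CREATE INDEX idx_events_user ON events (user_id)")
plan_after = plan(query)   # with the index the planner searches it instead
```

Comparing `plan_before` and `plan_after` shows whether a new index is actually picked up by the query planner.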

Best Practices for Data Security

Ensuring the safety and confidentiality of sensitive information is a critical aspect of managing any information storage system. Implementing robust security measures helps prevent unauthorized access, data breaches, and potential loss of critical data. Following proven security strategies is essential for safeguarding data from external and internal threats.

There are several best practices that organizations can follow to strengthen their data security posture. These practices cover a wide range of aspects, from access control to encryption, and should be part of an overall comprehensive security strategy.

| Security Practice | Description |
|---|---|
| Access Control | Limit access to sensitive data to authorized users only. Implement role-based access control (RBAC) to ensure that users have the minimum privileges required to perform their tasks. |
| Data Encryption | Encrypt data both at rest and in transit to protect it from being intercepted or accessed by unauthorized parties. This ensures that even if data is compromised, it remains unreadable without the proper keys. |
| Regular Audits | Conduct regular security audits to identify vulnerabilities in the system. This includes reviewing access logs, monitoring for suspicious activity, and checking for any gaps in security measures. |
| Backup and Recovery | Ensure that there are frequent backups of critical information. Develop a reliable recovery plan to restore data in case of breaches, system failures, or disasters. |
| Software Patching | Regularly update and patch software to address known security vulnerabilities. Outdated systems are more susceptible to attacks, making timely patches an essential part of maintaining security. |
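Role-based access control can be reduced to a lookup from roles to permitted actions. The sketch below is a deliberately simplified illustration; the role names, actions, and the `is_allowed` helper are hypothetical, and real systems usually delegate this to the data store or an identity provider.

```python
# Hypothetical roles mapped to the minimum actions each one needs.
ROLE_PERMISSIONS = {
    "viewer": {"read"},
    "editor": {"read", "write"},
    "admin": {"read", "write", "delete", "grant"},
}

def is_allowed(role, action):
    """Deny by default: unknown roles and unlisted actions get no access."""
    return action in ROLE_PERMISSIONS.get(role, set())
```

For example, `is_allowed("viewer", "write")` is false, which reflects the least-privilege principle described in the table.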

By integrating these best practices into a comprehensive security plan, organizations can significantly reduce the risk of unauthorized access and protect their valuable information assets from a wide range of security threats.

Scaling Data Systems for Growing Applications

As applications evolve and their user bases expand, the need to scale information storage systems becomes increasingly critical. Managing larger volumes of data without compromising performance or reliability is key to sustaining growth and ensuring seamless user experiences. Scaling is about finding ways to maintain system efficiency while accommodating growing demands.

Effective scaling requires a strategic approach to both horizontal and vertical expansion. Whether adding more resources to an existing setup or distributing workloads across multiple systems, careful planning is needed to ensure smooth operation as the system scales up.

Vertical Scaling

Vertical scaling involves enhancing the performance of existing infrastructure by upgrading hardware components, such as increasing CPU power, adding more memory, or expanding storage. This approach is often easier to implement but has limitations in terms of how much a single system can handle.

- Advantages: Simple to implement, minimal changes to system architecture.

- Disadvantages: Limited by hardware capacity, may eventually become costly and inefficient for very large applications.

Horizontal Scaling

Horizontal scaling, on the other hand, involves distributing data and workloads across multiple systems, allowing for a more scalable and flexible infrastructure. This approach can better handle large-scale applications, ensuring high availability and improved fault tolerance.

- Advantages: Can handle a larger volume of data, increases redundancy and fault tolerance, and can be more cost-effective for very large applications.

- Disadvantages: More complex to implement and manage, requires careful data partitioning and load balancing.
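The data partitioning mentioned above is often done by hashing a key to pick a node. The following is a minimal sketch of hash-based sharding; the shard names and the `shard_for` helper are hypothetical, and production systems often prefer consistent hashing so that adding a node moves fewer keys.

```python
import hashlib

SHARDS = ["shard-0", "shard-1", "shard-2"]  # hypothetical node names

def shard_for(key, shards=SHARDS):
    """Map a key to a shard using a hash that is stable across runs."""
    digest = hashlib.sha256(key.encode("utf-8")).digest()
    index = int.from_bytes(digest[:8], "big") % len(shards)
    return shards[index]

# Distribute 1000 hypothetical user keys across the shards.
placement = {}
for user in (f"user-{i}" for i in range(1000)):
    placement.setdefault(shard_for(user), []).append(user)
```

With simple modulo placement, changing the number of shards remaps most keys, which is one reason horizontal scaling takes careful planning.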

Choosing the right scaling strategy depends on the specific needs and growth projections of the application. In many cases, a hybrid approach that combines both vertical and horizontal scaling can provide the best balance between performance and cost-efficiency.

Backup Strategies to Protect Your Data

In today’s digital world, safeguarding critical information from unexpected loss or corruption is essential. Having a solid backup plan ensures that, in the event of a system failure, human error, or cyberattack, your data can be restored quickly and accurately. An effective backup strategy is not just about storing copies of data but doing so in a way that is reliable, accessible, and secure.

There are several approaches to backing up data, each with its own strengths depending on the size of the data, frequency of changes, and the level of protection required. A combination of these methods often offers the best results.

- Full Backups: A complete copy of all data, typically performed at regular intervals. While comprehensive, it can be time-consuming and requires substantial storage space.

- Incremental Backups: Only the data that has changed since the last backup of any kind is stored. This method saves time and space but can take longer to restore, because the last full backup and every subsequent incremental backup must be applied in order.

- Differential Backups: Similar to incremental backups, but this method stores all changes made since the last full backup. It strikes a balance between speed and restoration time.
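The difference between the three backup types comes down to which cutoff time is used when selecting changed data. Here is a small sketch under simplified assumptions: the `files_to_back_up` helper is hypothetical, and timestamps are plain integers rather than real file metadata.

```python
def files_to_back_up(mtimes, kind, last_full, last_backup):
    """Select files for a backup run.

    mtimes: mapping of path -> last-modified timestamp
    last_full: time of the most recent full backup
    last_backup: time of the most recent backup of any kind
    """
    if kind == "full":
        return sorted(mtimes)
    if kind == "incremental":
        cutoff = last_backup  # changes since the previous backup of any kind
    elif kind == "differential":
        cutoff = last_full    # changes since the previous full backup
    else:
        raise ValueError(f"unknown backup kind: {kind}")
    return sorted(path for path, mtime in mtimes.items() if mtime > cutoff)

mtimes = {"a.db": 5, "b.db": 12, "c.db": 25}
# Scenario: full backup at t=10, incremental at t=20, next run at t=30.
full = files_to_back_up(mtimes, "full", last_full=10, last_backup=20)
incremental = files_to_back_up(mtimes, "incremental", last_full=10, last_backup=20)
differential = files_to_back_up(mtimes, "differential", last_full=10, last_backup=20)
```

In this scenario the incremental run copies only `c.db`, while the differential run copies both `b.db` and `c.db`.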

Another important consideration is where and how data is stored. Backup locations can include:

- On-Site Storage: Storing backups on local servers or external drives offers fast access but can be vulnerable to physical damage or theft.

- Off-Site Storage: Using cloud-based storage or remote data centers provides added protection in case of local disasters but may involve slower retrieval times.

Regularly testing your backup strategy is just as important as creating it. Routine tests ensure that data can be restored quickly and correctly, and that the backup process is working as expected. This helps avoid unpleasant surprises when disaster strikes.
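A restore test can be as simple as copying the data, checking its integrity, and comparing it with the source. The sketch below uses `Connection.backup` from Python's `sqlite3` module with in-memory databases; the `notes` table and the `snapshot` helper are hypothetical, and a real test would restore from the actual backup medium.

```python
import sqlite3

source = sqlite3.connect(":memory:")
source.execute("CREATE TABLE notes (id INTEGER PRIMARY KEY, body TEXT)")
source.executemany("INSERT INTO notes (body) VALUES (?)", [("first",), ("second",)])
source.commit()

# Copy the whole database into a second connection.
backup = sqlite3.connect(":memory:")
source.backup(backup)

def snapshot(conn):
    """Read the table in a stable order so two copies can be compared."""
    return conn.execute("SELECT id, body FROM notes ORDER BY id").fetchall()

# Verify the copy is structurally sound and matches the source.
integrity = backup.execute("PRAGMA integrity_check").fetchone()[0]
restore_ok = integrity == "ok" and snapshot(backup) == snapshot(source)
```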

Understanding Indexing and Its Benefits

Efficient data retrieval is crucial for optimizing the performance of information management systems, especially when dealing with large volumes of data. Indexing is a technique used to speed up the process of locating specific information without having to search through every record. By creating a structured map to access data, indexing can significantly reduce search times and improve overall system efficiency.

At its core, indexing works by organizing data in a way that allows for faster lookups, much like an index in a book, which helps you quickly find the page containing a particular topic. This can be applied to various types of systems to enhance performance, particularly for read-heavy applications that require quick access to specific records.

- Improved Query Speed: By reducing the time it takes to locate relevant data, indexing enhances query performance, making it faster to retrieve information even in large datasets.

- Reduced I/O: When an index contains every column a query needs (a covering index), the system can answer the query from the index alone without reading the underlying records. Note that indexes themselves consume additional storage.

- Enhanced Search Capabilities: Complex searches involving multiple fields or conditions can benefit from indexing, providing more relevant results in less time.

However, it’s important to note that while indexing offers significant performance gains, it also introduces some trade-offs. The process of creating and maintaining indexes requires resources, and excessive or poorly designed indexing can result in overhead that diminishes system performance. Therefore, careful planning and understanding of which data fields to index are critical for maximizing the benefits.
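Both the benefit and the maintenance cost of an index can be seen in a toy version. This sketch keeps a sorted list of keys next to an in-memory record list and searches it with the standard `bisect` module; the records and helper functions are hypothetical, and real systems typically use B-trees or similar structures.

```python
import bisect

records = [
    {"id": 3, "email": "carol@example.com"},
    {"id": 1, "email": "alice@example.com"},
    {"id": 2, "email": "bob@example.com"},
]

# The "index": sorted (key, position) pairs kept alongside the data.
email_index = sorted((rec["email"], pos) for pos, rec in enumerate(records))

def find_by_scan(email):
    """Without an index: check every record until one matches."""
    for rec in records:
        if rec["email"] == email:
            return rec
    return None

def find_by_index(email):
    """With an index: binary search, then jump straight to the record."""
    i = bisect.bisect_left(email_index, (email, -1))
    if i < len(email_index) and email_index[i][0] == email:
        return records[email_index[i][1]]
    return None

def insert(record):
    """Every write must also update the index, which is the overhead."""
    records.append(record)
    bisect.insort(email_index, (record["email"], len(records) - 1))
```

Lookups through the index avoid scanning every record, but each `insert` now does extra work to keep the index sorted.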

Managing Transactions in Information Systems

In complex systems where data is constantly being read, inserted, or modified, maintaining consistency and reliability is essential. Transactions are a fundamental concept in ensuring that operations are executed correctly and that the system remains in a stable state even in the event of errors or interruptions. By grouping related operations into a single transaction, systems can guarantee that either all changes are applied, or none are, thus preserving integrity.

Transactions are typically governed by four key principles, commonly known as ACID properties: Atomicity, Consistency, Isolation, and Durability. These principles provide a framework for ensuring that operations are reliable and recoverable, helping to prevent data corruption and loss.

Atomicity: All or Nothing

Atomicity ensures that a transaction is treated as a single, indivisible unit. This means that either all the changes within a transaction are applied, or none are, even if a failure occurs midway through the process. It guarantees that the system will never be left in an incomplete or inconsistent state.
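Atomicity can be demonstrated with a transfer between two accounts. The sketch below uses Python's `sqlite3` module, where a connection used as a context manager commits on success and rolls back if an exception escapes; the `accounts` table and the `transfer` helper are hypothetical.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE accounts (name TEXT PRIMARY KEY, balance INTEGER CHECK (balance >= 0))"
)
conn.executemany("INSERT INTO accounts VALUES (?, ?)", [("alice", 100), ("bob", 50)])
conn.commit()

def transfer(conn, src, dst, amount):
    """Credit and debit as one unit: both updates apply, or neither does."""
    try:
        with conn:  # commits on success, rolls back if an exception escapes
            conn.execute(
                "UPDATE accounts SET balance = balance + ? WHERE name = ?", (amount, dst)
            )
            conn.execute(
                "UPDATE accounts SET balance = balance - ? WHERE name = ?", (amount, src)
            )
        return True
    except sqlite3.IntegrityError:
        return False

ok = transfer(conn, "alice", "bob", 30)       # both updates applied
failed = transfer(conn, "alice", "bob", 500)  # debit violates CHECK, credit is undone
balances = dict(conn.execute("SELECT name, balance FROM accounts"))
```

After the failed transfer, the credit to the destination account is rolled back, so the balances reflect only the first, successful transfer.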

Consistency, Isolation, and Durability

Consistency ensures that a transaction takes the system from one valid state to another, maintaining integrity rules throughout. Isolation makes sure that transactions do not interfere with each other, preventing concurrent operations from causing data anomalies. Finally, durability guarantees that once a transaction is committed, the changes are permanent, even in the event of a power failure or crash.

Effective transaction management helps systems maintain smooth operation by preventing inconsistencies and ensuring that changes are safely applied. Whether in online transactions, financial systems, or large-scale applications, proper handling of these operations is vital to achieving reliable performance and user trust.

How to Migrate Data Between Systems

Transferring information from one platform to another can be a complex process, requiring careful planning to ensure that the data remains intact and accessible. Whether upgrading to a new system or consolidating multiple sources, migration is a critical task that requires attention to detail and thorough testing. The goal is to ensure that all relevant data is successfully moved without loss, corruption, or downtime.

The process typically involves several steps, including data extraction, transformation, validation, and loading into the new system. Each of these stages must be carefully managed to avoid inconsistencies and errors. Proper tools and strategies are essential for ensuring the integrity and speed of the transfer.

- Pre-Migration Planning: Begin by assessing the source system and identifying the data to be transferred. Understand the structure and format of the data to ensure compatibility with the target system.

- Data Cleaning: Before migration, clean the data by removing duplicates, correcting errors, and ensuring that it conforms to the target system’s format. This reduces the risk of complications during the transfer.

- Data Mapping: Map the data fields from the source system to the corresponding fields in the destination system. This ensures that data is correctly aligned and transformed during the transfer process.

- Testing and Validation: Run test migrations to validate that the data has been correctly transferred and that all processes are functioning as expected. This step helps identify any issues before the final migration.

- Final Migration: Once everything has been tested and validated, perform the actual migration. Ensure that backups are made before proceeding, so that any unforeseen issues can be addressed quickly.

- Post-Migration Monitoring: After the migration, monitor the system for any discrepancies or issues that may arise. This includes checking for data integrity, performance, and ensuring that users can access the information they need.
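The cleaning, mapping, and validation steps above can be sketched as small functions. Everything here is illustrative: the field names in `FIELD_MAP`, the sample rows, and the validation rule are assumptions, and a real migration would read from and write to the actual systems.

```python
# Hypothetical mapping of source field names to target field names.
FIELD_MAP = {"full_name": "name", "mail": "email"}

def clean(rows):
    """Normalize whitespace and case, and drop rows with duplicate emails."""
    seen, result = set(), []
    for row in rows:
        email = row["mail"].strip().lower()
        if email and email not in seen:
            seen.add(email)
            result.append({**row, "mail": email, "full_name": row["full_name"].strip()})
    return result

def transform(row):
    """Rename fields according to the mapping."""
    return {target: row[source] for source, target in FIELD_MAP.items()}

def validate(source_rows, target_rows):
    """Check that no rows were lost and every email looks plausible."""
    return len(source_rows) == len(target_rows) and all(
        "@" in row["email"] for row in target_rows
    )

source = [
    {"full_name": " Ada Lovelace ", "mail": "ADA@example.com"},
    {"full_name": "Ada Lovelace", "mail": "ada@example.com "},
    {"full_name": "Alan Turing", "mail": "alan@example.com"},
]
cleaned = clean(source)
target = [transform(row) for row in cleaned]
migration_ok = validate(cleaned, target)
```

Running the same functions against a test copy first mirrors the test-migration step before the final cutover.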

By following a structured approach, organizations can ensure a smooth transition when moving data between systems. The success of this process depends on proper planning, careful execution, and thorough testing at every stage.

Exploring Cloud-Based Solutions

With the rise of cloud technology, businesses now have the opportunity to move their storage and management needs to off-site platforms, offering a variety of benefits. Cloud-based systems provide a scalable, flexible, and cost-effective approach for organizations looking to manage their information. These solutions allow for easier access, better reliability, and reduced infrastructure costs, while also simplifying system maintenance and updates.

When considering a cloud-based platform, it’s essential to understand the different models available. Each model comes with its own set of advantages depending on the specific needs of the organization. Whether you’re looking for a fully managed solution or something more customizable, there are options to suit a wide range of business requirements.

Types of Cloud-Based Systems

| Model | Description | Pros |
|---|---|---|
| Public Cloud | Third-party providers offer shared infrastructure to customers over the internet. | Cost-effective, easy scalability, minimal maintenance |
| Private Cloud | Dedicated infrastructure for a single organization, either on-premises or hosted externally. | Enhanced security, full control, customizable |
| Hybrid Cloud | Combination of both public and private clouds, offering flexibility to move workloads between environments. | Flexibility, optimized resources, secure handling of sensitive data |

Benefits of Cloud-Based Solutions

One of the major advantages of utilizing cloud-based platforms is the scalability they offer. Businesses can easily scale up or down based on demand without worrying about physical hardware limitations. Furthermore, cloud solutions often come with built-in security features, backup options, and disaster recovery plans, ensuring data safety and continuity.

Cost-efficiency is another key factor. Cloud services reduce the need to invest in expensive infrastructure up front or to staff for hardware maintenance and upgrades. Instead, organizations pay for what they use, which is often more affordable, although costs for large, steady workloads should be compared against on-premises options.

As companies continue to shift toward more digital operations, exploring cloud options is a logical step to ensure greater efficiency, flexibility, and growth potential. Understanding the different models and their benefits will help businesses make informed decisions when selecting the right cloud-based system for their needs.

Decoding Normalization Techniques

When managing large sets of information, it’s essential to organize the data in a way that reduces redundancy and improves efficiency. The process of structuring the data to eliminate unnecessary duplication while maintaining the relationships between different elements is critical for optimal performance. By applying certain techniques, systems can handle larger amounts of information with greater speed and accuracy.

Normalization is a multi-step approach aimed at minimizing anomalies that may arise during data insertion, deletion, or modification. By adhering to a series of formal rules, information can be divided into logical segments that maintain integrity and reduce storage costs.

Stages of Normalization

| Normal Form | Description | Goal |
|---|---|---|
| First Normal Form (1NF) | Ensures all fields contain atomic values, meaning no repeating groups or arrays. | Eliminate repeating groups, ensure atomicity of data |
| Second Normal Form (2NF) | Satisfies 1NF and removes partial dependencies, meaning every non-key attribute depends on the entire primary key rather than on part of it. | Remove partial dependencies |
| Third Normal Form (3NF) | Satisfies 2NF and eliminates transitive dependencies, meaning non-key attributes depend directly on the key and not on other non-key attributes. | Remove transitive dependencies |
| Boyce-Codd Normal Form (BCNF) | Tightens 3NF by requiring that the determinant of every non-trivial functional dependency be a superkey. | Remove remaining dependency-based anomalies |

Benefits of Normalization

Applying normalization techniques helps to maintain the integrity of information, ensuring that updates, deletions, and insertions are processed without causing inconsistency. It also leads to a reduction in redundant data, optimizing storage space and minimizing potential errors.
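A small example may help show how normalization prevents update anomalies. The sketch below uses Python's `sqlite3` module to split a flat table, in which a customer's city is repeated on every order, into two tables; all table names and data are hypothetical.

```python
import sqlite3

conn = sqlite3.connect(":memory:")

# Denormalized: the customer's city is repeated on every order row.
conn.execute(
    "CREATE TABLE orders_flat (order_id INTEGER PRIMARY KEY, customer TEXT, city TEXT, item TEXT)"
)
conn.executemany(
    "INSERT INTO orders_flat VALUES (?, ?, ?, ?)",
    [(1, "Ada", "London", "book"), (2, "Ada", "London", "pen"), (3, "Alan", "Manchester", "ink")],
)

# Normalized: city depends on the customer, not the order, so it moves out.
conn.execute("CREATE TABLE customers (name TEXT PRIMARY KEY, city TEXT)")
conn.execute(
    "CREATE TABLE orders (order_id INTEGER PRIMARY KEY, "
    "customer TEXT REFERENCES customers(name), item TEXT)"
)
conn.execute("INSERT INTO customers SELECT DISTINCT customer, city FROM orders_flat")
conn.execute("INSERT INTO orders SELECT order_id, customer, item FROM orders_flat")

# Changing a customer's city is now a single-row update.
cur = conn.execute("UPDATE customers SET city = 'Paris' WHERE name = 'Ada'")
rows_touched = cur.rowcount
joined = conn.execute(
    "SELECT o.order_id, c.city FROM orders o "
    "JOIN customers c ON c.name = o.customer ORDER BY o.order_id"
).fetchall()
```

In the flat table the same change would touch one row per order, and missing any of them would leave the data inconsistent. The cost of the normalized design is the join needed to read orders together with cities.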

While normalization brings several advantages, it’s essential to strike a balance. Over-normalization can result in excessive complexity, which may harm system performance. Therefore, understanding when to stop normalizing is just as crucial as applying the right techniques to ensure both efficiency and clarity.

Essential Tools for Data Management

Efficiently organizing, maintaining, and querying vast amounts of information requires a set of specialized tools. These tools facilitate everything from data storage to complex queries, providing users with the necessary functionality to ensure consistency, reliability, and performance. Whether for simple operations or complex integrations, having the right tools can significantly streamline workflows and improve productivity.

Managing data involves various tasks such as designing structures, monitoring performance, securing sensitive information, and ensuring scalability. The right set of instruments helps simplify these processes, allowing for effective handling of everything from everyday tasks to advanced operations.

Popular Management Tools

- MySQL Workbench – A unified visual tool for database design, query execution, and server administration. It provides an easy interface for users to model and manage their systems.

- pgAdmin – A comprehensive management tool for PostgreSQL, providing users with a powerful yet intuitive platform to manage complex data operations and perform queries.

- MongoDB Compass – A GUI for MongoDB that helps visualize data, build queries, and optimize performance. It’s especially useful for NoSQL environments.

- SQL Server Management Studio (SSMS) – A popular tool for managing SQL Server environments, allowing users to configure, monitor, and administer their systems efficiently.

- Oracle SQL Developer – A free integrated development environment (IDE) designed to help users manage Oracle environments, write SQL queries, and manage data structures.

Key Features to Look For

- Query Optimization – Tools should help improve query execution by providing insights into performance bottlenecks and suggesting optimizations.

- Backup and Recovery – Reliable tools should include automated backup options, ensuring data integrity and facilitating quick recovery during failure scenarios.

- Security Features – Tools with built-in encryption, access control, and audit tracking are essential to safeguard sensitive information.

- Scalability Support – As systems grow, having tools that can efficiently scale resources and handle increased data volumes is crucial for future-proofing.

Utilizing the right combination of these tools ensures streamlined operations, better decision-making, and a solid foundation for expanding data-driven systems.

Future Trends in Data Technology

As the demand for faster, more reliable systems increases, the evolution of data management continues to shape the way businesses and individuals store, retrieve, and manipulate vast amounts of information. The future holds several exciting trends that promise to enhance performance, scalability, and security, while addressing emerging challenges posed by new technologies.

In this context, several innovations are expected to redefine how we manage digital assets, leveraging advancements in artificial intelligence, automation, and cloud computing. These developments will not only streamline current practices but also introduce new paradigms for handling large-scale data operations.

Key Trends to Watch

- AI-Driven Insights – Artificial intelligence is rapidly transforming the field by offering automated decision-making capabilities and predictive analytics. AI can assist in optimizing queries, identifying patterns, and suggesting improvements to enhance overall efficiency.

- Serverless Architectures – As cloud technologies continue to evolve, serverless computing is becoming increasingly popular. This model allows developers to focus on writing code without worrying about managing underlying hardware, offering greater flexibility and cost efficiency.

- Distributed Systems – With the rise of big data, distributed architectures are becoming more common. These systems enable seamless scaling by dividing data across multiple servers, reducing latency, and ensuring high availability.

- Blockchain Integration – The decentralized nature of blockchain is influencing data security and transparency. It holds potential for providing tamper-evident storage and verifiable data integrity, particularly in sectors requiring high levels of trust.

- Quantum Computing – While still in its early stages, quantum computing could change how certain classes of problems are solved. For specific problems, known quantum algorithms offer large speedups over classical ones, although the practical impact on data processing remains uncertain.

Challenges and Opportunities

- Data Privacy – As data volume grows, ensuring privacy remains a critical issue. New technologies must adapt to meet evolving regulatory standards while maintaining secure access to sensitive information.

- Real-Time Processing – With the advent of IoT devices and smart systems, real-time data processing will become a key requirement. Innovations in processing frameworks will enable faster data handling and more immediate responses to user demands.

- Multi-Model Systems – The future will likely see more hybrid systems capable of handling different data types, from relational to unstructured. These models allow for better flexibility and efficiency, catering to various use cases and industries.

As these trends gain traction, businesses and tech professionals must stay ahead of the curve to capitalize on the evolving landscape of data management. By embracing these advancements, organizations can unlock new opportunities, optimize operations, and prepare for the demands of tomorrow.